A Simple Search Engine in Python Based on Chinese Word Segmentation, Using Whoosh

阿新 • Published: 2018-11-15

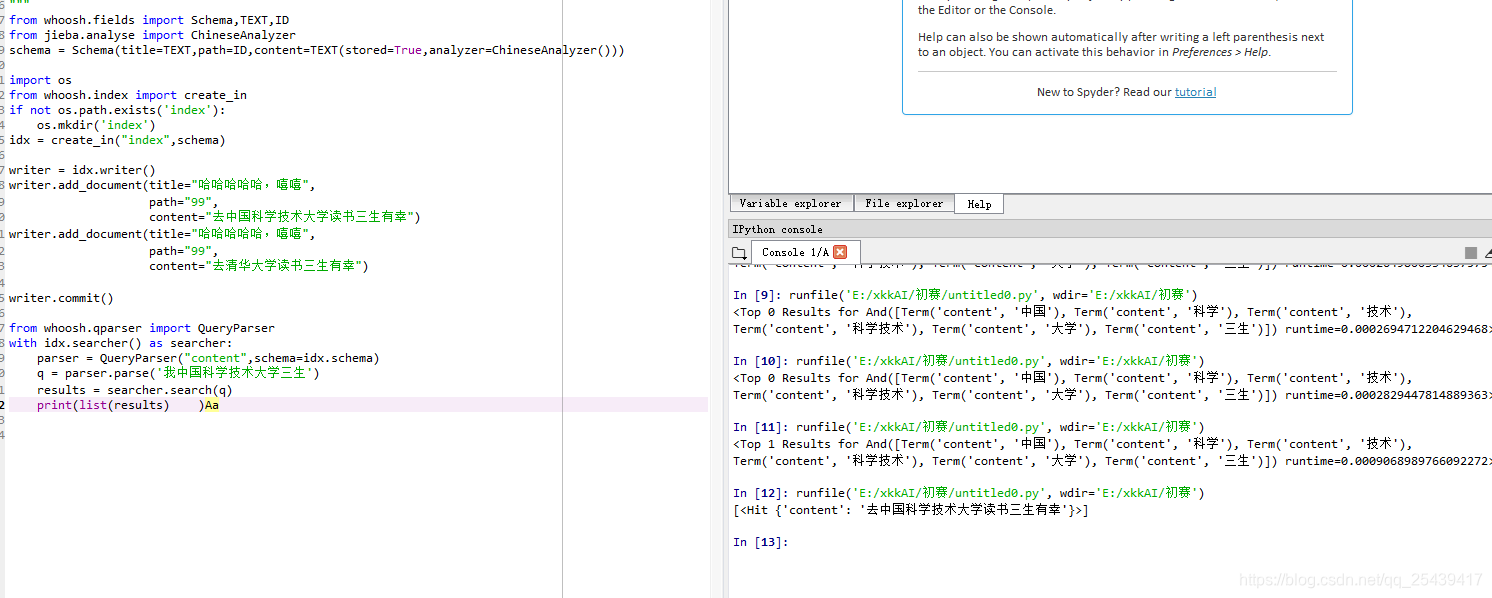

```python
# -*- coding: utf-8 -*-
"""
Created on Tue Nov 13 22:53:33 2018

@author: Lenovo
"""
import os

from whoosh.fields import Schema, TEXT, ID
from whoosh.index import create_in
from whoosh.qparser import QueryParser
from jieba.analyse import ChineseAnalyzer

# The `content` field is stored and segmented with jieba's ChineseAnalyzer.
schema = Schema(title=TEXT, path=ID,
                content=TEXT(stored=True, analyzer=ChineseAnalyzer()))

# Create the index directory on the first run.
if not os.path.exists('index'):
    os.mkdir('index')

idx = create_in("index", schema)

# Add one sample document and commit it to the index.
writer = idx.writer()
writer.add_document(title="哈哈哈哈哈,嘻嘻", path="99",
                    content="少時誦詩書大撒所三生三世十里桃花")
writer.commit()

# Parse a query against the `content` field and run the search.
with idx.searcher() as searcher:
    parser = QueryParser("content", schema=idx.schema)
    q = parser.parse('我是來自中國科學技術大學')
    results = searcher.search(q)
    print(results)
```

Next, let's look at the source code of ChineseAnalyzer:

```python
# encoding=utf-8
from __future__ import unicode_literals
from whoosh.analysis import RegexAnalyzer, LowercaseFilter, StopFilter, StemFilter
from whoosh.analysis import Tokenizer, Token
from whoosh.lang.porter import stem

import jieba
import re

STOP_WORDS = frozenset(('a', 'an', 'and', 'are', 'as', 'at', 'be', 'by', 'can',
                        'for', 'from', 'have', 'if', 'in', 'is', 'it', 'may',
                        'not', 'of', 'on', 'or', 'tbd', 'that', 'the', 'this',
                        'to', 'us', 'we', 'when', 'will', 'with', 'yet',
                        'you', 'your', '的', '了', '和', '我'))

accepted_chars = re.compile(r"[\u4E00-\u9FD5]+")


class ChineseTokenizer(Tokenizer):

    def __call__(self, text, **kargs):
        words = jieba.tokenize(text, mode="search")
        token = Token()
        for (w, start_pos, stop_pos) in words:
            if not accepted_chars.match(w) and len(w) <= 1:
                continue
            token.original = token.text = w
            # print(token)
            token.pos = start_pos
            token.startchar = start_pos
            token.endchar = stop_pos
            yield token


def ChineseAnalyzer(stoplist=STOP_WORDS, minsize=1, stemfn=stem, cachesize=50000):
    return (ChineseTokenizer() | LowercaseFilter() |
            StopFilter(stoplist=stoplist, minsize=minsize) |
            StemFilter(stemfn=stemfn, ignore=None, cachesize=cachesize))
```
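The skip condition inside `ChineseTokenizer.__call__` is easy to misread, so here is a small standalone sketch of it. It reuses the `accepted_chars` pattern from the source; the helper name `is_kept` and the sample strings are made up for illustration. A token is dropped only when it does not start with a CJK character and is at most one character long, which in practice removes lone punctuation marks, spaces, and single letters or digits.

```python
import re

# Same pattern as in the analyzer source: one or more CJK unified ideographs.
accepted_chars = re.compile(r"[\u4E00-\u9FD5]+")


def is_kept(word):
    """Mirror the tokenizer's skip test (hypothetical helper, not part of jieba).

    A token is skipped only when it does NOT start with a CJK character
    AND is at most one character long.
    """
    skipped = (not accepted_chars.match(word)) and len(word) <= 1
    return not skipped


# Hand-picked samples: CJK words, punctuation, single and multi-character ASCII.
samples = ["中", "大學", ",", "a", "ab", " ", "7", "2018"]
for w in samples:
    print(repr(w), "kept" if is_kept(w) else "dropped")
```

Multi-character non-Chinese tokens such as `ab` or `2018` pass this test and are handled by the later filters in the pipeline.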

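We can see that custom stop words can be added through STOP_WORDS, but there does not seem to be any way to specify a dictionary. Let's modify the source code to add that.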
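As a rough illustration of the stop-word part, the sketch below merges extra words into a stop list and shows the effect on a list of tokens. It is plain Python and does not import jieba or Whoosh: `DEFAULT_STOP_WORDS` is a shortened stand-in for `STOP_WORDS`, the extra words and the token list are invented, and `drop_stop_words` is a simplified stand-in for the stop-filter step rather than Whoosh's actual implementation.

```python
# Shortened stand-in for the STOP_WORDS frozenset in the analyzer source.
DEFAULT_STOP_WORDS = frozenset(("the", "of", "的", "了", "和", "我"))

# Hypothetical extra stop words chosen only for this illustration.
EXTRA_STOP_WORDS = frozenset(("是", "來自"))

# frozenset union returns a new frozenset; the default list is left untouched.
custom_stop_words = DEFAULT_STOP_WORDS | EXTRA_STOP_WORDS


def drop_stop_words(tokens, stoplist, minsize=1):
    """Simplified stand-in for a stop filter.

    Drops tokens that are in the stop list or shorter than `minsize`.
    """
    return [t for t in tokens if len(t) >= minsize and t not in stoplist]


# Hand-written, already-segmented tokens (not produced by jieba here).
tokens = ["我", "是", "來自", "中國", "的", "大學"]

print(drop_stop_words(tokens, DEFAULT_STOP_WORDS))  # ['是', '來自', '中國', '大學']
print(drop_stop_words(tokens, custom_stop_words))   # ['中國', '大學']
```

With jieba and Whoosh installed, the merged set would be passed as `ChineseAnalyzer(stoplist=custom_stop_words)`, since `stoplist` is a parameter of `ChineseAnalyzer` in the source above.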

Tracing through the code layer by layer, we find that jieba's Tokenizer accepts a dictionary when it is initialized:

```python
# encoding=utf-8
from __future__ import unicode_literals
from whoosh.analysis import RegexAnalyzer, LowercaseFilter, StopFilter, StemFilter
from whoosh.analysis import Tokenizer, Token
from whoosh.lang.porter import stem

import jieba
import re

# Module-level tokenizer. Pass a dictionary file path here to use your own
# dictionary; None falls back to jieba's default dictionary.
tk = jieba.Tokenizer(dictionary=None)

STOP_WORDS = frozenset(('a', 'an', 'and', 'are', 'as', 'at', 'be', 'by', 'can',
                        'for', 'from', 'have', 'if', 'in', 'is', 'it', 'may',
                        'not', 'of', 'on', 'or', 'tbd', 'that', 'the', 'this',
                        'to', 'us', 'we', 'when', 'will', 'with', 'yet',
                        'you', 'your', '的', '了', '和', '我'))

accepted_chars = re.compile(r"[\u4E00-\u9FD5]+")


class ChineseTokenizer(Tokenizer):

    def __call__(self, text, **kargs):
        # Use the module-level tokenizer instead of jieba's global one.
        words = tk.tokenize(text, mode="search")
        token = Token()
        for (w, start_pos, stop_pos) in words:
            if not accepted_chars.match(w) and len(w) <= 1:
                continue
            token.original = token.text = w
            # print(token)
            token.pos = start_pos
            token.startchar = start_pos
            token.endchar = stop_pos
            yield token


def ChineseAnalyzer(stoplist=STOP_WORDS, minsize=1, stemfn=stem, cachesize=50000):
    return (ChineseTokenizer() | LowercaseFilter() |
            StopFilter(stoplist=stoplist, minsize=minsize) |
            StemFilter(stemfn=stemfn, ignore=None, cachesize=cachesize))
```
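For reference, a jieba dictionary file is plain UTF-8 text with one entry per line: the word, a frequency, and an optional part-of-speech tag, separated by spaces. The sketch below writes such a file and reads it back using only the standard library. The file name `my_dict.txt`, the entries, their frequencies, and both helper functions are invented for illustration.

```python
import os
import tempfile

# Hypothetical entries: (word, frequency, part-of-speech tag). Values are made up.
entries = [
    ("中國科學技術大學", 100, "nt"),
    ("三生三世十里桃花", 50, "nz"),
]


def write_dictionary(path, rows):
    """Write one 'word freq tag' entry per line, separated by single spaces."""
    with open(path, "w", encoding="utf-8") as f:
        for word, freq, tag in rows:
            f.write("{} {} {}\n".format(word, freq, tag))


def read_dictionary(path):
    """Read the file back into a {word: frequency} mapping as a format check."""
    result = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            parts = line.strip().split(" ")
            if len(parts) >= 2:
                result[parts[0]] = int(parts[1])
    return result


# Write to a temporary directory so the example leaves the working tree alone.
dict_path = os.path.join(tempfile.mkdtemp(), "my_dict.txt")
write_dictionary(dict_path, entries)
print(read_dictionary(dict_path))

# With jieba installed, the path would be used as:
#     tk = jieba.Tokenizer(dictionary=dict_path)
```

As far as I understand jieba's behavior, a path passed this way replaces the default main dictionary rather than extending it, so a real file should be reasonably complete. To add only a handful of words on top of the default dictionary, the tokenizer's `load_userdict` method is probably the better fit.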

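The Tokenizer is initialized as a module-level global so that it stays resident in memory, which avoids the cost of reloading the dictionary on every call.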