Machine Learning in Action: Using a Decision Tree to Choose Your Own Contact Lenses

阿新 · Published: 2018-11-27


The project's file list is shown in the figure below:

1. Building the Decision Tree

Create a file named trees.py and enter the following code.

'''
Created on Oct 12, 2010
Decision Tree Source Code for Machine Learning in Action Ch. 3
@author: Peter Harrington
'''
from math import log
import operator

def createDataSet():
    dataSet = [[1, 1, 'yes'],
               [

2. Visualizing the Decision Tree

Create a file named treePlotter.py and enter the following code.

'''
Created on Oct 14, 2010
@author: Peter Harrington
'''
import matplotlib.pyplot as plt
import trees

# Box style for decision nodes, box style for leaf nodes, and arrow style for edges
decisionNode = dict(boxstyle="sawtooth", fc="0.8")
leafNode = dict(boxstyle="round4", fc="0.8")
arrow_args = dict(arrowstyle="<-")

def getNumLeafs(myTree):
    """Count the leaf nodes; this determines the x width of the plot."""
    numLeafs = 0
    # Python 2 original: firstStr = myTree.keys()[0]
    # In Python 3, keys() returns a view, so convert it to a list first
    firstStr = list(myTree.keys())[0]
    secondDict = myTree[firstStr]
    for key in secondDict.keys():
        # A dict value is a subtree; anything else is a leaf node
        if isinstance(secondDict[key], dict):
            numLeafs += getNumLeafs(secondDict[key])
        else:
            numLeafs += 1
    return numLeafs

def getTreeDepth(myTree):
    """Return the number of decision levels; this determines the y height of the plot."""
    maxDepth = 0
    # Python 2 original: firstStr = myTree.keys()[0]
    firstStr = list(myTree.keys())[0]
    secondDict = myTree[firstStr]
    for key in secondDict.keys():
        # A dict value is a subtree; anything else is a leaf node
        if isinstance(secondDict[key], dict):
            thisDepth = 1 + getTreeDepth(secondDict[key])
        else:
            thisDepth = 1
        if thisDepth > maxDepth:
            maxDepth = thisDepth
    return maxDepth

def plotNode(nodeTxt, centerPt, parentPt, nodeType):
    # Draw a boxed node at centerPt, connected by an arrow to parentPt
    createPlot.ax1.annotate(nodeTxt, xy=parentPt, xycoords='axes fraction',
                            xytext=centerPt, textcoords='axes fraction',
                            va="center", ha="center", bbox=nodeType, arrowprops=arrow_args)

def plotMidText(cntrPt, parentPt, txtString):
    # Write the branch label (the feature value) halfway between child and parent
    xMid = (parentPt[0] - cntrPt[0]) / 2.0 + cntrPt[0]
    yMid = (parentPt[1] - cntrPt[1]) / 2.0 + cntrPt[1]
    createPlot.ax1.text(xMid, yMid, txtString, va="center", ha="center", rotation=30)

def plotTree(myTree, parentPt, nodeTxt):
    # The first (and only) key tells you which feature this node splits on;
    # each value under it is either another dict (a subtree) or a leaf label
    numLeafs = getNumLeafs(myTree)  # determines the x width of this subtree
    # Python 2 original: firstStr = myTree.keys()[0]
    firstStr = list(myTree.keys())[0]  # the text label for this node
    # Center this node horizontally above its own leaves
    cntrPt = (plotTree.xOff + (1.0 + float(numLeafs)) / 2.0 / plotTree.totalW, plotTree.yOff)
    plotMidText(cntrPt, parentPt, nodeTxt)
    plotNode(firstStr, cntrPt, parentPt, decisionNode)
    secondDict = myTree[firstStr]
    # Move down one level before drawing the children
    plotTree.yOff = plotTree.yOff - 1.0 / plotTree.totalD
    for key in secondDict.keys():
        if isinstance(secondDict[key], dict):
            # A subtree: recurse
            plotTree(secondDict[key], cntrPt, str(key))
        else:
            # A leaf node: advance one slot to the right and draw it
            plotTree.xOff = plotTree.xOff + 1.0 / plotTree.totalW
            plotNode(secondDict[key], (plotTree.xOff, plotTree.yOff), cntrPt, leafNode)
            plotMidText((plotTree.xOff, plotTree.yOff), cntrPt, str(key))
    # Move back up once all children are drawn
    plotTree.yOff = plotTree.yOff + 1.0 / plotTree.totalD

def createPlot(inTree):
    fig = plt.figure(1, facecolor='white')
    fig.clf()
    axprops = dict(xticks=[], yticks=[])
    createPlot.ax1 = plt.subplot(111, frameon=False, **axprops)  # no ticks
    # createPlot.ax1 = plt.subplot(111, frameon=False)  # ticks for demo purposes
    # Layout state is stored as attributes on plotTree: total width/depth and current offsets
    plotTree.totalW = float(getNumLeafs(inTree))
    plotTree.totalD = float(getTreeDepth(inTree))
    plotTree.xOff = -0.5 / plotTree.totalW
    plotTree.yOff = 1.0
    plotTree(inTree, (0.5, 1.0), '')
    plt.show()

# Demo version of createPlot that draws two sample nodes:
# def createPlot():
#     fig = plt.figure(1, facecolor='white')
#     fig.clf()
#     createPlot.ax1 = plt.subplot(111, frameon=False)  # ticks for demo purposes
#     plotNode('a decision node', (0.5, 0.1), (0.1, 0.5), decisionNode)
#     plotNode('a leaf node', (0.8, 0.1), (0.3, 0.8), leafNode)
#     plt.show()

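def retrieveTree(i):
    # Two hard-coded sample trees for testing the plotting code
    listOfTrees = [{'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}},
                   {'no surfacing': {0: 'no', 1: {'flippers': {0: {'head': {0: 'no', 1: 'yes'}}, 1: 'no'}}}}
                   ]
    return listOfTrees[i]

if __name__ == '__main__':
    # Draw the sample tree only when this file is run directly,
    # not when test.py imports treePlotter
    createPlot(retrieveTree(1))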
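In createPlot, getNumLeafs sets the total width (totalW) and getTreeDepth the total depth (totalD). plotTree then gives each leaf the next 1/totalW slot, starting from xOff = -0.5/totalW, so the leaves land at the centers of equal-width columns. The minimal sketch below checks that arithmetic without opening a matplotlib window. The snake_case functions are hypothetical restatements of the treePlotter.py helpers, assuming the same nested-dict format in which every dict has exactly one key (the split feature).

```python
def num_leafs(tree):
    """Count leaves: every non-dict value in the nested dict is a leaf."""
    root = next(iter(tree))  # the single key is the feature this node splits on
    return sum(num_leafs(child) if isinstance(child, dict) else 1
               for child in tree[root].values())


def tree_depth(tree):
    """Number of decision levels on the longest root-to-leaf path."""
    root = next(iter(tree))
    return max(1 + tree_depth(child) if isinstance(child, dict) else 1
               for child in tree[root].values())


def leaf_x_positions(tree):
    """x coordinates that plotTree's logic assigns to each leaf, in drawing order."""
    total_w = float(num_leafs(tree))
    x_off = -0.5 / total_w  # same starting offset as createPlot
    xs = []

    def walk(node):
        nonlocal x_off
        root = next(iter(node))
        for child in node[root].values():
            if isinstance(child, dict):
                walk(child)
            else:
                x_off += 1.0 / total_w  # each leaf takes the next 1/W slot
                xs.append(x_off)

    walk(tree)
    return xs


tree0 = {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}
print(num_leafs(tree0), tree_depth(tree0))              # 3 2
print([round(x, 3) for x in leaf_x_positions(tree0)])   # [0.167, 0.5, 0.833]
```

With three leaves the columns are 1/3 wide, so the leaves sit at 1/6, 3/6 and 5/6, and the root (centered over all three) sits at 0.5, which is the parentPt that createPlot passes in.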

3. Saving the Decision Tree

Create a file named saveTree.py and enter the following code.

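# -*- coding:utf-8 -*-
# @Time : 2018/10/24 9:26
# @Author : Shenxue
# @FileName: saveTree.py
# @Software: PyCharm
import pickle

def storeTree(tree, filename):
    """
    Store the tree in the file `filename` (a .txt file here) in the current directory.

    Parameters:
        tree - the generated decision tree
        filename - the file in which to store the tree
    Returns:
        None
    """
    # pickle writes bytes, so the file must be opened in binary mode
    with open(filename, 'wb') as fw:
        pickle.dump(tree, fw)

def getTree(filename):
    """
    Read the decision tree from the file `filename` in the current directory.

    Parameters:
        filename - the file in which the tree is stored
    Returns:
        the decision tree read from the file
    """
    # The with block closes the file even though the original never called close()
    with open(filename, 'rb') as fr:
        return pickle.load(fr)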

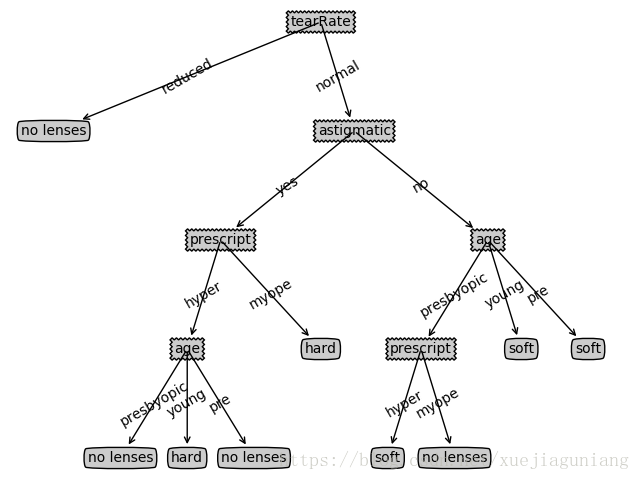
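storeTree and getTree are thin wrappers around pickle. Below is a minimal round-trip sketch; store_tree and get_tree are hypothetical stand-ins for the saveTree.py functions, and the file goes into a temporary directory rather than the current one. It also shows one reason pickle suits this tree better than JSON would: JSON object keys must be strings, so the integer branch values 0 and 1 do not survive a JSON round trip unchanged.

```python
import json
import os
import pickle
import tempfile


def store_tree(tree, filename):
    # Binary mode: pickle produces bytes, whatever the file extension says
    with open(filename, 'wb') as fw:
        pickle.dump(tree, fw)


def get_tree(filename):
    with open(filename, 'rb') as fr:
        return pickle.load(fr)


tree = {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'result.txt')
    store_tree(tree, path)
    loaded = get_tree(path)

print(loaded == tree)          # True: int keys and nesting are preserved

# JSON converts the int keys 0 and 1 into the strings '0' and '1'
json_copy = json.loads(json.dumps(tree))
print(json_copy == tree)       # False
```

The .txt extension in result.txt is only a name; the file holds binary pickle data, which is why both functions open it in 'wb'/'rb' mode.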

4. Testing the Decision Tree

Create a file named test.py and enter the following code to build the tree and draw it.

# -*- coding:utf-8 -*-
# @Time : 2018/10/24 9:40
# @Author : Shenxue
# @FileName: test.py
# @Software: PyCharm
import trees
import saveTree
import treePlotter

# Each line of lenses.txt is one tab-separated sample: four features followed by the lens type
with open('lenses.txt') as fr:
    lensesData = [data.strip().split('\t') for data in fr.readlines()]
lensesLabel = ['age', 'prescript', 'astigmatic', 'tearRate']
lensesTree = trees.createTree(lensesData, lensesLabel)
saveTree.storeTree(lensesTree, 'result.txt')
print(lensesTree)
# createPlot draws the figure and returns None, so there is nothing to print
treePlotter.createPlot(lensesTree)
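test.py builds the lens tree with trees.createTree. In the book, createTree follows ID3: it stops when all samples share one class (or takes a majority vote when no features remain), otherwise it splits on the feature with the largest information gain, i.e. the largest drop in the Shannon entropy H = -Σ p·log2(p) of the class labels, and recurses on each value of that feature. The following is a sketch of that idea, not the book's exact code: the snake_case names are hypothetical, it copies the label list instead of deleting from it, and it also falls back to a majority vote when no split lowers the entropy. The five-row toy data set is an assumed example using the two features from retrieveTree.

```python
from collections import Counter
from math import log2


def calc_shannon_ent(data_set):
    """Shannon entropy of the class labels (last column)."""
    counts = Counter(row[-1] for row in data_set)
    n = len(data_set)
    return -sum(c / n * log2(c / n) for c in counts.values())


def split_data_set(data_set, axis, value):
    """Rows whose feature `axis` equals `value`, with that column removed."""
    return [row[:axis] + row[axis + 1:] for row in data_set if row[axis] == value]


def choose_best_feature(data_set):
    """Index of the feature with the largest information gain, or -1 if none helps."""
    base_ent = calc_shannon_ent(data_set)
    best_gain, best_feat = 0.0, -1
    for i in range(len(data_set[0]) - 1):
        new_ent = 0.0
        for value in set(row[i] for row in data_set):
            subset = split_data_set(data_set, i, value)
            new_ent += len(subset) / len(data_set) * calc_shannon_ent(subset)
        if base_ent - new_ent > best_gain:
            best_gain, best_feat = base_ent - new_ent, i
    return best_feat


def majority(class_list):
    return Counter(class_list).most_common(1)[0][0]


def create_tree(data_set, labels):
    """Build a nested dict of the form {feature: {value: subtree_or_class}}."""
    class_list = [row[-1] for row in data_set]
    if class_list.count(class_list[0]) == len(class_list):
        return class_list[0]                 # pure node: leaf
    if len(data_set[0]) == 1:
        return majority(class_list)          # no features left
    best = choose_best_feature(data_set)
    if best == -1:
        return majority(class_list)          # no split lowers the entropy
    best_label = labels[best]
    sub_labels = labels[:best] + labels[best + 1:]  # copy; caller's list is untouched
    tree = {best_label: {}}
    for value in sorted(set(row[best] for row in data_set)):
        subset = split_data_set(data_set, best, value)
        tree[best_label][value] = create_tree(subset, sub_labels)
    return tree


data = [[1, 1, 'yes'], [1, 1, 'yes'], [1, 0, 'no'], [0, 1, 'no'], [0, 1, 'no']]
labels = ['no surfacing', 'flippers']
print(create_tree(data, labels))
# {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}
```

On this toy data the result matches retrieveTree(0) in treePlotter.py. For lenses.txt, the rows and lensesLabel would be passed exactly as test.py does; because this sketch copies the labels, lensesLabel stays intact afterwards.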

The resulting plot:
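Besides being plotted, the nested dict can recommend a lens type for a new sample: start at the root, look up the sample's value for the feature named there, follow that branch, and stop at the first non-dict value. This iterative classify is a hypothetical helper, not part of the files above. feat_labels must be the full, unmodified feature list (for the lenses data, the lensesLabel order); if your createTree deletes entries from the label list while recursing, keep a copy before calling it.

```python
def classify(tree, feat_labels, sample):
    """Walk from the root to a leaf and return the class label found there."""
    node = tree
    while isinstance(node, dict):
        feature = next(iter(node))                   # feature tested at this node
        value = sample[feat_labels.index(feature)]   # the sample's value for it
        node = node[feature][value]  # KeyError if this value never appeared in training
    return node


tree0 = {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}
labels = ['no surfacing', 'flippers']
print(classify(tree0, labels, [1, 1]))  # yes
print(classify(tree0, labels, [1, 0]))  # no
```

A lens sample would be a list of four strings in the order age, prescript, astigmatic, tearRate, matching the columns read from lenses.txt.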