Email Tokenization and Stop-Word Removal

阿新 • Published: 2018-11-29

!pip install nltk

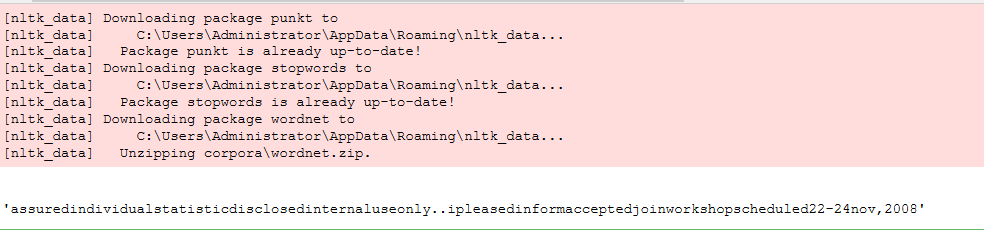

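    import nltk
    from nltk.corpus import stopwords
    from nltk.stem import WordNetLemmatizer

    # Download the tokenizer model, the stop-word list, and the WordNet data
    nltk.download('punkt')
    nltk.download('stopwords')
    nltk.download('wordnet')

    # Read the text (a sample email body is used here)
    text = 'Be assured that individual statistics are not disclosed and this is for internal use only..I am pleased to inform you that you have been accepted to join the workshop scheduled for 22-24 Nov,2008.'

    # Preprocessing
    def preprocessing(text):
        # text = text.decode("utf-8")  # only needed for Python 2 byte strings
        # Split into sentences, then into word tokens
        tokens = [word for sent in nltk.sent_tokenize(text) for word in nltk.word_tokenize(sent)]
        # Lowercase first: the NLTK stop-word list is all lowercase
        tokens = [token.lower() for token in tokens]
        stops = set(stopwords.words('english'))
        tokens = [token for token in tokens if token not in stops]
        # Drop tokens shorter than three characters (mostly punctuation and fragments)
        tokens = [token for token in tokens if len(token) >= 3]
        # Reduce each token to its WordNet lemma
        lmtzr = WordNetLemmatizer()
        tokens = [lmtzr.lemmatize(token) for token in tokens]
        # Join with spaces so the tokens remain separate words
        preprocessed_text = ' '.join(tokens)
        return preprocessed_text

    preprocessing(text)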

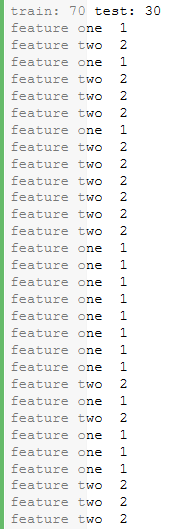
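The order of the filtering steps matters: NLTK's English stop-word list is all lowercase, so a capitalized token such as `This` only matches after it has been lowercased. The sketch below illustrates the difference in plain Python. The small `STOPS` set, the sample sentence, and the whitespace split are stand-ins chosen for illustration, not NLTK's actual list or tokenizer.

```python
# Hypothetical miniature stop-word set, standing in for stopwords.words('english').
STOPS = {"this", "is", "for", "only"}

def filter_then_lower(tokens):
    # Filters on the original casing, so capitalized stop words slip through.
    return [t.lower() for t in tokens if t not in STOPS]

def lower_then_filter(tokens):
    # Lowercases first, so stop words are caught regardless of casing.
    lowered = [t.lower() for t in tokens]
    return [t for t in lowered if t not in STOPS]

tokens = "This workshop is for internal use only".split()
print(filter_then_lower(tokens))  # ['this', 'workshop', 'internal', 'use']
print(lower_then_filter(tokens))  # ['workshop', 'internal', 'use']
```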

    # Split the dataset
    from sklearn.model_selection import train_test_split

    # Generate 100 samples: 100 two-dimensional feature vectors with 100 matching labels
    x = [["feature ", "one "]] * 50 + [["feature ", "two "]] * 50
    y = [1] * 50 + [2] * 50

    # Randomly hold out 30% of the data as the test set
    x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=0)
    print("train:", len(x_train), "test:", len(x_test))

    # Inspect the samples that ended up in the test set
    for i in range(len(x_test)):
        print("".join(x_test[i]), y_test[i])
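A purely random 30% draw does not guarantee that the two labels appear in the test set in the same 50/50 proportion as in the full data. As a rough sketch of what a stratified split does, the plain-Python helper below (the name `stratified_split` is hypothetical, and it will not reproduce scikit-learn's exact shuffling) shuffles the indices of each label separately and takes the same fraction from each group. In scikit-learn itself, the usual way to get this behavior is to pass `stratify=y` to `train_test_split`.

```python
import random
from collections import defaultdict

def stratified_split(x, y, test_size=0.3, seed=0):
    """Split x and y so each label keeps the same share in train and test."""
    rng = random.Random(seed)

    # Group sample indices by label.
    by_label = defaultdict(list)
    for idx, label in enumerate(y):
        by_label[label].append(idx)

    # Shuffle each group and move the first test_size fraction to the test set.
    train_idx, test_idx = [], []
    for indices in by_label.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_size)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])

    x_train = [x[i] for i in train_idx]
    x_test = [x[i] for i in test_idx]
    y_train = [y[i] for i in train_idx]
    y_test = [y[i] for i in test_idx]
    return x_train, x_test, y_train, y_test

# Same toy data as above: 50 samples per label.
x = [["feature ", "one "]] * 50 + [["feature ", "two "]] * 50
y = [1] * 50 + [2] * 50

x_train, x_test, y_train, y_test = stratified_split(x, y)
print("train:", len(x_train), "test:", len(x_test))
print("test labels:", y_test.count(1), "of label 1,", y_test.count(2), "of label 2")
```

With 50 samples per label and `test_size=0.3`, each label contributes 15 samples to the test set, whichever seed is used.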