Video Action Recognition -- Two-Stream Convolutional Networks for Action Recognition in Videos

Two-Stream Convolutional Networks for Action Recognition in Videos, NIPS 2014

http://www.robots.ox.ac.uk/~vgg/software/two_stream_action/

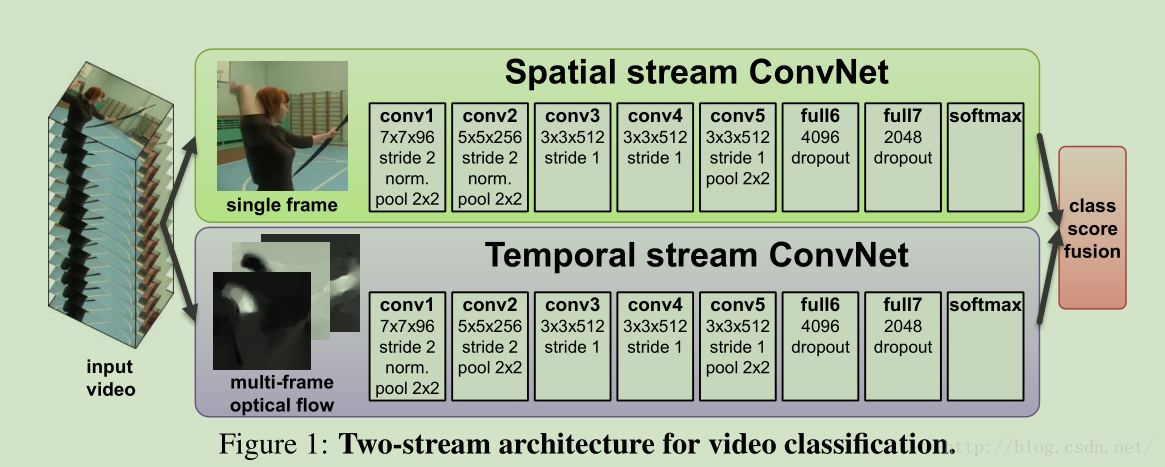

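This paper addresses action classification in video. It uses two separate CNNs to process the spatial and the temporal information of a video independently, and then combines them by late fusion. The spatial stream performs action recognition from the individual still frames of the video, while the temporal stream recognises actions from motion, taking dense optical flow as input. Both streams are implemented as CNNs. Handling temporal and spatial information separately makes it possible to train the two networks on readily available datasets.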

2 Two-stream architecture for video recognition

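A video decomposes naturally into a spatial part and a temporal part. The spatial part corresponds to the appearance of individual frames and carries information about the scenes and objects depicted in the video. The temporal part corresponds to motion across consecutive frames and conveys the movement of the objects and of the observer (the camera).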

Each stream is implemented using a deep ConvNet, softmax scores of which are combined by late fusion. We consider two fusion methods: averaging and training a multi-class linear SVM [6] on stacked L2-normalised softmax scores as features.
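The two fusion options quoted above can be sketched in a few lines of NumPy. This is only an illustration, not the authors' code: the function names `average_fusion` and `stacked_l2_features` are made up here, and normalising each stream separately before stacking is an assumption about what "stacked L2-normalised softmax scores" means. The SVM training itself is left out.

```python
import numpy as np


def average_fusion(spatial_scores, temporal_scores):
    """Late fusion by averaging the per-class softmax scores of the two streams."""
    spatial_scores = np.asarray(spatial_scores, dtype=float)
    temporal_scores = np.asarray(temporal_scores, dtype=float)
    return (spatial_scores + temporal_scores) / 2.0


def stacked_l2_features(spatial_scores, temporal_scores, eps=1e-12):
    """Build a feature vector for a linear SVM from the two score vectors.

    Assumption: each stream's scores are L2-normalised on their own and
    then concatenated ("stacked").
    """
    parts = []
    for scores in (spatial_scores, temporal_scores):
        scores = np.asarray(scores, dtype=float)
        norm = np.linalg.norm(scores, axis=-1, keepdims=True)
        parts.append(scores / np.maximum(norm, eps))
    return np.concatenate(parts, axis=-1)
```

With averaging, the predicted class is simply `np.argmax(average_fusion(s, t))`; the stacked features would instead be passed to whatever linear SVM implementation is at hand.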

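Spatial stream ConvNet: this is simply classification of a single image, so we can use the latest network architectures and pre-train on an image classification dataset.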

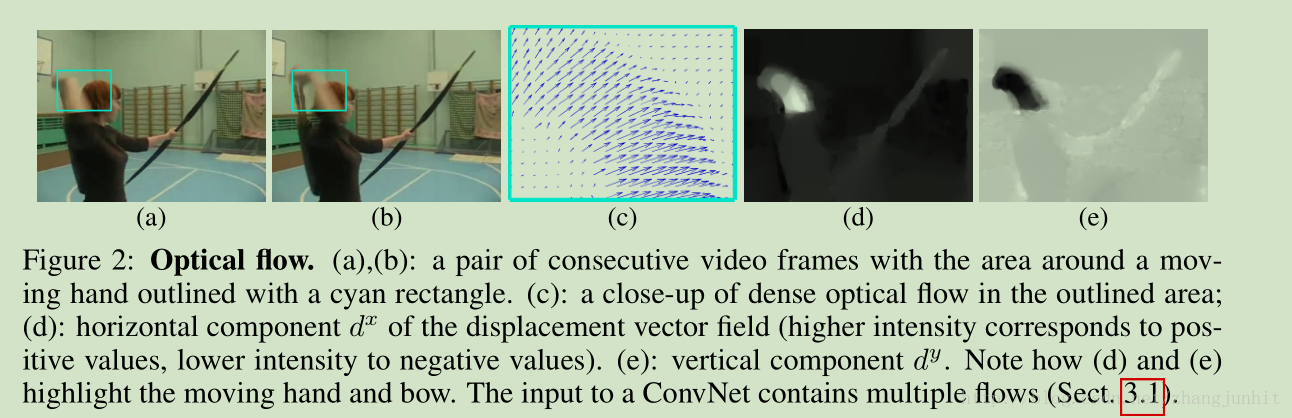

3 Optical flow ConvNets

The input to our model is formed by stacking optical flow displacement fields between several consecutive frames. Such input explicitly describes the motion between video frames, which makes the recognition easier.

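For the optical flow ConvNets, we feed the optical flow fields of several consecutive frames into the CNN; this explicit motion information helps action classification.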

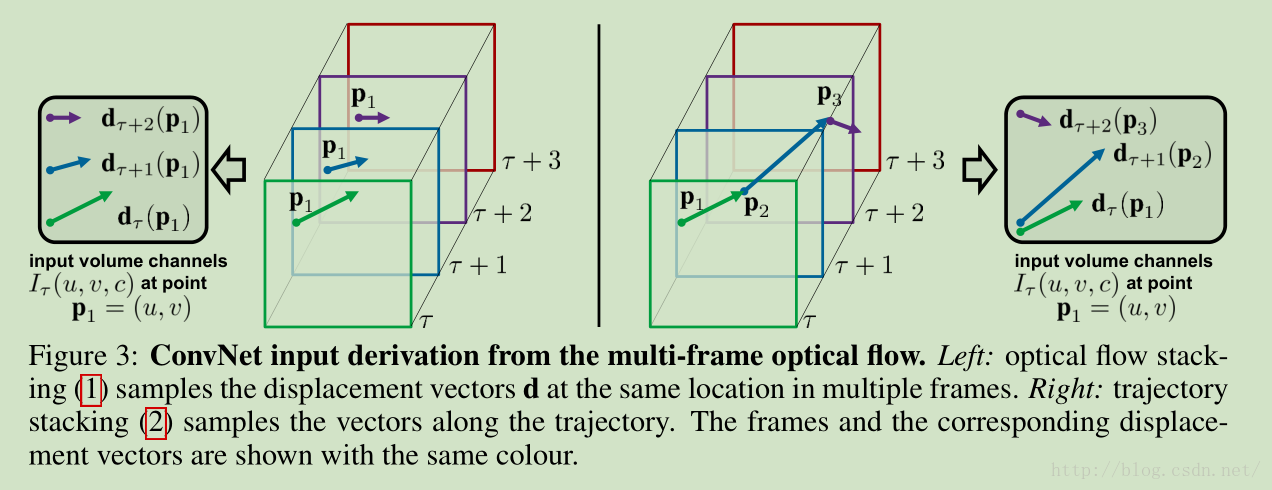

Here we consider several variants of the optical flow-based input:

3.1 ConvNet input configurations

Optical flow stacking. Here the horizontal and vertical components of the optical flow are each stacked as feature maps and fed to the CNN. The horizontal and vertical components of the vector field can be seen as image channels.
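As a rough sketch of optical flow stacking, the snippet below turns L flow fields into a single input with 2L channels. The array layout `(L, H, W, 2)` with the horizontal component first, and the function name `stack_optical_flow`, are assumptions made for this example; the flow fields themselves would come from any dense optical flow method.

```python
import numpy as np


def stack_optical_flow(flows):
    """Stack L flow fields into one (H, W, 2L) input volume.

    flows: array of shape (L, H, W, 2), where flows[k, ..., 0] is the
    horizontal and flows[k, ..., 1] the vertical displacement between
    frame k and frame k + 1 (layout assumed for this sketch).
    Output channels are interleaved: x_0, y_0, x_1, y_1, ...
    """
    flows = np.asarray(flows, dtype=np.float32)
    if flows.ndim != 4 or flows.shape[-1] != 2:
        raise ValueError("expected flows of shape (L, H, W, 2)")
    num_flows, height, width, _ = flows.shape
    # (L, H, W, 2) -> (H, W, L, 2) -> (H, W, 2L)
    return flows.transpose(1, 2, 0, 3).reshape(height, width, 2 * num_flows)
```

For example, 10 stacked flow fields give a 20-channel input, which the first convolutional layer then treats like an ordinary multi-channel image.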

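Trajectory stacking: as an alternative motion representation, we can feed motion trajectory information to the CNN, sampling the flow along the motion trajectories rather than at the same location in every frame.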
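A minimal sketch of trajectory stacking follows. Starting from each pixel, it follows the flow from frame to frame and records the displacement found at the current trajectory point. Nearest-neighbour sampling and clamping at the image border are simplifications chosen for this example, and the `(L, H, W, 2)` layout and the name `stack_trajectories` are likewise assumptions.

```python
import numpy as np


def stack_trajectories(flows):
    """Sample flow along per-pixel trajectories and stack into (H, W, 2L).

    flows: array of shape (L, H, W, 2); flows[k, v, u] = (dx, dy) is the
    displacement of pixel (u, v) from frame k to frame k + 1.
    Channels 2k and 2k + 1 hold the flow of frame k sampled at the point
    reached by following the flow from the starting pixel.
    """
    flows = np.asarray(flows, dtype=np.float32)
    num_flows, height, width, _ = flows.shape
    ys, xs = np.meshgrid(np.arange(height), np.arange(width), indexing="ij")
    px = xs.astype(np.float32)  # current trajectory x-position per pixel
    py = ys.astype(np.float32)  # current trajectory y-position per pixel
    out = np.empty((height, width, 2 * num_flows), dtype=np.float32)
    for k in range(num_flows):
        # Assumption: round to the nearest pixel and clamp to the image.
        ix = np.clip(np.rint(px), 0, width - 1).astype(int)
        iy = np.clip(np.rint(py), 0, height - 1).astype(int)
        d = flows[k][iy, ix]  # (H, W, 2) flow sampled on the trajectory
        out[..., 2 * k] = d[..., 0]
        out[..., 2 * k + 1] = d[..., 1]
        px = px + d[..., 0]  # advance each trajectory by the sampled flow
        py = py + d[..., 1]
    return out
```

In the first frame the result is identical to plain optical flow stacking; the two representations only differ from the second flow field onward, where the sampling location has moved.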

Bi-directional optical flow

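Computing bi-directional optical flow: the input volume stacks L/2 forward flows between frames τ and τ+L/2 together with L/2 backward flows between frames τ−L/2 and τ.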
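One possible way to assemble a bi-directional input is sketched below. The indexing convention is an assumption made for this example: `forward[t]` is the flow from frame t to frame t + 1, `backward[t]` is the flow from frame t to frame t − 1, and both arrays have shape `(T, H, W, 2)`. The function name `stack_bidirectional_flow` is hypothetical.

```python
import numpy as np


def stack_bidirectional_flow(forward, backward, tau, length):
    """Stack length/2 forward and length/2 backward flows around frame tau.

    forward[t]:  flow from frame t to frame t + 1 (unused for t = T - 1).
    backward[t]: flow from frame t to frame t - 1 (unused for t = 0).
    Returns an array of shape (H, W, 2 * length).
    """
    forward = np.asarray(forward, dtype=np.float32)
    backward = np.asarray(backward, dtype=np.float32)
    if length % 2 != 0:
        raise ValueError("length must be even")
    half = length // 2
    num_frames, height, width, _ = forward.shape
    if not (half <= tau <= num_frames - 1 - half):
        raise ValueError("not enough frames around tau")
    fwd = forward[tau:tau + half]                    # t = tau, ..., tau + half - 1
    bwd = backward[tau - half + 1:tau + 1][::-1]     # t = tau, tau - 1, ...
    both = np.concatenate([fwd, bwd], axis=0)        # (length, H, W, 2)
    return both.transpose(1, 2, 0, 3).reshape(height, width, 2 * length)
```

The output has the same number of channels as one-directional stacking with the same L, so the network architecture does not need to change.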

Mean flow subtraction: this is a form of input normalisation that shifts the mean to 0.

It is generally beneficial to perform zero-centering of the network input, as it allows the model to better exploit the rectification non-linearities

In our case, we consider a simpler approach: from each displacement field d we subtract its mean vector.
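The mean subtraction described in the quote can be sketched as follows: for every displacement field, the mean (dx, dy) vector over all pixels is computed and subtracted. The `(L, H, W, 2)` layout and the name `subtract_mean_flow` are assumptions for this example.

```python
import numpy as np


def subtract_mean_flow(flows):
    """Subtract from each displacement field its own mean vector.

    flows: array of shape (L, H, W, 2). The mean is taken over the
    spatial axes separately for each of the L fields and for each of
    the two components.
    """
    flows = np.asarray(flows, dtype=np.float32)
    mean_vec = flows.mean(axis=(1, 2), keepdims=True)  # shape (L, 1, 1, 2)
    return flows - mean_vec
```

A constant displacement shared by all pixels of a field, such as a uniform camera translation, is removed entirely by this step, while motion relative to that mean is preserved.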

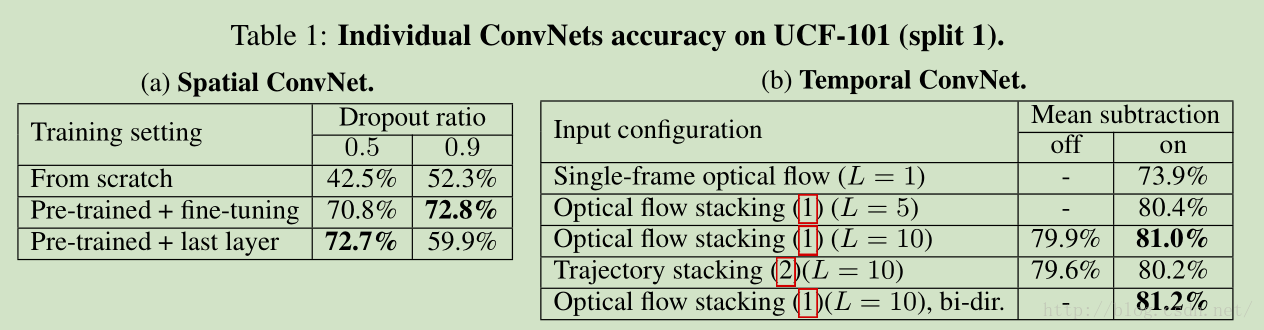

Individual ConvNets accuracy on UCF-101

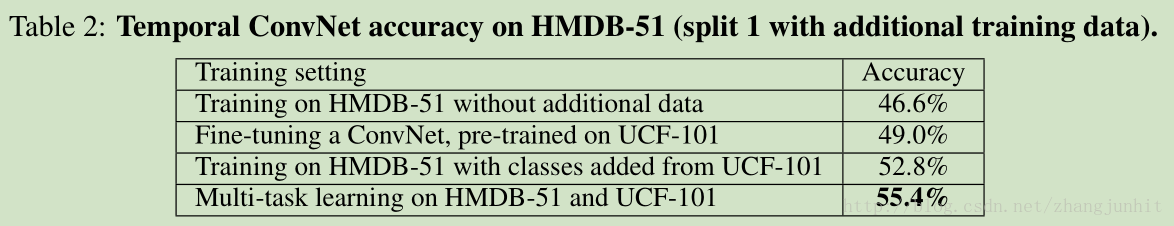

Temporal ConvNet accuracy on HMDB-51

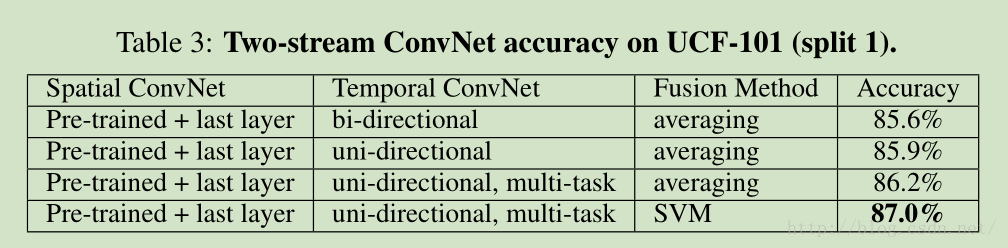

Two-stream ConvNet accuracy on UCF-101

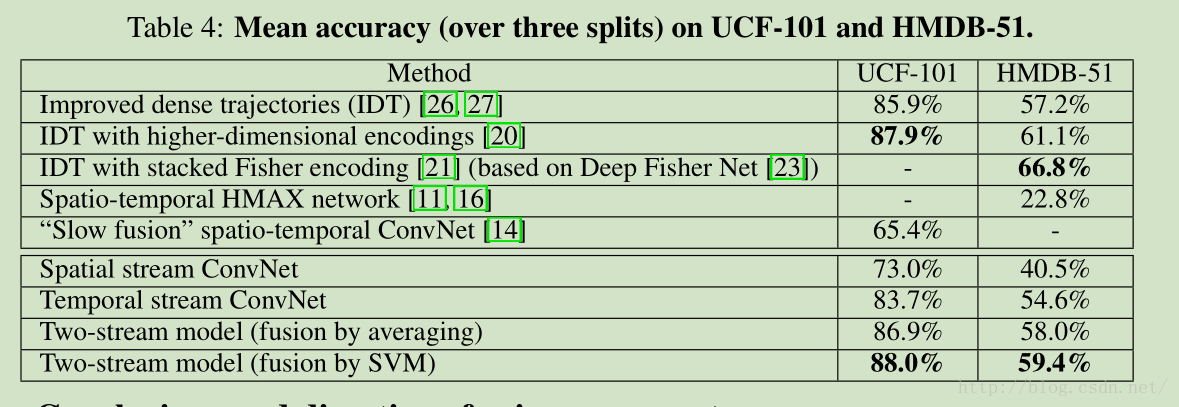

Mean accuracy (over three splits) on UCF-101 and HMDB-51

--------------------- This article is from the CSDN blog of O天涯海閣O. Full text: https://blog.csdn.net/zhangjunhit/article/details/77991038?utm_source=copy