RPN網路程式碼解讀

1. 說在前面的話

在目標檢測領域Faster RCNN可以說是無人不知無人不曉,它裡面有一個網路結構RPN(Region Proposal Network)用於在特徵圖上產生候選預測區域。但是呢,這個網路結構具體是怎麼工作的呢?網上有很多種解釋,但是都是雲裡霧裡的,還是直接擼程式碼來得直接,這裡就直接從程式碼入手直接擼吧-_-||。

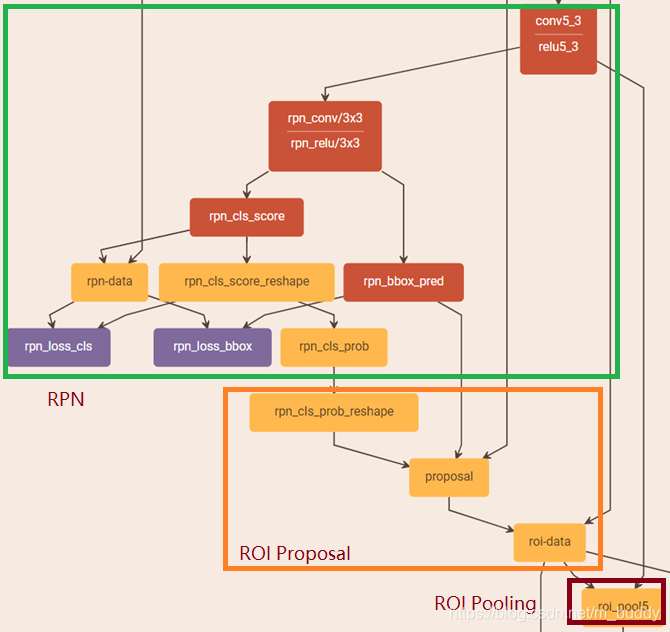

首先,來看一下Faster RCNN中RPN的結構是什麼樣子的吧。可以看到RPN直接通過一個卷積層rpn_conv/3x3直接接在了分類網路的特徵層輸出上面,之後接上兩個卷積層rpn_clc_score與rpn_bbox_pred分別用於產生前景背景分類與預測框。之後再由python層AnchorTargetLayer產生anchor機制的分類與預測框。然後,經過ROI Proposal產生ROI區域的候選,並通過ROI Pooling規範到相同的尺寸上進行後續處理。大體的結構如下圖所示:

雖然在上面的圖中能夠對RPN網路有一個比較直觀但是籠統的概念,其具體內部搞了啥子,並不清楚。所以還是擼一下它裡面的程式碼看看吧,首先來看RPN模組中各個檔案說明。

(1)generate_anchors.py

在[0,0,15,15]基礎anchor的基礎上生成不同寬高比例以及縮放大小的anchor。

Generates a regular grid of multi-scale, multi-aspect anchor boxes.

(2)proposal_layer.py

將RPN網路的每個anchor的分類得分以及檢測框迴歸預估轉換為目標候選

Converts RPN outputs (per-anchor scores and bbox regression estimates) into object proposals.

(3)anchor_target_layer.py

為每個anchor生成訓練目標或標籤,分類的標籤只是0(非目標)1(是目標)-1(忽略)。當分類的標籤大於0的時候預測框的迴歸才被指定。

Generates training targets/labels for each anchor. Classification labels are 1 (object), 0 (not object) or -1 (ignore).

Bbox regression targets are specified when the classification label is > 0.

(4)proposal_target_layer.py

為每個目標候選生成訓練目標或標籤,分類標籤從 (背景0或目標類別 ),自然lable值大於0的才被指定預測框迴歸。

Generates training targets/labels for each object proposal: classification labels 0 - K (bg or object class 1, … , K)

and bbox regression targets in that case that the label is > 0.

(5)generate.py

使用RPN從IMDB輸入資料上產生目標候選。

Generate object detection proposals from an imdb using an RPN.

現在對RPN網路的結構和RPN模組中檔案有了一個大體的認識,那麼接下來就開始閱讀裡面的實現程式碼,看看它究竟幹了些什麼事情。

2. RPN網路部分

這個部分使用到的檔案有anchor_target_layer.py、generate_anchors.py。這裡的generate_anchors.py是用來產生模型需要的anchor的,其中也包含了一些其它的輔助函式,它不是講解說明的重點,這裡不作介紹。主要來看anchor_target_layer.py檔案。

首先,來看看這個層的初始化函式:

def setup(self, bottom, top):

layer_params = yaml.load(self.param_str_)

anchor_scales = layer_params.get('scales', (8, 16, 32)) # 尺度變化引數

self._anchors = generate_anchors(scales=np.array(anchor_scales)) # 生成預設的9個anchor

self._num_anchors = self._anchors.shape[0]

self._feat_stride = layer_params['feat_stride']

# allow boxes to sit over the edge by a small amount

# 設為0,則取出任何超過影象邊界的proposals,只要超出一點點,都要去除

self._allowed_border = layer_params.get('allowed_border', 0)

height, width = bottom[0].data.shape[-2:]

if DEBUG:

print 'AnchorTargetLayer: height', height, 'width', width

A = self._num_anchors

# labels 是否為目標的分類

top[0].reshape(1, 1, A * height, width)

# bbox_targets

top[1].reshape(1, A * 4, height, width)

# bbox_inside_weights

top[2].reshape(1, A * 4, height, width)

# bbox_outside_weights

top[3].reshape(1, A * 4, height, width)

接下來就是重頭的forward函式,首先,該函式在特徵圖生成需要運算的總的anchor

# 1. Generate proposals from bbox deltas and shifted anchors

# x方向的偏移個數,大小為特徵圖的width

shift_x = np.arange(0, width) * self._feat_stride

# y方向的偏移個數,大小為特徵圖的height

shift_y = np.arange(0, height) * self._feat_stride

# shift_x,shift_y均為width×height的二維陣列(meshgrid生成),對應位置的元素組合即構成影象上需要偏移量大小

#(偏移量大小是相對與影象最左上角的那9個anchor的偏移量大小),也就是說總共會得到width×height×9個偏移值對。

# 這些偏移值對與初始的anchor相加即可得到

# 所有的anchors,所以總共會產生width×height×9個anchors,且儲存在all_anchors變數中

shift_x, shift_y = np.meshgrid(shift_x, shift_y)

shifts = np.vstack((shift_x.ravel(), shift_y.ravel(),

shift_x.ravel(), shift_y.ravel())).transpose() # 維度輸出為(width*height)*4

# add A anchors (1, A, 4) to

# cell K shifts (K, 1, 4) to get

# shift anchors (K, A, 4)

# reshape to (K*A, 4) shifted anchors

A = self._num_anchors

K = shifts.shape[0] # K=width*height

# 在之前9個anchor的基礎上產生K*A個anchor,既是總的anchor數量

all_anchors = (self._anchors.reshape((1, A, 4)) +

shifts.reshape((1, K, 4)).transpose((1, 0, 2)))

all_anchors = all_anchors.reshape((K * A, 4))

total_anchors = int(K * A) # 總的anchor數量

產生這麼多的anchor自然有一些超出了邊界,那麼就需要對其進行剔除

# only keep anchors inside the image 在影象內部的anchor,即是有效anchor,邊界之外的刪除掉

inds_inside = np.where(

(all_anchors[:, 0] >= -self._allowed_border) &

(all_anchors[:, 1] >= -self._allowed_border) &

(all_anchors[:, 2] < im_info[1] + self._allowed_border) & # width

(all_anchors[:, 3] < im_info[0] + self._allowed_border) # height

)[0]

初始化可用anchor對應的lable,分類標籤的含義下面寫了

# label: 1 is positive, 0 is negative, -1 is dont care

# 影象內部anchor對應的分類,是否為目標的分類,大小為符合條件anchor的數量

labels = np.empty((len(inds_inside), ), dtype=np.float32)

labels.fill(-1)

在之前生成了計算需要的anchor了那麼接下來就是需要計算anchor與gt之間的關係了,也就是使用overlap area的面積來度量,每個anchor的是否為目標分類也是根據這個度量來設定的。

# overlaps between the anchors and the gt boxes

# overlaps (ex, gt)返回維度為【anchors * gt_boxes】大小的二維陣列

overlaps = bbox_overlaps(

np.ascontiguousarray(anchors, dtype=np.float),

np.ascontiguousarray(gt_boxes, dtype=np.float))

argmax_overlaps = overlaps.argmax(axis=1) # 求取於anchor重疊最大的gt

max_overlaps = overlaps[np.arange(len(inds_inside)), argmax_overlaps] # 取出與每個anchor重疊最大gt的重疊面積

gt_argmax_overlaps = overlaps.argmax(axis=0) # 求出與每個gt重疊面積最大的anchor

gt_max_overlaps = overlaps[gt_argmax_overlaps,

np.arange(overlaps.shape[1])] # 取出與每個gt重疊面積最大的

gt_argmax_overlaps = np.where(overlaps == gt_max_overlaps)[0]

# 重疊面積小於閾值0.3的標註為0

if not cfg.TRAIN.RPN_CLOBBER_POSITIVES:

# assign bg labels first so that positive labels can clobber them

labels[max_overlaps < cfg.TRAIN.RPN_NEGATIVE_OVERLAP] = 0

# fg label: for each gt, anchor with highest overlap 與gt圖重疊最大的對應anchor分類被設定為1

labels[gt_argmax_overlaps] = 1

# fg label: above threshold IOU 將與gt重疊的面積大於閾值0.7的anchor也將其分類設定為1

labels[max_overlaps >= cfg.TRAIN.RPN_POSITIVE_OVERLAP] = 1

if cfg.TRAIN.RPN_CLOBBER_POSITIVES:

# assign bg labels last so that negative labels can clobber positives

labels[max_overlaps < cfg.TRAIN.RPN_NEGATIVE_OVERLAP] = 0

論文中說從所有anchor中隨機選取256個anchor,前景128個,背景128個。注意:那種label為-1的不會當前景也不會當背景。

下面這兩段程式碼是前一部分是在所有前景的anchor中選128個,後一部分是在所有的背景anchor中選128個。如果前景的個數少於了128個,就把所有的anchor選出來,差的由背景部分補。這和Fast RCNN選取ROI一樣。

# subsample positive labels if we have too many 要是執行到這裡得到的分類為1的太多了那就進行取樣

# 從所有label為1的anchor中選擇128個,剩下的anchor的label全部置為-1

num_fg = int(cfg.TRAIN.RPN_FG_FRACTION * cfg.TRAIN.RPN_BATCHSIZE) # 取樣的閾值

fg_inds = np.where(labels == 1)[0]

if len(fg_inds) > num_fg:

disable_inds = npr.choice(

fg_inds, size=(len(fg_inds) - num_fg), replace=False)

labels[disable_inds] = -1

# subsample negative labels if we have too many 要是被分類為非1的太多了那麼也要進行取樣

# 這裡num_bg不是直接設為128,而是256減去label為1的個數,這樣如果label為1的不夠,就用label為0的填充,這個程式碼實現很巧

num_bg = cfg.TRAIN.RPN_BATCHSIZE - np.sum(labels == 1)

bg_inds = np.where(labels == 0)[0]

if len(bg_inds) > num_bg:

disable_inds = npr.choice(

bg_inds, size=(len(bg_inds) - num_bg), replace=False)

labels[disable_inds] = -1

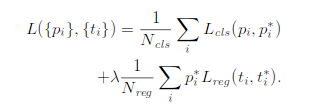

論文中RPN的損失函式是這樣定義的:

這個loss函式和Fast RCNN中的loss函式差不多,所以在計算的時候是每個座標單獨進行smoothL1計算,所以引數

和

必須弄成4維的向量,並不是在論文中的就一個數值。

bbox_inside_weights實際上指的就是

,bbox_outside_weights指的是

。論文中說如果anchor是前景,

就是1,為背景,

就是0。label為-1的,在這個程式碼來看也是設定為0,應該是在後面不會參與計算,這個設定為多少都無所謂。

是進行標準化操作,就是取平均。這個平均是把所有的label 0和label 1加起來。因為選的是256個anchor做訓練,所以實際上這個值是

。

bbox_targets = np.zeros((len(inds_inside), 4), dtype=np.float32) # 之前anchor過濾之後與之對應的bbox

bbox_targets = _compute_targets(anchors, gt_boxes[argmax_overlaps, :]) # 計算anchor框與gt框之間的殘差用於迴歸

bbox_inside_weights = np.zeros((len(inds_inside), 4), dtype=np.float32)

bbox_inside_weights[labels == 1, :] = np.array(cfg.TRAIN.RPN_BBOX_INSIDE_WEIGHTS)

bbox_outside_weights = np.zeros((len(inds_inside), 4), dtype=np.float32)

# 對樣本權重進行歸一化

if cfg.TRAIN.RPN_POSITIVE_WEIGHT < 0:

# uniform weighting of examples (given non-uniform sampling)

num_examples = np.sum(labels >= 0)

positive_weights = np.ones((1, 4)) * 1.0 / num_examples

negative_weights = np.ones((1, 4)) * 1.0 / num_examples

else:

assert ((cfg.TRAIN.RPN_POSITIVE_WEIGHT > 0) &

(cfg.TRAIN.RPN_POSITIVE_WEIGHT < 1))

positive_weights = (cfg.TRAIN.RPN_POSITIVE_WEIGHT /

np.sum(labels == 1))

negative_weights = ((1.0 - cfg.TRAIN.RPN_POSITIVE_WEIGHT) /

np.sum(labels == 0))

bbox_outside_weights[labels == 1, :] = positive_weights

bbox_outside_weights[labels == 0, :] = negative_weights

之後將計算的anchor映射回原來的全部的anchor中去:

# map up to original set of anchors

# 主要是將長度為len(inds_inside)的資料映射回長度total_anchors的資料,total_anchors=(width*height)×9

labels = _unmap(labels, total_anchors, inds_inside, fill=-1)

bbox_targets = _unmap(bbox_targets, total_anchors, inds_inside, fill=0)

bbox_inside_weights = _unmap(bbox_inside_weights, total_anchors, inds_inside, fill=0)

bbox_outside_weights = _unmap(bbox_outside_weights, total_anchors, inds_inside, fill=0)

值得注意的是,rpn網路的訓練是256個anchor,128個positive,128個negative。但anchor_target_layer層的輸出並不是只有256個anchor的label和座標變換,而是所有的anchor。其中_unmap函式就很好體現了這一點。那訓練的時候怎麼實現訓練這256個呢?實際上,這一層的4個輸出,rpn_labels是需要輸出到rpn_loss_cls層,其他的3個輸出到rpn_loss_bbox,label實際上就是loss function前半部分中的 (即計算分類的loss),這是一個log loss,為-1的label是無法進行log計算的,剩下的0、1就直接計算,這一部分實現了256。loss function後半部分是計算bbox座標的loss, ,也就是bbox_inside_weights,論文中說了activated only for positive anchors,只有為正例的anchor才去計算座標的損失,這是 是1,其他情況都是0。所以呢,只有那256個才真正改變了loss值,其他的都是0。

bbox_inside_weights = np.zeros((len(inds_inside), 4), dtype=np.float32)

bbox_inside_weights[labels == 1, :] = np.array(cfg.TRAIN.RPN_BBOX_INSIDE_WEIGHTS)

這段程式碼也體現了這個思想,所以這也實現了256。

最後就是維度轉換並設定這個層的4個輸出了

# labels

labels = labels.reshape((1, height, width, A)).transpose(0, 3, 1, 2)

labels = labels.reshape((1, 1, A * height, width))

top[0].reshape(*labels.shape)

top[0].data[...] = labels

# bbox_targets

bbox_targets = bbox_targets \

.reshape((1, height, width, A * 4)).transpose(0, 3, 1, 2)

top[1].reshape(*bbox_targets.shape)

top[1].data[...] = bbox_targets

# bbox_inside_weights

bbox_inside_weights = bbox_inside_weights \

.reshape((1, height, width, A * 4)).transpose(0, 3, 1, 2)

assert bbox_inside_weights.shape[2] == height

assert bbox_inside_weights.shape[3] == width

top[2].reshape(*bbox_inside_weights.shape)

top[2].data[...] = bbox_inside_weights

# bbox_outside_weights

bbox_outside_weights = bbox_outside_weights \

.reshape((1, height, width, A * 4)).transpose(0, 3, 1, 2)

assert bbox_outside_weights.shape[2] == height

assert bbox_outside_weights.shape[3] == width

top[3].reshape(*bbox_outside_weights.shape)

top[3].data[...] = bbox_outside_weights

到這裡,由特徵圖與anchor生成anchor分類與預測框的流程梳理完了,接下來就是根據對該層輸出計算RPN部分的loss了。

**PS:**我們注意到,該層中沒有並沒有實現反向傳播,這是為毛啊?沒有給網路提供梯度。其實是因為這個層的輸入資訊rpn_cls_score就提供了一個長寬資訊就回家洗洗睡了,所以就沒必要傳遞梯度了。

3. ROI Proposal網路部分

3.1 ProposalLayer

該層有3個輸入:fg/bg anchors分類器結果rpn_cls_prob_reshape,對應的bbox reg的

變換量rpn_bbox_pred,以及im_info;另外還有引數feat_stride=16。

縮排首先解釋im_info。對於一副任意大小影象,傳入Faster RCNN前首先reshape到固定

,

則儲存了此次縮放的所有資訊。然後經過Conv Layers,經過4次pooling變為

大小,其中

則儲存了該資訊。所有這些數值都是為了將proposal映射回原圖而設定的。

首先來看,該層的初始函式

def setup(self, bottom, top):

# parse the layer parameter string, which must be valid YAML

layer_params = yaml.load(self.param_str_)

self._feat_stride = layer_params['feat_stride']

anchor_scales = layer_params.get('scales', (8, 16, 32))

self._anchors = generate_anchors(scales=np.array(anchor_scales)) # 產生預設的9個anchor

self._num_anchors = self._anchors.shape[0]

if DEBUG:

print 'feat_stride: {}'.format(self._feat_stride)

print 'anchors:'

print self._anchors

# rois blob: holds R regions of interest, each is a 5-tuple

# (n, x1, y1, x2, y2) specifying an image batch index n and a

# rectangle (x1, y1, x2, y2)

top[0].reshape(1, 5)

# scores blob: holds scores for R regions of interest

if len(top) > 1:

top[1].reshape(1, 1, 1, 1)

在進行前向運算之前,需要載入一些配置項:

cfg_key = str(self.phase) # either 'TRAIN' or 'TEST' 階段為train和test的時候nms的輸入輸出數目不一樣

# Number of top scoring boxes to keep before apply NMS to RPN proposals

# 對RPN接面果使用NMS之前需要保留的框

pre_nms_topN = cfg[cfg_key].RPN_PRE_NMS_TOP_N # 12000

# Number of top scoring boxes to keep after applying NMS to RPN proposals

# 對RPN接面果使用NMS之後需要保留的框

post_nms_topN = cfg[cfg_key].RPN_POST_NMS_TOP_N # 1200

## NMS threshold used on RPN proposals 使用nms時候的閾值

nms_thresh = cfg[cfg_key].RPN_NMS_THRESH # 0.7

# Proposal height and width both need to be greater than RPN_MIN_SIZE (at orig image scale)

min_size = cfg[cfg_key].RPN_MIN_SIZE # 16

# the first set of _num_anchors channels are bg probs

# the second set are the fg probs, which we want

# 前9個通道為背景類;後9個通道為非背景類

scores = bottom[0].data[:, self._num_anchors:, :, :] # 預測的分類(卷積輸出:18)

bbox_deltas = bottom[1].data # 預測框的偏移量

im_info = bottom[2].data[0, :] # 影象的資訊

接下來就開始proposal了

step1:再次生成anchor,並使用bbox_deltas得到預測框

# 1. Generate proposals from bbox deltas and shifted anchors

height, width = scores.shape[-2:]

if DEBUG:

print 'score map size: {}'.format(scores.shape)

# Enumerate all shifts 這部分同anchor_target_layer

shift_x = np.arange(0, width) * self._feat_stride

shift_y = np.arange(0, height) * self._feat_stride

shift_x, shift_y = np.meshgrid(shift_x, shift_y)

shifts = np.vstack((shift_x.ravel(), shift_y.ravel(),

shift_x.ravel(), shift_y.ravel())).transpose()

# Enumerate all shifted anchors:

#

# add A anchors (1, A, 4) to

# cell K shifts (K, 1, 4) to get

# shift anchors (K, A, 4)

# reshape to (K*A, 4) shifted anchors

A = self._num_anchors

K = shifts.shape[0]

anchors = self._anchors.reshape((1, A, 4)) + \

shifts.reshape((1, K, 4)).transpose((1, 0, 2))

anchors = anchors.reshape((K * A, 4))

# Transpose and reshape predicted bbox transformations to get them

# into the same order as the anchors:

#

# bbox deltas will be (1, 4 * A, H, W) format

# transpose to (1, H, W, 4 * A)

# reshape to (1 * H * W * A, 4) where rows are ordered by (h, w, a)

# in slowest to fastest order

bbox_deltas = bbox_deltas.transpose((0, 2, 3, 1)).reshape((-1, 4))

# Same story for the scores:

#

# scores are (1, A, H, W) format

# transpose to (1, H, W, A)

# reshape to (1 * H * W * A, 1) where rows are ordered by (h, w, a)

scores = scores.transpose((0, 2, 3, 1)).reshape((-1, 1))

# Convert anchors into proposals via bbox transformations

# 利用 bbox_deltas 對anchors進行修正,得到proposals的預測位置,可以參考論文中公式

# 對於x,y使用線性變換,對於w,h使用exp

proposals = bbox_transform_inv(anchors, bbox_deltas)

step2:剪裁預測框使之在影象範圍之內

# 2. clip predicted boxes to image

# 剪裁預測框到影象的邊界內

proposals = clip_boxes(proposals, im_info[:2])

step3:去除小的預測框,閾值為16

# 3. remove predicted boxes with either height or width < threshold

# (NOTE: convert min_size to input image scale stored in im_info[2])

# 去除長寬小於16的預測框,因為進行過4次Pooling呀

keep = _filter_boxes(proposals, min_size * im_info[2])

proposals = proposals[keep, :]

scores = scores[keep]

step4:對於預測框的分數進行排序,並且取前N個送去NMS

# 4. sort all (proposal, score) pairs by score from highest to lowest

# 5. take top pre_nms_topN (e.g. 6000) 選出Top_N,後面再進行 NMS,見前面的設定

order = scores.ravel().argsort()[::-1]

if pre_nms_topN > 0:

order = order[:pre_nms_topN]

proposals = proposals[order, :] # 保留了前pre_nms_topN個框的座標資訊

scores = scores[order] # 保留了前pre_nms_topN個框的分數資訊

step5:進行NMS並取前N個

# 6. apply nms (e.g. threshold = 0.7)

# 7. take after_nms_topN (e.g. 300)

# 8. return the top proposals (-> RoIs top) 對預測框進行nms

keep = nms(np.hstack((proposals, scores)), nms_thresh)

if post_nms_topN > 0:

keep = keep[:post_nms_topN]

proposals = proposals[keep, :] # 對nms之後的預測框取前after_nms_topN個

scores = scores[keep]

step6:輸出結果

# Output rois blob

# Our RPN implementation only supports a single input image, so all

# batch inds are 0

batch_inds = np.zeros((proposals.shape[0], 1), dtype=np.float32)

blob = np.hstack((batch_inds, proposals.astype(np.float32, copy=False)))

top[0].reshape(*(blob.shape))

top[0].data[...] = blob

# [Optional] output scores blob

if len(top) > 1:

top[1].reshape(*(scores.shape))

top[1].data[...] = scores

3.2 ProposalTargetLayer

這個層主要完成由RPN得到的預測框到對應分類的匹配,其中對每次訓練的預測框進行了限制(每次只處理32個目標預測框,總數的1/4),詳見_sample_rois函式。首先,得到分類的數目,並初始化輸出blob的shape

def setup(self, bottom, top):

layer_params = yaml.load(self.param_str_)

self._num_classes = layer_params['num_classes']

# sampled rois (0, x1, y1, x2, y2)

top[0].reshape(1, 5)

# labels

top[1].reshape(1, 1)

# bbox_targets

top[2].reshape(1, self._num_classes * 4)

# bbox_inside_weights

top[3].reshape(1, self._num_classes * 4)

# bbox_outside_weights

top[4].reshape(1, self._num_classes * 4)

前向傳播函式

def forward(self, bottom, top):

# Proposal ROIs (0, x1, y1, x2, y2) coming from RPN

# (i.e., rpn.proposal_layer.ProposalLayer), or any other source

all_rois = bottom[0].data # RPN預測框,維度為[N,5]

# GT boxes (x1, y1, x2, y2, label)

# TODO(rbg): it's annoying that sometimes I have extra info before

# and other times after box coordinates -- normalize to one format

gt_boxes = bottom[1].data # GT資訊,維度[M,5]

# Include ground-truth boxes in the set of candidate rois

# 將ground truth框加入到待分類的框裡面(相當於增加正樣本個數)

# all_rois輸出維度[N+M,5],前一維表示是從RPN的輸出選出的框和ground truth框合在一起了

zeros = np.zeros((gt_boxes.shape[0], 1), dtype=gt_boxes.dtype)

all_rois = np.vstack(

(all_rois, np.hstack((zeros, gt_boxes[:, :-1])))

) # 先在每個ground truth框前面插入0(這樣才能和N個從RPN的輸出選出的框對齊),然後把ground truth框插在最後

# Sanity check: single batch only

assert np.all(all_rois[:, 0] == 0), \

'Only single item batches are supported'

num_images = 1

rois_per_image = cfg.TRAIN.BATCH_SIZE / num_images #cfg.TRAIN.BATCH_SIZE為128

# cfg.TRAIN.FG_FRACTION為0.25,即在一次分類訓練中前景框只能有32個

fg_rois_per_image = np.round(cfg.TRAIN.FG_FRACTION * rois_per_image)

# Sample rois with classification labels and bounding box regression

# targets

# _sample_rois選擇進行分類訓練的框,並求取他們類別和座標的ground truth和計算邊框損失loss時需要的bbox_inside_weights

labels, rois, bbox_targets, bbox_inside_weights = _sample_rois(

all_rois, gt_boxes, fg_rois_per_image,

rois_per_image, self._num_classes)

if DEBUG:

print 'num fg: {}'.format((labels > 0).sum())

print 'num bg: {}'.format((labels == 0).sum())

self._count += 1

self._fg_num += (labels > 0).sum()

self._bg_num += (labels == 0).sum()

print 'num fg avg: {}'.format(self._fg_num / self._count)

print 'num bg avg: {}'.format(self._bg_num / self._count)

print 'ratio: {:.3f}'.format(float(self._fg_num) / float(self._bg_num))

# sampled rois 取樣之後最終保留的全部預測框

top[0].reshape(*rois.shape)

top[0].data[...] = rois

# classification labels 預測框的分類

top[1].reshape(*labels.shape)

top[1].data[...] = labels

# bbox_targets 預測框與GT的殘差

top[2].reshape(*bbox_targets.shape)

top[2].data[...] = bbox_targets

# bbox_inside_weights

top[3].reshape(*bbox_inside_weights.shape)

top[3].data[...] = bbox_inside_weights

# bbox_outside_weights

top[4].reshape(*bbox_inside_weights.shape)

top[4].data[...] = np.array(bbox_inside_weights > 0).astype(np.float32)

對預測框進行取樣並計算殘差,在GT上找到其對應的分類

def _sample_rois(all_rois, gt_boxes, fg_rois_per_image, rois_per_image, num_classes):

"""Generate a random sample of RoIs comprising foreground and background

examples.

"""

# overlaps: (rois x gt_boxes)

# 計算所有roi和ground truth框之間的重合度

# 只取座標資訊,roi中取第二到第五個數(因為補0了呀),ground truth框中取第一到第四個數

overlaps = bbox_overlaps(

np.ascontiguousarray(all_rois[:, 1:5], dtype=np.float),

np.ascontiguousarray(gt_boxes[:, :4], dtype=np.float))

gt_assignment = overlaps.argmax(axis=1) # 對於每個roi,找到對應的gt_box座標 shape: [len(all_rois),]

max_overlaps = overlaps.max(axis=1) # 對於每個roi,找到與gt_box重合的最大的overlap shape: [len(all_rois),]

labels = gt_boxes[gt_assignment, 4] #對於每個roi,找到歸屬的類別: [len(all_rois),]

# Select foreground RoIs as those with >= FG_THRESH overlap

# 大於閾值的實際前景的數量

fg_inds = np.where(max_overlaps >= cfg.TRAIN.FG_THRESH)[0]

# Guard against the case when an image has fewer than fg_rois_per_image

# foreground RoIs 求取用於迴歸的前景框數量

fg_rois_per_this_image = min(fg_rois_per_image, fg_inds.size)

# Sample foreground regions without replacement

# 如果需要的話,就隨機地排除一些前景框

if fg_inds.size > 0:

fg_inds = npr.choice(fg_inds, size=fg_rois_per_this_image, replace=False)

# Select background RoIs as those within [BG_THRESH_LO, BG_THRESH_HI)

# 找到屬於背景的rois(就是與gt_box覆蓋介於0和0.5之間的)

bg_inds = np.where((max_overlaps < cfg.TRAIN.BG_THRESH_HI) &

(max_overlaps >= cfg.TRAIN.BG_THRESH_LO))[0]

# Compute number of background RoIs to take from this image (guarding

# against there being fewer than desired)

bg_rois_per_this_image = rois_per_image - fg_rois_per_this_image # 128-32個

bg_rois_per_this_image = min(bg_rois_per_this_image, bg_inds.size) # 以下操作同fg

# Sample background regions without replacement

if bg_inds.size > 0:

bg_inds = npr.choice(bg_inds, size=bg_rois_per_this_image, replace=False)

# The indices that we're selecting (both fg and bg)

keep_inds = np.append(fg_inds, bg_inds) # 記錄一下運算之後最終保留的框

# Select sampled values from various arrays:

labels = labels[keep_inds] # 記錄一下最終保留的框對應的label

# Clamp labels for the background RoIs to 0

labels[fg_rois_per_this_image:] = 0 # 把背景框的分類置0

rois = all_rois[keep_inds] # 取出最終保留的rois

# 得到最終保留的框的類別ground truth值和座標變換ground truth值,得到預測框的誤差

bbox_target_data = _compute_targets(

rois[:, 1:5], gt_boxes[gt_assignment[keep_inds], :4], labels)

# 得到最終計算loss時使用的ground truth邊框迴歸值和bbox_inside_weights

bbox_targets, bbox_inside_weights = \

_get_bbox_regression_labels(bbox_target_data, num_classes)

return labels, rois, bbox_targets, bbox_inside_weights

計算預測框殘差:

def _compute_targets(ex_rois, gt_rois, labels):

"""Compute bounding-box regression targets for an image."""

assert ex_rois.shape[0] == gt_rois.shape[0]

assert ex_rois.shape[1] == 4

assert gt_rois.shape[1] == 4

targets = bbox_transform(ex_rois, gt_rois) # 獲得預測框與gt的殘差

if cfg.TRAIN.BBOX_NORMALIZE_TARGETS_PRECOMPUTED: # 是否需要進行歸一化

# Optionally normalize targets by a precomputed mean and stdev

targets = ((targets - np.array(cfg.TRAIN.BBOX_NORMALIZE_MEANS))

/ np.array(cfg.TRAIN.BBOX_NORMALIZE_STDS))

# 將殘差插到lable的後面(水平插入)

return np.hstack(

(labels[:, np.newaxis], targets)).astype(np.float32, copy=False)

整理資料到需要的格式:

def _get_bbox_regression_labels(bbox_target_data, num_classes):

"""Bounding-box regression targets (bbox_target_data) are stored in a

compact form N x (class, tx, ty, tw, th)

This function expands those targets into the 4-of-4*K representation used

by the network (i.e. only one class has non-zero targets).

Returns:

bbox_target (ndarray): N x 4K blob of regression targets

bbox_inside_weights (ndarray): N x 4K blob of loss weights

"""

clss = bbox_target_data[:, 0] # 每個預測框通過重疊面積與gt比較得到的分類

# 對應分類上預測框的誤差

bbox_targets = np.zeros((clss.size, 4 * num_classes), dtype=np.float32)

# 用全0初始化一下bbox_inside_weights

bbox_inside_weights = np.zeros(bbox_targets.shape, dtype=np.float32)

inds = np.where(clss > 0)[0] # 非背景類

for ind in inds:

cls = clss[ind]

start = 4 * cls # 找到從屬的類別對應的座標迴歸值的起始位置

end = start + 4 # 找到從屬的類別對應的座標迴歸值的結束位置

bbox_targets[ind, start:end] = bbox_target_data[ind, 1:] #在對應類的座標迴歸上置相應的值(預測框誤差)

# 將bbox_inside_weights上的對應類的座標迴歸值置1

bbox_inside_weights[ind, start:end] = cfg.TRAIN.BBOX_INSIDE_WEIGHTS # (1.0, 1.0, 1.0, 1.0)

return bbox_targets, bbox_inside_weights

4. ROI Pooling

這部分參考:

關於ROI Pooling Layer的解讀