解決Scrapy-Redis爬取完畢之後繼續空跑的問題

阿新 • • 發佈:2018-12-03

1. 背景

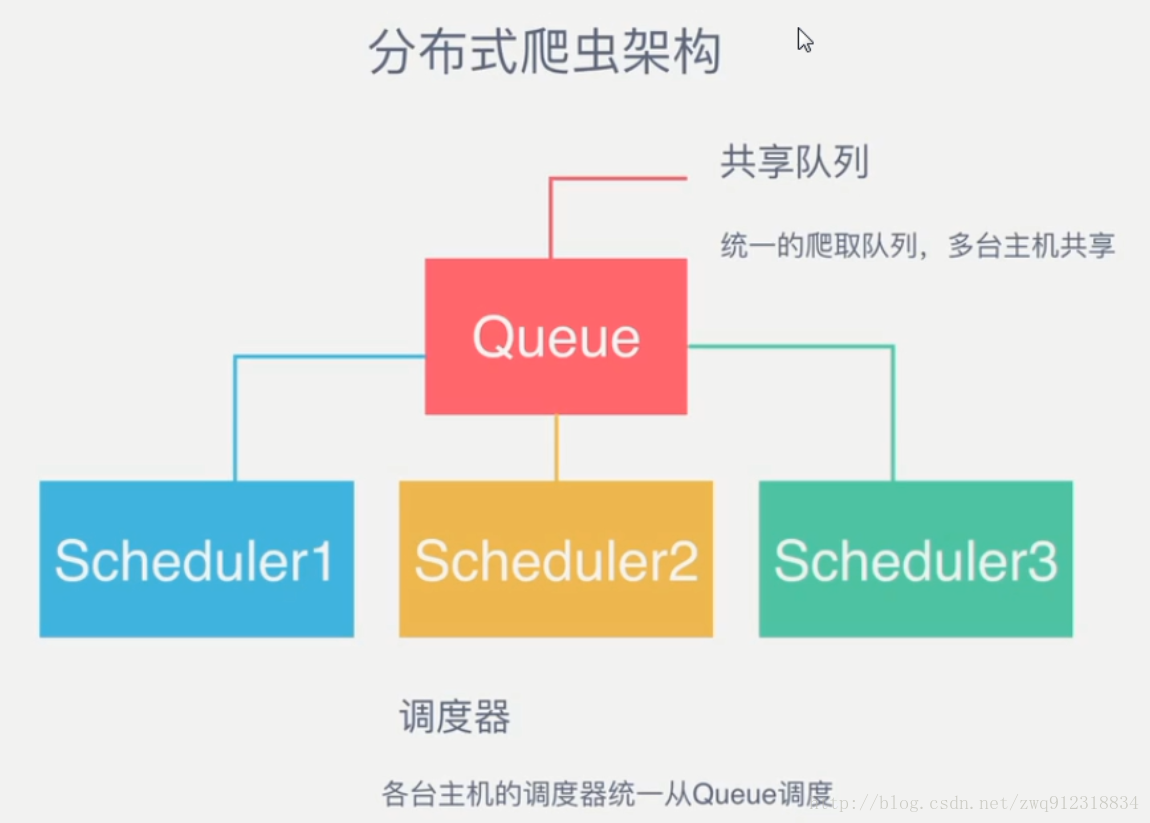

根據scrapy-redis分散式爬蟲的原理,多臺爬蟲主機共享一個爬取佇列。當爬取佇列中存在request時,爬蟲就會取出request進行爬取,如果爬取佇列中不存在request時,爬蟲就會處於等待狀態,行如下:

E:\Miniconda\python.exe E:/PyCharmCode/redisClawerSlaver/redisClawerSlaver/spiders/main.py 2017-12-12 15:54:18 [scrapy.utils.log] INFO: Scrapy 1.4.0 started (bot: scrapybot) 2017-12-12 15:54:18 [scrapy.utils.log] INFO: Overridden settings: {'SPIDER_LOADER_WARN_ONLY': True} 2017-12-12 15:54:18 [scrapy.middleware] INFO: Enabled extensions: ['scrapy.extensions.corestats.CoreStats', 'scrapy.extensions.telnet.TelnetConsole', 'scrapy.extensions.logstats.LogStats'] 2017-12-12 15:54:18 [myspider_redis] INFO: Reading start URLs from redis key 'myspider:start_urls' (batch size: 110, encoding: utf-8 2017-12-12 15:54:18 [scrapy.middleware] INFO: Enabled downloader middlewares: ['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware', 'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware', 'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware', 'redisClawerSlaver.middlewares.ProxiesMiddleware', 'redisClawerSlaver.middlewares.HeadersMiddleware', 'scrapy.downloadermiddlewares.retry.RetryMiddleware', 'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware', 'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware', 'scrapy.downloadermiddlewares.redirect.RedirectMiddleware', 'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware', 'scrapy.downloadermiddlewares.stats.DownloaderStats'] 2017-12-12 15:54:18 [scrapy.middleware] INFO: Enabled spider middlewares: ['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware', 'scrapy.spidermiddlewares.offsite.OffsiteMiddleware', 'scrapy.spidermiddlewares.referer.RefererMiddleware', 'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware', 'scrapy.spidermiddlewares.depth.DepthMiddleware'] 2017-12-12 15:54:18 [scrapy.middleware] INFO: Enabled item pipelines: ['redisClawerSlaver.pipelines.ExamplePipeline', 'scrapy_redis.pipelines.RedisPipeline'] 2017-12-12 15:54:18 [scrapy.core.engine] INFO: Spider opened 2017-12-12 15:54:18 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min) 2017-12-12 15:55:18 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min) 2017-12-12 15:56:18 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

- 可是,如果所有的request都已經爬取完畢了呢?這件事爬蟲程式是不知道的,它無法區分結束和空窗期狀態的不同,所以會一直處於上面的那種等待狀態,也就是我們說的空跑。

- 那有沒有辦法讓爬蟲區分這種情況,自動結束呢?

2. 環境

- 系統:win7

- scrapy-redis

- redis 3.0.5

- python 3.6.1

3. 解決方案

- 從背景介紹來看,基於scrapy-redis分散式爬蟲的原理,爬蟲結束是一個很模糊的概念,在爬蟲爬取過程中,爬取佇列是一個不斷動態變化的過程,隨著request的爬取,又會有新的request進入爬取佇列。進進出出。爬取速度高於填充速度,就會有佇列空窗期(爬取佇列中,某一段時間會出現沒有request的情況),爬取速度低於填充速度,就不會出現空窗期。所以對於爬蟲結束這件事來說,只能模糊定義,沒有一個精確的標準。

- 所以,下面這兩種方案都是一種大概的思路。

3.1. 利用scrapy的關閉spider擴充套件功能

# 關閉spider擴充套件 class scrapy.contrib.closespider.CloseSpider 當某些狀況發生,spider會自動關閉。每種情況使用指定的關閉原因。 關閉spider的情況可以通過下面的設定項配置: CLOSESPIDER_TIMEOUT CLOSESPIDER_ITEMCOUNT CLOSESPIDER_PAGECOUNT CLOSESPIDER_ERRORCOUNT

- CLOSESPIDER_TIMEOUT

CLOSESPIDER_TIMEOUT

預設值: 0

一個整數值,單位為秒。如果一個spider在指定的秒數後仍在執行, 它將以 closespider_timeout 的原因被自動關閉。 如果值設定為0(或者沒有設定),spiders不會因為超時而關閉。- CLOSESPIDER_ITEMCOUNT

CLOSESPIDER_ITEMCOUNT

預設值: 0

一個整數值,指定條目的個數。如果spider爬取條目數超過了指定的數, 並且這些條目通過item pipeline傳遞,spider將會以 closespider_itemcount 的原因被自動關閉。- CLOSESPIDER_PAGECOUNT

CLOSESPIDER_PAGECOUNT

0.11 新版功能.

預設值: 0

一個整數值,指定最大的抓取響應(reponses)數。 如果spider抓取數超過指定的值,則會以 closespider_pagecount 的原因自動關閉。 如果設定為0(或者未設定),spiders不會因為抓取的響應數而關閉。- CLOSESPIDER_ERRORCOUNT

CLOSESPIDER_ERRORCOUNT

0.11 新版功能.

預設值: 0

一個整數值,指定spider可以接受的最大錯誤數。 如果spider生成多於該數目的錯誤,它將以 closespider_errorcount 的原因關閉。 如果設定為0(或者未設定),spiders不會因為發生錯誤過多而關閉。- 示例:開啟 settings.py,新增一個配置項,如下

# 爬蟲執行超過23.5小時,如果爬蟲還沒有結束,則自動關閉

CLOSESPIDER_TIMEOUT = 84600- 特別注意:如果爬蟲在規定時限沒有把request全部爬取完畢,此時強行停止的話,爬取佇列中就還會存有部分request請求。那麼爬蟲下次開始爬取時,一定要記得在master端對爬取佇列進行清空操作。

3.2. 修改scrapy-redis原始碼

# ----------- 修改scrapy-redis原始碼時,特別需要注意的是:---------

# 第一,要留有原始程式碼的備份。

# 第二,當專案移植到其他機器上時,需要將scrapy-redis原始碼一起移植過去。一般程式碼位置在\Lib\site-packages\scrapy_redis\下- 想象一下,爬蟲已經結束的特徵是什麼?那就是爬取佇列已空,從爬取佇列中無法取到request資訊。那著手點應該就在從爬取佇列中獲取request和排程這個部分。檢視scrapy-redis原始碼,我們發現了兩個著手點:

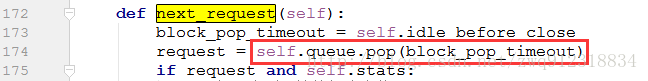

3.2.1. 細節

# .\Lib\site-packages\scrapy_redis\schedluer.py

def next_request(self):

block_pop_timeout = self.idle_before_close

# 下面是從爬取佇列中彈出request

# 這個block_pop_timeout 我尚未研究清除其作用。不過肯定不是超時時間......

request = self.queue.pop(block_pop_timeout)

if request and self.stats:

self.stats.inc_value('scheduler/dequeued/redis', spider=self.spider)

return request- 9

# .\Lib\site-packages\scrapy_redis\spiders.py

def next_requests(self):

"""Returns a request to be scheduled or none."""

use_set = self.settings.getbool('REDIS_START_URLS_AS_SET', defaults.START_URLS_AS_SET)

fetch_one = self.server.spop if use_set else self.server.lpop

# XXX: Do we need to use a timeout here?

found = 0

# TODO: Use redis pipeline execution.

while found < self.redis_batch_size:

data = fetch_one(self.redis_key)

if not data:

# 代表爬取佇列為空。但是可能是永久為空,也可能是暫時為空

# Queue empty.

break

req = self.make_request_from_data(data)

if req:

yield req

found += 1

else:

self.logger.debug("Request not made from data: %r", data)

if found:

self.logger.debug("Read %s requests from '%s'", found, self.redis_key)- 參考註釋,從上述原始碼來看,就只有這兩處可以做手腳。但是爬蟲在爬取過程中,佇列隨時都可能出現暫時的空窗期。想判斷爬取佇列為空,一般是設定一個時限,如果在一個時段內,佇列一直持續為空,那我們可以基本認定這個爬蟲已經結束了。所以有了如下的改動:

# .\Lib\site-packages\scrapy_redis\schedluer.py

# 原始程式碼

def next_request(self):

block_pop_timeout = self.idle_before_close

request = self.queue.pop(block_pop_timeout)

if request and self.stats:

self.stats.inc_value('scheduler/dequeued/redis', spider=self.spider)

return request

# 修改後的程式碼

def __init__(self, server,

persist=False,

flush_on_start=False,

queue_key=defaults.SCHEDULER_QUEUE_KEY,

queue_cls=defaults.SCHEDULER_QUEUE_CLASS,

dupefilter_key=defaults.SCHEDULER_DUPEFILTER_KEY,

dupefilter_cls=defaults.SCHEDULER_DUPEFILTER_CLASS,

idle_before_close=0,

serializer=None):

# ......

# 增加一個計數項

self.lostGetRequest = 0

def next_request(self):

block_pop_timeout = self.idle_before_close

request = self.queue.pop(block_pop_timeout)

if request and self.stats:

# 如果拿到了就恢復這個值

self.lostGetRequest = 0

self.stats.inc_value('scheduler/dequeued/redis', spider=self.spider)

if request is None:

self.lostGetRequest += 1

print(f"request is None, lostGetRequest = {self.lostGetRequest}, time = {datetime.datetime.now()}")

# 100個大概8分鐘的樣子

if self.lostGetRequest > 200:

print(f"request is None, close spider.")

# 結束爬蟲

self.spider.crawler.engine.close_spider(self.spider, 'queue is empty')

return request- 相關log資訊

2017-12-14 16:18:06 [scrapy.middleware] INFO: Enabled item pipelines:

['redisClawerSlaver.pipelines.beforeRedisPipeline',

'redisClawerSlaver.pipelines.amazonRedisPipeline',

'scrapy_redis.pipelines.RedisPipeline']

2017-12-14 16:18:06 [scrapy.core.engine] INFO: Spider opened

2017-12-14 16:18:06 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

request is None, lostGetRequest = 1, time = 2017-12-14 16:18:06.370400

request is None, lostGetRequest = 2, time = 2017-12-14 16:18:11.363400

request is None, lostGetRequest = 3, time = 2017-12-14 16:18:16.363400

request is None, lostGetRequest = 4, time = 2017-12-14 16:18:21.362400

request is None, lostGetRequest = 5, time = 2017-12-14 16:18:26.363400

request is None, lostGetRequest = 6, time = 2017-12-14 16:18:31.362400

request is None, lostGetRequest = 7, time = 2017-12-14 16:18:36.363400

request is None, lostGetRequest = 8, time = 2017-12-14 16:18:41.362400

request is None, lostGetRequest = 9, time = 2017-12-14 16:18:46.363400

request is None, lostGetRequest = 10, time = 2017-12-14 16:18:51.362400

2017-12-14 16:18:56 [scrapy.core.engine] INFO: Closing spider (queue is empty)

request is None, lostGetRequest = 11, time = 2017-12-14 16:18:56.363400

request is None, close spider.

登入結果:loginRes = (235, b'Authentication successful')

登入成功,code = 235

mail has been send successfully. message:Content-Type: text/plain; charset="utf-8"

MIME-Version: 1.0

Content-Transfer-Encoding: base64

From: [email protected]

To: [email protected]

Subject: =?utf-8?b?54is6Jmr57uT5p2f54q25oCB5rGH5oql77yabmFtZSA9IHJlZGlzQ2xhd2VyU2xhdmVyLCByZWFzb24gPSBxdWV1ZSBpcyBlbXB0eSwgZmluaXNoZWRUaW1lID0gMjAxNy0xMi0xNCAxNjoxODo1Ni4zNjQ0MDA=?=

57uG6IqC77yacmVhc29uID0gcXVldWUgaXMgZW1wdHksIHN1Y2Nlc3NzISBhdDoyMDE3LTEyLTE0

IDE2OjE4OjU2LjM2NDQwMA==

2017-12-14 16:18:56 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'finish_reason': 'queue is empty',

'finish_time': datetime.datetime(2017, 12, 14, 8, 18, 56, 364400),

'log_count/INFO': 8,

'start_time': datetime.datetime(2017, 12, 14, 8, 18, 6, 362400)}

2017-12-14 16:18:56 [scrapy.core.engine] INFO: Spider closed (queue is empty)

Unhandled Error

Traceback (most recent call last):

File "E:\Miniconda\lib\site-packages\scrapy\commands\runspider.py", line 89, in run

self.crawler_process.start()

File "E:\Miniconda\lib\site-packages\scrapy\crawler.py", line 285, in start

reactor.run(installSignalHandlers=False) # blocking call

File "E:\Miniconda\lib\site-packages\twisted\internet\base.py", line 1243, in run

self.mainLoop()

File "E:\Miniconda\lib\site-packages\twisted\internet\base.py", line 1252, in mainLoop

self.runUntilCurrent()

--- <exception caught here> ---

File "E:\Miniconda\lib\site-packages\twisted\internet\base.py", line 878, in runUntilCurrent

call.func(*call.args, **call.kw)

File "E:\Miniconda\lib\site-packages\scrapy\utils\reactor.py", line 41, in __call__

return self._func(*self._a, **self._kw)

File "E:\Miniconda\lib\site-packages\scrapy\core\engine.py", line 137, in _next_request

if self.spider_is_idle(spider) and slot.close_if_idle:

File "E:\Miniconda\lib\site-packages\scrapy\core\engine.py", line 189, in spider_is_idle

if self.slot.start_requests is not None:

builtins.AttributeError: 'NoneType' object has no attribute 'start_requests'

2017-12-14 16:18:56 [twisted] CRITICAL: Unhandled Error

Traceback (most recent call last):

File "E:\Miniconda\lib\site-packages\scrapy\commands\runspider.py", line 89, in run

self.crawler_process.start()

File "E:\Miniconda\lib\site-packages\scrapy\crawler.py", line 285, in start

reactor.run(installSignalHandlers=False) # blocking call

File "E:\Miniconda\lib\site-packages\twisted\internet\base.py", line 1243, in run

self.mainLoop()

File "E:\Miniconda\lib\site-packages\twisted\internet\base.py", line 1252, in mainLoop

self.runUntilCurrent()

--- <exception caught here> ---

File "E:\Miniconda\lib\site-packages\twisted\internet\base.py", line 878, in runUntilCurrent

call.func(*call.args, **call.kw)

File "E:\Miniconda\lib\site-packages\scrapy\utils\reactor.py", line 41, in __call__

return self._func(*self._a, **self._kw)

File "E:\Miniconda\lib\site-packages\scrapy\core\engine.py", line 137, in _next_request

if self.spider_is_idle(spider) and slot.close_if_idle:

File "E:\Miniconda\lib\site-packages\scrapy\core\engine.py", line 189, in spider_is_idle

if self.slot.start_requests is not None:

builtins.AttributeError: 'NoneType' object has no attribute 'start_requests'

Process finished with exit code 0

- 有一個問題,如上所述,當通過engine.close_spider(spider, ‘reason’)來關閉spider時,有時會出現幾個錯誤之後才能關閉。可能是因為scrapy會開啟多個執行緒同時抓取,然後其中一個執行緒關閉了spider,其他執行緒就找不到spider才會報錯。

3.2.2. 注意事項

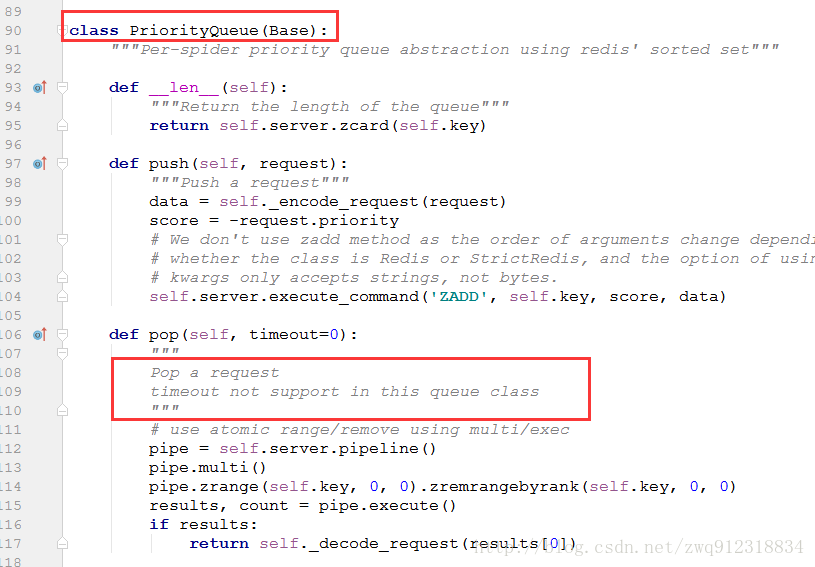

整個排程過程如下:

-

scheduler.py

-

queue.py

- 所以,PriorityQueue和另外兩種佇列FifoQueue,LifoQueue有所不同,特別需要注意。如果會使用到timeout這個引數,那麼在setting中就只能指定爬取佇列為FifoQueue或LifoQueue。

# 指定排序爬取地址時使用的佇列,

# 預設的 按優先順序排序(Scrapy預設),由sorted set實現的一種非FIFO、LIFO方式。

# 'SCHEDULER_QUEUE_CLASS': 'scrapy_redis.queue.SpiderPriorityQueue',

# 可選的 按先進先出排序(FIFO)

'SCHEDULER_QUEUE_CLASS': 'scrapy_redis.queue.SpiderQueue',

# 可選的 按後進先出排序(LIFO)

# SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.SpiderStack'