Assignment 11: Chinese Spam Email Classification

阿新 • Published: 2018-12-06

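```python
import matplotlib.pyplot as plt
import pandas as pd
import string
import codecs
import os
import jieba
from sklearn.feature_extraction.text import CountVectorizer
from wordcloud import WordCloud
from sklearn import naive_bayes as bayes
from sklearn.model_selection import train_test_split
```

`str.join()`: concatenates the elements of a sequence of strings (a string, tuple or list) into a new string, using the string it is called on as the separator.

`os.path.join()`: combines several path components into one path and returns it.

If a component is an absolute path (for example one that starts with "/"), every component before it is discarded and joining continues from that component; when several components are absolute, the last one wins.

Components that start with "./" get no special treatment: they are appended like any other name, so `os.path.join("a", "./b")` gives `a/./b` on Linux.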
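A quick check of the `join` behavior described above. This sketch calls `posixpath.join` and `ntpath.join` directly (the modules `os.path` resolves to on Linux/macOS and on Windows, respectively), so the results do not depend on the machine it runs on; the sample words are arbitrary.

```python
import ntpath
import posixpath

# str.join: the string it is called on becomes the separator
sentence = " ".join(["免費", "發票", "優惠"])

# posixpath.join is what os.path.join does on Linux and macOS
plain = posixpath.join("a", "b", "c")
# An absolute component discards every component before it
reset = posixpath.join("a", "/b", "c")
# With several absolute components, the last one wins
last_abs = posixpath.join("a", "/b", "/c", "d")
# "./" gets no special treatment; it is appended like any other name
dot = posixpath.join("a", "./b")

# ntpath.join is what os.path.join does on Windows
excel_path = ntpath.join("D:\\chrome download\\test-master", "chinesespam.xlsx")

for value in (sentence, plain, reset, last_abs, dot, excel_path):
    print(value)
```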

```python
# Folder holding chinesespam.xlsx and stopwords.txt
file_path = "D:\\chrome download\\test-master"
```

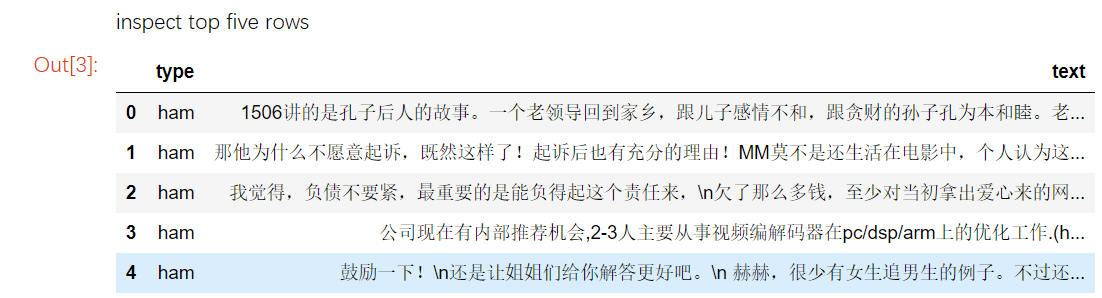

```python
# Read the first sheet of the spreadsheet
emailframe = pd.read_excel(os.path.join(file_path, "chinesespam.xlsx"), sheet_name=0)
print("inspect top five rows")
emailframe.head(5)  # inspect the first 5 rows
```

Output:

The data contains 50 spam emails and 100 non-spam emails.
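The class balance can be confirmed with `value_counts()` on the label column. The original spreadsheet is not available here, so this sketch builds a hypothetical stand-in frame using the same `type` column name that the training step uses later; the label values `"spam"` and `"ham"` are made up.

```python
import pandas as pd

# Hypothetical stand-in for emailframe: 50 spam and 100 non-spam rows.
# The real label values in chinesespam.xlsx may differ.
emailframe = pd.DataFrame({"type": ["spam"] * 50 + ["ham"] * 100})

# Count the rows per label
counts = emailframe["type"].value_counts()
print(counts)
```

With a 1:2 ratio, always predicting "non-spam" would already be right for two thirds of the full dataset, which is a useful baseline when reading the final score.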

Load the stop words:

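```python
# One stop word per line; splitlines() copes with both \r\n and \n endings
with codecs.open(os.path.join(file_path, 'stopwords.txt'), 'r', 'UTF-8') as f:
    stopwords = set(f.read().splitlines())
```

Next, the emails are segmented with jieba, and stop words, empty strings, punctuation and similar tokens are filtered out.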
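The filtering rule can be tried on its own, without jieba or the real files. In this sketch the stop-word file contents and the already-segmented tokens are hypothetical, standing in for `stopwords.txt` and the output of `jieba.cut`. It also shows why `splitlines()` is safer than `split('\r\n')` when the file might use plain `\n` line endings.

```python
# Hypothetical stop-word file contents with Unix (\n) line endings
raw_stopwords = "的\n了\n是\n"

# split('\r\n') finds no separator here, so everything stays in one string
naive = raw_stopwords.split("\r\n")
# splitlines() handles \n, \r\n and \r alike
stopwords = set(raw_stopwords.splitlines())

# Hypothetical tokens, as jieba.cut might yield them
tokens = ["我們", "的", "，", "免費", "2018", " ", "發票", "了", "！"]

# isalpha() is True for Chinese characters but False for punctuation,
# digits, whitespace and the empty string
words = [seg for seg in tokens if seg.isalpha() and seg not in stopwords]
sentence = " ".join(words)
print(naive)
print(sentence)
```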

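```python
processed_texts = []
for text in emailframe["text"]:
    words = []
    seg_list = jieba.cut(text)  # generator of segmented words
    for seg in seg_list:
        # isalpha() is False for punctuation, digits, whitespace and "",
        # so only real words that are not stop words are kept
        if seg.isalpha() and seg not in stopwords:
            words.append(seg)
    # Join with spaces so CountVectorizer can split the words later
    sentence = " ".join(words)
    processed_texts.append(sentence)
emailframe["text"] = processed_texts
```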

Output:

Inspect the filtered result:

```python
emailframe.head()
```

Output:

Vectorization

```python
def transformTextToSparseMatrix(texts):
    """Turn space-separated texts into a word-count DataFrame.

    Returns a dense DataFrame with one row per text and one column per word.
    """
    # binary=False keeps raw counts instead of 0/1 presence flags.
    # The default token_pattern only keeps tokens of 2+ characters,
    # so single-character words are dropped.
    vectorizer = CountVectorizer(binary=False)
    vectorizer.fit(texts)

    vocabulary = vectorizer.vocabulary_  # word -> column index
    print("There are ", len(vocabulary), " word features")

    vector = vectorizer.transform(texts)  # sparse matrix: texts x words
    result = pd.DataFrame(vector.toarray())

    # Label each column with its word by sorting the vocabulary by column index
    colnames = sorted(vocabulary, key=vocabulary.get)
    result.columns = colnames
    return result
```

Build the matrix:

```python
textmatrix = transformTextToSparseMatrix(emailframe["text"])
```

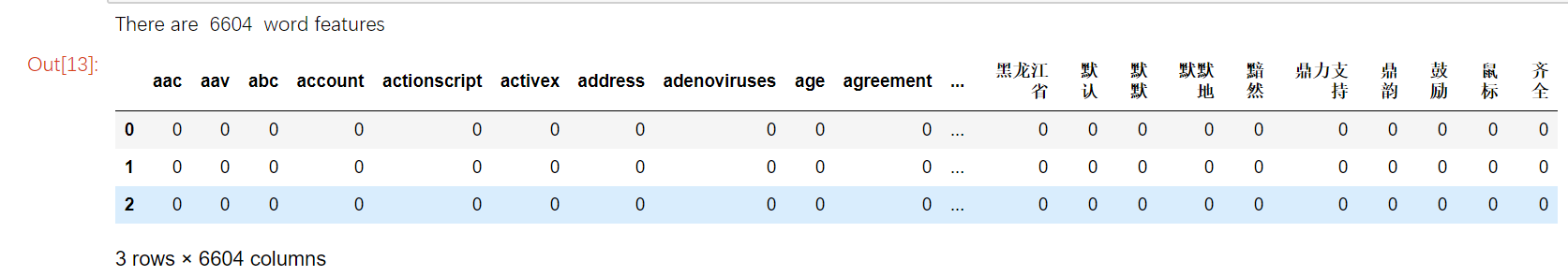

```python
textmatrix.head(3)
```

Output:

The output shows that the dataset contains 5982 distinct words, i.e. 5982 features. That is too many dimensions, so the next step filters them.
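The column-naming step in `transformTextToSparseMatrix` relies on `vocabulary_` mapping each word to its column index. This sketch runs the same idea on a tiny hypothetical corpus of space-separated words. It also shows a side effect of `CountVectorizer`'s default `token_pattern`, which only keeps tokens of two or more characters, so a single-character word such as 錢 never becomes a feature.

```python
import pandas as pd
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical pre-segmented emails (words separated by spaces)
texts = ["免費 發票 免費", "會議 通知", "免費 會議 錢"]

vectorizer = CountVectorizer()
counts = vectorizer.fit_transform(texts)  # sparse matrix: emails x words

# vocabulary_ maps word -> column index; sorting by index gives column names
vocab = vectorizer.vocabulary_
colnames = sorted(vocab, key=vocab.get)
matrix = pd.DataFrame(counts.toarray(), columns=colnames)
print(matrix)

# The default token_pattern needs 2+ word characters, so "錢" is dropped
print("錢" in vocab)
```

If single-character words matter for spam detection, passing `token_pattern=r"(?u)\b\w+\b"` to `CountVectorizer` keeps them; whether that improves this classifier is untested.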

Keep only frequently occurring words

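```python
# Total number of occurrences of each word across all emails
word_totals = textmatrix.sum(axis=0)
# Keep only the words that occur more than 5 times in total
extractedfeatures = word_totals[word_totals > 5].index
textmatrix = textmatrix[extractedfeatures]
print("There are ", textmatrix.shape[1], "word features")
```

Keeping only the words whose total count is greater than 5 leaves 789 word features. The data is then split into a test set and a training set in a 0.2:0.8 ratio.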
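Both steps in the paragraph above can be sketched on hypothetical data: the `> 5` cutoff applied to a toy count matrix, and the sizes of a 0.2 test split for 150 emails. The sketch also passes `stratify`, which keeps the 1:2 spam/non-spam ratio in both parts, and a fixed `random_state`; the original code uses neither, so treat them as optional variations. The counts and labels are made up.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical word-count matrix: rows are emails, columns are words
textmatrix = pd.DataFrame({
    "免費": [3, 2, 1],
    "會議": [1, 0, 0],
    "發票": [4, 3, 0],
})

# Total occurrences of each word across all emails
word_totals = textmatrix.sum(axis=0)
# Keep only words that occur more than 5 times in total
kept = textmatrix[word_totals[word_totals > 5].index]
print(list(kept.columns))

# Hypothetical labels with the same 50/100 balance as the real data
labels = pd.Series(["spam"] * 50 + ["ham"] * 100)
features = pd.DataFrame({"dummy": range(150)})

train, test, trainlabel, testlabel = train_test_split(
    features, labels, test_size=0.2, stratify=labels, random_state=0
)
print(len(train), len(test))      # 80% / 20% of 150 emails
print(testlabel.value_counts())   # class balance in the test set
```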

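```python
# Hold out 20% of the emails for testing; without random_state the split
# (and therefore the final score) differs from run to run
train, test, trainlabel, testlabel = train_test_split(textmatrix, emailframe["type"], test_size=0.2)
```

Train a naive Bayes model: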

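```python
# BernoulliNB only looks at presence/absence: counts above `binarize` become 1,
# the rest 0. binarize=0.0 marks a word as present if it occurs at all;
# binarize=True would act as a threshold of 1 and ignore words seen only once.
clf = bayes.BernoulliNB(alpha=1.0, binarize=0.0)
model = clf.fit(train, trainlabel)
```

Score the model on the test set: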

```python
model.score(test, testlabel)  # mean accuracy on the test set
```
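Why the `binarize` value matters, and what `score` returns, can be seen on a tiny hypothetical dataset. `BernoulliNB` maps every feature value greater than `binarize` to 1 and everything else to 0, so a threshold of 1 (which is what `binarize=True` amounts to, since `True == 1`) treats a word that occurs exactly once as absent. `score` returns the mean accuracy on the given data, the same number `accuracy_score` computes. The counts and labels below are made up.

```python
from sklearn.metrics import accuracy_score
from sklearn.naive_bayes import BernoulliNB

# Hypothetical word counts: column 0 is a "spammy" word, column 1 a normal one
X_train = [[1, 0], [1, 0], [0, 1], [0, 1]]
y_train = ["spam", "spam", "ham", "ham"]

# Threshold 0.0: any count above 0 means "word present"
good = BernoulliNB(alpha=1.0, binarize=0.0).fit(X_train, y_train)
# Threshold 1.0 (what binarize=True amounts to): counts of 1 become 0,
# so every training row looks empty and both classes end up identical
bad = BernoulliNB(alpha=1.0, binarize=1.0).fit(X_train, y_train)

print(good.predict([[1, 0]]))  # the spammy word is recognized
print(bad.predict([[1, 0]]))   # tie, so the first class ("ham") is returned

# score() is mean accuracy, i.e. the fraction of correct predictions
X_test = [[1, 0], [0, 1], [0, 1]]
y_test = ["spam", "ham", "spam"]
acc = good.score(X_test, y_test)
print(acc, accuracy_score(y_test, good.predict(X_test)))
```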