Spark - Errors when running on a CDH cluster


Run on a YARN cluster

    spark-submit \
      --class com.hnb.data.UserKeyOpLog \
      --master yarn \
      --deploy-mode cluster \
      --executor-memory 128M \
      --num-executors 2 \
      lib/original-dataceter-spark.jar \
      args(1) \
      args(2) \
      args(3)

Error:

    Exception in thread "main" java.lang.IllegalArgumentException: Required executor memory (1024+384 MB) is above the max threshold (1024 MB) of this cluster! Please check the values of 'yarn.scheduler.maximum-allocation-mb' and/or 'yarn.nodemanager.resource.memory-mb'.

    [root@node00 spark]# spark-submit --class com.hnb.data.UserKeyOpLog --master yarn --deploy-mode cluster --executor-memory 128M --num-executors 2 lib/original-dataceter-spark.jar /kafka-source/user_key_op_topic/201811/07 /kafka-source/user_key_op_topic/out1 /kafka-source/user_key_op_topic/out2
    18/11/09 12:44:04 INFO client.RMProxy: Connecting to ResourceManager at node00/172.16.10.190:8032
    18/11/09 12:44:04 INFO yarn.Client: Requesting a new application from cluster with 3 NodeManagers
    18/11/09 12:44:04 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (1040 MB per container)
    Exception in thread "main" java.lang.IllegalArgumentException: Required AM memory (1024+384 MB) is above the max threshold (1040 MB) of this cluster! Please increase the value of 'yarn.scheduler.maximum-allocation-mb'.
        at org.apache.spark.deploy.yarn.Client.verifyClusterResources(Client.scala:299)
        at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:139)
        at org.apache.spark.deploy.yarn.Client.run(Client.scala:1023)
        at org.apache.spark.deploy.yarn.Client$.main(Client.scala:1083)
        at org.apache.spark.deploy.yarn.Client.main(Client.scala)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:730)
        at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:181)
        at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:206)
        at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:121)
        at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
    [root@node00 spark]#

The check that fails is the one for the ApplicationMaster (AM) container. In cluster mode the driver runs inside the AM, so YARN is asked for the default driver memory (1024 MB) plus 384 MB of overhead, 1408 MB in total, which is more than the 1040 MB per-container maximum. The --executor-memory 128M setting does not affect this request.

Solution:

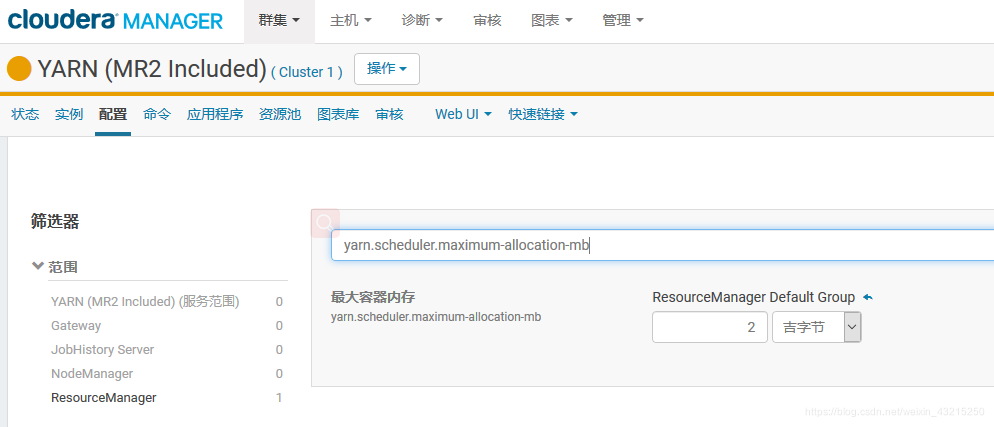

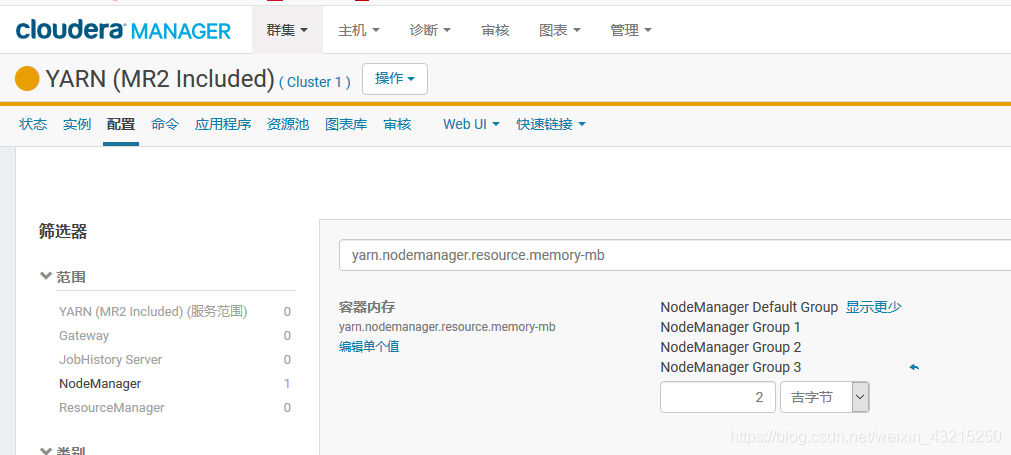

Change the following settings:

- yarn.scheduler.maximum-allocation-mb
- yarn.nodemanager.resource.memory-mb

In the YARN configuration, set both of these to 2 GB (2048 MB), restart YARN, and run the job again:

    [root@node00 spark]# spark-submit --class com.hnb.data.UserKeyOpLog --master yarn --deploy-mode cluster --executor-memory 128M --num-executors 2 lib/original-dataceter-spark.jar /kafka-source/user_key_op_topic/201811/07 /kafka-source/user_key_op_topic/out1 /kafka-source/user_key_op_topic/out2
    18/11/09 12:59:03 INFO client.RMProxy: Connecting to ResourceManager at node00/172.16.10.190:8032
    18/11/09 12:59:03 INFO yarn.Client: Requesting a new application from cluster with 3 NodeManagers
    18/11/09 12:59:03 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (2048 MB per container)
    18/11/09 12:59:03 INFO yarn.Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead
    18/11/09 12:59:03 INFO yarn.Client: Setting up container launch context for our AM
    18/11/09 12:59:03 INFO yarn.Client: Setting up the launch environment for our AM container
    18/11/09 12:59:03 INFO yarn.Client: Preparing resources for our AM container
    18/11/09 12:59:04 INFO yarn.Client: Uploading resource file:/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/spark/lib/original-dataceter-spark.jar -> hdfs://nameservice1/user/root/.sparkStaging/application_1541739040266_0001/original-dataceter-spark.jar
    18/11/09 12:59:04 INFO yarn.Client: Uploading resource file:/tmp/spark-403b6665-d14c-4919-80cf-e6e2ddaf0835/__spark_conf__512169842143839514.zip -> hdfs://nameservice1/user/root/.sparkStaging/application_1541739040266_0001/__spark_conf__512169842143839514.zip
    18/11/09 12:59:04 INFO spark.SecurityManager: Changing view acls to: root
    18/11/09 12:59:04 INFO spark.SecurityManager: Changing modify acls to: root
    18/11/09 12:59:04 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); users with modify permissions: Set(root)
    18/11/09 12:59:04 INFO yarn.Client: Submitting application 1 to ResourceManager
    18/11/09 12:59:05 INFO impl.YarnClientImpl: Submitted application application_1541739040266_0001
    18/11/09 12:59:06 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:06 INFO yarn.Client:
         client token: N/A
         diagnostics: N/A
         ApplicationMaster host: N/A
         ApplicationMaster RPC port: -1
         queue: root.users.root
         start time: 1541739544990
         final status: UNDEFINED
         tracking URL: http://node00:8088/proxy/application_1541739040266_0001/
         user: root
    18/11/09 12:59:07 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:08 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:09 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:10 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:11 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:12 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:13 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:14 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:15 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:16 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:17 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:18 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:19 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:20 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:21 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:22 INFO yarn.Client: Application report for application_1541739040266_0001 (state: ACCEPTED)
    18/11/09 12:59:23 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:23 INFO yarn.Client:
         client token: N/A
         diagnostics: N/A
         ApplicationMaster host: 172.16.10.192
         ApplicationMaster RPC port: 0
         queue: root.users.root
         start time: 1541739544990
         final status: UNDEFINED
         tracking URL: http://node00:8088/proxy/application_1541739040266_0001/
         user: root
    18/11/09 12:59:24 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:25 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:26 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:27 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:28 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:29 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:30 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:31 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:32 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:33 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:34 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:35 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:36 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:37 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:38 INFO yarn.Client: Application report for application_1541739040266_0001 (state: RUNNING)
    18/11/09 12:59:39 INFO yarn.Client: Application report for application_1541739040266_0001 (state: FINISHED)
    18/11/09 12:59:39 INFO yarn.Client:
         client token: N/A
         diagnostics: N/A
         ApplicationMaster host: 172.16.10.192
         ApplicationMaster RPC port: 0
         queue: root.users.root
         start time: 1541739544990
         final status: SUCCEEDED
         tracking URL: http://node00:8088/proxy/application_1541739040266_0001/
         user: root
    18/11/09 12:59:39 INFO util.ShutdownHookManager: Shutdown hook called
    18/11/09 12:59:39 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-403b6665-d14c-4919-80cf-e6e2ddaf0835
    [root@node00 spark]#
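
The container sizes in both logs can be reproduced with a small calculation. The sketch below is a POSIX shell approximation, assuming Spark 1.6's default overhead rule of max(384 MB, 10% of the requested memory) for both the driver/AM and the executors. The helpers required_mb and fits_in_yarn are hypothetical, not part of Spark or YARN, and they model memory only, ignoring vcores and any rounding YARN applies to container sizes.

```shell
#!/bin/sh
# Sketch of the YARN container-size check that fails in the first log.
# ASSUMPTION: Spark 1.6 default overhead rule,
#   overhead = max(384 MB, 10% of the requested memory).
# required_mb and fits_in_yarn are hypothetical helpers, not Spark/YARN tools.

MIN_OVERHEAD_MB=384

# Print the container size (MB) requested from YARN: memory + overhead.
required_mb() {
  local mem_mb="$1"
  local overhead=$((mem_mb / 10))   # 10% of the request, rounded down
  if [ "$overhead" -lt "$MIN_OVERHEAD_MB" ]; then
    overhead=$MIN_OVERHEAD_MB
  fi
  echo $((mem_mb + overhead))
}

# Return 0 if the container fits under the per-container maximum
# ($2, yarn.scheduler.maximum-allocation-mb) and on one NodeManager
# ($3, yarn.nodemanager.resource.memory-mb); return 1 otherwise.
fits_in_yarn() {
  local need
  need=$(required_mb "$1")
  [ "$need" -le "$2" ] && [ "$need" -le "$3" ]
}

# Cluster-mode AM = default driver memory (1024 MB).
echo "AM container: $(required_mb 1024) MB"

# Compare against the two per-container maxima seen in the logs
# (ASSUMPTION: both settings have the same value in each case).
for limit in 1040 2048; do
  if fits_in_yarn 1024 "$limit" "$limit"; then
    echo "limit ${limit} MB: fits"
  else
    echo "limit ${limit} MB: rejected"
  fi
done
```

With the default 1024 MB driver it reports a 1408 MB AM container, which does not fit under 1040 MB limits but does fit at 2048 MB, consistent with the two runs above. The same arithmetic suggests why --executor-memory 128M never mattered here: Spark would request only 128 + 384 = 512 MB per executor.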


Local submission mode

    spark-submit \
      --master local \
      --class org.apache.spark.examples.SparkPi \
      lib/spark-examples.jar

    [root@cdh01 ~]# spark-submit --master local --class org.apache.spark.examples.SparkPi /opt/cloudera/parcels/CDH-5.11.1-1.cdh5.11.1.p0.4/lib/spark/lib/spark-examples.jar 10
    18/10/29 14:39:08 INFO spark.SparkContext: Running Spark version 1.6.0
    18/10/29 14:39:09 INFO spark.SecurityManager: Changing view acls to: root
    18/10/29 14:39:09 INFO spark.SecurityManager: Changing modify acls to: root
    18/10/29 14:39:09 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); users with modify permissions: Set(root)
    18/10/29 14:39:09 INFO util.Utils: Successfully started service 'sparkDriver' on port 55692.
    18/10/29 14:39:09 INFO slf4j.Slf4jLogger: Slf4jLogger started
    18/10/29 14:39:09 INFO Remoting: Starting remoting
    18/10/29 14:39:10 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriverActorSystem@192.168.50.202:43516]
    18/10/29 14:39:10 INFO Remoting: Remoting now listens on addresses: [akka.tcp://sparkDriverActorSystem@192.168.50.202:43516]
    ...
