眼睛橫縱比來做 側眼眨眼檢測 技術

眨眼檢測

與用於計算閃爍的傳統影象處理方法不同,閃爍通常涉及以下的某些組合:

- 眼睛定位。

- 閾值找到眼睛的白色。

- 確定眼睛的“白色”區域是否消失一段時間(表示眨眼)。

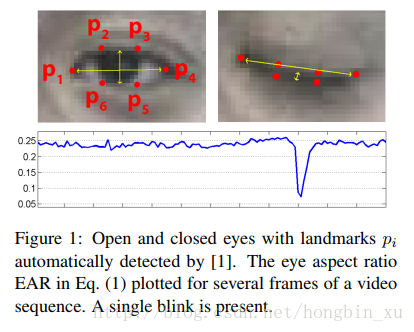

眼睛縱橫比是一種 更優雅的解決方案,其涉及 基於眼睛的面部地標之間的距離比率的非常簡單的計算。

這種用於眨眼檢測的方法快速,有效且易於實現。

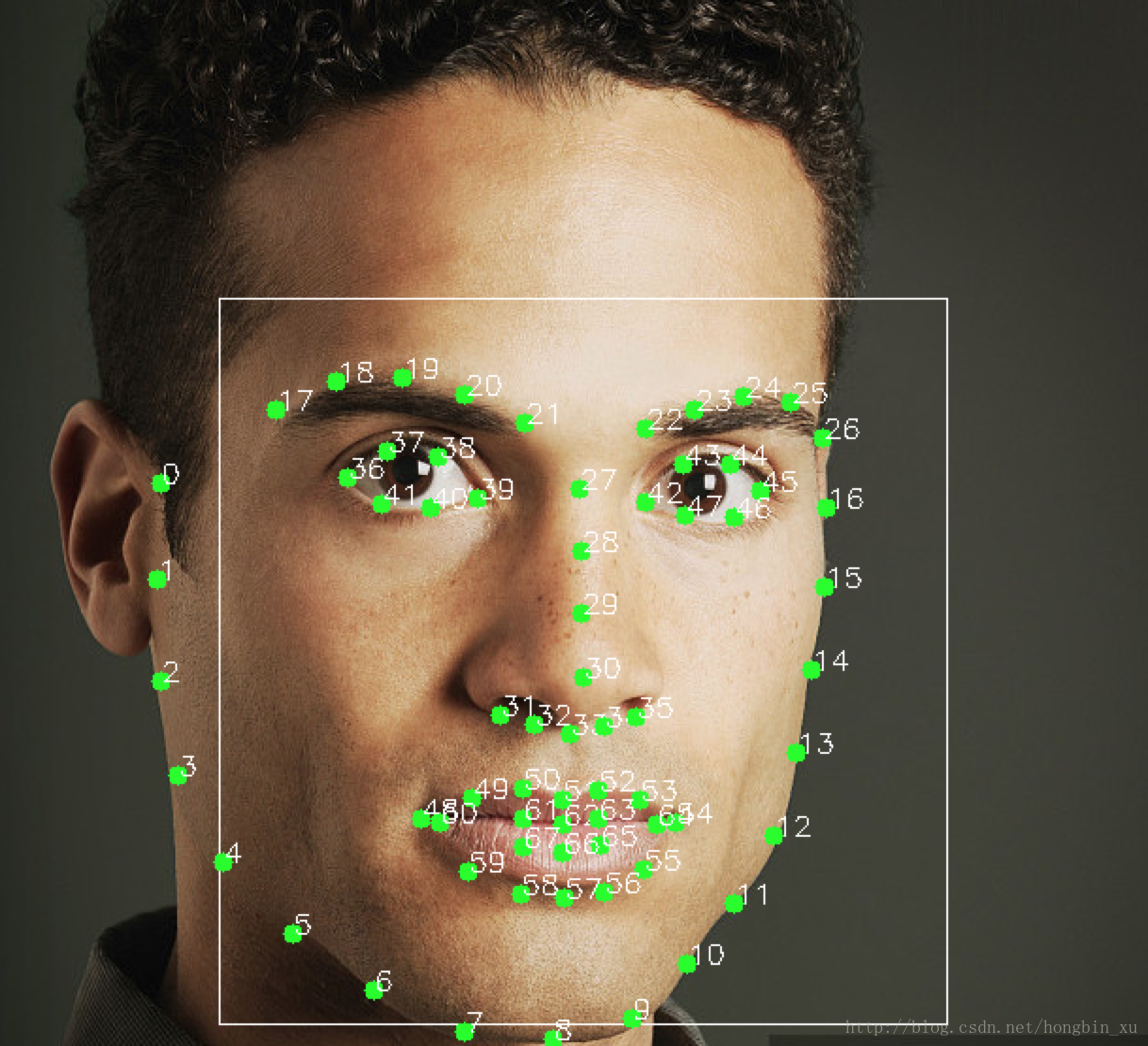

人臉全域性關鍵點:

眼部關鍵點

我們視訊中的一幀是這樣的:

先嚐試用opencv作出解決方法,再從底層演算法復現加速效能。

光用opencv檢測側眼的話,效果不好,但是可以檢測出眼角。接下來考慮檢測全圖特徵點,從特徵點中發掘有價值的點。

對灰度圖用harris角點檢測效果不好

打算也做一個閾值分割,基於分割結果再看

中間的為眼部(睜眼時)

眨眼時

由此有個簡單辦法,看看有多少個大連通區域- - 當連通區域減少時,就代表了閉眼,開始這樣魯棒性和準確性肯定不夠。

嘗試Sobel運算元進行梯度檢測,採用Y軸做梯度,因為眼部Y方向梯度明顯

結果如上,灰度>10的畫素點總數 低於30000時,為閉眼幀。此演算法對於檢測 閉眼動作 較為明顯,但是對於檢測閉眼時間不太有效,原因是,閉眼後,睫毛在面板上程式設計邊緣被提取出來,產生了一定的干擾。

因為專案打算從側面檢測人眼,所以傳統的演算法效果很不好,最終基於sobel+去噪聲技術開發出了一個演算法,可以實現 較為準確的判斷。 波谷為持續閉眼時間。

# -*- coding: utf-8 -*- """ Created on Tue Sep 4 13:16:11 2018 @author: Lenovo """ import numpy as np from matplotlib import animation import matplotlib.pyplot as plt import cv2 import time from scipy.fftpack import fft,ifft cap = cv2.VideoCapture('WIN_20180831_13_51_22_Pro.mp4') pnum= list() plt.close() #clf() # 清圖 cla() # 清座標軸 close() # 關視窗 fig=plt.figure() ax=fig.add_subplot(1,1,1) plt.grid(True) #新增網格 plt.ion() #interactive mode on i=0 formerimg = np.zeros((720,1280)) iss, frame = cap.read() while iss: i=i+1 iss,img = cap.read() if iss: img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY) img=cv2.GaussianBlur(img,(5,5),20) cv2.imshow("yuantu_gaus",img) #ax.hist(img.flatten(),256) # imghist = np.histogram(img.flatten(),256)[0] # histmax = np.argmax(imghist).astype(np.uint8) # img[img>histmax-50]=255 # # print(img) # cv2.imshow("huidu",img) # sobelX = cv2.Sobel(img,cv2.CV_64F,1,0)#x方向的梯度 # sobelX = np.uint8(np.absolute(sobelX))#x方向梯度的絕對值 sobelY = cv2.Sobel(img,cv2.CV_64F,0,1)#y方向的梯度 sobelY = np.uint8(np.absolute(sobelY))#y方向梯度的絕對值 sobelY[sobelY<=20]=0 cv2.imshow("sobelY",sobelY) # # #找到輪廓 # _,contours, hierarchy = cv2.findContours(sobelY,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE) # #繪製輪廓 # cv2.drawContours(sobelY,contours,-1,(0,0,255),3) # #繪製結果 kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(5, 5)) sobelY = cv2.morphologyEx(sobelY, cv2.MORPH_OPEN, kernel) sobelY = cv2.morphologyEx(sobelY, cv2.MORPH_CLOSE, kernel) # sobelY = cv2.medianBlur(sobelY,5) pnum.append(len(sobelY[sobelY>0])) # # print(i) #cv2.imshow("result", sobelY) # plt.plot(range(len(pnum)),pnum,c='r',marker='.') # cv2.imshow("yanjiao",sobelY) plt.pause(0.01) # if cv2.waitKey(1) & 0xFF == 'q': # break # time.sleep(0.5) cv2.destroyAllWindows() plt.plot(range(len(pnum)),pnum,c='r',marker='.') # #fig=plt.figure('22') #ax2=fig.add_subplot(1,1,1) # #epnum = np.array(pnum) #avg = epnum.mean() #s=0 #countt=0 #for i in epnum: # if i> avg: # s=s+i # countt=countt+1 #avg = s/countt #print(avg) #epnum[epnum<avg]=0 #ax2.plot(range(len(pnum)),epnum,c='r',marker='.') # ##bb = plt.hist(pnum.flatten()) #print(pnum.mean()) #a = np.histogram(pnum.flatten()) ##from scipy import stats #pnum[pnum<3754]=0 #plt.plot(range(len(pnum)),pnum,c='r',marker='.')

嘗試了一下gamma矯正影象,效果不太好

現在我們探究一種新方法,因為直接計算畫素點數量,會受到睫毛的影響,那麼我們如何利用睫毛呢?

考慮到使用,質心計算的方法,當睫毛下垂的時候,質心會同時被帶下來,通過統計質心的y軸運動軌跡來評估眼睛閉合度

初步測試效果不錯

但是雙迴圈 效率較低,看看能否優化一下

# -*- coding: utf-8 -*-

"""

Created on Tue Sep 4 13:16:11 2018

@author: Lenovo

"""

import numpy as np

from matplotlib import animation

import matplotlib.pyplot as plt

import cv2

import time

from scipy.fftpack import fft,ifft

cap = cv2.VideoCapture('WIN_20180831_13_49_39_Pro.mp4')

pnum= list()

plt.close() #clf() # 清圖 cla() # 清座標軸 close() # 關視窗

fig=plt.figure()

ax=fig.add_subplot(1,1,1)

plt.grid(True) #新增網格

plt.ion() #interactive mode on

i=0

formerimg = np.zeros((720,1280))

iss, frame = cap.read()

while iss:

i=i+1

iss,img = cap.read()

if iss:

img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

img = np.power(img/255, 0.9)

cv2.imshow("yuantu_gaus",img)

#ax.hist(img.flatten(),256)

# imghist = np.histogram(img.flatten(),256)[0]

# histmax = np.argmax(imghist).astype(np.uint8)

# img[img>histmax-50]=255

#

# print(img)

# cv2.imshow("huidu",img)

# sobelX = cv2.Sobel(img,cv2.CV_64F,1,0)#x方向的梯度

# sobelX = np.uint8(np.absolute(sobelX))#x方向梯度的絕對值

img=img*255

sobelY = cv2.Sobel(img,cv2.CV_64F,0,1)#y方向的梯度

sobelY = np.uint8(np.absolute(sobelY))#y方向梯度的絕對值

# cv2.imshow("sobelY",sobelY)

#

# #找到輪廓

# _,contours, hierarchy = cv2.findContours(sobelY,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

# #繪製輪廓

# cv2.drawContours(sobelY,contours,-1,(0,0,255),3)

# #繪製結果

# img=cv2.GaussianBlur(img,(5,5),20)

# sobelY = cv2.medianBlur(sobelY,5)

kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(3, 3))

sobelY = cv2.morphologyEx(sobelY, cv2.MORPH_OPEN, kernel)

sobelY[sobelY<=15]=0

yi = 0

ci = 0

for i in range(sobelY.shape[0]):

for j in range(sobelY.shape[1]):

if sobelY[i,j]>0:

yi=yi+i

ci=ci+1

cv2.imshow("open",sobelY)

pnum.append(yi/ci)

#

# print(i)

#cv2.imshow("result", sobelY)

#

plt.plot(range(len(pnum)),pnum,c='r',marker='.')

# cv2.imshow("yanjiao",sobelY)

plt.pause(0.01)

# if cv2.waitKey(1) & 0xFF == 'q':

# break

# time.sleep(0.5)

cv2.destroyAllWindows()

plt.plot(range(len(pnum)),pnum,c='r',marker='.')

#

#fig=plt.figure('22')

#ax2=fig.add_subplot(1,1,1)

#

#epnum = np.array(pnum)

#avg = epnum.mean()

#s=0

#countt=0

#for i in epnum:

# if i> avg:

# s=s+i

# countt=countt+1

#avg = s/countt

#print(avg)

#epnum[epnum<avg]=0

#ax2.plot(range(len(pnum)),epnum,c='r',marker='.')

#

##bb = plt.hist(pnum.flatten())

#print(pnum.mean())

#a = np.histogram(pnum.flatten())

##from scipy import stats

#pnum[pnum<3754]=0

#plt.plot(range(len(pnum)),pnum,c='r',marker='.')效果很棒,但是速度確實慢

測了三個視訊,效果都異常的好。

對於小影象速度快,因為迴圈遍歷少

對於雙迴圈的遍歷,採用了np庫中的where函式來快速實現,具體如下

np.where(sobelY>0)[0].mean()

表示採集y軸(通過【0】來表示)求mean平均,即可得到y軸座標

最終效果很好,對於我手上所擁有的三個視訊,檢測可以達到實時且高度準確。

接下來會安排大家進行更多的視訊採集與演算法調優。

# -*- coding: utf-8 -*-

"""

Created on Tue Sep 4 13:16:11 2018

@author: Lenovo

"""

import numpy as np

from matplotlib import animation

import matplotlib.pyplot as plt

import cv2

import time

from scipy.fftpack import fft,ifft

cap = cv2.VideoCapture('WIN_20180831_13_49_39_Pro.mp4')

pnum= list()

plt.close() #clf() # 清圖 cla() # 清座標軸 close() # 關視窗

fig=plt.figure()

ax=fig.add_subplot(1,1,1)

plt.grid(True) #新增網格

plt.ion() #interactive mode on

i=0

formerimg = np.zeros((720,1280))

iss, frame = cap.read()

while iss:

i=i+1

iss,img = cap.read()

if iss:

img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

cv2.imshow("yuantu_gaus",img)

#ax.hist(img.flatten(),256)

# imghist = np.histogram(img.flatten(),256)[0]

# histmax = np.argmax(imghist).astype(np.uint8)

# img[img>histmax-50]=255

#

# print(img)

# cv2.imshow("huidu",img)

# sobelX = cv2.Sobel(img,cv2.CV_64F,1,0)#x方向的梯度

# sobelX = np.uint8(np.absolute(sobelX))#x方向梯度的絕對值

img=img*255

sobelY = cv2.Sobel(img,cv2.CV_64F,0,1)#y方向的梯度

sobelY = np.uint8(np.absolute(sobelY))#y方向梯度的絕對值

# cv2.imshow("sobelY",sobelY)

#

# #找到輪廓

# _,contours, hierarchy = cv2.findContours(sobelY,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

# #繪製輪廓

# cv2.drawContours(sobelY,contours,-1,(0,0,255),3)

# #繪製結果

# img=cv2.GaussianBlur(img,(5,5),20)

# sobelY = cv2.medianBlur(sobelY,5)

kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(3, 3))

sobelY = cv2.morphologyEx(sobelY, cv2.MORPH_OPEN, kernel)

sobelY[sobelY<=15]=0

#

# yi = 0

# ci = 0

# for i in range(sobelY.shape[0]):

# for j in range(sobelY.shape[1]):

# if sobelY[i,j]>0:

# yi=yi+i

# ci=ci+1

cv2.imshow("open",sobelY)

pnum.append(np.where(sobelY>0)[0].mean())

#

# print(i)

#cv2.imshow("result", sobelY)

#

plt.plot(range(len(pnum)),pnum,c='r',marker='.')

# cv2.imshow("yanjiao",sobelY)

plt.pause(0.01)

# if cv2.waitKey(1) & 0xFF == 'q':

# break

# time.sleep(0.5)

cv2.destroyAllWindows()

plt.plot(range(len(pnum)),pnum,c='r',marker='.')

#

#fig=plt.figure('22')

#ax2=fig.add_subplot(1,1,1)

#

#epnum = np.array(pnum)

#avg = epnum.mean()

#s=0

#countt=0

#for i in epnum:

# if i> avg:

# s=s+i

# countt=countt+1

#avg = s/countt

#print(avg)

#epnum[epnum<avg]=0

#ax2.plot(range(len(pnum)),epnum,c='r',marker='.')

#

##bb = plt.hist(pnum.flatten())

#print(pnum.mean())

#a = np.histogram(pnum.flatten())

##from scipy import stats

#pnum[pnum<3754]=0

#plt.plot(range(len(pnum)),pnum,c='r',marker='.')通過gamma變換,我們可以做區域性增強效果

舉個例子,比如夜景中拍出來的圖片噪聲很多,於是我們先通過 高值gamma 增加對比度,減少噪聲,然後再通過區域性低值gamma,提升特徵亮度,突出細節與輪廓

在之前的眼部追蹤中,我通過這種思路,實現了較好的閾值分割與抗噪聲。

# -*- coding: utf-8 -*-

"""

Created on Tue Sep 4 13:16:11 2018

@author: Lenovo

"""

import numpy as np

from matplotlib import animation

import matplotlib.pyplot as plt

import cv2

import time

from scipy.fftpack import fft,ifft

cap = cv2.VideoCapture('WIN_20180831_13_49_39_Pro.mp4')

pnum= list()

pnum.append(300)

plt.close() #clf() # 清圖 cla() # 清座標軸 close() # 關視窗

fig=plt.figure()

ax=fig.add_subplot(1,1,1)

plt.grid(True) #新增網格

plt.ion() #interactive mode on

i=0

formerimg = np.zeros((720,1280))

iss, frame = cap.read()

while iss:

i=i+1

iss,img = cap.read()

if iss:

img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

cv2.imshow("yuantu_gaus",img)

img=cv2.GaussianBlur(img,(5,5),20)

img = np.power(img / 255.0, 1.3)

img = img*255

#ax.hist(img.flatten(),256)

# imghist = np.histogram(img.flatten(),256)[0]

# histmax = np.argmax(imghist).astype(np.uint8)

# img[img>histmax-50]=255

#

# print(img)

# cv2.imshow("huidu",img)

# sobelX = cv2.Sobel(img,cv2.CV_64F,1,0)#x方向的梯度

# sobelX = np.uint8(np.absolute(sobelX))#x方向梯度的絕對值

# img=img*255

sobelY = cv2.Sobel(img,cv2.CV_64F,0,1)#y方向的梯度

sobelY = np.uint8(np.absolute(sobelY))#y方向梯度的絕對值

# cv2.imshow("sobelY",sobelY)

#

# #找到輪廓

# _,contours, hierarchy = cv2.findContours(sobelY,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

# #繪製輪廓

# cv2.drawContours(sobelY,contours,-1,(0,0,255),3)

# #繪製結果

# img=cv2.GaussianBlur(img,(5,5),20)

sobelY = cv2.medianBlur(sobelY,5)

# kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(3, 3))

# sobelY = cv2.morphologyEx(sobelY, cv2.MORPH_OPEN, kernel)

sn = np.histogram(sobelY)[1]

sobelY[sobelY<=sn[0:3].mean()]=0

#

# yi = 0

# ci = 0

# for i in range(sobelY.shape[0]):

# for j in range(sobelY.shape[1]):

# if sobelY[i,j]>0:

# yi=yi+i

# ci=ci+1

ypoint = int(np.where(sobelY>0)[0].mean())

xpoint = int(np.where(sobelY>0)[1].mean())

# img.flags.WRITEABLE =True

img = img/255.0

img[ypoint-200:ypoint+300,xpoint-300:xpoint+300] = np.power(img[ypoint-200:ypoint+300,xpoint-300:xpoint+300], 0.5)

img = img*255

img = np.uint8(np.absolute(img))#y方向梯度的絕對值

cv2.imshow("local_h",img)

sobelY = cv2.Sobel(img,cv2.CV_64F,0,1)#y方向的梯度

sobelY = np.uint8(np.absolute(sobelY))#y方向梯度的絕對值

cv2.imshow("local_sobelY",sobelY)

ypoint = int(np.where(sobelY>0)[0].mean())

xpoint = int(np.where(sobelY>0)[1].mean())

if np.absolute(ypoint-pnum[-1])<=1:

ypoint=pnum[-1]

cv2.imshow("open",sobelY)

pnum.append(ypoint)

#

# print(i)

#cv2.imshow("result", sobelY)

#

plt.plot(range(len(pnum)),pnum,c='r',marker='.')

# cv2.imshow("yanjiao",sobelY)

plt.pause(0.01)

# if cv2.waitKey(1) & 0xFF == 'q':

# break

# time.sleep(0.5)

cv2.destroyAllWindows()

plt.plot(range(len(pnum)),pnum,c='r',marker='.')

#

#fig=plt.figure('22')

#ax2=fig.add_subplot(1,1,1)

#

#epnum = np.array(pnum)

#avg = epnum.mean()

#s=0

#countt=0

#for i in epnum:

# if i> avg:

# s=s+i

# countt=countt+1

#avg = s/countt

#print(avg)

#epnum[epnum<avg]=0

#ax2.plot(range(len(pnum)),epnum,c='r',marker='.')

#

##bb = plt.hist(pnum.flatten())

#print(pnum.mean())

#a = np.histogram(pnum.flatten())

##from scipy import stats

#pnum[pnum<3754]=0

#plt.plot(range(len(pnum)),pnum,c='r',marker='.')加上這些步驟以後,(後面坐了少量優化)程式碼就不放上來了,速度 10FPS

因為我們的專案要達到極速實時模式,所以去掉很多 提高精度的程式碼後,速度可以達到天花板(與視訊採集幀率同速)

實時版本程式碼如下:

# -*- coding: utf-8 -*-

"""

Created on Tue Sep 4 13:16:11 2018

@author: Lenovo

"""

import numpy as np

from matplotlib import animation

import matplotlib.pyplot as plt

import cv2

import time

from scipy.fftpack import fft,ifft

cap = cv2.VideoCapture('WIN_20180831_13_49_39_Pro.mp4')

pnum= list()

pnum.append(300)

plt.close() #clf() # 清圖 cla() # 清座標軸 close() # 關視窗

fig=plt.figure()

ax=fig.add_subplot(1,1,1)

plt.grid(True) #新增網格

plt.ion() #interactive mode on

i=0

formerimg = np.zeros((720,1280))

iss, frame = cap.read()

s = time.time()

while iss:

i=i+1

iss,img = cap.read()

if iss:

img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

# cv2.imshow("yuantu_gaus",img)

# img=cv2.GaussianBlur(img,(5,5),20)

# img = np.power(img / 255.0, 1.3)

# img = img*255

#ax.hist(img.flatten(),256)

# imghist = np.histogram(img.flatten(),256)[0]

# histmax = np.argmax(imghist).astype(np.uint8)

# img[img>histmax-50]=255

#

# print(img)

# cv2.imshow("huidu",img)

# sobelX = cv2.Sobel(img,cv2.CV_64F,1,0)#x方向的梯度

# sobelX = np.uint8(np.absolute(sobelX))#x方向梯度的絕對值

# img=img*255

sobelY = cv2.Sobel(img,cv2.CV_64F,0,1)#y方向的梯度

sobelY = np.uint8(np.absolute(sobelY))#y方向梯度的絕對值

# cv2.imshow("sobelY",sobelY)

#

# #找到輪廓

# _,contours, hierarchy = cv2.findContours(sobelY,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

# #繪製輪廓

# cv2.drawContours(sobelY,contours,-1,(0,0,255),3)

# #繪製結果

# img=cv2.GaussianBlur(img,(5,5),20)

# sobelY = cv2.medianBlur(sobelY,5)

# kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(3, 3))

# sobelY = cv2.morphologyEx(sobelY, cv2.MORPH_OPEN, kernel)

sn = np.histogram(sobelY)[1]

sobelY[sobelY<=sn[0:3].mean()]=0

#

# yi = 0

# ci = 0

# for i in range(sobelY.shape[0]):

# for j in range(sobelY.shape[1]):

# if sobelY[i,j]>0:

# yi=yi+i

# ci=ci+1

ypoint = int(np.where(sobelY>0)[0].mean())

# xpoint = int(np.where(sobelY>0)[1].mean())

## img.flags.WRITEABLE =True

# img = img/255.0

# img[ypoint-200:ypoint+300,xpoint-300:xpoint+300] = np.power(img[ypoint-200:ypoint+300,xpoint-300:xpoint+300], 0.5)

# img = img*255

# img = np.uint8(np.absolute(img))#y方向梯度的絕對值

# cv2.imshow("local_h",img)

# sobelY = cv2.Sobel(img,cv2.CV_64F,0,1)#y方向的梯度

# sobelY = np.uint8(np.absolute(sobelY))#y方向梯度的絕對值

# cv2.imshow("local_sobelY",sobelY)

#

# ypoint = np.where(sobelY>0)[0].mean()

# xpoint = np.where(sobelY>0)[1].mean()

#

# if np.absolute(ypoint-pnum[-1])<=3:

# ypoint=pnum[-1]

# cv2.imshow("open",sobelY)

pnum.append(ypoint)

#

# print(i)

#cv2.imshow("result", sobelY)

# plt.plot(range(len(pnum)),pnum,c='r',marker='.')

# cv2.imshow("yanjiao",sobelY)

# plt.pause(0.01)

# if cv2.waitKey(1) & 0xFF == 'q':

# break

# time.sleep(0.5)

e=time.time()

print(e-s)

cv2.destroyAllWindows()

plt.plot(range(len(pnum)),pnum,c='r',marker='.')

#

#fig=plt.figure('22')

#ax2=fig.add_subplot(1,1,1)

#

#epnum = np.array(pnum)

#avg = epnum.mean()

#s=0

#countt=0

#for i in epnum:

# if i> avg:

# s=s+i

# countt=countt+1

#avg = s/countt

#print(avg)

#epnum[epnum<avg]=0

#ax2.plot(range(len(pnum)),epnum,c='r',marker='.')

#

##bb = plt.hist(pnum.flatten())

#print(pnum.mean())

#a = np.histogram(pnum.flatten())

##from scipy import stats

#pnum[pnum<3754]=0

#plt.plot(range(len(pnum)),pnum,c='r',marker='.')實時版本結果如下:

高精度版本程式碼如下:(做了部分資料處理)

# -*- coding: utf-8 -*-

"""

Created on Tue Sep 4 13:16:11 2018

@author: Lenovo

"""

import numpy as np

from matplotlib import animation

import matplotlib.pyplot as plt

import cv2

import time

from scipy.fftpack import fft,ifft

cap = cv2.VideoCapture('WIN_20180831_13_49_39_Pro.mp4')

pnum= list()

pnum.append(360)

plt.close() #clf() # 清圖 cla() # 清座標軸 close() # 關視窗

fig=plt.figure()

ax=fig.add_subplot(1,1,1)

plt.grid(True) #新增網格

plt.ion() #interactive mode on

i=0

formerimg = np.zeros((720,1280))

iss, frame = cap.read()

s = time.time()

while iss:

i=i+1

iss,img = cap.read()

if iss:

img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

# cv2.imshow("yuantu_gaus",img)

img=cv2.GaussianBlur(img,(5,5),20)

img = np.power(img / 255.0, 1.3)

img = img*255

#ax.hist(img.flatten(),256)

# imghist = np.histogram(img.flatten(),256)[0]

# histmax = np.argmax(imghist).astype(np.uint8)

# img[img>histmax-50]=255

#

# print(img)

# cv2.imshow("huidu",img)

# sobelX = cv2.Sobel(img,cv2.CV_64F,1,0)#x方向的梯度

# sobelX = np.uint8(np.absolute(sobelX))#x方向梯度的絕對值

# img=img*255

sobelY = cv2.Sobel(img,cv2.CV_64F,0,1)#y方向的梯度

sobelY = np.uint8(np.absolute(sobelY))#y方向梯度的絕對值

# cv2.imshow("sobelY",sobelY)

#

# #找到輪廓

# _,contours, hierarchy = cv2.findContours(sobelY,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

# #繪製輪廓

# cv2.drawContours(sobelY,contours,-1,(0,0,255),3)

# #繪製結果

# img=cv2.GaussianBlur(img,(5,5),20)

sobelY = cv2.medianBlur(sobelY,5)

# kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(3, 3))

# sobelY = cv2.morphologyEx(sobelY, cv2.MORPH_OPEN, kernel)

sn = np.histogram(sobelY)[1]

sobelY[sobelY<=sn[0:3].mean()]=0

#

# yi = 0

# ci = 0

# for i in range(sobelY.shape[0]):

# for j in range(sobelY.shape[1]):

# if sobelY[i,j]>0:

# yi=yi+i

# ci=ci+1

ypoint = int(np.where(sobelY>0)[0].mean())

xpoint = int(np.where(sobelY>0)[1].mean())

# img.flags.WRITEABLE =True

img = img/255.0

img[ypoint-200:ypoint+300,xpoint-300:xpoint+300] = np.power(img[ypoint-200:ypoint+300,xpoint-300:xpoint+300], 0.5)

img = img*255

img = np.uint8(np.absolute(img))#y方向梯度的絕對值

# cv2.imshow("local_h",img)

sobelY = cv2.Sobel(img,cv2.CV_64F,0,1)#y方向的梯度

sobelY = np.uint8(np.absolute(sobelY))#y方向梯度的絕對值

# cv2.imshow("local_sobelY",sobelY)

ypoint = np.where(sobelY>0)[0].mean()

xpoint = np.where(sobelY>0)[1].mean()

if np.absolute(ypoint-pnum[-1])<=3:

ypoint=pnum[-1]

# cv2.imshow("open",sobelY)

pnum.append(ypoint)

#

# print(i)

#cv2.imshow("result", sobelY)

#

# plt.plot(range(len(pnum)),pnum,c='r',marker='.')

# cv2.imshow("yanjiao",sobelY)

# plt.pause(0.01)

# if cv2.waitKey(1) & 0xFF == 'q':

# break

# time.sleep(0.5)

e=time.time()

print(e-s)

cv2.destroyAllWindows()

plt.plot(range(len(pnum)),pnum,c='r',marker='.')

#

#fig=plt.figure('22')

#ax2=fig.add_subplot(1,1,1)

#

#epnum = np.array(pnum)

#avg = epnum.mean()

#s=0

#countt=0

#for i in epnum:

# if i> avg:

# s=s+i

# countt=countt+1

#avg = s/countt

#print(avg)

#epnum[epnum<avg]=0

ax.plot(range(len(pnum)),pnum,c='r',marker='.')

#

##bb = plt.hist(pnum.flatten())

#print(pnum.mean())

#a = np.histogram(pnum.flatten())

##from scipy import stats

#pnum[pnum<3754]=0

#plt.plot(range(len(pnum)),pnum,c='r',marker='.')目前基於SOBEL的方法就告一段落。接下來嘗試一下 有沒有其他辦法能做更好的處理。

最近閱讀了一些文獻,有一些新的感悟,接下來,也嘗試嘗試

1.y向差分投影

按照論文中這個思路來的話,對眼部區域定位應該不錯,如果我能做到眼部區域定位,再去做區域內運算的話,能去除掉大量噪聲干擾。

該方法在眼部未閉合的時候,效果較好,眼部閉合就會一團糟,應為方差趨於穩定了。

# -*- coding: utf-8 -*-

"""

Created on Tue Sep 4 13:16:11 2018

@author: xkk

"""

import numpy as np

from matplotlib import animation

import matplotlib.pyplot as plt

import cv2

import time

from scipy.fftpack import fft,ifft

cap = cv2.VideoCapture('WIN_20180903_16_06_34_Pro.mp4')

pnum= list()

pnum.append(300)

plt.close() #clf() # 清圖 cla() # 清座標軸 close() # 關視窗

fig=plt.figure()

ax=fig.add_subplot(1,1,1)

plt.grid(True) #新增網格

plt.ion() #interactive mode on

i=0

formerimg = np.zeros((720,1280))

iss, frame = cap.read()

s = time.time()

while iss:

i=i+1

iss,img = cap.read()

if iss:

img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

cv2.imshow("yuantu_gaus",img)

limg = img[:,1:]

rimg = img[:,:-1]

dimg = np.sum(np.power(rimg-limg,2),axis=1)

dimg = dimg.flatten()

#plt.hist(dimg,1000)

# pnum.append(ypoint)

plt.plot(range(len(dimg)),dimg,c='r',marker='.')

plt.pause(0.2)

plt.clf()

if cv2.waitKey(1) & 0xFF == 'q':

break

time.sleep(0.5)效果如下:

同理,結合了y軸就可以做眼部追蹤了。

但是還是在閉眼的一瞬間會出現漂移。如何找到閉眼特徵成為很重要的事情了。

之後我們對影象進行sobel邊緣提取,只做y軸方向sobel,接下來,我們可用質點的思路做檢測,但是上面已經做過了,效果較好,但是抖動較大,這裡考慮嘗試新的思路,基於區域的畫素分佈均勻度,即 熵 ,來做。

感覺效果也不行,不穩定。

嘗試一下 基於灰度相似度的方法,對模板匹配方法的改進,歸一化積相關灰度匹配。

它使用的相似性度量定義如下:

這方法受眼部位置影響太大,人體本身就會產生抖動,導致R值幅度波動大。

看到一些論文用YCBCR基於膚色分類,對於白天光照充足的情況下,效果理想

對於夜晚 或者人體抖動,產生的干擾,效果就不好了

具體過程如下

-----------------------------------------

縱向灰度加和圖

# -*- coding: utf-8 -*-

"""

Created on Tue Sep 4 13:16:11 2018

@author: xkk

"""

import numpy as np

from matplotlib import animation

import matplotlib.pyplot as plt

import cv2

import time

from scipy.fftpack import fft,ifft

cap = cv2.VideoCapture('WIN_20180903_16_06_34_Pro.mp4')

pnum= list()

pnum.append(300)

plt.close() #clf() # 清圖 cla() # 清座標軸 close() # 關視窗

fig=plt.figure()

ax=fig.add_subplot(1,1,1)

plt.grid(True) #新增網格

plt.ion() #interactive mode on

i=0

formerimg = np.zeros((720,1280))

iss, frame = cap.read()

s = time.time()

while iss:

i=i+1

iss,img = cap.read()

if iss:

img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

cv2.imshow("yuantu_gaus",img)

dimg = np.sum(img,axis=0)

dimg = dimg.flatten()

#plt.hist(dimg,1000)

# pnum.append(ypoint)

plt.plot(range(len(dimg)),dimg,c='r',marker='.')

plt.pause(0.01)

plt.clf()

if cv2.waitKey(1) & 0xFF == 'q':

break

time.sleep(0.5)回YCBCR,發現效果是真的好,但是就是這個分割閾值難以確定

import cv2

import numpy as np

import pylab as pl

from PIL import Image

import matplotlib.pyplot as plt

import time

pnum= list()

#構建Gabor濾波器

def build_filters():

filters = []

ksize = [7,9,11,13,15,17] #gabor尺度 6個

lamda = np.pi/2.0 # 波長

kern = cv2.getGaborKernel((15,15),1,np.pi/2,lamda,0.5,0,ktype=cv2.CV_32F)

kern /= 1.5*kern.sum()

filters.append(kern)

return filters

#濾波過程

def process(img,filters):

accum = np.zeros_like(img)

for kern in filters:

fimg = cv2.filter2D(img,cv2.CV_8UC3,kern)

np.maximum(accum,fimg,accum)

return accum

#特徵圖生成並顯示

def getGabor(img,filters):

res = [] #濾波結果

for i in range(len(filters)):

res1 = process(img,filters[i])

res.append(np.asarray(res1))

return res

if __name__ == '__main__':

cap = cv2.VideoCapture('WIN_20180831_13_49_39_Pro.mp4')

filters = build_filters()

iss=True

while iss:

iss,img = cap.read()

if iss:

# edgeimg = getGabor(img,filters)[0]

edgeimg = cv2.cvtColor(img,cv2.COLOR_BGR2YCR_CB)

cv2.imshow("grayedgeimg",edgeimg)

cv2.imshow("grayedgeimg_0",edgeimg[:,:,0])

cr = edgeimg[:,:,1]

cb = edgeimg[:,:,2]

#一共有多少畫素點

allp = cr.shape[0]*cr.shape[1]

ninetyp = int(allp*0.2)

divgray = cr.mean()

# sn = np.histogram(cr,255)

# for i in range(255):

# ninetyp = ninetyp - sn[0][i]

# if ninetyp<=0:

# divgray=i

# break

print(divgray)

cr_hist = np.histogram(cr.flatten())

cr[cr<150]=255

# kernel = np.ones((15,15),np.uint8)

# cr = cv2.erode(cr,kernel,iterations = 1)

cv2.imshow("grayedgeimg_2-1",cr)

ypoint = np.where(cr==255)[0].mean()

pnum.append(ypoint)

plt.plot(range(len(pnum)),pnum,c='r',marker='.')

# yc = cr[:-1,:]-cr[1:,:]

# cr_sumhang = np.sum(np.power(yc,2),axis=1)

# plt.plot(range(len(cr_sumhang.flatten())),cr_sumhang.flatten())

plt.pause(0.0001)

plt.clf()

# time.sleep(0.1)

if cv2.waitKey(1) & 0xFF == 'q':

break

試了一下otsu和adaptivethresh 效果都不行,otsu勉強能看

用攝像頭實際測量一下YCBCR方法,感覺光線影響太大

後來發現- -,迴歸樸素方法,otsu+質點預測 就能達到還不錯的效果。

import cv2

import numpy as np

import pylab as pl

from PIL import Image

import matplotlib.pyplot as plt

import time

pnum= list()

#構建Gabor濾波器

def build_filters():

filters = []

ksize = [7,9,11,13,15,17] #gabor尺度 6個

lamda = np.pi/2.0 # 波長

kern = cv2.getGaborKernel((15,15),1,np.pi/2,lamda,0.5,0,ktype=cv2.CV_32F)

kern /= 1.5*kern.sum()

filters.append(kern)

return filters

#濾波過程

def process(img,filters):

accum = np.zeros_like(img)

for kern in filters:

fimg = cv2.filter2D(img,cv2.CV_8UC3,kern)

np.maximum(accum,fimg,accum)

return accum

#特徵圖生成並顯示

def getGabor(img,filters):

res = [] #濾波結果

for i in range(len(filters)):

res1 = process(img,filters[i])

res.append(np.asarray(res1))

return res

if __name__ == '__main__':

cap = cv2.VideoCapture(0)

filters = build_filters()

iss=True

while iss:

iss,img = cap.read()

if iss:

# edgeimg = getGabor(img,filters)[0]

edgeimg = cv2.cvtColor(img,cv2.COLOR_BGR2YCR_CB)

cv2.imshow("grayedgeimg",edgeimg)

cv2.imshow("grayedgeimg_0",edgeimg[:,:,0])

cr = edgeimg[:,:,1]

cb = edgeimg[:,:,2]

#一共有多少畫素點

allp = cr.shape[0]*cr.shape[1]

ninetyp = int(allp*0.2)

divgray = cr.mean()

# sn = np.histogram(cr,255)

# for i in range(255):

# ninetyp = ninetyp - sn[0][i]

# if ninetyp<=0:

# divgray=i

# break

print(divgray)

cr_hist = np.histogram(cr.flatten())

cr[cr<cr.mean()-cr.std()]=255

retval, cr = cv2.threshold(cr, 0, 255, cv2.THRESH_OTSU)

kernel = np.ones((10,10),np.uint8)

cr = cv2.erode(cr,kernel,iterations = 1)

cr = cv2.dilate(cr,kernel,iterations = 1)

cv2.imshow("grayedgeimg_2-1",cr)

ypoint = np.where(cr==255)[0].mean()

if len(pnum)>200:

pnum.clear()

pnum.append(ypoint)

plt.plot(range(len(pnum)),pnum,c='r',marker='.')

# yc = cr[:-1,:]-cr[1:,:]

# cr_sumhang = np.sum(np.power(yc,2),axis=1)

# plt.plot(range(len(cr_sumhang.flatten())),cr_sumhang.flatten())

plt.pause(0.0001)

plt.clf()

# time.sleep(0.1)

if cv2.waitKey(1) & 0xFF == 'q':

break

----------------------------------

接下來試試 神經網路 / 機器學習