利用Pycharm+selenium+chromedriver測試瀏覽器

阿新 • • 發佈:2018-12-10

背景

在抓取動態網頁失敗的時候,瞭解到selenium+chromedriver可以比較方便實現動態網頁抓取。利用Python抓取煎蛋網妹子圖。但是程式碼執行出錯。

原始碼(測試程式碼,沒有參考學習價值,僅供測試)

# -*- coding:utf-8 -*- import urllib.request import json import os import re from bs4 import BeautifulSoup from selenium import webdriver from selenium.common.exceptions import TimeoutException from selenium.webdriver.chrome.options import Options # 下載page_number頁前的所有圖片 # def s = r'img src=\"(.+jpg)' re_hmtl = re.compile(s) def getPage(url): chrome_options = Options() chrome_options.add_argument('--headless') chrome_options.add_argument('--disable-gpu') driver = webdriver.Chrome(chrome_options = chrome_options) dirver.get(url) return driver.page_source def save_imgs(folder, page_number): if(os.path.exists(folder) == False): os.mkdir(folder) # 建立一個名為mm_pic的資料夾 os.chdir(folder) # 切換到mm_pic資料夾下 url = 'http://jandan.net/ooxx/' # 網站地址 url = url + 'page-' + str(page_number) + '#comments' html = getPage(url) print(html) # req = urllib.request.Request(url) # req.add_header('User-Agent','Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36') # source = urllib.request.urlopen(req) #print(source) #url_source = source.read().decode('utf-8') #print('Status:',source.status,source.reason) # url_source = BeautifulSoup(source.read(),'html.parser') # print(url_source.contents) print('OK') if __name__ == '__main__': save_imgs('mm_pic',55)

報錯如下,作為一個Python小白並不明白錯誤原因是什麼。

解決

參考資料如下:

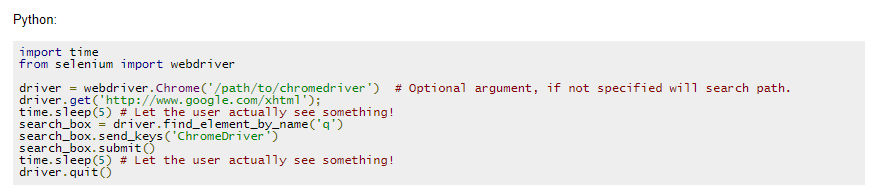

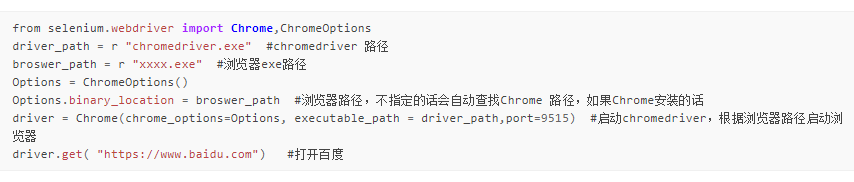

Chromedriver進行瀏覽器自動化測試

Chromedriver官方文件參考

截圖如下:

原來要裝載Chromedriver的安裝路徑。

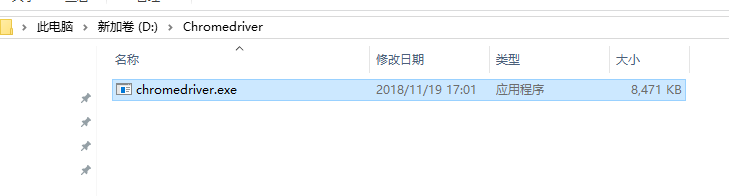

- 下載chromedriver下載地址

- 解壓到資料夾

- 程式碼測試,執行成功。

from selenium import webdriver driver = webdriver.Chrome(r'D:\Chromedriver\chromedriver.exe')# 將Chromedriver的安裝路徑作為引數 driver.get('https://www.google.com') print(driver.title) print(driver.current_url)

# -*- coding:utf-8 -*- import urllib.request import json import os import re from bs4 import BeautifulSoup from selenium import webdriver from selenium.common.exceptions import TimeoutException from selenium.webdriver.chrome.options import Options # 下載page_number頁前的所有圖片 # def s = r'img src=\"(.+jpg)' re_hmtl = re.compile(s) def getPage(url): chrome_options = Options() chrome_options.add_argument('--headless') chrome_options.add_argument('--disable-gpu') driver = webdriver.Chrome(chrome_options = chrome_options,executable_path=r'D:\Chromedriver\chromedriver.exe') driver.get(url) return driver.page_source def save_imgs(folder, page_number): if(os.path.exists(folder) == False): os.mkdir(folder) # 建立一個名為mm_pic的資料夾 os.chdir(folder) # 切換到mm_pic資料夾下 url = 'http://jandan.net/ooxx/' # 網站地址 url = url + 'page-' + str(page_number) + '#comments' html = getPage(url) print(html) # req = urllib.request.Request(url) # req.add_header('User-Agent','Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36') # source = urllib.request.urlopen(req) #print(source) #url_source = source.read().decode('utf-8') #print('Status:',source.status,source.reason) # url_source = BeautifulSoup(source.read(),'html.parser') # print(url_source.contents) print('OK') if __name__ == '__main__': save_imgs('mm_pic',55)