[Study Notes] Classification Programming Exercise

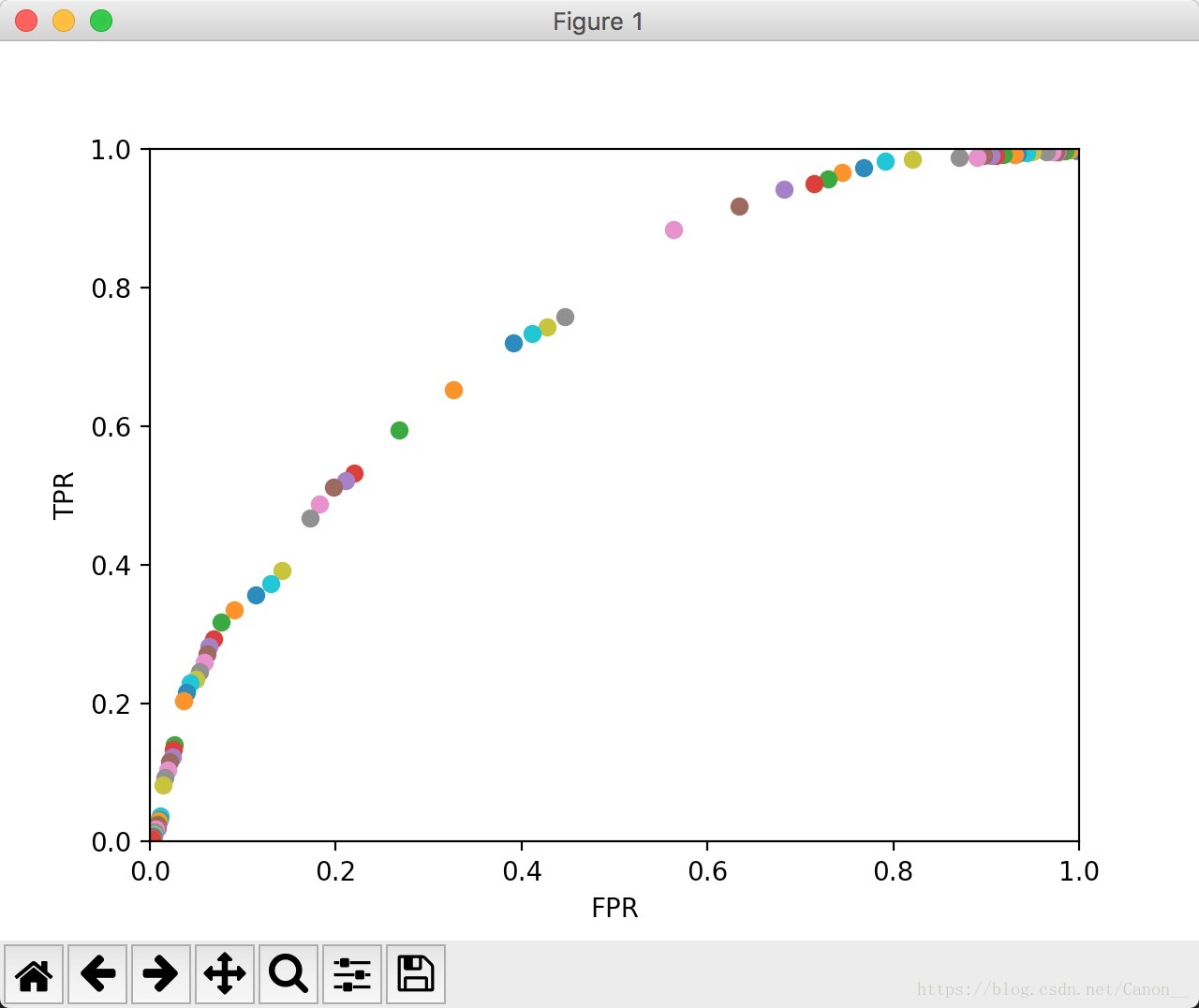

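I didn't do any feature processing, so the trained model doesn't perform well. I covered the methods in earlier posts and didn't feel like repeating them, and the original exercise doesn't do it either.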

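Here I only briefly walk through how to put the framework together. I won't cover the linear regression part of the original exercise again, since it only takes changing a line or two of code.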

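We first split the data into a training set and a validation set, and create a new column, `median_house_value_high`, derived from `median_house_value`: rows whose `median_house_value` is above the 75th percentile are labeled 1, the rest 0. The main focus here is the logistic regression (classification) model.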

```python
import pandas as pd
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt

Dataset = tf.data.Dataset  # TF 1.x input-pipeline class

df = pd.read_csv('california_housing_train.csv')
# Label: 1 if median_house_value is above the 75th-percentile cutoff (265000), else 0.
df['median_house_value_high'] = (df['median_house_value'] > 265000).astype('float32')
# Synthetic feature: rooms per person.
df['rooms_per_person'] = df['total_rooms'] / df['population']
# Shuffle the rows. Do not call sort_index() afterwards: it would undo the shuffle,
# and head()/tail() would split the file in its original order.
df = df.reindex(np.random.permutation(df.index))

# The target is derived from median_house_value, so both columns are dropped from the features.
df_features = df.drop(['median_house_value_high', 'median_house_value'], axis=1)
df_targets = df['median_house_value_high'].copy()

training_features = df_features.head(12000).astype('float32')
training_targets = df_targets.head(12000).astype('float32')
validation_features = df_features.tail(5000).astype('float32')
validation_targets = df_targets.tail(5000).astype('float32')
```
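The code above uses 265000 as a fixed cutoff for the 75th percentile. A minimal sketch of computing the cutoff from the data instead, assuming numpy; `make_high_value_label` is a hypothetical helper and the house values are made up:

```python
import numpy as np

def make_high_value_label(values, q=0.75):
    """Return (labels, cutoff): float32 labels are 1.0 where value > the q-quantile, else 0.0."""
    values = np.asarray(values, dtype=np.float64)
    cutoff = np.quantile(values, q)  # linear interpolation between order statistics
    return (values > cutoff).astype(np.float32), cutoff

# Made-up house values, for illustration only.
values = [100000, 150000, 200000, 250000, 300000, 350000, 400000, 450000]
labels, cutoff = make_high_value_label(values)
print(cutoff)  # 362500.0
print(labels)  # [0. 0. 0. 0. 0. 0. 1. 1.]
```

On the DataFrame above, the same cutoff is `df['median_house_value'].quantile(0.75)`; pandas also interpolates linearly by default.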

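As before, the data is split into a training set and a validation set. Because the targets are computed from `median_house_value`, `median_house_value` itself is no longer used as an input feature.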
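Holding out the label's source column keeps the answer out of the features. The split itself is only fair if the rows are shuffled first; otherwise `head()`/`tail()` take whatever order the file happens to be in. A minimal index-based sketch, assuming numpy, with the 12000/5000 sizes used above (`shuffled_split` is a hypothetical helper):

```python
import numpy as np

def shuffled_split(n_rows, n_train, n_valid, seed=0):
    """Return disjoint (train_idx, valid_idx) taken from one random permutation of range(n_rows)."""
    if n_train + n_valid > n_rows:
        raise ValueError('split sizes exceed the number of rows')
    rng = np.random.RandomState(seed)
    perm = rng.permutation(n_rows)
    train_idx = perm[:n_train]           # like .head(n_train) after shuffling
    valid_idx = perm[n_rows - n_valid:]  # like .tail(n_valid) after shuffling
    return train_idx, valid_idx

train_idx, valid_idx = shuffled_split(17000, 12000, 5000)
print(len(train_idx), len(valid_idx))  # 12000 5000
```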

As before, we use `Dataset` to turn the DataFrames into tensors:

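```python
def my_input_fn(features, targets, batch_size=1, num_epochs=1, shuffle=False):
    """Turn a features DataFrame and a targets Series into batched tensors (TF 1.x)."""
    # One numpy array per column.
    features = {key: np.array(value) for key, value in dict(features).items()}
    ds = Dataset.from_tensor_slices((features, targets))
    ds = ds.batch(batch_size).repeat(num_epochs)
    if shuffle:
        # shuffle() returns a new dataset, so the result must be reassigned.
        ds = ds.shuffle(10000)
    features, labels = ds.make_one_shot_iterator().get_next()
    return features, labels
```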
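To see how many batches the iterator can serve before it runs dry, here is a pure-Python sketch of `batch(batch_size).repeat(num_epochs)` without shuffling (no TensorFlow involved; `batch_then_repeat` and `count_batches` are hypothetical helpers). Like `Dataset.batch` by default, it keeps a final partial batch:

```python
def batch_then_repeat(rows, batch_size, num_epochs):
    """Yield lists of rows the way ds.batch(batch_size).repeat(num_epochs) yields batches."""
    for _ in range(num_epochs):
        for start in range(0, len(rows), batch_size):
            yield rows[start:start + batch_size]

def count_batches(n_rows, batch_size, num_epochs):
    """Number of batches the pipeline produces before it is exhausted."""
    return sum(1 for _ in batch_then_repeat(list(range(n_rows)), batch_size, num_epochs))

print(count_batches(12000, 200, 100))   # 6000
print(count_batches(5000, 5000, 1100))  # 1100
print(list(batch_then_repeat([1, 2, 3, 4, 5], 2, 1)))  # [[1, 2], [3, 4], [5]]
```

With 12000 training rows, `batch_size=200` and `num_epochs=100` give 6000 batches, more than the 5000 training steps used later. With 5000 validation rows, `batch_size=5000` and `num_epochs=1100` give 1100 full-set batches. Every `sess.run` that evaluates `xv` or `yv` consumes one batch, and a one-shot iterator raises `tf.errors.OutOfRangeError` once they are used up.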

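As mentioned in an earlier post, logistic regression relies heavily on regularization, so to prevent overfitting, don't forget to add L2 regularization when defining the layers.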

```python
def add_layer(inputs, input_size, output_size, activation_function=None):
    """Fully connected layer; registers an L2 penalty on its weights in the 'losses' collection."""
    weights = tf.Variable(tf.random_normal([input_size, output_size], stddev=0.1))
    tf.add_to_collection('losses', tf.contrib.layers.l2_regularizer(.1)(weights))
    biases = tf.Variable(tf.zeros(output_size) + 0.1)
    wx_b = tf.matmul(inputs, weights) + biases
    if activation_function is None:
        outputs = wx_b
    else:
        outputs = activation_function(wx_b)
    return weights, biases, outputs
```
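`tf.contrib.layers.l2_regularizer(scale)` returns a function computing `scale * tf.nn.l2_loss(w)`, and `tf.nn.l2_loss` is half the sum of squared entries. A numpy sketch of the penalty each `add_layer` call puts into the `'losses'` collection (`l2_penalty` is a hypothetical name; `tf.add_n` later sums the three layers' penalties):

```python
import numpy as np

def l2_penalty(weights, scale=0.1):
    """scale * sum(w**2) / 2, the value l2_regularizer(scale)(weights) evaluates to."""
    w = np.asarray(weights, dtype=np.float64)
    return scale * np.sum(w ** 2) / 2.0

w = np.array([[1.0, -2.0], [0.5, 0.0]])
print(l2_penalty(w))  # 0.1 * (1 + 4 + 0.25) / 2, i.e. about 0.2625
```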

We covered the log loss function earlier and use it again here; don't forget to add the regularization terms.
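For reference, the per-example log loss is `-y*log(p) - (1-y)*log(1-p)`, and here it is summed over the batch. A numpy sketch, assuming predictions are clipped away from 0 and 1 so `log` stays finite. It also shows a shape pitfall: labels of shape `(n,)` multiplied by predictions of shape `(n, 1)` broadcast to `(n, n)` without any error, so the labels need to be reshaped first (`summed_log_loss` is a hypothetical helper):

```python
import numpy as np

def summed_log_loss(pred, labels, eps=1e-7):
    """Sum over examples of -y*log(p) - (1-y)*log(1-p); labels are reshaped to pred's shape."""
    pred = np.clip(np.asarray(pred, dtype=np.float64), eps, 1 - eps)  # avoid log(0)
    labels = np.asarray(labels, dtype=np.float64).reshape(pred.shape)
    return np.sum(-labels * np.log(pred) - (1 - labels) * np.log(1 - pred))

pred = np.array([[0.9], [0.2], [0.6]])  # shape (3, 1), like the sigmoid output
labels = np.array([1.0, 0.0, 1.0])      # shape (3,), like the targets

print(summed_log_loss(pred, labels))    # equals -(log 0.9 + log 0.8 + log 0.6)
# Without the reshape, broadcasting silently builds a (3, 3) matrix:
print((-labels * np.log(pred)).shape)   # (3, 3)
```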

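```python
def log_loss(pred, ys):
    """Summed log loss plus the L2 penalties collected in 'losses'."""
    # ys has shape (batch,) while pred has shape (batch, 1); without this reshape
    # the two would broadcast to a (batch, batch) matrix.
    ys = tf.reshape(ys, [-1, 1])
    # Keep log() finite when the sigmoid saturates at 0 or 1.
    pred = tf.clip_by_value(pred, 1e-7, 1 - 1e-7)
    logloss = tf.reduce_sum(-ys * tf.log(pred) - (1 - ys) * tf.log(1 - pred))
    loss = logloss + tf.add_n(tf.get_collection('losses'))
    return loss
```

Next we need to define the training method; once again I use Adam.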
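Adam keeps exponential moving averages of the gradient and of its square and scales each step by their ratio. A pure-Python sketch of the textbook update on a made-up one-variable problem; the betas and epsilon are the usual defaults (0.9, 0.999, 1e-8), which match `tf.train.AdamOptimizer`'s defaults, while the toy function, start point and learning rate are assumptions for illustration:

```python
import math

def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a 1-D function given its gradient, using the textbook Adam update."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # first moment: moving average of gradients
        v = beta2 * v + (1 - beta2) * g * g  # second moment: moving average of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias corrections for the zero initialisation
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Toy problem: f(x) = (x - 3)^2 with gradient 2 * (x - 3); the minimum is at x = 3.
x = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(x)  # close to 3
```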

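```python
def train_step(learning_rate, loss):
    """Adam op that minimizes `loss`."""
    train = tf.train.AdamOptimizer(learning_rate).minimize(loss)
    return train
```

Since `my_input_fn` returns the features as a dict, and expanding the dimensions of each feature one by one last time was tedious, this time I wrote an `expand_dim` function for it.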
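Each tensor in the feature dict has shape `(batch,)`. Adding a trailing axis makes it `(batch, 1)`, so the nine columns can be concatenated along the last axis into a `(batch, 9)` input matrix. A numpy sketch of the same shape bookkeeping (`np.expand_dims`/`np.concatenate` play the roles of `tf.expand_dims`/`tf.concat`; the feature names and values are made up):

```python
import numpy as np

batch = 4
# Hypothetical feature dict: nine columns, each of shape (batch,).
features = {'f%d' % i: np.arange(batch, dtype=np.float32) + i for i in range(9)}

# Same idea as expand_dim(): give every column a trailing axis -> (batch, 1).
columns = [np.expand_dims(col, -1) for col in features.values()]
inputs = np.concatenate(columns, axis=-1)  # like tf.concat(..., -1)
print(columns[0].shape, inputs.shape)      # (4, 1) (4, 9)
```

The expand-then-concatenate pair is equivalent to stacking along a new last axis: `np.stack(..., axis=-1)` here, or `tf.stack(..., axis=-1)` in TensorFlow.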

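```python
def expand_dim(_dict):
    """Add a trailing axis to every tensor in the dict: (batch,) -> (batch, 1)."""
    for key in _dict:
        _dict[key] = tf.expand_dims(_dict[key], -1)
    return _dict
```

The original exercise asks us to plot the ROC curve, so let's define a function for it. Here `biases` refers to the classification threshold. I went over it carefully and hope it's correct.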
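`roc` counts the four confusion-matrix cells one example at a time. A vectorized numpy sketch of the same counts at a single threshold, treating a prediction as positive only when it is strictly greater than the threshold (`rates_at_threshold` is a hypothetical name and the sample data are made up):

```python
import numpy as np

def rates_at_threshold(pred, targets, threshold):
    """Return (accuracy, TPR, FPR); predictions strictly above `threshold` count as positive."""
    pred = np.ravel(pred)
    targets = np.ravel(targets).astype(bool)
    positive = pred > threshold
    tp = np.sum(positive & targets)
    fp = np.sum(positive & ~targets)
    fn = np.sum(~positive & targets)
    tn = np.sum(~positive & ~targets)
    accuracy = (tp + tn) / pred.size
    tpr = tp / (tp + fn) if tp + fn else 0.0  # undefined when there are no positives
    fpr = fp / (fp + tn) if fp + tn else 0.0  # undefined when there are no negatives
    return accuracy, tpr, fpr

pred = np.array([0.9, 0.8, 0.4, 0.3, 0.1])
targets = np.array([1, 0, 1, 0, 0])
print(rates_at_threshold(pred, targets, 0.5))  # accuracy 0.6, TPR 0.5, FPR 1/3
```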

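```python
def roc(pred, targets, biases):
    """Return (accuracy, TPR, FPR) when predictions above `biases` count as positive."""
    pred = np.ravel(pred)  # the network output has shape (n, 1)
    targets = np.ravel(targets)
    if len(pred) != len(targets):
        raise ValueError('prediction length does not match target length')
    TP = TN = FP = FN = 0
    for p, t in zip(pred, targets):
        if p > biases:  # predicted positive
            if t == 1:
                TP += 1
            else:
                FP += 1
        else:  # predicted negative (p == biases included)
            if t == 1:
                FN += 1
            else:
                TN += 1
    accuracy = (TP + TN) / (TP + TN + FP + FN)
    TPR = TP / (TP + FN) if TP + FN else 0.0
    FPR = FP / (FP + TN) if FP + TN else 0.0
    return accuracy, TPR, FPR
```

Build the neural network the usual way. The last layer uses sigmoid as its activation function; what the other layers look like, and how many of them there are, is up to you.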
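The network is 9 → 100 (tanh) → 40 (linear) → 1 (sigmoid). A numpy sketch of that forward pass with random weights, mainly to check the shapes and that the final sigmoid keeps every output strictly between 0 and 1; weights follow `add_layer`'s initialisation (normal with stddev 0.1, biases 0.1), and the input batch is random:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.RandomState(0)
sizes = [9, 100, 40, 1]
# Same initialisation as add_layer: N(0, 0.1^2) weights, biases of 0.1.
params = [(rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out) + 0.1)
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]
activations = [np.tanh, lambda z: z, sigmoid]  # tanh, linear, sigmoid

x = rng.normal(size=(5, 9))  # hypothetical batch: 5 examples, 9 features
h = x
for (w, b), act in zip(params, activations):
    h = act(h @ w + b)
print(h.shape)  # (5, 1)
```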

```python
xs, ys = my_input_fn(training_features, training_targets, batch_size=200, num_epochs=100)
xv, yv = my_input_fn(validation_features, validation_targets, batch_size=5000, num_epochs=1100)

xs = expand_dim(xs)
xv = expand_dim(xv)

# Concatenate the nine (batch, 1) feature columns into one (batch, 9) input matrix.
_inputs = tf.concat(list(xs.values()), -1)
xv_inputs = tf.concat(list(xv.values()), -1)

w1, b1, l1 = add_layer(_inputs, 9, 100, activation_function=tf.nn.tanh)
w2, b2, l2 = add_layer(l1, 100, 40, activation_function=None)
w3, b3, pred = add_layer(l2, 40, 1, activation_function=tf.nn.sigmoid)

loss = log_loss(pred, ys)
train = train_step(0.0001, loss)

sess = tf.Session()
init = tf.global_variables_initializer()
sess.run(init)
```

Train as before and print the loss along the way (my loss was extremely high).
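A likely part of why the printed number is so large: `log_loss` uses `tf.reduce_sum`, so the validation loss is a sum over 5000 examples (plus the L2 terms) rather than an average. A model that outputs 0.5 for every example has a per-example log loss of ln 2 ≈ 0.693, so its summed loss is already about 3466. A quick check in plain Python:

```python
import math

n_examples = 5000
p = 0.5  # a model that always predicts 0.5
per_example = -math.log(p)         # the same for y = 1 and y = 0: ln 2
summed = n_examples * per_example  # what a reduce_sum-style loss reports
print(per_example, summed)         # about 0.693 and about 3465.7
```

Dividing by the batch size, or using `tf.reduce_mean` instead of `tf.reduce_sum`, gives a per-example figure that is easier to compare across batch sizes.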

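```python
# Build the validation forward pass and loss once, reusing the trained weights;
# creating these ops inside the loop would keep adding nodes to the graph.
v_l1 = tf.nn.tanh(tf.matmul(xv_inputs, w1) + b1)
v_l2 = tf.matmul(v_l1, w2) + b2
v_pred = tf.nn.sigmoid(tf.matmul(v_l2, w3) + b3)
v_loss = log_loss(v_pred, yv)

for i in range(5000):
    sess.run(train)
    if i % 50 == 0:
        print(sess.run(v_loss))
```

Finally, let's plot (I won't compute the AUC here; if you want to work on this model seriously, do the feature engineering first):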

```python
fig = plt.figure()
ax = fig.add_subplot(1, 1, 1)
plt.ion()
plt.show()
ax.set_xlabel('FPR')
ax.set_ylabel('TPR')
ax.set_xlim(0, 1)
ax.set_ylim(0, 1)

# Build and run the validation forward pass once: with batch_size=5000 each run
# returns the whole validation set, so the predictions are the same for every threshold.
v_l1 = tf.nn.tanh(tf.matmul(xv_inputs, w1) + b1)
v_l2 = tf.matmul(v_l1, w2) + b2
v_pred = tf.nn.sigmoid(tf.matmul(v_l2, w3) + b3)
pred_roc, targets_roc = sess.run([v_pred, yv])

for i in np.arange(0., 1., 0.001):
    accuracy, tpr, fpr = roc(pred_roc, targets_roc, i)
    print('accuracy:', accuracy, 'biases:', i)
    ax.scatter(fpr, tpr)
    plt.pause(0.1)
```

Here is one of the plots I got:

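Our dataset isn't large, and the classes are indeed imbalanced. Once `biases` reaches about 0.3, the accuracy hardly changes any more, which suggests the model predicts nearly everything as negative and is essentially aiming for TN. There are plenty more programming exercises ahead, and we'll discuss the remaining problems then. (Surprisingly, the scatter points don't all come out in one color by default, because each `ax.scatter` call takes the next color from Matplotlib's color cycle; you can fix the color with the `c` parameter.) If you want an outline around each point, use the `linewidths` parameter together with `edgecolors`. There is plenty of material on scatter's parameters online, so I won't go into them here.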
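A sketch that plots all ROC points in one `ax.scatter` call, fixes the face color with `c`, and outlines each marker via `edgecolors` and `linewidths`. The (FPR, TPR) values are placeholders, and the headless Agg backend is assumed so the snippet runs without a display:

```python
import matplotlib
matplotlib.use('Agg')  # headless backend; drop this line to show the figure interactively
import matplotlib.pyplot as plt

# Placeholder (FPR, TPR) points; in the post they come from roc() at each threshold.
fprs = [0.0, 0.1, 0.3, 0.6, 1.0]
tprs = [0.0, 0.4, 0.7, 0.9, 1.0]

fig, ax = plt.subplots()
ax.set_xlabel('FPR')
ax.set_ylabel('TPR')
ax.set_xlim(0, 1)
ax.set_ylim(0, 1)
# One call, one fixed face color, thin black outlines.
points = ax.scatter(fprs, tprs, c='tab:blue', edgecolors='black', linewidths=0.5)
```

Collecting `fpr`/`tpr` in lists inside the threshold loop and calling `ax.scatter` once afterwards gives a single-colored plot without the per-point redraw; use `plt.show()` or `fig.savefig(...)` to view it.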