Flume 1.6 installation and configuration: log collection setup

阿新 • Published: 2018-12-12

1. Download Flume 1.6

2. Install the JDK and Hadoop

For details, refer to the earlier articles.

3. Edit the Flume configuration file

Edit the flume-env.sh file in the conf directory:

export JAVA_HOME=/etc/java/jdk/jdk1.8/
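
Before starting the agent, it can help to confirm that the configured path really contains a JDK. The following is only a minimal sketch: the function name `check_java_home` is hypothetical and not part of Flume, and the path is the one from flume-env.sh above.

```shell
# Hypothetical helper (not part of Flume): succeed only if the given
# directory contains an executable bin/java.
check_java_home() {
    jh="${1%/}"                      # drop a trailing slash, if any
    if [ -x "$jh/bin/java" ]; then
        echo "OK: $jh/bin/java"
    else
        echo "No executable java under $jh/bin" >&2
        return 1
    fi
}

# Check the path used in flume-env.sh; print a hint instead of aborting.
check_java_home /etc/java/jdk/jdk1.8/ || echo "JAVA_HOME needs fixing"
```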

4. Write a collection configuration that stores the results in HDFS

In the conf directory, edit the file flume-conf-hdfs.properties as follows:

    #####################################################################
    ## Watch a directory for newly added files
    ## This agent consists of a source (r1), a sink (k1),
    ## and a channel (c1)
    ##
    ## a1 is the name of this Flume agent instance
    #####################################################################
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # How the data source is monitored: here, watch a directory for newly added files
    a1.sources.r1.type = spooldir
    a1.sources.r1.spoolDir = /home/flume/test
    a1.sources.r1.fileSuffix = .ok
    # a1.sources.r1.deletePolicy = immediate
    a1.sources.r1.deletePolicy = never
    a1.sources.r1.fileHeader = true

    # Sink (destination) for the collected data; the commented-out option writes to the log
    #a1.sinks.k1.type = logger
    a1.sinks.k1.type=hdfs
    a1.sinks.k1.hdfs.useLocalTimeStamp=true
    a1.sinks.k1.hdfs.path=hdfs://localhost:9000/flume-dir/%Y%m%d%H%M%S
    a1.sinks.k1.hdfs.filePrefix=log
    a1.sinks.k1.hdfs.fileType=DataStream
    a1.sinks.k1.hdfs.writeFormat=TEXT
    a1.sinks.k1.hdfs.rollInterval=10
    a1.sinks.k1.hdfs.rollCount=0
    a1.sinks.k1.hdfs.rollSize

    # Channel: use memory as temporary storage for the data
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Connect the source and the sink through the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
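
A properties line that carries no value, such as the `a1.sinks.k1.hdfs.rollSize` entry in the listing, is easy to overlook. The sketch below lists such lines so they can be reviewed before the agent is started. It assumes `=` is the only separator used in the file; the function name `find_valueless_keys` and the temporary sample file are hypothetical.

```shell
# Hypothetical helper: print "<line number>: <line>" for every non-comment,
# non-blank line that has no '=' or has nothing after the '='.
find_valueless_keys() {
    awk '
        /^[ \t]*#/ { next }                       # skip comment lines
        /^[ \t]*$/ { next }                       # skip blank lines
        !/=/ || /=[ \t]*$/ { printf "%d: %s\n", NR, $0 }
    ' "$1"
}

# Demonstration on the roll settings copied from the configuration.
sample=$(mktemp)
cat > "$sample" <<'EOF'
# sink roll settings
a1.sinks.k1.hdfs.rollInterval=10
a1.sinks.k1.hdfs.rollCount=0
a1.sinks.k1.hdfs.rollSize
EOF
find_valueless_keys "$sample"    # prints: 4: a1.sinks.k1.hdfs.rollSize
rm -f "$sample"
```

Against the real file, the call would be `find_valueless_keys conf/flume-conf-hdfs.properties`.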

5. Start the Flume agent a1

./flume-ng agent -c conf -n a1 -f ../conf/flume-conf-hdfs.properties -Dflume.root.logger=INFO,console

6. Create a file to generate the log to be collected

In the directory /home/flume/test, run the following command:

echo 'this is lys flume test'>flume.txt

The file flume.txt.ok is then generated in that directory.
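
The Flume user guide notes that files in a spooling directory must not be modified once they are placed there, so for anything larger than a one-line test it may be safer to write the file elsewhere and move it in when it is complete. The following is a sketch under that assumption: the function name `spool_put` and the staging directory are hypothetical, and the temporary directories stand in for /home/flume/test. The move is only a single rename when both directories are on the same filesystem.

```shell
# Hypothetical helper: write the content into a staging directory first,
# then move the finished file into the spool directory.
spool_put() {
    spool_dir=$1; staging_dir=$2; name=$3; content=$4
    printf '%s\n' "$content" > "$staging_dir/$name" &&
        mv "$staging_dir/$name" "$spool_dir/$name"
}

# Demonstration with temporary directories.
spool=$(mktemp -d)
staging=$(mktemp -d)
spool_put "$spool" "$staging" flume.txt 'this is lys flume test'
cat "$spool/flume.txt"
```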

Flume also prints the following log:

    18/09/28 22:33:23 INFO instrumentation.MonitoredCounterGroup: Component type: SINK, name: k1 started
    18/09/28 22:33:23 INFO source.SpoolDirectorySource: SpoolDirectorySource source starting with directory: /home/flume/test
    18/09/28 22:33:23 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SOURCE, name: r1: Successfully registered new MBean.
    18/09/28 22:33:23 INFO instrumentation.MonitoredCounterGroup: Component type: SOURCE, name: r1 started
    18/09/28 22:34:22 INFO avro.ReliableSpoolingFileEventReader: Last read took us just up to a file boundary. Rolling to the next file, if there is one.
    18/09/28 22:34:22 INFO avro.ReliableSpoolingFileEventReader: Preparing to move file /home/flume/test/flume.txt to /home/flume/test/flume.txt.ok
    18/09/28 22:34:25 INFO hdfs.HDFSDataStream: Serializer = TEXT, UseRawLocalFileSystem = false
    18/09/28 22:34:25 INFO hdfs.BucketWriter: Creating hdfs://localhost:9000/flume-dir/20180928223425/log.1538188465593.tmp
    18/09/28 22:34:37 INFO hdfs.BucketWriter: Closing hdfs://localhost:9000/flume-dir/20180928223425/log.1538188465593.tmp
    18/09/28 22:34:37 INFO hdfs.BucketWriter: Renaming hdfs://localhost:9000/flume-dir/20180928223425/log.1538188465593.tmp to hdfs://localhost:9000/flume-dir/20180928223425/log.1538188465593
    18/09/28 22:34:37 INFO hdfs.HDFSEventSink: Writer callback called

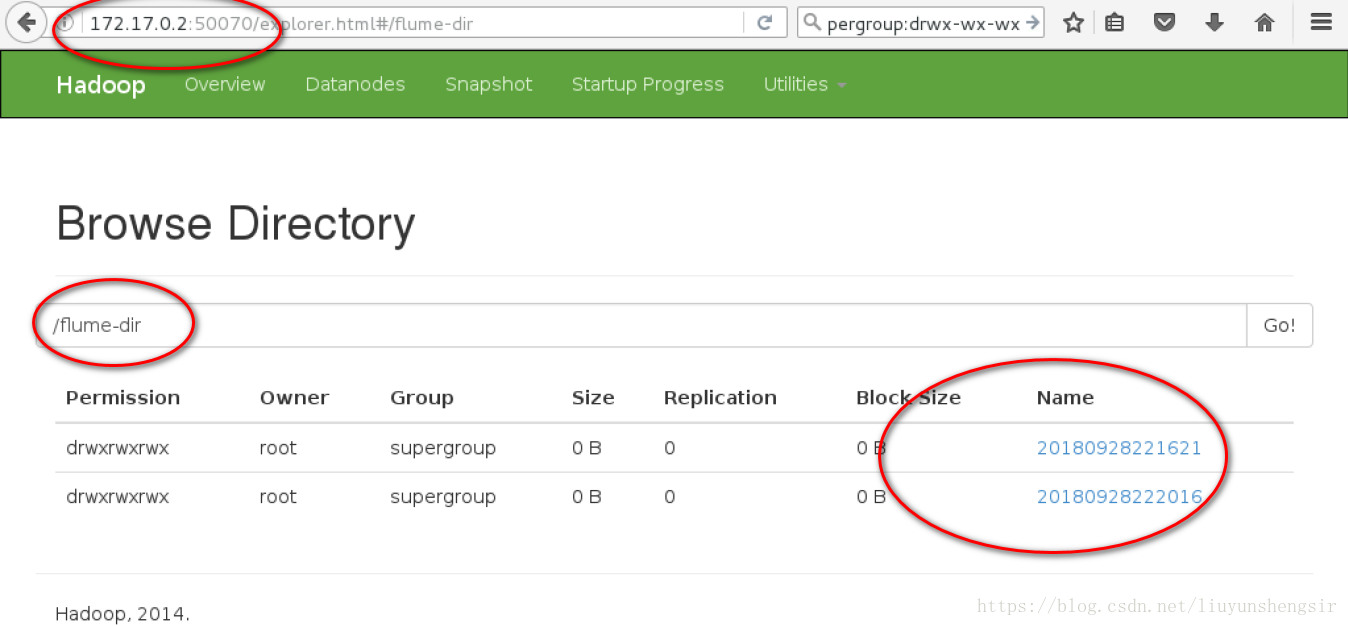

This indicates that the log was imported into HDFS successfully, and the file can be seen in HDFS.
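
To find the finished file without browsing HDFS by hand, the final path can be read from the BucketWriter `Renaming ... to ...` line in the Flume log. The sketch below assumes the agent's console output has been saved to a file; the function name `extract_hdfs_paths` is hypothetical, and the sample line is the one from the log output in this post.

```shell
# Hypothetical helper: print the destination path (last field) of every
# BucketWriter "Renaming <tmp> to <final>" line in a saved Flume log.
extract_hdfs_paths() {
    awk '/BucketWriter: Renaming / { print $NF }' "$1"
}

# Demonstration with the Renaming line from the log.
log=$(mktemp)
cat > "$log" <<'EOF'
18/09/28 22:34:37 INFO hdfs.BucketWriter: Renaming hdfs://localhost:9000/flume-dir/20180928223425/log.1538188465593.tmp to hdfs://localhost:9000/flume-dir/20180928223425/log.1538188465593
EOF
extract_hdfs_paths "$log"
rm -f "$log"
```

On a machine with the Hadoop client installed, each printed path can then be passed to `hdfs dfs -cat` to view the collected content.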