OpenCV兩種畸變校正模型原始碼分析以及CUDA實現

http://www.cnblogs.com/riddick/p/7811877.html

影象演算法中會經常用到攝像機的畸變校正,有必要總結分析OpenCV中畸變校正方法,其中包括普通針孔相機模型和魚眼相機模型fisheye兩種畸變校正方法。

普通相機模型畸變校正函式針對OpenCV中的cv::initUndistortRectifyMap(),魚眼相機模型畸變校正函式對應OpenCV中的cv::fisheye::initUndistortRectifyMap()。兩種方法算出對映Mapx和Mapy後,統一用cv::Remap()函式進行插值得到校正後的影象。

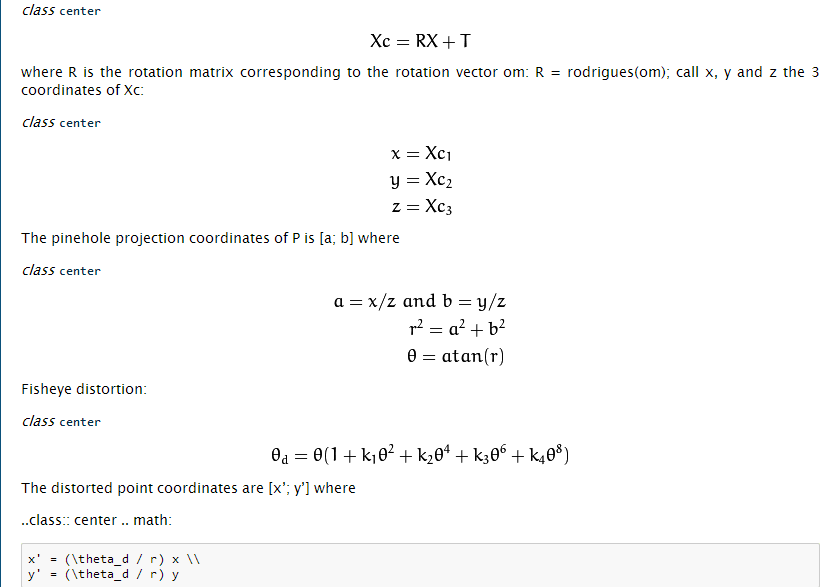

1. FishEye模型的畸變校正。

方便起見,直接貼出OpenCV原始碼,我在裡面加了註釋說明。建議參考OpenCV官方文件看畸變模型原理會更清楚:https://docs.opencv.org/3.0-beta/modules/calib3d/doc/camera_calibration_and_3d_reconstruction.html#fisheye-initundistortrectifymap

簡要流程就是:

1. 求內參矩陣的逆,由於攝像機座標系的三維點到二維影象平面,需要乘以旋轉矩陣R和內參矩陣K。那麼反向投影回去則是二維影象座標乘以 K*R的逆矩陣。

2. 將目標影象中的每一個畫素點座標(j,i),乘以1中求出的逆矩陣iR,轉換到攝像機座標系(_x,_y,_w),並歸一化得到z=1平面下的三維座標(x,y,1);

3.求出平面模型下畫素點對應魚眼半球模型下的極座標(r, theta)。

4.利用魚眼畸變模型求出擁有畸變時畫素點對應的theta_d。

5.利用求出的theta_d值將三維座標點重投影到二維影象平面得到(u,v),(u,v)即為目標影象對應的畸變影象中畫素點座標

6.使用cv::Remap()函式,根據mapx,mapy取出對應座標位置的畫素值賦值給目標影象,一般採用雙線性插值法,得到畸變校正後的目標影象。

#include <opencv2\opencv.hpp>

void cv::fisheye::initUndistortRectifyMap( InputArray K, InputArray D, InputArray R, InputArray P,

const cv::Size& size, int m1type, OutputArray map1, OutputArray map2 )

{

CV_Assert( m1type == CV_16SC2 || m1type == CV_32F || m1type <=0 );

map1.create( size, m1type <= 0 ? CV_16SC2 : m1type );

map2.create( size, map1.type() == CV_16SC2 ? CV_16UC1 : CV_32F );

CV_Assert((K.depth() == CV_32F || K.depth() == CV_64F) && (D.depth() == CV_32F || D.depth() == CV_64F));

CV_Assert((P.empty() || P.depth() == CV_32F || P.depth() == CV_64F) && (R.empty() || R.depth() == CV_32F || R.depth() == CV_64F));

CV_Assert(K.size() == Size(3, 3) && (D.empty() || D.total() == 4));

CV_Assert(R.empty() || R.size() == Size(3, 3) || R.total() * R.channels() == 3);

CV_Assert(P.empty() || P.size() == Size(3, 3) || P.size() == Size(4, 3));

//從內參矩陣K中取出歸一化焦距fx,fy; cx,cy

cv::Vec2d f, c;

if (K.depth() == CV_32F)

{

Matx33f camMat = K.getMat();

f = Vec2f(camMat(0, 0), camMat(1, 1));

c = Vec2f(camMat(0, 2), camMat(1, 2));

}

else

{

Matx33d camMat = K.getMat();

f = Vec2d(camMat(0, 0), camMat(1, 1));

c = Vec2d(camMat(0, 2), camMat(1, 2));

}

//從畸變係數矩陣D中取出畸變係數k1,k2,k3,k4

Vec4d k = Vec4d::all(0);

if (!D.empty())

k = D.depth() == CV_32F ? (Vec4d)*D.getMat().ptr<Vec4f>(): *D.getMat().ptr<Vec4d>();

//旋轉矩陣RR轉換資料型別為CV_64F,如果不需要旋轉,則RR為單位陣

cv::Matx33d RR = cv::Matx33d::eye();

if (!R.empty() && R.total() * R.channels() == 3)

{

cv::Vec3d rvec;

R.getMat().convertTo(rvec, CV_64F);

RR = Affine3d(rvec).rotation();

}

else if (!R.empty() && R.size() == Size(3, 3))

R.getMat().convertTo(RR, CV_64F);

//新的內參矩陣PP轉換資料型別為CV_64F

cv::Matx33d PP = cv::Matx33d::eye();

if (!P.empty())

P.getMat().colRange(0, 3).convertTo(PP, CV_64F);

//關鍵一步:新的內參矩陣*旋轉矩陣,然後利用SVD分解求出逆矩陣iR,後面用到

cv::Matx33d iR = (PP * RR).inv(cv::DECOMP_SVD);

//反向對映,遍歷目標影象所有畫素位置,找到畸變影象中對應位置座標(u,v),並分別儲存座標(u,v)到mapx和mapy中

for( int i = 0; i < size.height; ++i)

{

float* m1f = map1.getMat().ptr<float>(i);

float* m2f = map2.getMat().ptr<float>(i);

short* m1 = (short*)m1f;

ushort* m2 = (ushort*)m2f;

//二維影象平面座標系->攝像機座標系

double _x = i*iR(0, 1) + iR(0, 2),

_y = i*iR(1, 1) + iR(1, 2),

_w = i*iR(2, 1) + iR(2, 2);

for( int j = 0; j < size.width; ++j)

{

//歸一化攝像機座標系,相當於假定在Z=1平面上

double x = _x/_w, y = _y/_w;

//求魚眼半球體截面半徑r

double r = sqrt(x*x + y*y);

//求魚眼半球面上一點與光心的連線和光軸的夾角Theta

double theta = atan(r);

//畸變模型求出theta_d,相當於有畸變的角度值

double theta2 = theta*theta, theta4 = theta2*theta2, theta6 = theta4*theta2, theta8 = theta4*theta4;

double theta_d = theta * (1 + k[0]*theta2 + k[1]*theta4 + k[2]*theta6 + k[3]*theta8);

//利用有畸變的Theta值,將攝像機座標系下的歸一化三維座標,重投影到二維影象平面,得到(j,i)對應畸變影象中的(u,v)

double scale = (r == 0) ? 1.0 : theta_d / r;

double u = f[0]*x*scale + c[0];

double v = f[1]*y*scale + c[1];

//儲存(u,v)座標到mapx,mapy

if( m1type == CV_16SC2 )

{

int iu = cv::saturate_cast<int>(u*cv::INTER_TAB_SIZE);

int iv = cv::saturate_cast<int>(v*cv::INTER_TAB_SIZE);

m1[j*2+0] = (short)(iu >> cv::INTER_BITS);

m1[j*2+1] = (short)(iv >> cv::INTER_BITS);

m2[j] = (ushort)((iv & (cv::INTER_TAB_SIZE-1))*cv::INTER_TAB_SIZE + (iu & (cv::INTER_TAB_SIZE-1)));

}

else if( m1type == CV_32FC1 )

{

m1f[j] = (float)u;

m2f[j] = (float)v;

}

//這三條語句是上面 ”//二維影象平面座標系->攝像機座標系“的一部分,是矩陣iR的第一列,這樣寫能夠簡化計算

_x += iR(0, 0);

_y += iR(1, 0);

_w += iR(2, 0);

}

}

}

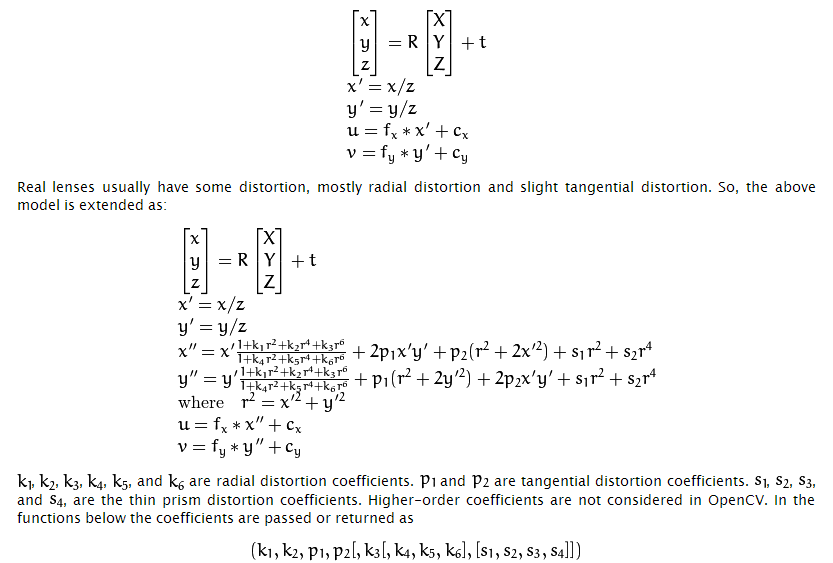

2.普通相機模型的畸變校正

同樣建議參考OpenCV官方文件閱讀程式碼 https://docs.opencv.org/3.0-beta/modules/calib3d/doc/camera_calibration_and_3d_reconstruction.html# 。

主要流程和上面Fisheye模型差不多,只有第4部分的畸變模型不一樣,普通相機的畸變模型如下:

同樣把原始碼貼上,並加上註解:

#include <opencv2\opencv.hpp>

void cv::initUndistortRectifyMap( InputArray _cameraMatrix, InputArray _distCoeffs,

InputArray _matR, InputArray _newCameraMatrix,

Size size, int m1type, OutputArray _map1, OutputArray _map2 )

{

Mat cameraMatrix = _cameraMatrix.getMat(), distCoeffs = _distCoeffs.getMat();

Mat matR = _matR.getMat(), newCameraMatrix = _newCameraMatrix.getMat();

if( m1type <= 0 )

m1type = CV_16SC2;

CV_Assert( m1type == CV_16SC2 || m1type == CV_32FC1 || m1type == CV_32FC2 );

_map1.create( size, m1type );

Mat map1 = _map1.getMat(), map2;

if( m1type != CV_32FC2 )

{

_map2.create( size, m1type == CV_16SC2 ? CV_16UC1 : CV_32FC1 );

map2 = _map2.getMat();

}

else

_map2.release();

Mat_<double> R = Mat_<double>::eye(3, 3);

Mat_<double> A = Mat_<double>(cameraMatrix), Ar;

if( !newCameraMatrix.empty() )

Ar = Mat_<double>(newCameraMatrix);

else

Ar = getDefaultNewCameraMatrix( A, size, true );

if( !matR.empty() )

R = Mat_<double>(matR);

if( !distCoeffs.empty() )

distCoeffs = Mat_<double>(distCoeffs);

else

{

distCoeffs.create(14, 1, CV_64F);

distCoeffs = 0.;

}

CV_Assert( A.size() == Size(3,3) && A.size() == R.size() );

CV_Assert( Ar.size() == Size(3,3) || Ar.size() == Size(4, 3));

//LU分解求新的內參矩陣Ar與旋轉矩陣R乘積的逆矩陣iR

Mat_<double> iR = (Ar.colRange(0,3)*R).inv(DECOMP_LU);

const double* ir = &iR(0,0);

//從舊的內參矩陣中取出光心位置u0,v0,和歸一化焦距fx,fy

double u0 = A(0, 2), v0 = A(1, 2);

double fx = A(0, 0), fy = A(1, 1);

//尼瑪14個畸變係數,不過大多用到的只有(k1,k2,p1,p2),最多加一個k3,用不到的置為0

CV_Assert( distCoeffs.size() == Size(1, 4) || distCoeffs.size() == Size(4, 1) ||

distCoeffs.size() == Size(1, 5) || distCoeffs.size() == Size(5, 1) ||

distCoeffs.size() == Size(1, 8) || distCoeffs.size() == Size(8, 1) ||

distCoeffs.size() == Size(1, 12) || distCoeffs.size() == Size(12, 1) ||

distCoeffs.size() == Size(1, 14) || distCoeffs.size() == Size(14, 1));

if( distCoeffs.rows != 1 && !distCoeffs.isContinuous() )

distCoeffs = distCoeffs.t();

const double* const distPtr = distCoeffs.ptr<double>();

double k1 = distPtr[0];

double k2 = distPtr[1];

double p1 = distPtr[2];

double p2 = distPtr[3];

double k3 = distCoeffs.cols + distCoeffs.rows - 1 >= 5 ? distPtr[4] : 0.;

double k4 = distCoeffs.cols + distCoeffs.rows - 1 >= 8 ? distPtr[5] : 0.;

double k5 = distCoeffs.cols + distCoeffs.rows - 1 >= 8 ? distPtr[6] : 0.;

double k6 = distCoeffs.cols + distCoeffs.rows - 1 >= 8 ? distPtr[7] : 0.;

double s1 = distCoeffs.cols + distCoeffs.rows - 1 >= 12 ? distPtr[8] : 0.;

double s2 = distCoeffs.cols + distCoeffs.rows - 1 >= 12 ? distPtr[9] : 0.;

double s3 = distCoeffs.cols + distCoeffs.rows - 1 >= 12 ? distPtr[10] : 0.;

double s4 = distCoeffs.cols + distCoeffs.rows - 1 >= 12 ? distPtr[11] : 0.;

double tauX = distCoeffs.cols + distCoeffs.rows - 1 >= 14 ? distPtr[12] : 0.;

double tauY = distCoeffs.cols + distCoeffs.rows - 1 >= 14 ? distPtr[13] : 0.;

//tauX,tauY這個是什麼梯形畸變,用不到的話matTilt為單位陣

// Matrix for trapezoidal distortion of tilted image sensor

cv::Matx33d matTilt = cv::Matx33d::eye();

cv::detail::computeTiltProjectionMatrix(tauX, tauY, &matTilt);

for( int i = 0; i < size.height; i++ )

{

float* m1f = map1.ptr<float>(i);

float* m2f = map2.empty() ? 0 : map2.ptr<float>(i);

short* m1 = (short*)m1f;

ushort* m2 = (ushort*)m2f;

//利用逆矩陣iR將二維影象座標(j,i)轉換到攝像機座標系(_x,_y,_w)

double _x = i*ir[1] + ir[2], _y = i*ir[4] + ir[5], _w = i*ir[7] + ir[8];

for( int j = 0; j < size.width; j++, _x += ir[0], _y += ir[3], _w += ir[6] )

{

//攝像機座標系歸一化,令Z=1平面

double w = 1./_w, x = _x*w, y = _y*w;

//這一部分請看OpenCV官方文件,畸變模型部分

double x2 = x*x, y2 = y*y;

double r2 = x2 + y2, _2xy = 2*x*y;

double kr = (1 + ((k3*r2 + k2)*r2 + k1)*r2)/(1 + ((k6*r2 + k5)*r2 + k4)*r2);

double xd = (x*kr + p1*_2xy + p2*(r2 + 2*x2) + s1*r2+s2*r2*r2);

double yd = (y*kr + p1*(r2 + 2*y2) + p2*_2xy + s3*r2+s4*r2*r2);

//根據求取的xd,yd將三維座標重投影到二維畸變影象座標(u,v)

cv::Vec3d vecTilt = matTilt*cv::Vec3d(xd, yd, 1);

double invProj = vecTilt(2) ? 1./vecTilt(2) : 1;

double u = fx*invProj*vecTilt(0) + u0;

double v = fy*invProj*vecTilt(1) + v0;

//儲存u,v的值到Mapx,Mapy中

if( m1type == CV_16SC2 )

{

int iu = saturate_cast<int>(u*INTER_TAB_SIZE);

int iv = saturate_cast<int>(v*INTER_TAB_SIZE);

m1[j*2] = (short)(iu >> INTER_BITS);

m1[j*2+1] = (short)(iv >> INTER_BITS);

m2[j] = (ushort)((iv & (INTER_TAB_SIZE-1))*INTER_TAB_SIZE + (iu & (INTER_TAB_SIZE-1)));

}

else if( m1type == CV_32FC1 )

{

m1f[j] = (float)u;

m2f[j] = (float)v;

}

else

{

m1f[j*2] = (float)u;

m1f[j*2+1] = (float)v;

}

}

}

}

如有錯誤,望不吝賜教!

另附上CUDA實現兩種畸變校正方法的程式碼,放在我的碼雲上:https://gitee.com/rxdj/camera-calibration.git。見cudaUndistort中的兩個.cu檔案