Classic Crawler: Scraping Baidu Stocks

阿新 • Published: 2018-12-19

Keywords: Baidu Stocks, crawler, file storage

Introduction

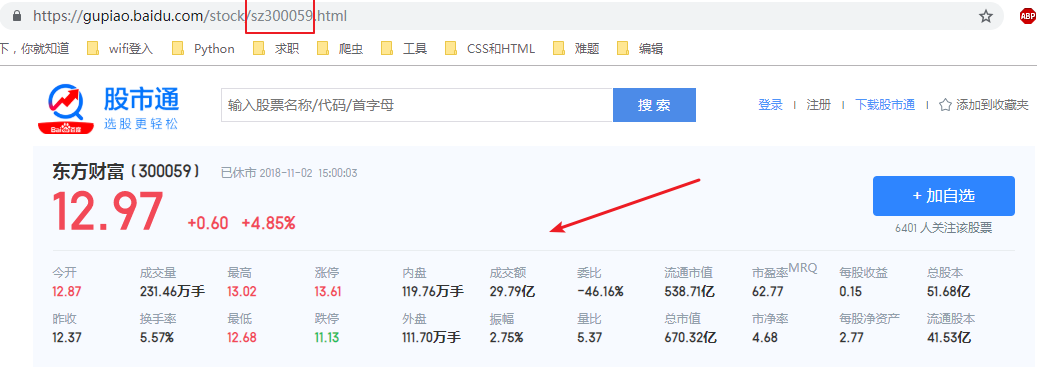

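A Baidu Stocks URL has the form https://gupiao.baidu.com/stock/ + sz300059 + .html. Codes starting with sh denote stocks listed on the Shanghai Stock Exchange, and codes starting with sz denote stocks listed on the Shenzhen Stock Exchange.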
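
The URL pattern above can be written as a small helper. This is only a minimal sketch: the names `BASE_URL`, `EXCHANGES`, `stock_url`, and `exchange_of` are illustrative and do not appear in the original script.

```python
BASE_URL = "https://gupiao.baidu.com/stock/"

# Prefix-to-exchange mapping, as described in the text.
EXCHANGES = {
    "sh": "Shanghai Stock Exchange",
    "sz": "Shenzhen Stock Exchange",
}


def stock_url(code):
    """Build the Baidu Stocks page URL for a code such as 'sz300059'."""
    return BASE_URL + code + ".html"


def exchange_of(code):
    """Return the exchange implied by the two-letter prefix of the code."""
    return EXCHANGES[code[:2]]


print(stock_url("sz300059"))
print(exchange_of("sz300059"))
```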

The first step is to scrape stock codes such as sz300059 from Eastmoney (東方財富網):

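HTML downloader

    def getHTML(url):
        # Return the page text, or an empty string if the request fails.
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()
            r.encoding = r.apparent_encoding
            return r.text
        except requests.RequestException:
            return ""

URL generator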

    def getStockURL(nameurl, urllist):
        # Append the Baidu Stocks URL of every stock code found at nameurl.
        html = getHTML(nameurl)  # call the HTML downloader
        name = re.findall(r'[s][hz]\d{6}', html)
        for item in name:
            urllist.append("https://gupiao.baidu.com/stock/%s.html" % item)

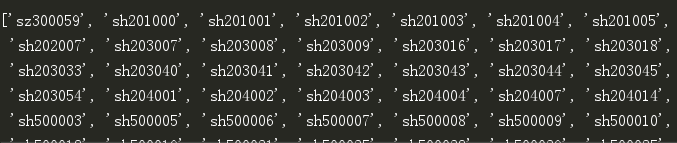

- To collect stock codes such as sz300059 from Eastmoney, we use the re library and match them with the regular expression [s][hz]\d{6}.
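
To see what the regular expression picks up, here is a small sketch run against a made-up HTML fragment. The markup in `sample_html` is an assumption for illustration only, and the real listing page may look different. The deduplication step is also an addition: `re.findall` returns every match, so a code that appears twice on the page is returned twice.

```python
import re

# Hypothetical fragment of a listing page; the real markup may differ.
sample_html = """
<li><a href="http://quote.eastmoney.com/sh600000.html">(600000)</a></li>
<li><a href="http://quote.eastmoney.com/sz300059.html">(300059)</a></li>
<li><a href="http://quote.eastmoney.com/sz300059.html">(300059)</a></li>
"""

# 's', then 'h' or 'z', followed by six digits.
pattern = re.compile(r"[s][hz]\d{6}")

codes = pattern.findall(sample_html)

# findall keeps duplicates; dict.fromkeys drops them while preserving order.
unique_codes = list(dict.fromkeys(codes))

print(codes)
print(unique_codes)
```

Whether to deduplicate is a design choice: without it, the same stock page may be downloaded more than once.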

Fetching and saving the stock information

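    def getStockInfo(urllist, fpath):
        for url in urllist:
            html = getHTML(url)  # call the HTML downloader
            soup = BeautifulSoup(html, "html.parser")
            try:
                info = {}
                title = soup.find_all('a', attrs={'class': 'bets-name'})[0]
                info.update({'股票名稱': title.text.split()[0]})  # stock name
                keylist = soup.find_all('dt')
                valuelist = soup.find_all('dd')
                for j in range(len(keylist)):
                    info[keylist[j].text] = valuelist[j].text
                with open(fpath, 'a', encoding='utf-8') as f:
                    f.write(str(info) + '\n')
            except Exception:
                traceback.print_exc()

- soup.find('Tag') returns a bs4.element.Tag object.
- soup.find_all('Tag') returns a bs4.element.ResultSet object.
- soup.find('Tag').children returns an iterator over the tag's direct children.
- The print_exc() function of the traceback library prints the traceback of the exception currently being handled.
- More information on BeautifulSoup: click here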
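
The note about the traceback library can be tried out in isolation. The sketch below uses only the standard library; `first_item` is a hypothetical stand-in for indexing an empty result list with `[0]`. It uses `traceback.format_exc()`, which returns as a string the same text that `print_exc()` writes to standard error.

```python
import traceback


def first_item(items):
    """Return the first element; raises IndexError on an empty list."""
    return items[0]


try:
    first_item([])  # indexing an empty list, like [0] on an empty result
except IndexError:
    # print_exc() would write the traceback to stderr;
    # format_exc() returns the same text as a string instead.
    report = traceback.format_exc()

# The last line of the traceback names the exception type and message.
print(report.splitlines()[-1])
```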

To make the program keep printing its current progress, we can modify this code as follows:

    def getStockInfo(urllist, fpath):
        count = 0
        for url in urllist:
            html = getHTML(url)  # call the HTML downloader
            soup = BeautifulSoup(html, "html.parser")
            try:
                info = {}
                title = soup.find_all('a', attrs={'class': 'bets-name'})[0]
                info.update({'股票名稱': title.text.split()[0]})  # stock name
                keylist = soup.find_all('dt')
                valuelist = soup.find_all('dd')
                for j in range(len(keylist)):
                    info[keylist[j].text] = valuelist[j].text
                with open(fpath, 'a', encoding='utf-8') as f:
                    f.write(str(info) + '\n')
                count = count + 1
                # '\r' returns to the start of the line so the progress is overwritten in place.
                print("\rProgress: {:.2f}%".format(count * 100 / len(urllist)), end="")
            except Exception:
                # A page that fails to parse still counts towards the progress.
                count = count + 1
                print("\rProgress: {:.2f}%".format(count * 100 / len(urllist)), end="")
                continue
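
The progress display relies on the carriage return `\r`, which moves the cursor back to the start of the line so that each message overwrites the previous one. A minimal standalone sketch follows. The helper `progress_line` and the five-item loop are illustrative assumptions, and `time.sleep` stands in for downloading a page.

```python
import sys
import time


def progress_line(done, total):
    """Format a progress message that overwrites the current terminal line."""
    # '\r' moves the cursor to the start of the line without a newline.
    return "\rProgress: {:.2f}%".format(done * 100 / total)


total = 5  # hypothetical number of URLs
for done in range(1, total + 1):
    time.sleep(0.01)  # stand-in for downloading and parsing one page
    sys.stdout.write(progress_line(done, total))
    sys.stdout.flush()  # make the update visible immediately
print()  # finish with a newline once the loop is done
```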

Conclusion

In the end, the stock information is saved to BaiduStockInfo.txt on drive D.
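
Because each record is written with `str(info)`, every line of the file is a Python dict literal. One possible way to load the file back is sketched below under that assumption. The two records and the temporary file path are made up for illustration. `ast.literal_eval` parses literals without executing arbitrary code.

```python
import ast
import os
import tempfile

# Two hypothetical records, written one dict per line with str(), as in getStockInfo.
records = [
    {"name": "Example A", "open": "10.00"},
    {"name": "Example B", "open": "20.00"},
]

path = os.path.join(tempfile.mkdtemp(), "BaiduStockInfo.txt")
with open(path, "a", encoding="utf-8") as f:
    for record in records:
        f.write(str(record) + "\n")

# Each non-empty line is a dict literal, so literal_eval can parse it.
with open(path, encoding="utf-8") as f:
    loaded = [ast.literal_eval(line) for line in f if line.strip()]

print(loaded)
```

Writing each record with `json.dumps(info, ensure_ascii=False)` instead would make the file readable from other languages as well, at the cost of changing the output format.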

Full code

    import traceback
    import re

    import requests
    from bs4 import BeautifulSoup


    def getHTML(url):
        # Return the page text, or an empty string if the request fails.
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()
            r.encoding = r.apparent_encoding
            return r.text
        except requests.RequestException:
            return ""


    def getStockURL(nameurl, urllist):
        # Append the Baidu Stocks URL of every stock code found at nameurl.
        html = getHTML(nameurl)  # call the HTML downloader
        name = re.findall(r'[s][hz]\d{6}', html)
        for item in name:
            urllist.append("https://gupiao.baidu.com/stock/%s.html" % item)


    def getStockInfo(urllist, fpath):
        count = 0
        for url in urllist:
            html = getHTML(url)  # call the HTML downloader
            soup = BeautifulSoup(html, "html.parser")
            try:
                info = {}
                title = soup.find_all('a', attrs={'class': 'bets-name'})[0]
                info.update({'股票名稱': title.text.split()[0]})  # stock name
                keylist = soup.find_all('dt')
                valuelist = soup.find_all('dd')
                for j in range(len(keylist)):
                    info[keylist[j].text] = valuelist[j].text
                with open(fpath, 'a', encoding='utf-8') as f:
                    f.write(str(info) + '\n')
                count = count + 1
                print("\rProgress: {:.2f}%".format(count * 100 / len(urllist)), end="")
            except Exception:
                # A page that fails to parse still counts towards the progress.
                count = count + 1
                print("\rProgress: {:.2f}%".format(count * 100 / len(urllist)), end="")
                continue


    def main():
        urllist = []
        nameurl = "http://quote.eastmoney.com/stocklist.html"
        output_file = 'D:/BaiduStockInfo.txt'  # output path
        getStockURL(nameurl, urllist)
        getStockInfo(urllist, output_file)


    if __name__ == "__main__":
        main()

Postscript

You are welcome to scan the QR code and follow my official account, 爬蟲小棧, a small place where we improve together.