hadoop zookeeper hbase spark phoenix (HA)搭建過程

環境介紹:

系統:centos7

軟體包:

apache-phoenix-4.14.0-HBase-1.4-bin.tar.gz 下載連結:http://mirror.bit.edu.cn/apache/phoenix/apache-phoenix-4.14.1-HBase-1.4/bin/apache-phoenix-4.14.1-HBase-1.4-bin.tar.gz

hadoop-3.1.1.tar.gz 下載連結:http://mirror.bit.edu.cn/apache/hadoop/core/hadoop-3.1.1/hadoop-3.1.1.tar.gz

hbase-1.4.8-bin.tar.gz 下載連結:

jdk-8u181-linux-x64.tar.gz 下載連結:https://download.oracle.com/otn-pub/java/jdk/8u191-b12/2787e4a523244c269598db4e85c51e0c/jdk-8u191-linux-x64.tar.gz

spark-2.1.0-bin-hadoop2.7.tgz 下載連結:http://mirror.bit.edu.cn/apache/spark/spark-2.1.3/spark-2.1.3-bin-hadoop2.7.tgz

zookeeper-3.4.13.tar.gz 下載連結:http://mirror.bit.edu.cn/apache/zookeeper/zookeeper-3.4.13/zookeeper-3.4.13.tar.gz

注:連結版本跟我現在使用的版本可能會有稍微差別,不過不影響,只是小版本號差那麼一點,大版本號號還是一樣的,這裡使用最新的hadoop3.11版本。

資源關係:這裡使用的主機名跟我待會使用的主機名不一樣,畢竟生產環境,叢集配置涉及到主機名和ip都會相應的變化。但是效果是一樣的。

| 主機名 | ip | |||||||||||

| zk1 | 10.62.2.1 | jdk8 | zookeeper | namenode1 | journalnode1 | resourcemanager1 | Hmaster | |||||

| zk2 | 10.62.2.2 | jdk8 | zookeeper | namenode2 | journalnode2 | resourcemanager2 | Hmaster-back | |||||

| zk3 | 10.62.2.3 | jdk8 | zookeeper | namenode3 | journalnode3 | spark-master | phoenix | |||||

| yt1 | 10.62.3.1 | jdk8 | datanode1 | HRegenServer1 | ||||||||

| yt2 | 10.62.3.2 | jdk8 | datanode2 | HRegenServer2 | ||||||||

| yt3 | 10.62.3.3 | jdk8 | datanode3 | HRegenServer3 | ||||||||

| yt4 | 10.62.3.4 | jdk8 | datanode4 | |||||||||

| yt5 | 10.62.3.5 | jdk8 | datanode5 | spark-work1 | ||||||||

| yt6 | 10.62.3.6 | jdk8 | datanode6 | spark-work2 | ||||||||

| yt7 | 10.62.3.7 | jdk8 | datanode7 | spark-work3 | ||||||||

| yt8 | 10.62.3.8 | jdk8 | datanode8 | spark-work4 | ||||||||

| yt9 | 10.62.3.9 | jdk8 | datanode9 | nodemanager1 | ||||||||

| yt10 | 10.62.3.10 | jdk8 | datanode10 | nodemanager2 |

前期準備:1、配置ip,關閉防火牆或者設定相應的策略,關閉selinux,設定主機之間相互ssh免密,建立使用者等,這裡不在多說。

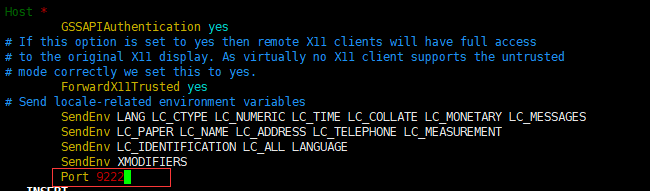

配置ssh免密需要注意一點:如果你的主機之間不是預設的22埠,那麼在設定ssh免密的時候需要修改/etc/ssh/ssh_config配置檔案

$ vim /etc/ssh/ssh_config

在檔案最後新增

Port ssh的埠

比如我的ssh埠是9222

然後重新啟動ssh服務

一、zookeeper安裝

1、解壓zookeeper包到相應的目錄,進入到解壓的目錄

1、解壓

$ tar zxvf zookeeper-3.4.13.tar.gz -C /data1/hadoop/hadoop/

$ mv zookeeper-3.4.13/ zookeeper

2、修改環境變數:

在~/.bashrc檔案新增

export ZOOK=/data1/hadoop/zookeeper-3.4.13/

export PATH=$PATH:${ZOOK}/bin

$ source ~/.bashrc

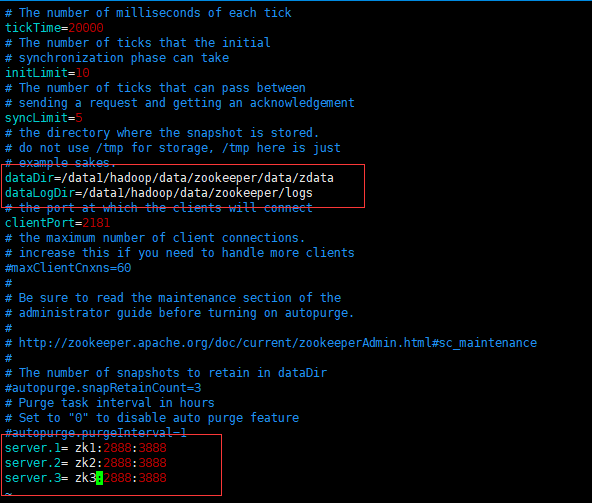

3、修改配置檔案

$ cd zookeeper/conf

$ cp zoo_sample.cfg zoo.cfg

修改成如下:

如果要自定義日誌檔案,僅僅在改配置檔案修改並不好使,還得修改./bin/zkEnv.sh 這個檔案,修改如下:

4、建立資料目錄和日誌目錄

$ mkdir /data1/hadoop/data/zookeeper/data/zdata -p

$ mkdir /data1/hadoop/data/zookeeper/logs -p

5、建立myid檔案

$ echo 1 > /data1/hadoop/data/zookeeper/data/zdata/myid

6、分發到另外的兩臺機器

$ scp -r zk2:/data1/hadoop/data/zookeeper/ (注意,這裡已經做完免密了,不需要再輸入密碼)

$ scp -r zk3:/data1/hadoop/data/zookeeper/

7、當然,還得去zk2上執行 $ echo 2 > /data1/hadoop/data/zookeeper/data/zdata/myid

去zk3上執行 $ echo 3 > /data1/hadoop/data/zookeeper/data/zdata/myid

8、接下來分別到這三臺機器執行

$ zkServer.sh start (我已經添加了環境變數,如果未新增需要到bin目錄下執行該指令碼檔案)

如果不出意外,執行成功是最好的

10、檢視狀態:zkServer.sh status

如果最後狀態是一個leader,兩個follower就代表成功。

11、啟動失敗原因(有可能全部沒有啟動成功,有可能啟動其中一個或者兩個)

(1)、防火牆沒有停掉或者策略配置有問題

(2)、配置檔案錯誤,尤其是myid檔案

(3)、selinux沒設定成disabled或者permissive

(4)、埠被佔用

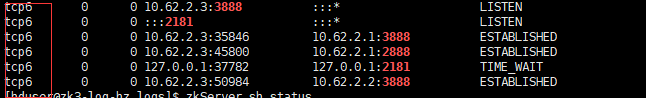

(5)、啟動以後監聽的是ipv6地址,如下:(這是最坑的,我在這裡卡了好久)

正常情況下應該是tcp不是tcp6

解決辦法就是關閉ipv6,然後重新啟動zookeeper。

2、配置jdk,解壓然後配置~/.source檔案就可以,不在多說

3、hadoop(HA)(zk1機器操作)

1、解壓

$ tar zxvf hadoop-3.1.1.tar.gz -C /data1/hadoop/

$ mv hadoop-3.1.1 hadoop

$ cd /data1/hadoop/hadoop/etc/hadoop/

2、配置環境變數~/.source

export HADOOP_HOME=/data1/hadoop/hadoop

export PATH=$PATH:${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin

3、配置hadoop-env.sh,新增Java環境變數,檔案最後新增:

export JAVA_HOME=/usr/local/jdk/

4、配置core-site.xml,如下:(具體配置還得根據自己實際情況,這裡可以做一個參考)

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://GD-AI</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/data/hadoop/tmp/</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>zk1:2181,zk2:2181,zk3:2181</value>

</property>

<property>

<name>fs.trash.interval</name>

<value>1440</value>

</property>

</configuration>

5、配置hdfs-site.xml,如下:

<configuration>

<property>

<name>dfs.nameservices</name>

<value>GD-AI</value>

</property>

<property>

<name>dfs.ha.namenodes.GD-AI</name>

<value>nna,nns,nnj</value>

</property>

<property>

<name>dfs.namenode.rpc-address.GD-AI.nna</name>

<value>zk1:9000</value>

</property>

<property>

<name>dfs.namenode.rpc-address.GD-AI.nns</name>

<value>zk2:9000</value>

</property>

<property>

<name>dfs.namenode.rpc-address.GD-AI.nnj</name>

<value>zk3:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.GD-AI.nna</name>

<value>zk1:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.GD-AI.nns</name>

<value>zk2:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.GD-AI.nnj</name>

<value>zk3:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://zk1:8485;zk2:8485;zk3:8485/GD-AI</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.GD-AI</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence

shell(/bin/true)

</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hduser/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/data1/hadoop/data/tmp/journal</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/data1/hadoop/data/dfs/nn</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/data2/hadoop/data/dn,/data3/hadoop/data/dn,/data4/hadoop/data/dn,/data5/hadoop/data/dn,/data6/hadoop/data/dn,/data7/hadoop/data/dn,/data8/hadoop/data/dn,/data9/hadoop/data/dn,/data10/hadoop/data/dn</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.journalnode.http-address</name>

<value>0.0.0.0:8480</value>

</property>

<property>

<name>dfs.journalnode.rpc-address</name>

<value>0.0.0.0:8485</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>zk1:2181,zk2:2181,zk3:2181</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.acls.enabled</name>

<value>true</value>

</property>

</configuration>

6、配置 yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>2000</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>zk1:2181,zk2:2181,zk3:2181</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>zk1</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>zk2</value>

</property>

<property>

<name>yarn.resourcemanager.ha.id</name>

<value>rm1</value> <!-- 這個值需要注意,分發到另外一臺resourcemanager時,也就是resourcemanager備節點時需要修改成rm2(也許你那裡不是) -->

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.zk-state-store.address</name>

<value>zk1:2181,zk2:2181,zk3:2181</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>zk1:2181,zk2:2181,zk3:2181</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>GD-yarn</value>

</property>

<property>

<name>yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms</name>

<value>5000</value>

</property>

<!-- 如下標紅的在拷貝到另外一臺resourcemanager時,需要修改成對應的主機名-->

<roperty>

<name>yarn.resourcemanager.address.rm1</name>

<value>zk1:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>zk1:8030</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>zk1:8088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm1</name>

<value>zk1:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm1</name>

<value>zk1:8033</value>

</property>

<property>

<name>yarn.resourcemanager.ha.admin.address.rm1</name>

<value>zk1:23142</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/data1/hadoop/data/nm</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/data1/hadoop/log/yarn</value>

</property>

<property>

<name>mapreduce.shuffle.port</name>

<value>23080</value>

</property>

<property>

<name>yarn.client.failover-proxy-provider</name>

<value>org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.zk-base-path</name>

<value>/yarn-leader-election</value>

</property>

<property>

<!-- 以下資源情況請根據自己環境做調整 -->

<name>yarn.nodemanager.vcores-pcores-ratio</name>

<value>1</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>20</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>196608</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>196608</value>

</property>

</configuration>

7、配置mapred-site.xml,如下:資源情況根據自己環境做調整

<configuration>

<property>

<name>mapreduce.map.memory.mb</name>

<value>5120</value>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx4096M</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>10240</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx8196M</value>

</property>

<property>

<name>mapreduce.task.io.sort.mb</name>

<value>512</value>

</property>

<property>

<name>mapreduce.task.io.sort.factor</name>

<value>100</value>

</property>

<property>

<name>mapreduce.tasktracker.http.threads</name>

<value>100</value>

</property>

<property>

<name>mapreduce.reduce.shuffle.parallelcopies</name>

<value>100</value>

</property>

<property>

<name>mapreduce.map.output.compress</name>

<value>true</value>

</property>

<property>

<name>mapreduce.map.output.compress.codec</name>

<value>org.apache.hadoop.io.compress.DefaultCodec</value>

</property>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobtracker.address</name>

<value>zk1:11211</value>

</property>

<property>

<name>mapreduce.job.queuename</name>

<value>hadoop</value>

</property>

8、配置capacity-scheduler.xml(自己測試可以不用配置),如下:

<configuration>

<property>

<name>yarn.scheduler.capacity.root.queues</name>

<value>default,hadoop,orc</value>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.capacity</name>

<value>0</value>

</property>

<property>

<name>yarn.scheduler.capacity.root.hadoop.capacity</name>

<value>65</value>

</property>

<property>

<name>yarn.scheduler.capacity.root.orc.capacity</name>

<value>35</value>

</property>

<property>

<name>yarn.scheduler.capacity.root.hadoop.user-limit-factor</name>

<value>1</value>

</property>

<property>

<name>yarn.scheduler.capacity.root.orc.user-limit-factor</name>

<value>1</value>

</property>

<property>

<name>yarn.scheduler.capacity.root.orc.maximum-capacity</name>

<value>100</value>

</property>

<property>

<name>yarn.scheduler.capacity.root.hadoop.maximum-capacity</name>

<value>100</value>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.acl_submit_applications</name>

<value>default</value>

</property>

<property>

<name>yarn.scheduler.capacity.root.hadoop.acl_submit_applications</name>

<value>hadoop</value>

</property>

<property>

<name>yarn.scheduler.capacity.root.hadoop.acl_administer_queue</name>

<value>hadoop</value>

</property>

</configuration>

9、配置workers檔案(注意:這是配置datanode節點的檔案,以前是slaves,現在3.x變成了workers檔案)

10、分發到所有的其他機器(注:整個叢集所有機器),具體情況具體分發

$ scp -r /data1/hadoop/hadoop 其他所有機器:/data1/hadoop

10、格式化與啟動

(1)、格式化zookeeper(zk1上格式化)

$ hdfs zkfc -formatZK

(2)、在zk這三臺機器啟動journalnode

$ hadoop-daemon.sh start journalnode

(3)、格式化namenode(zk1上格式化)

$ hdfs namenode -format

(4)、啟動namenode(zk1執行)

$ hadoop-daemon.sh start namenode

(5)、在zk2、zk3兩臺機器分別執行:

$ hdfs namenode -bootstrapStandby

(6)、啟動hdfs,執行命令 start-dfs.sh 因為我這裡yt*這些機器都需要啟動datanode,所以workers檔案如下:

yt1

yt2

yt3

yt4

yt5

yt6

yt7

yt8

yt9

yt10

(7)、啟動yarn,執行命令 start-yarn.sh ,因為我只啟動了最後兩條,所以workers檔案修改如下(只需要修改zk1就行,畢竟這是主節點)

yjt9

yjt10

(8)、檢視叢集狀態

$ hdfs haadmin -getAllServiceState (hdfs)

zk1:9000 active

zk2:9000 standby

zk3:9000 standby

$ yarn rmadmin -getAllServiceState (yarn)

zk1:8033 active

zk2:8033 standby

(9)、測試

kill掉活動的節點,看是否自動轉移。(這裡就不測試了)

4、hbase (HA)(zk2機器操作)

1、解壓

tar zxvf hbase-1.4.8-bin.tar.gz -C /data1/hadoop/

2、配置hbase-env.sh,新增環境變數如下:

export HADOOP_HOME=/data1/hadoop/hadoop

export JAVA_HOME=/usr/local/jdk

export HBASE_MANAGES_ZK=false <!--不使用自帶的zookeeper -->

3、配置hbase-site.xml,如下:(這裡只是最簡單的配置,不適合生成環境)

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://GD-AI/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>zk1:2181,zk2:2181,zk3:2181</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.master.port</name>

<value>60000</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/data1/hadoop/data/hbase/tmp/</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/data1/hadoop/data/zookeeper/data/</value>

</property>

</configuration>

或者:(生成環境)

<configuration>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/data2/hadoop/data/hbase/tmp/</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://GD-AI/hbase</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>zk1:2181,zk2:2181,zk3:2181</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/data2/hadoop/data/zookeeper/data/</value>

</property>

<property>

<name>hbase.zookeeper.property.tickTime</name>

<value>10000</value>

</property>

<property>

<name>zookeeper.znode.parent</name>

<value>/hbase</value>

</property>

<property>

<name>data.tx.timeout</name>

<value>1800</value>

</property>

<property>

<name>ipc.socket.timeout</name>

<value>18000000</value>

</property>

<property>

<name>hbase.regionserver.handler.count</name>

<value>60</value>

</property>

<property>

<name>hbase.master.maxclockskew</name>

<value>300000</value>

</property>

<property>

<name>hbase.client.scanner.timeout.period</name>

<value>1800000</value>

</property>

<property>

<name>hbase.rpc.timeout</name>

<value>1800000</value>

</property>

<property>

<name>hbase.client.operation.timeout</name>

<value>1800000</value>

</property>

<property>

<name>hbase.lease.recovery.timeout</name>

<value>3600000</value>

</property>

<property>

<name>hbase.lease.recovery.dfs.timeout</name>

<value>1800000</value>

</property>

<property>

<name>hbase.client.scanner.caching</name>

<value>1000</value>

</property>

<property>

<name>hbase.htable.threads.max</name>

<value>5000</value>

</property>

<property>

<name>hbase.regionserver.wal.codec</name>

<value>org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec</value>

</property>

<property>

<name>hbase.region.server.rpc.scheduler.factory.class</name>

<value>org.apache.hadoop.hbase.ipc.PhoenixRpcSchedulerFactory</value>

</property>

<property>

<name>hbase.rpc.controllerfactory.class</name>

<value>org.apache.hadoop.hbase.ipc.controller.ServerRpcControllerFactory</value>

</property>

<property>

<name>phoenix.query.timeoutMs</name>

<value>1800000</value>

</property>

<property>

<name>phoenix.rpc.timeout</name>

<value>1800000</value>

</property>

<property>

<name>phoenix.coprocessor.maxServerCacheTimeToLiveMs</name>

<value>1800000</value>

</property>

<property>

<name>phoenix.query.keepAliveMs</name>

<value>120000</value>

</property>

<property>

<name>zookeeper.session.timeout</name>

<value>120000</value>

</property>

</configuration>

4、拷貝hadoop的hdfs-site.xml和core-site.xml檔案到hbase的conf目錄下,或者做軟連線也可以

$ cat /data1/hadoop/hadoop/etc/hadoop/hdfs-site.xml . (點代表當前目錄,因為我當前目錄就是hbase的conf目錄下)

$ cat /data1/hadoop/hadoop/etc/hadoop/core-site.xml .

或者

$ ln -s /data1/hadoop/hadoop/etc/hadoop/hdfs-site.xml hdfs-site.xml

$ ln -s /data1/hadoop/hadoop/etc/hadoop/core-site.xml core-site.xml

5、配置regionservers,檔案內容如下

yt1

yt2

yt3

6、配置backup-masters(改檔案需要自己建立,指定備Hmaster的節點),內容如下:

zk3

7、分發到其他節點

8、啟動hbase(zk2執行命令就行)

$ start-hbase.sh

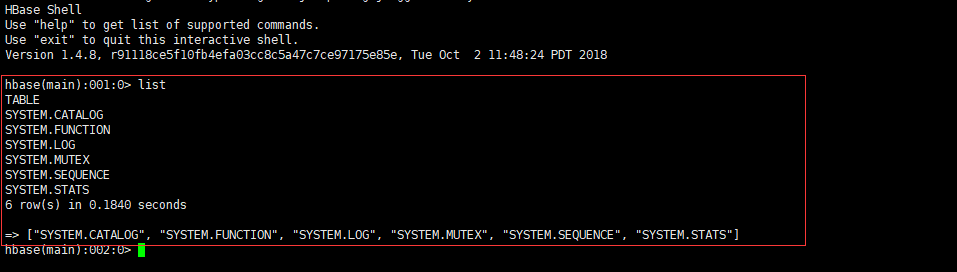

9、測試:命令列輸入 hbase shell進入到hbase,執行list命令,如果不報錯就成功,如下:

5、phoenix安裝

1、解壓

$ tar zxvf apache-phoenix-4.14.0-HBase-1.4-bin.tar.gz -C /data1/hadoop

2、把hbase配置檔案hbase-site.xml拷貝到Phoenix的bin目錄(主從節點都需要)

3、把Phoenix下的phoenix-core-4.14.0-HBase-1.4.jar 和phoenix-4.14.0-HBase-1.4-server.jar這兩個包拷貝到hbase的lib目錄下(主從節點都需要)

4、測試

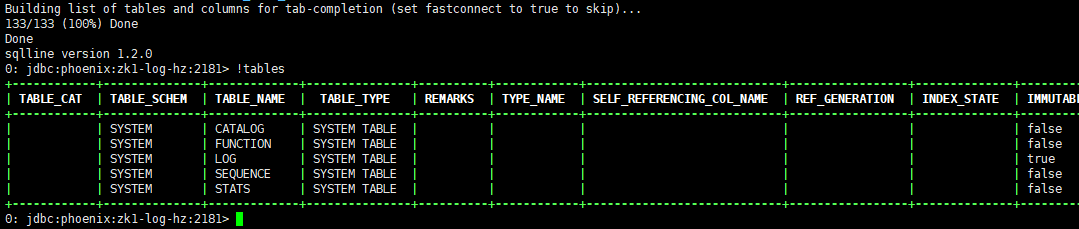

$ ./bin/sqlline.py zk1,zk2,zk3:2181 指定zookeeper叢集節點

連線成功以後輸入!tables檢視(注意命令前面有感嘆號)

6、配置spark(HA)

1、解壓

$ tar zxvf spark-2.1.0-bin-hadoop2.7.tgz -C /data1/hadoop

2、配置~/.source環境變數

export SPARK_HOME=/data1/hadoop/spark

export PATH=$PATH:${SPARK_HOME}/bin:${SPARK_HOME}/sbin

3、配置spark-env.sh,檔案最後新增如下:

export JAVA_HOME=/usr/local/jdk

export HADOOP_HOME=/data1/hadoop/hadoop

export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=zk1:2181,zk2:2181,zk3:2181 -Dspark.deploy.zookeeper.dir=/spark"

4、配置slaves(指定worker節點),檔案內容如下:

yjt5

yjt6

yjt7

yjt8

5、分發到其他節點

6、啟動spark (zk3機器執行命令)

$ ./sbin/start-all.sh (這裡只會啟動一個master和其他的工作節點)

啟動另外一個master

$ star-master.sh

7、配置過程中的一些錯誤

錯誤(1):

2018-12-19 13:20:41,444 INFO org.apache.hadoop.security.authentication.server.AuthenticationFilter: Unable to initialize FileSignerSecretProvider, falling back to use random secrets.

2018-12-19 13:20:41,446 INFO org.apache.hadoop.http.HttpRequestLog: Http request log for http.requests.datanode is not defined

2018-12-19 13:20:41,450 INFO org.apache.hadoop.http.HttpServer2: Added global filter 'safety' (class=org.apache.hadoop.http.HttpServer2$QuotingInputFilter)

2018-12-19 13:20:41,452 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context datanode

2018-12-19 13:20:41,452 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context logs

2018-12-19 13:20:41,452 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context static

2018-12-19 13:20:41,471 INFO org.apache.hadoop.http.HttpServer2: HttpServer.start() threw a non Bind IOException

java.net.BindException: Port in use: localhost:0

。。。。。。。

解決:在/etc/hosts檔案新增如下兩行(我在配置這個檔案的時候刪除了這兩行,沒想到居然報錯了):

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

錯誤(2):

-ls: java.net.UnknownHostException: GD-AI

[[email protected] logs]$ hadoop fs -ls /

2018-12-19 15:31:17,553 INFO ipc.Client: Retrying connect to server: GD-AI/125.211.213.133:8020. Already tried 0 time(s); maxRetries=45

2018-12-19 15:31:37,575 INFO ipc.Client: Retrying connect to server: GD-AI/125.211.213.133:8020. Already tried 1 time(s); maxRetries=45

我連這個ip是什麼鬼都不知道,居然報這個錯:

解決:在hdfs-site.xml檔案新增下面這個配置,如果原本就有,還是報這個錯,那說明這個配置你應該是配置錯了;注意下面標紅的地方,我最開始就配置失誤了,這個該是你hdfs叢集的名字

<property>

<name>dfs.client.failover.proxy.provider.GD-AI</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

錯誤(3):

當hdfs或者yarn叢集的active節點掛掉以後,活動不能自動轉移:

解決:

(1)檢視系統是否有fuser命令,如果沒有請執行下面這個命令安裝:

$ sudo yum install -y psmisc

(2) 修改hdfs-site.xml配置檔案,新增標紅的配置,一般來說配置這個檔案都沒有配置這個值,當然,我前面配置hdfs-site.xml已經配置好了

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence

shell(/bin/true)

</value>

ok!!!