Designing Multi-Threaded Applications Using Swift

As an iOS developer in the automotive industry, I spend a great deal of time working with real-time data. Processing continuous streams of data efficiently matters in many applications today, and to make sure you don’t lock up the user interface, you will most likely need to use multi-threading.

Information that is streamed in real time is the most fun to work with, because you constantly receive new data that you can use to update your visuals. It is also the most difficult and the most frustrating to work with, because an iOS device has limited hardware resources. Luckily, Apple has made multi-threading available through an easy-to-use interface called GCD (Grand Central Dispatch). You may already be familiar with code that hands a block of work to the main queue through DispatchQueue.main.async.
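A call of that kind might look like the sketch below. SpeedLabel and show(speed:in:) are hypothetical stand-ins for a real view and its update method; only DispatchQueue.main.async comes from GCD.

```swift
import Foundation

// Hypothetical stand-in for a UIKit view such as a label.
final class SpeedLabel {
    var text = ""
}

// May be called from any thread. The label itself is only
// touched inside the block that runs on the main queue.
func show(speed: Int, in label: SpeedLabel) {
    DispatchQueue.main.async {
        label.text = "\(speed) km/h"
    }
}
```

In an app, the main run loop drains the main queue for you, so the block runs shortly after show(speed:in:) returns.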

The main queue is where most of your program’s code runs if you don’t explicitly place it on another queue. It’s a serial queue, meaning that it picks the first item in line, executes it, waits for it to finish, and releases it, then picks the next item in line, and so on.
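The same first-in, first-out behaviour can be seen on a private serial queue, which is easier to try outside an app than the main queue. This is only a sketch: the queue label and the OrderLog helper are made up for illustration.

```swift
import Foundation

// Hypothetical helper that records the order in which work items ran.
final class OrderLog {
    var entries: [Int] = []
}

// A queue created without attributes is serial, like the main queue.
let serialQueue = DispatchQueue(label: "com.example.serial")
let log = OrderLog()

for i in 1...5 {
    serialQueue.async {
        // Runs one item at a time, in submission order.
        log.entries.append(i)
    }
}

// sync waits its turn behind the five items above, then reads the result.
let finalOrder = serialQueue.sync { log.entries }
print(finalOrder)   // [1, 2, 3, 4, 5]
```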

Multi-threading and Concurrency

The main queue is not the only queue available through GCD, though. There are a number of predefined global queues with different priorities, and you can also create your own specialized queues by giving DispatchQueue a label and, optionally, attributes such as .concurrent.
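As a rough sketch, obtaining a predefined queue and creating a custom one could look like this. The variable names and the label are assumptions chosen for the example.

```swift
import Foundation

// Predefined global queues are selected by quality-of-service class.
let uiWorkQueue = DispatchQueue.global(qos: .userInitiated)
let housekeepingQueue = DispatchQueue.global(qos: .background)

// A custom queue. The label is a hypothetical reverse-DNS name that
// shows up in debugging tools; .concurrent lets its items run in parallel.
let dataQueue = DispatchQueue(label: "com.example.dataQueue",
                              attributes: .concurrent)
```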

Note that a queue created with the attribute .concurrent will not wait for one item to finish before starting the next one. It simply hands the first item to a thread and starts it, then moves on to the next item, regardless of whether the first one has finished or not.
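One way to observe this is a small handshake between two work items, sketched below with made-up names. The first item can only finish if the second one starts while the first is still running.

```swift
import Foundation

let concurrentQueue = DispatchQueue(label: "com.example.concurrent",
                                    attributes: .concurrent)
let secondStarted = DispatchSemaphore(value: 0)
let group = DispatchGroup()

// Hypothetical box for the result of the experiment.
final class Outcome {
    var firstSawSecond = false
}
let outcome = Outcome()

// First item: blocks until the second item signals, or 5 seconds pass.
concurrentQueue.async(group: group) {
    let result = secondStarted.wait(timeout: .now() + 5.0)
    outcome.firstSawSecond = (result == .success)
}

// Second item: starts even though the first has not finished.
concurrentQueue.async(group: group) {
    secondStarted.signal()
}

group.wait()
print(outcome.firstSawSecond)   // true
```

On a serial queue the second item would not start until the first had timed out, so the same experiment would print false.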

Now, let’s get a bit technical…

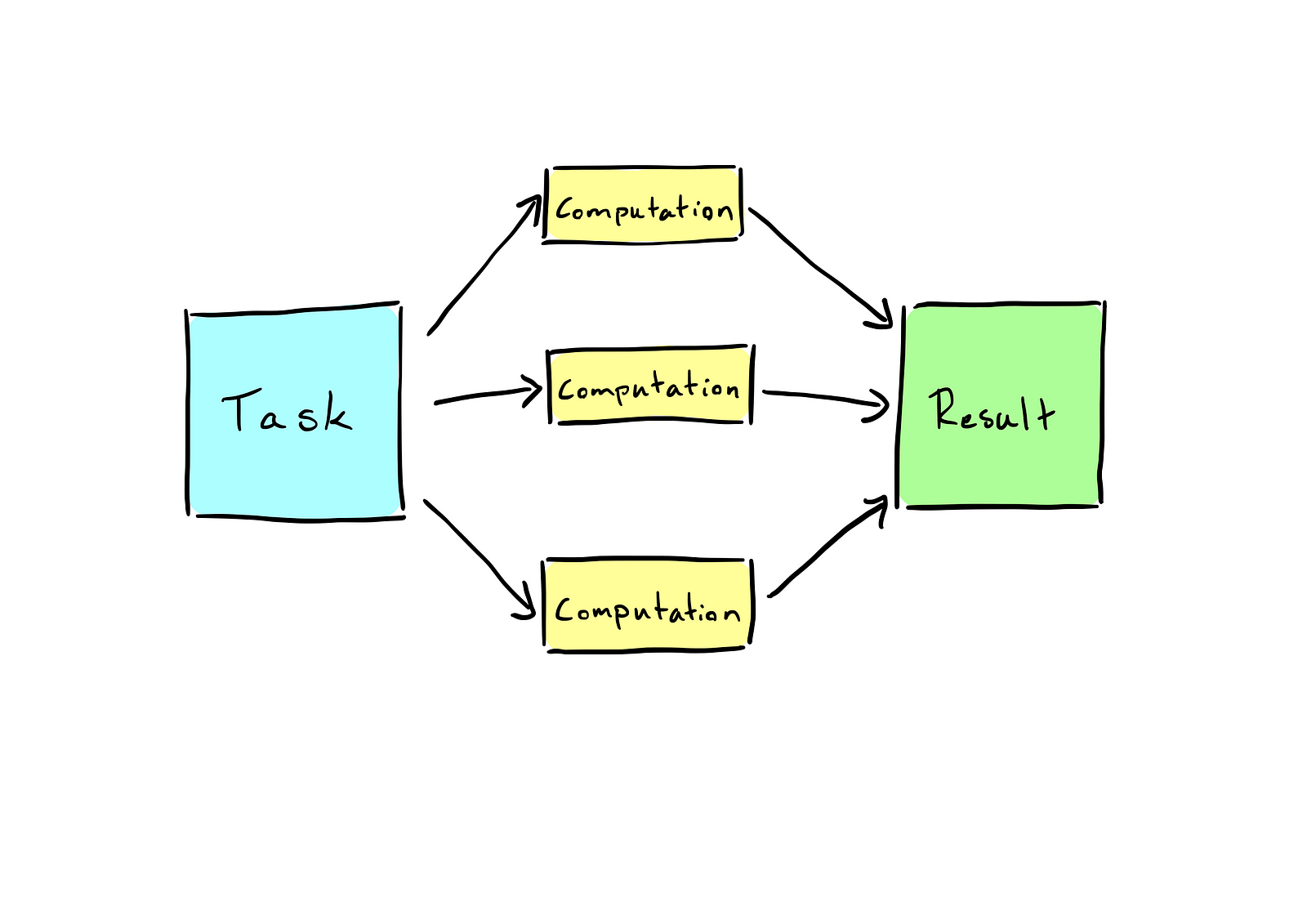

Let’s say that you’re working with a stream of data where the sample rate is ~20 Hz. This means that you have about 50 milliseconds per sample to parse and interpret the data, add it to your data structure, and tell your views to display it. If your iOS device tries to do all of this on the main thread, it will have very little time left to check whether the user is trying to interact with the app, and your application will become unresponsive. This is where we turn to multi-threading.

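Assume that we are using a very simple data structure to store the data samples we receive: a plain integer array. We might be tempted to create a concurrent queue, append each incoming sample to the array from that queue, and then hop back to the main queue to update the views.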
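A first attempt along those lines might look like the sketch below. The class, its members and the queue label are hypothetical names, and the code is deliberately naive.

```swift
import Foundation

// Hypothetical owner of the sample data, e.g. a model or view controller.
final class NaiveSampleStore {
    let dataQueue = DispatchQueue(label: "com.example.samples",
                                  attributes: .concurrent)
    var samples: [Int] = []

    // Hypothetical hook that the UI layer would set.
    var updateViews: () -> Void = {}

    func received(_ sample: Int) {
        dataQueue.async {
            // UNSAFE: several work items may run this line at the same time.
            self.samples.append(sample)
            DispatchQueue.main.async {
                self.updateViews()
            }
        }
    }
}
```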

Did it work?

This looks good, right? Now we are doing all our data processing on a background thread and the main thread is only used to update our visuals. However, this is bound to crash. But why? The answer is a bit technical, but it’s important that you think about this.

Since our queue is concurrent, it will hand work items to threads to be executed in parallel. We are also using an array as our data storage. A Swift array is a struct, which makes it a value type, and its elements live in a heap buffer. When you append to an array whose buffer is full (or shared with another copy), the append will roughly:

- Allocate a new buffer and copy the values from the old one

- Append the new data

- Write the new buffer reference back to your variable

- Release the old buffer, whose memory the system can then deallocate

Think about what would happen if two threads each start from the same array, append their own data to their copy, and then write the new reference back to our variable, either one before the other or both at the same time. The first case gives us incorrect data, because the data from the thread that wrote first will be missing from the array written by the thread that writes last. The second case is a data race, which is undefined behavior and in practice often crashes the app, for example when both threads release the same old buffer or one thread touches memory the other has already freed.
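The first case can be reproduced without any threads at all. The sketch below simulates the interleaving on a single thread; the names copyA and copyB stand in for the two threads’ copies.

```swift
// Single-threaded simulation of the "one before the other" case.
var shared = [1, 2, 3]

var copyA = shared      // "thread A" takes its copy
var copyB = shared      // "thread B" takes its copy before A writes back

copyA.append(4)
copyB.append(5)

shared = copyA          // A writes back first: [1, 2, 3, 4]
shared = copyB          // B writes back last and overwrites A's result

print(shared)           // [1, 2, 3, 5]
```

The 4 appended by A is silently lost, which is exactly the incorrect-data outcome described above.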

With this in mind, we can use a pretty smart construct that comes with DispatchQueue, namely work item flags. We change our code so that every write is submitted with the .barrier flag and every read goes through the same queue synchronously.
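A minimal sketch of that change, with hypothetical class, method and label names, could look like this:

```swift
import Foundation

final class SampleStore {
    private let dataQueue = DispatchQueue(label: "com.example.samples",
                                          attributes: .concurrent)
    private var samples: [Int] = []

    // Write: the barrier makes this item run alone on the queue.
    func received(_ sample: Int) {
        dataQueue.async(flags: .barrier) {
            self.samples.append(sample)
        }
    }

    // Read: goes through the same queue, so it never overlaps a write.
    func snapshot() -> [Int] {
        return dataQueue.sync { self.samples }
    }
}
```

The main thread would call snapshot() when it redraws, and any thread can call received(_:) as samples arrive. Plain reads may still run in parallel with each other; only writes are exclusive.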

This may look intimidating, but I’ll explain what it does.

By using the .barrier flag whenever we add a work item that writes to our data structure, we tell the queue that this particular item must be executed on its own. The queue waits for every work item submitted before it to finish, then runs the barrier item alone and waits for it to finish, and only then starts executing work in parallel again.

When the main thread needs to access the data to update our views, it needs to go through the data queue with a synchronous call. If it doesn’t, it runs the risk of one of our writing threads corrupting the data it’s reading at any time.

Finishing up…

Hopefully you made it through and picked up some new knowledge along the way. It may be helpful to re-read this in a couple of days, to give yourself a chance to reflect on it.

Feel free to comment if you have questions, and follow to get notifications about future articles.