Kubernetes23--kube-scheduler source code--priority (scoring) phase analysis

kubernetes/pkg/scheduler/core/generic_scheduler.go

Priority (scoring) phase analysis

Entry point of the priority phase

    priorityList, err := PrioritizeNodes(pod, g.cachedNodeInfoMap, metaPrioritiesInterface, g.prioritizers, filteredNodes, g.extenders)

Function definition

    func PrioritizeNodes(
        pod *v1.Pod,
        nodeNameToInfo map[string]*schedulercache.NodeInfo,
        meta interface{},
        priorityConfigs []algorithm.PriorityConfig,
        nodes []*v1.Node,
        extenders []algorithm.SchedulerExtender,
    ) (schedulerapi.HostPriorityList, error)

Function description

    // PrioritizeNodes prioritizes the nodes by running the individual priority functions in parallel.
    // Each priority function is expected to set a score of 0-10
    // 0 is the lowest priority score (least preferred node) and 10 is the highest
    // Each priority function can also have its own weight
    // The node scores returned by the priority function are multiplied by the weights to get weighted scores
    // All scores are finally combined (added) to get the total weighted scores of all nodes

Each priority function returns a score from 0 to 10 and has its own weight. The priority functions run in parallel, and the weighted sum of their scores is the node's final score. The function returns a HostPriorityList with one entry per node.
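The weighted sum described above can be sketched in a few lines of Go. This is only an illustration: the `hostPriority` type is a local stand-in for `schedulerapi.HostPriority`, and the node names, scores and weights are made up.

```go
package main

import "fmt"

// hostPriority is a local stand-in for schedulerapi.HostPriority (illustration only).
type hostPriority struct {
	Host  string
	Score int
}

// weightedTotals adds up score*weight for every node.
// perFunction[j][i] is the score that priority function j gave to node i,
// and weights[j] is the weight of priority function j.
func weightedTotals(nodes []string, perFunction [][]int, weights []int) []hostPriority {
	totals := make([]hostPriority, 0, len(nodes))
	for i, name := range nodes {
		hp := hostPriority{Host: name}
		for j := range perFunction {
			hp.Score += perFunction[j][i] * weights[j]
		}
		totals = append(totals, hp)
	}
	return totals
}

func main() {
	nodes := []string{"node-a", "node-b"}
	perFunction := [][]int{
		{10, 4}, // hypothetical priority function with weight 1
		{3, 8},  // hypothetical priority function with weight 2
	}
	weights := []int{1, 2}
	for _, hp := range weightedTotals(nodes, perFunction, weights) {
		fmt.Printf("%s => %d\n", hp.Host, hp.Score)
	}
}
```

With these numbers node-a totals 10*1 + 3*2 = 16 and node-b totals 4*1 + 8*2 = 20, so the higher-weighted function decides the ranking even though node-a has the single best score.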

    func PrioritizeNodes(
        pod *v1.Pod,
        nodeNameToInfo map[string]*schedulercache.NodeInfo,
        meta interface{},
        priorityConfigs []algorithm.PriorityConfig,
        nodes []*v1.Node,
        extenders []algorithm.SchedulerExtender,
    ) (schedulerapi.HostPriorityList, error) {
        // If no priority configs are provided, then the EqualPriority function is applied
        // This is required to generate the priority list in the required format
        if len(priorityConfigs) == 0 && len(extenders) == 0 {
            result := make(schedulerapi.HostPriorityList, 0, len(nodes))
            for i := range nodes {
                hostPriority, err := EqualPriorityMap(pod, meta, nodeNameToInfo[nodes[i].Name])
                if err != nil {
                    return nil, err
                }
                result = append(result, hostPriority)
            }
            return result, nil
        }

        var (
            mu   = sync.Mutex{}
            wg   = sync.WaitGroup{}
            errs []error
        )
        appendError := func(err error) {
            mu.Lock()
            defer mu.Unlock()
            errs = append(errs, err)
        }

        results := make([]schedulerapi.HostPriorityList, len(priorityConfigs), len(priorityConfigs))

        // DEPRECATED: we can remove this when all priorityConfigs implement the
        // Map-Reduce pattern.
        for i := range priorityConfigs {
            if priorityConfigs[i].Function != nil {
                wg.Add(1)
                go func(index int) {
                    defer wg.Done()
                    var err error
                    results[index], err = priorityConfigs[index].Function(pod, nodeNameToInfo, nodes)
                    if err != nil {
                        appendError(err)
                    }
                }(i)
            } else {
                results[i] = make(schedulerapi.HostPriorityList, len(nodes))
            }
        }

        workqueue.ParallelizeUntil(context.TODO(), 16, len(nodes), func(index int) {
            nodeInfo := nodeNameToInfo[nodes[index].Name]
            for i := range priorityConfigs {
                if priorityConfigs[i].Function != nil {
                    continue
                }
                var err error
                results[i][index], err = priorityConfigs[i].Map(pod, meta, nodeInfo)
                if err != nil {
                    appendError(err)
                    results[i][index].Host = nodes[index].Name
                }
            }
        })

        for i := range priorityConfigs {
            if priorityConfigs[i].Reduce == nil {
                continue
            }
            wg.Add(1)
            go func(index int) {
                defer wg.Done()
                if err := priorityConfigs[index].Reduce(pod, meta, nodeNameToInfo, results[index]); err != nil {
                    appendError(err)
                }
                if klog.V(10) {
                    for _, hostPriority := range results[index] {
                        klog.Infof("%v -> %v: %v, Score: (%d)", util.GetPodFullName(pod), hostPriority.Host, priorityConfigs[index].Name, hostPriority.Score)
                    }
                }
            }(i)
        }
        // Wait for all computations to be finished.
        wg.Wait()
        if len(errs) != 0 {
            return schedulerapi.HostPriorityList{}, errors.NewAggregate(errs)
        }

        // Summarize all scores.
        result := make(schedulerapi.HostPriorityList, 0, len(nodes))
        for i := range nodes {
            result = append(result, schedulerapi.HostPriority{Host: nodes[i].Name, Score: 0})
            for j := range priorityConfigs {
                result[i].Score += results[j][i].Score * priorityConfigs[j].Weight
            }
        }

        if len(extenders) != 0 && nodes != nil {
            combinedScores := make(map[string]int, len(nodeNameToInfo))
            for i := range extenders {
                if !extenders[i].IsInterested(pod) {
                    continue
                }
                wg.Add(1)
                go func(extIndex int) {
                    defer wg.Done()
                    prioritizedList, weight, err := extenders[extIndex].Prioritize(pod, nodes)
                    if err != nil {
                        // Prioritization errors from extender can be ignored, let k8s/other extenders determine the priorities
                        return
                    }
                    mu.Lock()
                    for i := range *prioritizedList {
                        host, score := (*prioritizedList)[i].Host, (*prioritizedList)[i].Score
                        if klog.V(10) {
                            klog.Infof("%v -> %v: %v, Score: (%d)", util.GetPodFullName(pod), host, extenders[extIndex].Name(), score)
                        }
                        combinedScores[host] += score * weight
                    }
                    mu.Unlock()
                }(i)
            }
            // wait for all go routines to finish
            wg.Wait()
            for i := range result {
                result[i].Score += combinedScores[result[i].Host]
            }
        }

        if klog.V(10) {
            for i := range result {
                klog.Infof("Host %s => Score %d", result[i].Host, result[i].Score)
            }
        }
        return result, nil
    }

If no priority configs and no extenders are provided, EqualPriorityMap is applied to every node

    // If no priority configs are provided, then the EqualPriority function is applied
    // This is required to generate the priority list in the required format
    if len(priorityConfigs) == 0 && len(extenders) == 0 {
        result := make(schedulerapi.HostPriorityList, 0, len(nodes))
        for i := range nodes {
            hostPriority, err := EqualPriorityMap(pod, meta, nodeNameToInfo[nodes[i].Name])
            if err != nil {
                return nil, err
            }
            result = append(result, hostPriority)
        }
        return result, nil
    }

    // EqualPriorityMap is a prioritizer function that gives an equal weight of one to all nodes
    func EqualPriorityMap(_ *v1.Pod, _ interface{}, nodeInfo *schedulercache.NodeInfo) (schedulerapi.HostPriority, error) {
        node := nodeInfo.Node()
        if node == nil {
            return schedulerapi.HostPriority{}, fmt.Errorf("node not found")
        }
        return schedulerapi.HostPriority{
            Host:  node.Name,
            Score: 1,
        }, nil
    }

So in this case every returned HostPriority has the same score, 1.

Next, compute every node's score for each priority function. The old (deprecated) style loops over the priority configs and runs each Function asynchronously in its own goroutine

    // DEPRECATED: we can remove this when all priorityConfigs implement the
    // Map-Reduce pattern.
    for i := range priorityConfigs {
        if priorityConfigs[i].Function != nil {
            wg.Add(1)
            go func(index int) {
                defer wg.Done()
                var err error
                results[index], err = priorityConfigs[index].Function(pod, nodeNameToInfo, nodes)
                if err != nil {
                    appendError(err)
                }
            }(i)
        } else {
            results[i] = make(schedulerapi.HostPriorityList, len(nodes))
        }
    }

    // PriorityFunction is a function that computes scores for all nodes.
    // DEPRECATED
    // Use Map-Reduce pattern for priority functions.
    type PriorityFunction func(pod *v1.Pod, nodeNameToInfo map[string]*schedulercache.NodeInfo, nodes []*v1.Node) (schedulerapi.HostPriorityList, error)

The new style uses the Map-Reduce pattern. The Map phase runs on 16 parallel workers (goroutines, not processes); the Reduce phase then starts one goroutine for each priority config that defines a Reduce function

    workqueue.ParallelizeUntil(context.TODO(), 16, len(nodes), func(index int) {
        nodeInfo := nodeNameToInfo[nodes[index].Name]
        for i := range priorityConfigs {
            if priorityConfigs[i].Function != nil {
                continue
            }
            var err error
            results[i][index], err = priorityConfigs[i].Map(pod, meta, nodeInfo)
            if err != nil {
                appendError(err)
                results[i][index].Host = nodes[index].Name
            }
        }
    })

    for i := range priorityConfigs {
        if priorityConfigs[i].Reduce == nil {
            continue
        }
        wg.Add(1)
        go func(index int) {
            defer wg.Done()
            if err := priorityConfigs[index].Reduce(pod, meta, nodeNameToInfo, results[index]); err != nil {
                appendError(err)
            }
            if klog.V(10) {
                for _, hostPriority := range results[index] {
                    klog.Infof("%v -> %v: %v, Score: (%d)", util.GetPodFullName(pod), hostPriority.Host, priorityConfigs[index].Name, hostPriority.Score)
                }
            }
        }(i)
    }

The Map and Reduce function types are defined as follows

    // PriorityMapFunction is a function that computes per-node results for a given node.
    // TODO: Figure out the exact API of this method.
    // TODO: Change interface{} to a specific type.
    type PriorityMapFunction func(pod *v1.Pod, meta interface{}, nodeInfo *schedulercache.NodeInfo) (schedulerapi.HostPriority, error)

    // PriorityReduceFunction is a function that aggregated per-node results and computes
    // final scores for all nodes.
    // TODO: Figure out the exact API of this method.
    // TODO: Change interface{} to a specific type.
    type PriorityReduceFunction func(pod *v1.Pod, meta interface{}, nodeNameToInfo map[string]*schedulercache.NodeInfo, result schedulerapi.HostPriorityList) error

Once all computations have finished, sum up each node's total score

    // Wait for all computations to be finished.
    wg.Wait()
    if len(errs) != 0 {
        return schedulerapi.HostPriorityList{}, errors.NewAggregate(errs)
    }

    // Summarize all scores.
    result := make(schedulerapi.HostPriorityList, 0, len(nodes))
    for i := range nodes {
        result = append(result, schedulerapi.HostPriority{Host: nodes[i].Name, Score: 0})
        for j := range priorityConfigs {
            result[i].Score += results[j][i].Score * priorityConfigs[j].Weight
        }
    }

Scores from scheduler extenders (SchedulerExtender implementations) are then computed and added to the totals

    if len(extenders) != 0 && nodes != nil {
        combinedScores := make(map[string]int, len(nodeNameToInfo))
        for i := range extenders {
            if !extenders[i].IsInterested(pod) {
                continue
            }
            wg.Add(1)
            go func(extIndex int) {
                defer wg.Done()
                prioritizedList, weight, err := extenders[extIndex].Prioritize(pod, nodes)
                if err != nil {
                    // Prioritization errors from extender can be ignored, let k8s/other extenders determine the priorities
                    return
                }
                mu.Lock()
                for i := range *prioritizedList {
                    host, score := (*prioritizedList)[i].Host, (*prioritizedList)[i].Score
                    if klog.V(10) {
                        klog.Infof("%v -> %v: %v, Score: (%d)", util.GetPodFullName(pod), host, extenders[extIndex].Name(), score)
                    }
                    combinedScores[host] += score * weight
                }
                mu.Unlock()
            }(i)
        }
        // wait for all go routines to finish
        wg.Wait()
        for i := range result {
            result[i].Score += combinedScores[result[i].Host]
        }
    }

The SchedulerExtender interface deserves a closer look later.
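To make the Map-Reduce flow of PrioritizeNodes easier to follow, here is a minimal standalone sketch. It is not the scheduler's code: `parallelMap` is a hand-written stand-in for `workqueue.ParallelizeUntil`, `hostPriority`, `mapFn` and `reduceFn` are simplified local types, and the normalising Reduce function is just one plausible example of what a Reduce step might do.

```go
package main

import (
	"fmt"
	"sync"
)

// hostPriority is a local stand-in for schedulerapi.HostPriority.
type hostPriority struct {
	Host  string
	Score int
}

// Simplified versions of PriorityMapFunction / PriorityReduceFunction.
type mapFn func(node string) (hostPriority, error)
type reduceFn func(result []hostPriority) error

// parallelMap runs m for every node on a fixed number of worker goroutines.
// Each index is written by exactly one worker, so the result slice needs no
// lock; only the shared error slice is guarded by the mutex.
func parallelMap(nodes []string, workers int, m mapFn) ([]hostPriority, []error) {
	var (
		mu   sync.Mutex
		wg   sync.WaitGroup
		errs []error
	)
	result := make([]hostPriority, len(nodes))
	indexes := make(chan int)
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := range indexes {
				hp, err := m(nodes[i])
				if err != nil {
					mu.Lock()
					errs = append(errs, err)
					mu.Unlock()
					hp.Host = nodes[i]
				}
				result[i] = hp
			}
		}()
	}
	for i := range nodes {
		indexes <- i
	}
	close(indexes)
	wg.Wait()
	return result, errs
}

// normalizeReduce rescales raw scores in place so the best node gets maxScore.
func normalizeReduce(maxScore int) reduceFn {
	return func(result []hostPriority) error {
		highest := 0
		for _, hp := range result {
			if hp.Score > highest {
				highest = hp.Score
			}
		}
		if highest == 0 {
			return nil
		}
		for i := range result {
			result[i].Score = result[i].Score * maxScore / highest
		}
		return nil
	}
}

func main() {
	// Hypothetical raw per-node values produced by the Map step.
	raw := map[string]int{"node-a": 2, "node-b": 5, "node-c": 0}
	nodes := []string{"node-a", "node-b", "node-c"}
	scores, errs := parallelMap(nodes, 16, func(node string) (hostPriority, error) {
		return hostPriority{Host: node, Score: raw[node]}, nil
	})
	if len(errs) != 0 {
		fmt.Println("errors:", errs)
		return
	}
	if err := normalizeReduce(10)(scores); err != nil {
		fmt.Println("reduce error:", err)
		return
	}
	for _, hp := range scores {
		fmt.Printf("%s => %d\n", hp.Host, hp.Score)
	}
}
```

The split mirrors the reason for the pattern: the Map step only needs one node at a time and so parallelises trivially, while the Reduce step needs the whole list (here, to find the highest raw score) and therefore runs once per priority config after Map has finished.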

優先順序函式實現分析

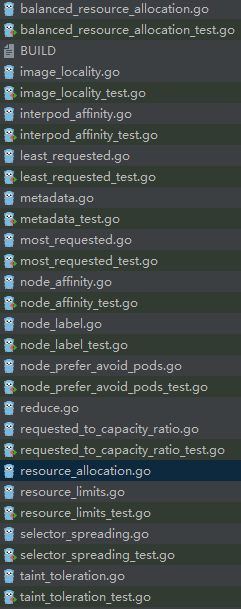

優先順序函式實現位置 kubernetes/pkg/scheduler/algorithm/priorities

1.BalancedResourceAllocation 資源均衡利用 計算cpu,mem, volumn利用率的方差

// BalancedResourceAllocationMap favors nodes with balanced resource usage rate. // BalancedResourceAllocationMap should **NOT** be used alone, and **MUST** be used together // with LeastRequestedPriority. It calculates the difference between the cpu and memory fraction // of capacity, and prioritizes the host based on how close the two metrics are to each other.

balancedResourcePriority = &ResourceAllocationPriority{"BalancedResourceAllocation", balancedResourceScorer}具體實現

    func balancedResourceScorer(requested, allocable *schedulercache.Resource, includeVolumes bool, requestedVolumes int, allocatableVolumes int) int64 {
        cpuFraction := fractionOfCapacity(requested.MilliCPU, allocable.MilliCPU)
        memoryFraction := fractionOfCapacity(requested.Memory, allocable.Memory)
        // This to find a node which has most balanced CPU, memory and volume usage.
        if includeVolumes && utilfeature.DefaultFeatureGate.Enabled(features.BalanceAttachedNodeVolumes) && allocatableVolumes > 0 {
            volumeFraction := float64(requestedVolumes) / float64(allocatableVolumes)
            if cpuFraction >= 1 || memoryFraction >= 1 || volumeFraction >= 1 {
                // if requested >= capacity, the corresponding host should never be preferred.
                return 0
            }
            // Compute variance for all the three fractions.
            mean := (cpuFraction + memoryFraction + volumeFraction) / float64(3)
            variance := float64((((cpuFraction - mean) * (cpuFraction - mean)) + ((memoryFraction - mean) * (memoryFraction - mean)) + ((volumeFraction - mean) * (volumeFraction - mean))) / float64(3))
            // Since the variance is between positive fractions, it will be positive fraction. 1-variance lets the
            // score to be higher for node which has least variance and multiplying it with 10 provides the scaling
            // factor needed.
            return int64((1 - variance) * float64(schedulerapi.MaxPriority))
        }

        if cpuFraction >= 1 || memoryFraction >= 1 {
            // if requested >= capacity, the corresponding host should never be preferred.
            return 0
        }
        // Upper and lower boundary of difference between cpuFraction and memoryFraction are -1 and 1
        // respectively. Multiplying the absolute value of the difference by 10 scales the value to
        // 0-10 with 0 representing well balanced allocation and 10 poorly balanced. Subtracting it from
        // 10 leads to the score which also scales from 0 to 10 while 10 representing well balanced.
        diff := math.Abs(cpuFraction - memoryFraction)
        return int64((1 - diff) * float64(schedulerapi.MaxPriority))
    }

    func fractionOfCapacity(requested, capacity int64) float64 {
        if capacity == 0 {
            return 1
        }
        return float64(requested) / float64(capacity)
    }

There are two modes. The first also takes volume usage into account (only when includeVolumes is set, the BalanceAttachedNodeVolumes feature gate is enabled, and allocatableVolumes > 0); the second considers only CPU and memory and scores by the absolute difference of the two fractions. In the first mode the steps are: compute the utilization fraction of each resource, then their mean, then their variance

    cpuFraction := fractionOfCapacity(requested.MilliCPU, allocable.MilliCPU)
    memoryFraction := fractionOfCapacity(requested.Memory, allocable.Memory)
    volumeFraction := float64(requestedVolumes) / float64(allocatableVolumes)
    mean := (cpuFraction + memoryFraction + volumeFraction) / float64(3)
    variance := float64((((cpuFraction - mean) * (cpuFraction - mean)) + ((memoryFraction - mean) * (memoryFraction - mean)) + ((volumeFraction - mean) * (volumeFraction - mean))) / float64(3))
    return int64((1 - variance) * float64(schedulerapi.MaxPriority))
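A small worked example may help to see what the two modes of the balanced scorer produce. The sketch below re-implements only the arithmetic, taking the utilization fractions directly as inputs; the function names `balancedScore` and `balancedScoreWithVolumes` and the constant `maxPriority` (standing in for `schedulerapi.MaxPriority`, taken to be 10 as the quoted comments say) are illustrative, not part of the scheduler.

```go
package main

import (
	"fmt"
	"math"
)

// maxPriority stands in for schedulerapi.MaxPriority (10 per the quoted comments).
const maxPriority = 10

// balancedScore is the CPU/memory-only mode: 10 minus the scaled absolute difference.
func balancedScore(cpuFraction, memoryFraction float64) int64 {
	if cpuFraction >= 1 || memoryFraction >= 1 {
		// Requested >= capacity: the node should never be preferred.
		return 0
	}
	diff := math.Abs(cpuFraction - memoryFraction)
	return int64((1 - diff) * float64(maxPriority))
}

// balancedScoreWithVolumes is the three-resource mode: score from the variance.
func balancedScoreWithVolumes(cpu, mem, vol float64) int64 {
	if cpu >= 1 || mem >= 1 || vol >= 1 {
		return 0
	}
	mean := (cpu + mem + vol) / 3
	variance := ((cpu-mean)*(cpu-mean) + (mem-mean)*(mem-mean) + (vol-mean)*(vol-mean)) / 3
	return int64((1 - variance) * float64(maxPriority))
}

func main() {
	// CPU 50% requested, memory 25% requested: diff 0.25, score 7 (7.5 truncated).
	fmt.Println(balancedScore(0.5, 0.25))
	// A full CPU disqualifies the node.
	fmt.Println(balancedScore(1.0, 0.2))
	// Perfectly balanced across all three resources.
	fmt.Println(balancedScoreWithVolumes(0.5, 0.5, 0.5))
	// Fractions 0.75 / 0.25 / 0.5: variance is about 0.0417, score 9.
	fmt.Println(balancedScoreWithVolumes(0.75, 0.25, 0.5))
}
```

One thing the numbers make visible: the variance of three fractions in [0, 1) is always below 2/9, so the volume-aware mode yields 7 or more whenever no fraction reaches 1, whereas the two-resource mode can use the whole 0 to 10 range.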

2. ImageLocalityPriority: favors nodes that already hold the images the pod needs (scored by the total size of those images), because an image that is missing has to be pulled from the registry, which takes longer

    // ImageLocalityPriorityMap is a priority function that favors nodes that already have requested pod container's images.
    // It will detect whether the requested images are present on a node, and then calculate a score ranging from 0 to 10
    // based on the total size of those images.
    // - If none of the images are present, this node will be given the lowest priority.
    // - If some of the images are present on a node, the larger their sizes' sum, the higher the node's priority.
    func ImageLocalityPriorityMap(pod *v1.Pod, meta interface{}, nodeInfo *schedulercache.NodeInfo) (schedulerapi.HostPriority, error) {
        node := nodeInfo.Node()
        if node == nil {
            return schedulerapi.HostPriority{}, fmt.Errorf("node not found")
        }

        var score int
        if priorityMeta, ok := meta.(*priorityMetadata); ok {
            score = calculatePriority(sumImageScores(nodeInfo, pod.Spec.Containers, priorityMeta.totalNumNodes))
        } else {
            // if we are not able to parse priority meta data, skip this priority
            score = 0
        }

        return schedulerapi.HostPriority{
            Host:  node.Name,
            Score: score,
        }, nil
    }

Score of a single image: its size scaled by the fraction of nodes that already have it

    spread := float64(imageState.NumNodes) / float64(totalNumNodes)
    int64(float64(imageState.Size) * spread)

Sum the scores of all of the pod's images that are present on a node

    sum += scaledImageScore(state, totalNumNodes)

Map the sum onto the range 0 to 10

    int(int64(schedulerapi.MaxPriority) * (sumScores - minThreshold) / (maxThreshold - minThreshold))
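The three formulas above can be put together in a short standalone sketch. Several things here are assumptions made for illustration: the threshold values (23 MB and 1000 MB), the clamping of the sum to the threshold range before scaling, and the simplified signature of `scaledImageScore`, which takes plain numbers instead of the scheduler's image state struct.

```go
package main

import "fmt"

const (
	mb          int64 = 1024 * 1024
	maxPriority int64 = 10 // stands in for schedulerapi.MaxPriority

	// Assumed thresholds, chosen for illustration only.
	minThreshold int64 = 23 * mb
	maxThreshold int64 = 1000 * mb
)

// scaledImageScore scales an image's size by the share of nodes that already have it.
func scaledImageScore(size int64, numNodes, totalNumNodes int) int64 {
	spread := float64(numNodes) / float64(totalNumNodes)
	return int64(float64(size) * spread)
}

// calculatePriority maps the summed image scores onto 0..maxPriority.
// The clamping is an assumption so that the result stays inside that range.
func calculatePriority(sumScores int64) int {
	if sumScores < minThreshold {
		sumScores = minThreshold
	} else if sumScores > maxThreshold {
		sumScores = maxThreshold
	}
	return int(maxPriority * (sumScores - minThreshold) / (maxThreshold - minThreshold))
}

func main() {
	// A 500 MB image present on 2 of 4 nodes counts as 250 MB.
	fmt.Println(calculatePriority(scaledImageScore(500*mb, 2, 4)))
	// A 2000 MB image present on every node exceeds the upper threshold.
	fmt.Println(calculatePriority(scaledImageScore(2000*mb, 4, 4)))
	// A 10 MB image stays below the lower threshold.
	fmt.Println(calculatePriority(scaledImageScore(10*mb, 1, 4)))
}
```

Scaling by the spread means a large image that only one node has cached contributes little, which keeps pods from piling onto that single node just because it happens to hold the image.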

3. LeastResourceAllocation: the mean of the CPU and memory free-capacity scores, so nodes with more unrequested resources score higher

    leastResourcePriority = &ResourceAllocationPriority{"LeastResourceAllocation", leastResourceScorer}

    func leastResourceScorer(requested, allocable *schedulercache.Resource, includeVolumes bool, requestedVolumes int, allocatableVolumes int) int64 {
        return (leastRequestedScore(requested.MilliCPU, allocable.MilliCPU) +
            leastRequestedScore(requested.Memory, allocable.Memory)) / 2
    }

Score for the unrequested share of a single resource

    func leastRequestedScore(requested, capacity int64) int64 {
        if capacity == 0 {
            return 0
        }
        if requested > capacity {
            return 0
        }

        return ((capacity - requested) * int64(schedulerapi.MaxPriority)) / capacity
    }

The unrequested share of the resource, scaled to 0 to 10

    ((capacity - requested) * int64(schedulerapi.MaxPriority)) / capacity

The mean of the CPU and memory scores

    (leastRequestedScore(requested.MilliCPU, allocable.MilliCPU) + leastRequestedScore(requested.Memory, allocable.Memory)) / 2
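As a quick check of the arithmetic, the following sketch reproduces the two formulas with plain integers. `leastResourceScore` is a simplified, hypothetical wrapper that takes the four numbers directly instead of the scheduler's Resource structs, and `maxPriority` stands in for `schedulerapi.MaxPriority`.

```go
package main

import "fmt"

// maxPriority stands in for schedulerapi.MaxPriority.
const maxPriority int64 = 10

// leastRequestedScore returns the unrequested share of a resource scaled to 0..10.
func leastRequestedScore(requested, capacity int64) int64 {
	if capacity == 0 {
		return 0
	}
	if requested > capacity {
		return 0
	}
	return ((capacity - requested) * maxPriority) / capacity
}

// leastResourceScore averages the CPU and memory scores (integer division).
func leastResourceScore(reqCPU, capCPU, reqMem, capMem int64) int64 {
	return (leastRequestedScore(reqCPU, capCPU) + leastRequestedScore(reqMem, capMem)) / 2
}

func main() {
	// 1000m of 4000m CPU requested: 3000*10/4000 = 7 (7.5 truncated).
	fmt.Println(leastRequestedScore(1000, 4000))
	// 3000m of 4000m CPU and 5000 of 10000 memory units: (2 + 5) / 2 = 3.
	fmt.Println(leastResourceScore(3000, 4000, 5000, 10000))
	// Over-committed CPU scores 0 for that resource.
	fmt.Println(leastRequestedScore(5000, 4000))
}
```

Because every step uses integer division, fractions are truncated twice (once per resource and once in the mean), so nodes whose free capacity differs only slightly can end up with the same score.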

For the remaining priority functions, see:

kubernetes/pkg/scheduler/algorithm/priorities