How to Train Your Model (Dramatically Faster)

Here we consider exactly why transfer learning is so efficient.

By only retraining our final layer, we’re performing a far less computationally expensive optimization (learning hundreds or thousands of parameters instead of millions).

This contrasts with open source models like Inception v3 that contain 25 million parameters and were trained with best-in-class hardware. As a result, these nets have well-fit parameters and bottleneck layers with highly optimized representations of the input data. While you might find it difficult to train a high-performing model from scratch with your own limited computing and data resources, you can use transfer learning to leverage the work of others and force-multiply your performance.
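To make the difference in scale concrete, here is a rough back-of-the-envelope sketch. It assumes the retrained final layer is a single fully connected layer fed by a 2048-dimensional bottleneck with 2 output classes (the setup used in the sample code later in this article), and it takes the 25 million figure quoted above at face value. `dense_param_count` is a hypothetical helper written for this illustration, not a Keras function.

```python
def dense_param_count(input_dim: int, units: int) -> int:
    """Number of weights plus biases in one fully connected layer."""
    return input_dim * units + units


BOTTLENECK_DIM = 2048            # bottleneck size used later in the article
NUM_CLASSES = 2                  # e.g. cats vs. dogs
FULL_MODEL_PARAMS = 25_000_000   # approximate figure quoted in the text

head_params = dense_param_count(BOTTLENECK_DIM, NUM_CLASSES)
print(f"trainable parameters in the new final layer: {head_params}")
print(f"fraction of the full model: {head_params / FULL_MODEL_PARAMS:.6f}")
```

Under these assumptions, the optimizer only has to adjust a few thousand numbers while the rest of the network stays as it was.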

Sample code

Let’s look at some Python code to get slightly more into the weeds (but not too far — don’t want to get lost down there!).

First, we need to start with a pretrained model. Keras has a bunch of pretrained models; we’ll use the InceptionV3 model.

# Keras and TensorFlow must be (pip) installed.
import keras  # used further down for keras.utils.to_categorical
from keras.applications import InceptionV3
from keras.models import Model

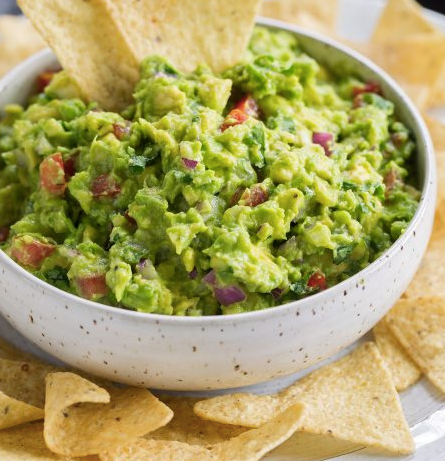

InceptionV3 has been trained on the ImageNet data, which contains 1000 different object classes, many of which I find to be pretty eccentric. For instance, class 924 is guacamole.

Indeed, the pretrained InceptionV3 recognizes it as such.

preds = InceptionV3().predict(guacamole_img) returns a 1000-dimensional array of class probabilities (where guacamole_img is the preprocessed input image).

preds.max() returns 0.99999, whereas preds.argmax(-1) returns index 924: the Inception model is really sure that this guacamole is just that! (That is, we are predicting that guacamole_img belongs to ImageNet class #924 with 99.999% confidence.) Here’s a link to reproducible code.
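As a small illustration of how to read such a prediction vector, the sketch below uses a made-up 5-class probability list in place of the real 1000-class output. The values and class names are invented for the example, and plain Python stands in for the NumPy max and argmax calls.

```python
# Toy stand-in for one row of model output: one probability per class.
# Values and names are invented for illustration only.
toy_preds = [0.001, 0.002, 0.990, 0.004, 0.003]
toy_class_names = ["burrito", "pretzel", "guacamole", "hotdog", "pizza"]

confidence = max(toy_preds)                  # analogous to preds.max()
class_index = toy_preds.index(confidence)    # analogous to preds.argmax(-1)

print(f"predicted class {class_index} ({toy_class_names[class_index]}) "
      f"with confidence {confidence:.1%}")
```

The largest entry gives the confidence, and its position gives the predicted class index.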

Now that we know that InceptionV3 can at least confirm what I’m currently snacking on, let’s see if we can use the underlying data representation to retrain and learn a new classification scheme.

As noted above, we want to freeze the first n-1 layers of the model and retrain just the final layer.

Below, we load the pretrained model; we then grab the input tensor and the output of the second-to-last (bottleneck) layer from the original model using Keras’s .get_layer() method, and build a new model using those two tensors as input and output.

original_model = InceptionV3()
bottleneck_input = original_model.get_layer(index=0).input
bottleneck_output = original_model.get_layer(index=-2).output
bottleneck_model = Model(inputs=bottleneck_input, outputs=bottleneck_output)

Here, we get the input from the first layer (index = 0) of the Inception model. If we print(original_model.get_layer(index=0).input), we see Tensor("input_1:0", shape=(?,?,?,3), dtype=float32). This indicates that our model expects an indeterminate number of images as input, of unspecified height and width, with 3 RGB channels. This, too, is what we want as the input for our bottleneck model.

We see Tensor("avg_pool/Mean:0", shape=(?, 2048), dtype=float32) as the output of our bottleneck, which we accessed by referencing the second-to-last model layer. In this instance, the Inception model has learned a 2048-dimensional representation of any image input, where we can think of these 2048 dimensions as representing crucial components of an image that are essential to classification.

Lastly, we instantiate a new model with the original image input and the bottleneck layer as output: Model(inputs=bottleneck_input, outputs=bottleneck_output).

Next, we need to set each layer in the pretrained model to untrainable — essentially we are freezing the weights and biases of these layers and keeping the information that was already learned through Inception’s original, laborious training.

for layer in bottleneck_model.layers:
    layer.trainable = False
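To see what freezing means for the optimizer, here is a minimal framework-free sketch. The `Param` class and `sgd_step` function are hypothetical stand-ins invented for this illustration (they are not Keras APIs); the point is only that a gradient step skips anything marked as not trainable.

```python
class Param:
    """A single scalar parameter with a trainable flag."""

    def __init__(self, value, trainable=True):
        self.value = value
        self.trainable = trainable


def sgd_step(params, grads, lr=0.1):
    """Apply one gradient-descent update, skipping frozen parameters."""
    for param, grad in zip(params, grads):
        if param.trainable:
            param.value -= lr * grad


pretrained = [Param(0.5), Param(-1.2)]   # stand-ins for pretrained weights
new_head = [Param(0.0)]                  # stand-in for the new final layer

for param in pretrained:                 # freeze the pretrained part
    param.trainable = False

all_params = pretrained + new_head
sgd_step(all_params, grads=[1.0, 1.0, 1.0])
print([param.value for param in all_params])
```

Only the last value changes after the update; the frozen values keep whatever they were before.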

Now, we make a new Sequential() model, starting with our previous building block and then making a minor addition.

from keras.models import Sequential
from keras.layers import Dense
new_model = Sequential()
new_model.add(bottleneck_model)
new_model.add(Dense(2, activation='softmax', input_dim=2048))

The above code serves to build a composite model which combines our Inception architecture with a final layer with 2 nodes. We use 2 because we are going to retrain a new model to learn to differentiate cats and dogs — so we only have 2 image classes. Replace this with however many classes you’re hoping to classify.

As noted before, the bottleneck output is of size 2048, so this is our input_dim to the Dense layer. Lastly, we insert a softmax activation to ensure our image class outputs can be interpreted as probabilities.
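For readers who want to see why softmax outputs can be read as probabilities, here is a small standalone sketch of the function itself. The two input scores are arbitrary numbers chosen for illustration, and this is a plain-Python version written for the example rather than the Keras implementation.

```python
import math


def softmax(logits):
    """Map raw scores to positive values that sum to 1."""
    largest = max(logits)
    # Subtracting the maximum keeps exp() from overflowing on large scores.
    exps = [math.exp(score - largest) for score in logits]
    total = sum(exps)
    return [value / total for value in exps]


raw_scores = [2.0, 0.5]          # arbitrary scores for the 2 classes
probs = softmax(raw_scores)
print(probs, sum(probs))
```

Each output lies between 0 and 1, the outputs sum to 1, and the larger raw score gets the larger probability.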

I’ve included a very tall network layout image of the full network at the very end of this article. Be sure to check it out!

To finish, we just need a few more standard TensorFlow steps:

# For a binary classification problem
new_model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['accuracy'])

one_hot_labels = keras.utils.to_categorical(labels, num_classes=2)

new_model.fit(processed_imgs_array, one_hot_labels, epochs=2, batch_size=32)

Here, processed_imgs_array is an array of size (number_of_images_in_training_set, 224, 224, 3), and labels is a Python list of the ground truth image classes. These are scalars corresponding to the class of each image in the training data. Since num_classes=2, labels is just a list of length number_of_images_in_training_set containing 0’s and 1’s.
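To show what the one-hot step does to such a list of 0’s and 1’s, here is a plain-Python sketch of the same idea. The `one_hot` function is a hypothetical helper written for this illustration (the article itself uses keras.utils.to_categorical), and the four labels are made up.

```python
def one_hot(labels, num_classes):
    """Turn integer class labels into rows with a single 1.0 per row."""
    encoded = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0          # mark the position of the true class
        encoded.append(row)
    return encoded


toy_labels = [0, 1, 1, 0]         # made-up labels: 0 = cat, 1 = dog
print(one_hot(toy_labels, num_classes=2))
```

Each label becomes a row with one entry per class, which matches the 2-node softmax output of the model.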

In the end, we run this model on our first cat training image (rescaled using TensorFlow’s very handy, built-in bilinear rescaling function).

The model predicts cat with 94% confidence. That’s pretty good, considering I only used 20 training images, and trained for a mere 2 epochs!

Take-aways

By leveraging a pre-built model architecture and pre-learned weights, transfer learning allows you to use the learned high-level representation of a given data structure and apply it to your own, new training data.

To recap, you need 3 ingredients to use transfer learning:

- A pretrained model

- Similar training data: you need inputs to be “similar enough” to the inputs of the pretrained model. Similar enough means that the inputs must be of the same format (e.g. shape of input tensors, data types…) and of similar interpretation. For example, if you are using a model pretrained for image classification, images will work as input! That said, some clever folk have formatted audio to run through a pretrained image classifier, with some cool results. As ever, fortune favors the creative.

- Training labels
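The “same format” part of the second ingredient can be checked mechanically. Below is a minimal sketch, assuming the expected input shape printed earlier in this article, (?, ?, ?, 3), with the unknown dimensions written as None. `shape_is_compatible` is a hypothetical helper written for this illustration, not a Keras or TensorFlow function.

```python
def shape_is_compatible(expected, actual):
    """Return True if `actual` fits `expected`; None accepts any size."""
    if len(expected) != len(actual):
        return False
    return all(exp is None or exp == act for exp, act in zip(expected, actual))


# batch size, height, width, RGB channels
expected_input = (None, None, None, 3)

rgb_batch = (20, 224, 224, 3)        # 20 color images
grayscale_batch = (20, 224, 224, 1)  # 20 single-channel images

print(shape_is_compatible(expected_input, rgb_batch))
print(shape_is_compatible(expected_input, grayscale_batch))
```

A check like this only covers the format; whether the data has a similar interpretation is still a judgment call.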

Check out a full working example here for a demonstration of transfer learning that uses local files.
