Building trust in AI applications

All of us have seen those fear-mongering headlines about how artificial intelligence is going to steal our jobs and how we should be very careful with biased AI algorithms. Bias means that the algorithm favors certain groups of people or otherwise guides decisions towards an unfair outcome. Bias can mean giving a raise only to white male employees, increasing the criminal risk factors of certain ethnic groups, or filling your news feed only with the topics and points of view that you already consume, instead of giving you a broad, balanced view of the world and educating you.

It is important to be aware that a machine learning model can be biased, but this hardly adds anything new to the discussion of AI ethics compared to ethical decisions in general. White males are more likely to get a promotion anyway, court judges may get tired and hand out stricter verdicts, and we tend to consume news that we agree with. That is to say, the same biases exist even without AI.

Instead, the discussion about AI ethics should concentrate on increasing transparency, educating the public and bringing out the good examples.

AI doesn’t do anything alone

First of all, algorithms and AI do not do anything alone. While it is true that current AI models and the suggestions they generate are based on historical data, there is still a human checking the current situation before the final decision. The headline above gives us the idea that the algorithms made a terrible error and that there was nothing the child care employee could have done about it. The case was about a woman, a former drug user, whose daughters had been taken from her custody.

The child care case above was from Broward County, Florida, but there are also efforts to create an AI application to detect child care issues in Espoo, a city in Finland. The city of Espoo and the consultancy company Tieto Inc. are together building a deep learning model which, at the time of writing, has not yet been used as the basis for any decisions, although promising signs have been found while training the model. They collected social and healthcare data and early education client relationship data about 520 000 citizens and over 37 million contact points with them. The experiment found 280 different factors that, when they occur together, predict the need for support from child care officials even before custody must be considered.

Companies working on AI development and journalists writing about AI need to build the trust of the public, not erode it. AI offers a great opportunity to enhance human capabilities, and we should not raise uncertainty that leads to resistance and slows down the adoption of the new technology.

Fear mongering about AI leads to a situation where good examples, like Espoo’s child care experiment, are toned down and the developers of such applications need to be very careful about what they publicize. This leaves the general public ignorant of any great achievements, and ignorance usually leads to suspicion.

Bringing out the good examples

The conversation should shift from trying to prevent AI from being biased and making wrong decisions to a more constructive direction: how can AI help us make ethically right decisions and run ethically sound operations?

Let’s start with one good example. One of Valohai’s customers, TwoHat Security, is building a model to stop the distribution of child pornography. TwoHat Security is working with Canada’s law enforcement and universities to build a machine vision model that detects sexual abuse material on darknets and in other hard-to-reach places of the Internet. There is no doubt that this is, by all measures, an ethically, morally and socially right way to use artificial intelligence. Using machines to search for illegal material allows law enforcement to be much more effective and eliminates the need for humans to go through morbid material themselves.

Still, mainly because the media is going after shock value, we tend to hear about the bad examples more than the good.

How to be transparent?

A key aspect in building public trust towards AI applications is transparency. While journalists can do their part by writing from a constructive and supportive perspective, companies must also do theirs and share their progress in building the new technology.

For example, companies can start by participating in the AI Ethics challenge initiated by the AI Steering Group of Finland’s Ministry of Economic Affairs and Employment. The challenge encourages companies around the world to write down their principles for the ethical use of artificial intelligence, to make sure that future technologies are developed responsibly across the whole company. Ethical principles are thought to build trust among customers, who generally want to know what kind of applications are built with their data. You can read more about the challenge and participate here.

Lyrebird’s deep learning application allows anyone to create a vocal avatar that resembles their own voice. This kind of technology is potentially very harmful in the wrong hands, and not only because it can mimic a person’s voice. Used in conjunction with natural language processing (NLP) models that can translate speech to text and generate answers, it could reply to questions in your own voice!

For good reason, the company has chosen to publish its ethical principles on its website. They predict that this technology will be widespread in just a few years, and they admit that there is a risk of such technology being misused. They have chosen to educate people about the technology, and to do this, they released audio samples of digitally created voices of Donald Trump and Barack Obama. The alternative would have been to build audio avatars in silence and wait for someone to use this technology to fool the public. Now, thanks to this openness, at least some people know to be suspicious if they hear Obama giving an odd speech. People need to know that they can’t trust everything they see and hear.

We need street signs for AI

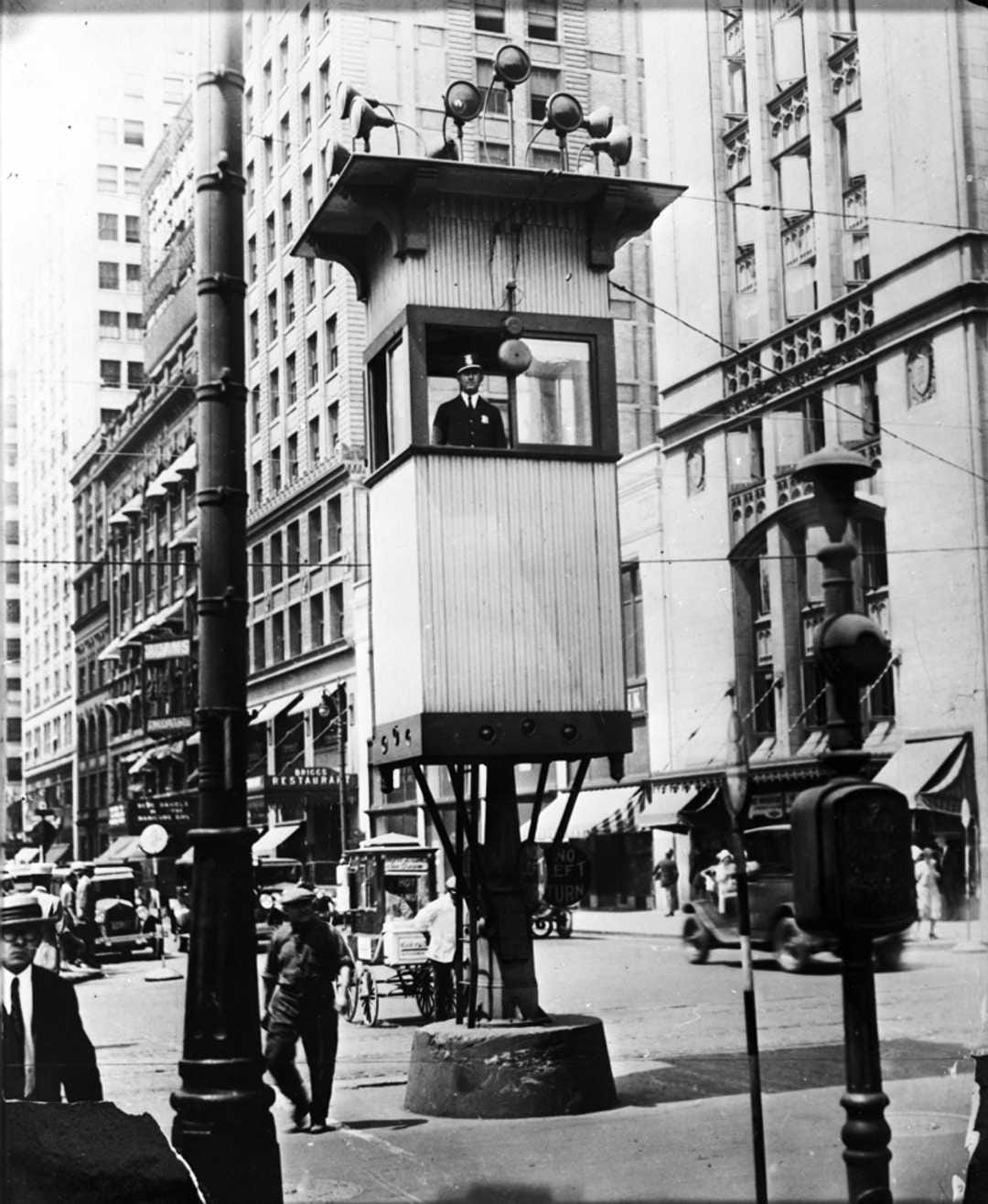

“Drivers often didn’t understand that taking corners at high speed would have dire consequences.” — Bill Loomis, The Detroit News

The quote above describes drivers in the early 20th century, when cars were a new sight on the roads. I don’t know about you, but I facepalmed reading that. It is like saying: “Training your human-recognizing machine vision model mainly with pictures of white people causes the model to recognize only white people as humans and people with dark skin as apes.” Oh, right, that happened to Google already.

Google Photos, y’all fucked up. My friend’s not a gorilla. pic.twitter.com/SMkMCsNVX4

Jacky (@jackyalcine), June 29, 2015

During the first 30 years when cars were becoming common, they were very dangerous. There were no street signs, proper road infrastructure, speed limits or even seatbelts. Not to mention regulation: just squeeze 10 people into one car, and drink and drive if you wish!

Biased AI is becoming what the “no one can trust a metal horse” argument was to the early 20th century car industry. Maybe in just a decade we’ll see AI solutions as something as fundamental and self-evident as cars.

The headline for Lyrebird’s ethical principles is “with great technology comes great responsibility”. This also holds true when it comes to legislation that is hopelessly behind the times. Even if it can be argued that corporations’ sole purpose is to generate profit for their owners, it is crucial that companies are active in this discussion and keep regulators up to date about advancements in technology. This time around, regulation can’t be 30 years behind the technology.

The picture from The Detroit News archives shows street lights of the early 20th century that had to be switched manually.

Our promise to AI ethics

The current situation in some companies is not that bright when it comes to transparency, mostly unintentionally. Many companies are testing things out without keeping proper records of which model has been trained with what data and when. It’s hard to be transparent if you are not sure about the details yourself.
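To make the idea of such a record concrete, here is a minimal Python sketch of what one entry might contain: which model, which data, which settings and when. It is only an illustration under assumptions and not how Valohai or any other tool implements this. The function names (`fingerprint`, `make_training_record`), the record fields and the example model name are all hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest that identifies the exact bytes used for training."""
    return hashlib.sha256(data).hexdigest()


def make_training_record(model_name: str, data: bytes, params: dict) -> dict:
    """Describe one training run as a plain dict (hypothetical schema)."""
    return {
        "model": model_name,
        "data_sha256": fingerprint(data),  # which data
        "params": params,                  # which settings
        "trained_at": datetime.now(timezone.utc).isoformat(),  # when
    }


# Hypothetical example run: a tiny in-memory dataset stands in for a real file.
record = make_training_record(
    "example-classifier",
    b"id,label\n1,cat\n2,dog\n",
    {"learning_rate": 0.01, "epochs": 5},
)
print(json.dumps(record, indent=2, sort_keys=True))
```

Because the data is identified by a hash rather than a file name, even a one-byte change to the training set produces a different record, which is the property that makes it possible to answer later which model was trained with what.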

Valohai promises to make companies’ deep learning experiments reproducible and to keep records of all training runs and their details. Let’s take a step towards transparent and ethical AI development together.

Valohai saves and archives all this information, making everything version-controlled and 100% reproducible.