Differences Between AI and Machine Learning and Why it Matters

Unfortunately, some tech organizations are misleading customers by claiming to use AI in their technologies while not being clear about their products’ limits.

This past week, while exploring the most recent press on artificial intelligence, I stumbled upon an organization that claimed to use “AI and machine learning” to collect and examine thousands of users’ data to enhance the user experience in mobile applications. Around the same time, I read about another organization that predicted customer behavior using “a blend of machine learning and AI” along with “AI-powered forecasting analytics.”

(I will refrain from naming the organizations so as not to shame them, since I somewhat “trust” that their SaaS products may tackle genuine problems, even if they are marketing them deceptively.)

There is a lot of confusion between machine learning and AI. Based on some articles I have been reading, many people still treat AI and machine learning as synonyms and use them interchangeably, while others use them as discrete, parallel advancements. In many cases, the people talking and writing about the technology do not realize the difference between AI and ML. In others, they deliberately overlook those distinctions to create hype for advertising and sales purposes.

Below we will go through some main differences between AI and machine learning.

What is machine learning?

Machine learning is a branch of artificial intelligence. As Tom M. Mitchell, Professor and former Chair of the Machine Learning Department at Carnegie Mellon University, puts it: “Machine learning is the study of computer algorithms that improve automatically through experience.”

For instance, if you provide a machine learning program with a considerably large data set of x-ray images, along with their descriptions (symptoms, items to consider, etc.), it will be able to assist with (or perhaps automate) the analysis of x-ray images later on. The machine learning model will look at each of the images in the data set and find the common patterns in images that have been labeled with similar symptoms. Furthermore (assuming that we use a good ML algorithm for images), when you provide the model with new images, it will compare them with the patterns it has learned and tell you how likely the images are to contain any of the symptoms it has analyzed before.

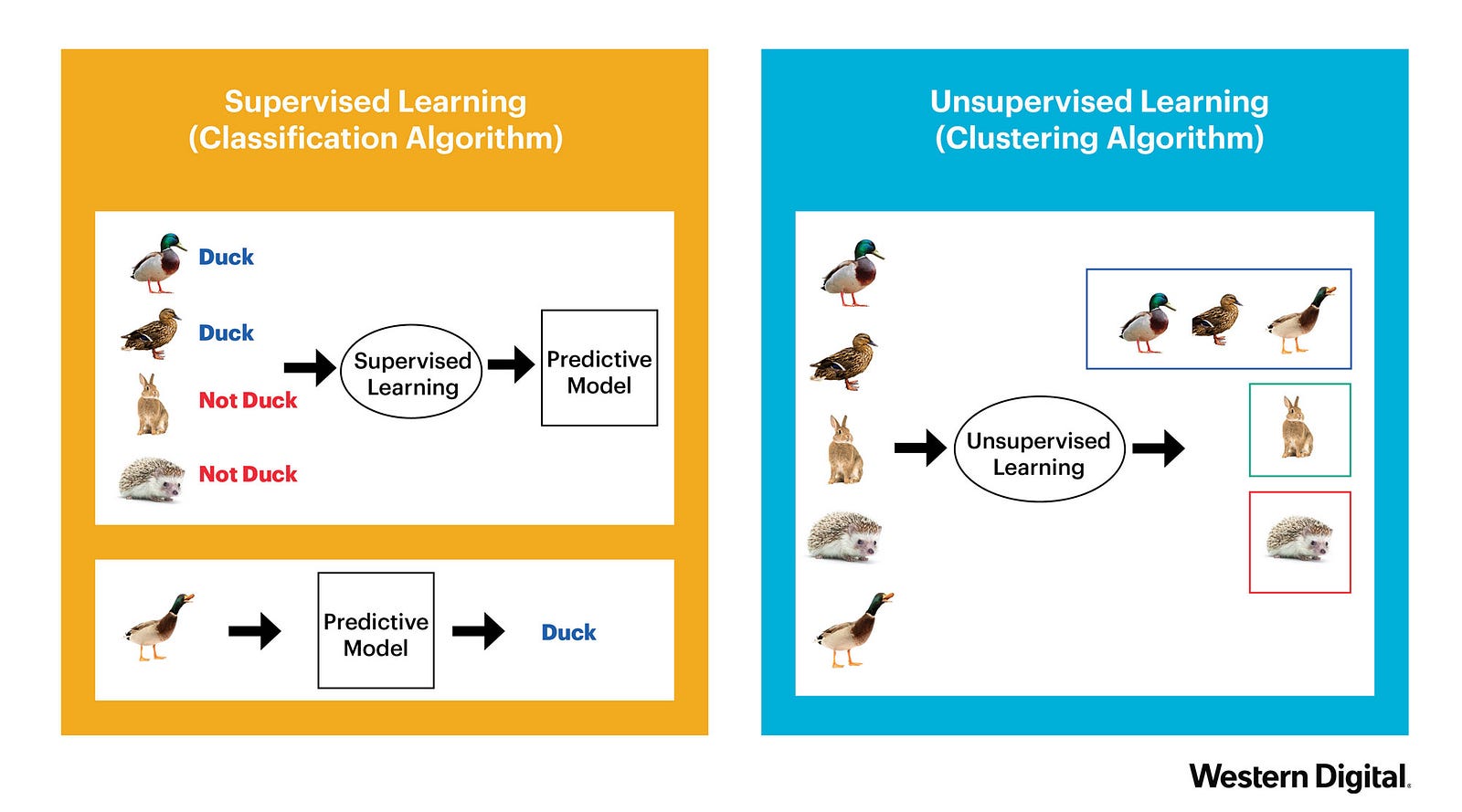

The type of machine learning from our previous example is called “supervised learning.” Supervised learning algorithms try to model the relationships and dependencies between the target prediction output and the input features, so that we can predict the output values for new data based on the relationships learned from previously fed data sets [15].
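As a rough illustration of the supervised setting, here is a minimal sketch of a nearest-centroid classifier in plain Python. The two numeric features per image, the labels, and the function names `fit_centroids` and `predict` are all made up for this example; a real x-ray model would work on pixel data with a far more capable algorithm.

```python
from collections import defaultdict
from math import dist


def fit_centroids(features, labels):
    """Learn one average feature vector (centroid) per label."""
    grouped = defaultdict(list)
    for x, y in zip(features, labels):
        grouped[y].append(x)
    return {
        label: tuple(sum(col) / len(rows) for col in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(centroids, x):
    """Return the label whose centroid is closest to the new example x."""
    return min(centroids, key=lambda label: dist(centroids[label], x))


# Hypothetical labeled training data: two invented features per image.
train_x = [(0.9, 0.8), (0.8, 0.9), (0.1, 0.2), (0.2, 0.1)]
train_y = ["symptom", "symptom", "healthy", "healthy"]

centroids = fit_centroids(train_x, train_y)
prediction = predict(centroids, (0.85, 0.75))  # a new, unlabeled example
```

The labels are what make this supervised: the model only learns what “symptom” looks like because every training example was tagged in advance.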

Unsupervised learning, another type of machine learning, is a family of algorithms mainly used for pattern detection and descriptive modeling. These algorithms do not have output categories or labels on the data (the model is trained with unlabeled data).
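To make the contrast with labeled data concrete, here is a small sketch of k-means clustering, one common unsupervised technique (chosen for this example, not named in the text above). The points and the starting centroids are invented; note that no labels are passed in anywhere.

```python
from math import dist


def k_means(points, centroids, iterations=10):
    """Plain k-means: alternately assign points and recompute centroids."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        # An empty cluster keeps its previous centroid.
        centroids = [
            tuple(sum(col) / len(cluster) for col in zip(*cluster)) if cluster else old
            for cluster, old in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical unlabeled data forming two loose groups.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.2), (5.0, 5.0), (5.2, 4.8), (4.8, 5.2)]
centroids, clusters = k_means(points, centroids=[points[0], points[3]])
```

The algorithm discovers the two groups on its own; deciding what each group means is left to a human.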

Reinforcement learning, the third popular type of machine learning, aims at using observations gathered from interaction with its environment to take actions that maximize the reward or minimize the risk. In this case, the reinforcement learning algorithm (called the agent) continuously learns from its environment through iteration. A great example of reinforcement learning is computers reaching superhuman performance and beating humans at computer games [3].
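The agent-and-environment loop can be sketched with tabular Q-learning, one standard reinforcement learning method (picked here for illustration; the game-playing systems cited above are far more elaborate). The five-cell corridor environment, the reward of 1 at the goal, and the hyperparameter values are all assumptions made for this toy example.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # index 0 = step left, index 1 = step right


def step(state, action_index):
    """Toy environment: move, stop at the walls, reward 1 on reaching the goal."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # estimated value of each action
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore
            else:
                best = max(q[state])       # exploit, breaking ties at random
                action = rng.choice([a for a in range(2) if q[state][a] == best])
            next_state, reward = step(state, action)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = train()
policy = ["left" if row[0] > row[1] else "right" for row in q_table[:-1]]
```

Nobody tells the agent that moving right is correct; it works that out from the rewards it collects over repeated episodes.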

Machine learning is fascinating, particularly its advanced sub-branches, i.e., deep learning and the various types of neural networks. In any case, it is “magic,” regardless of whether the public at times has trouble observing its inner workings. In fact, while some tend to compare deep learning and neural networks to the way the human brain works, there are important differences between the two [4].

Note on ML: We know what machine learning is, we know that it is a branch of artificial intelligence, and we know what it can and cannot do [5].

What is Artificial Intelligence (AI)?

On the other hand, the term “artificial intelligence” is exceptionally wide in scope. According to Andrew Moore [6], Dean of the School of Computer Science at Carnegie Mellon University, “Artificial intelligence is the science and engineering of making computers behave in ways that, until recently, we thought required human intelligence.”

This is a great way to define AI in a single sentence; however, it still shows how broad and vague the field is. Several decades ago, a pocket calculator was considered a form of AI, since mathematical calculation was something that only the human brain could perform. Today, the calculator is one of the most common applications found on every computer’s operating system. “Until recently,” therefore, is something that moves with time.

As Zachary Lipton, Assistant Professor and Researcher at CMU, clarifies on Approximately Correct [7], the term AI “is aspirational, a moving target based on those capabilities that humans possess but which machines do not.”

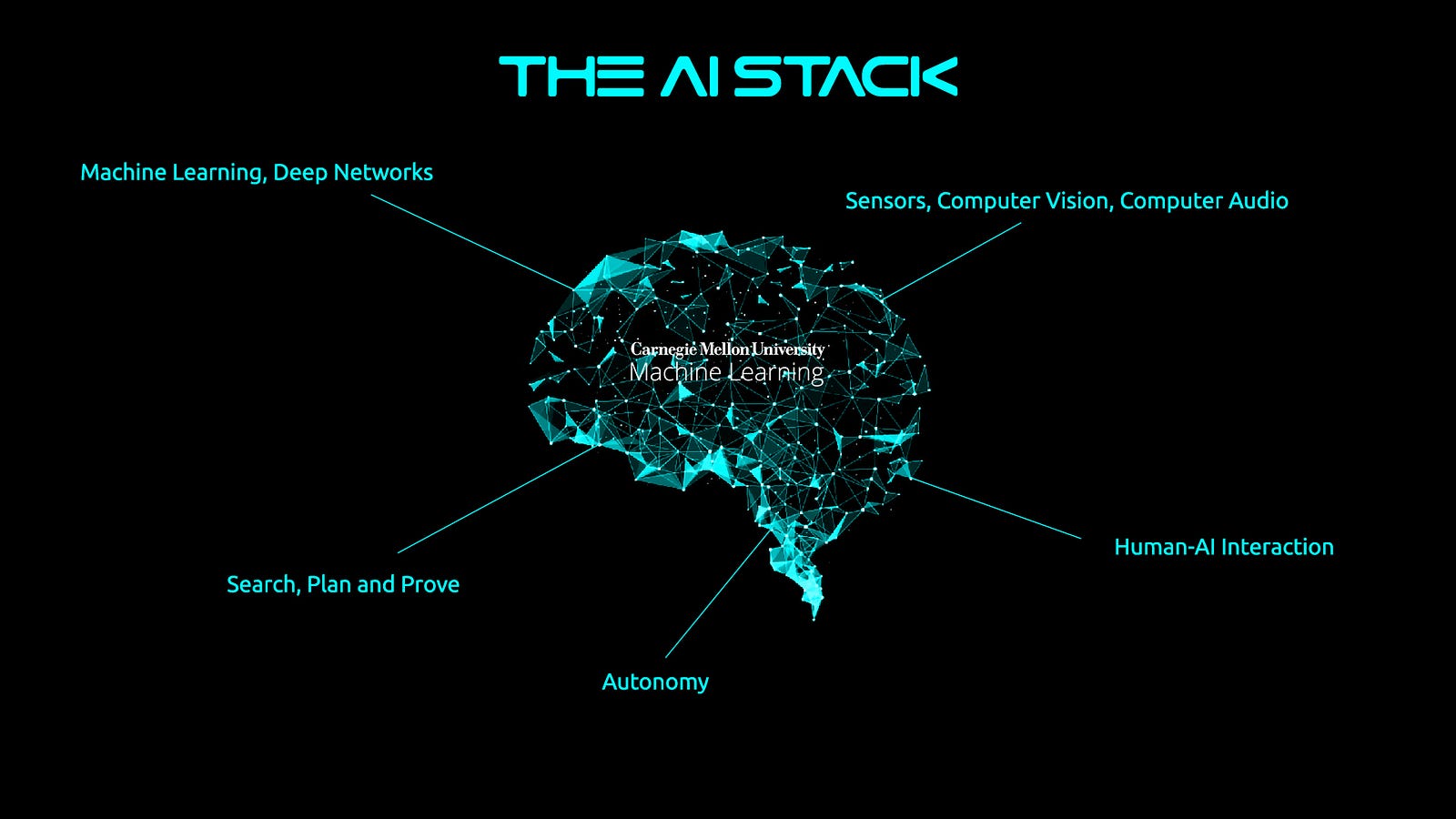

AI also includes a considerable number of technology advances that we know. Machine learning is only one of them. Earlier works of AI used different techniques. For instance, Deep Blue, the AI that defeated the world’s chess champion in 1997, used a method called tree search [8] to evaluate millions of moves at every turn.
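To give a flavor of what tree search means, here is a minimal sketch of minimax, a textbook game-tree search. It is not Deep Blue’s actual implementation, which was far more sophisticated; the tiny hand-built tree and its scores are hypothetical. Nothing is learned from data here, which is the point: the program simply looks ahead.

```python
def minimax(node, maximizing=True):
    """Return the best score reachable from `node` if both sides play optimally."""
    if isinstance(node, int):  # leaf: an evaluation score for that position
        return node
    scores = [minimax(child, not maximizing) for child in node]
    return max(scores) if maximizing else min(scores)


def best_move(children):
    """Index of the root move with the highest minimax value."""
    values = [minimax(child, maximizing=False) for child in children]
    return max(range(len(values)), key=values.__getitem__)


# Hypothetical two-ply tree: three possible moves, each with three opponent replies.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]

value = minimax(tree)    # best guaranteed score for the player to move
choice = best_move(tree)  # which of the three moves achieves it
```

The third move contains the tempting score of 14, but the opponent would answer with the reply worth 2, so the search prefers the first move, whose worst case is 3.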

AI as we know it today is symbolized by human-AI interaction gadgets such as Google Home, Siri and Alexa, by the machine learning powered video prediction systems that power Netflix, Amazon and YouTube, and by the algorithms hedge funds use to make micro-trades that rake in millions of dollars every year. These technology advancements are progressively becoming important in our daily lives. In fact, they are intelligent assistants that enhance our abilities and make us more productive.

Note on AI: In contrast to machine learning, AI is a moving target, and its definition changes as its related technological advancements become further developed. What is and is not AI can easily be challenged, whereas machine learning is very clear-cut in its definition. Possibly, within a few decades, today’s innovative AI advancements will be considered as dull as calculators are to us right now.

Why do tech companies tend to use AI and ML interchangeably?

Since the term “artificial intelligence” was coined in 1956 by a group of researchers including Allen Newell and Herbert A. Simon [9], the AI industry has gone through many fluctuations. In the early decades, there was a lot of hype surrounding the industry, and many scientists concurred that human-level AI was just around the corner. However, undelivered promises caused general disenchantment with the industry among the public and led to the AI winter, a period when funding and interest in the field subsided considerably.

Afterwards, organizations attempted to distance themselves from the term AI, which had become synonymous with unsubstantiated hype, and used different terms to refer to their work. For instance, IBM described Deep Blue as a supercomputer and explicitly stated that it did not use artificial intelligence [10], while it actually did.

During this period, a variety of other terms such as big data, predictive analytics and machine learning started gaining traction and popularity. In 2012, machine learning, deep learning and neural networks made great strides and started being utilized in a growing number of fields. Organizations suddenly started to use the terms machine learning and deep learning to advertise their products.

Deep learning started to perform tasks that were impossible to do with classic rule-based programming. Fields such as speech and face recognition, image classification and natural language processing, which were at early stages, suddenly took great leaps.

Hence, thanks to this momentum, we are seeing a shift back to AI. For those who had been used to the limits of old-fashioned software, the effects of deep learning almost seemed like “magic,” especially since some of the fields that neural networks and deep learning are entering were considered off limits for computers. Machine learning and deep learning engineers are earning sky-high salaries [11], even when they are working at non-profit organizations, which speaks to how hot the field is.

All these elements have helped reignite the excitement and hype surrounding artificial intelligence. Therefore, many organizations find it more profitable to use the vague term AI, which has a lot of baggage and exudes a mystic aura, instead of being more specific about what kind of technologies they employ. This helps them oversell, redesign or re-market their products’ capabilities without being clear about their limits.

In the meantime, the “advanced artificial intelligence” that these organizations claim to use is usually a variant of machine learning or some other known technology.

Sadly, this is something that tech publications often report without deep scrutiny, frequently accompanying AI articles with pictures of crystal balls and other supernatural portrayals. Such deception helps those companies generate hype around their offerings. Yet, down the road, as they fail to meet expectations, these organizations are forced to hire humans to make up for the shortcomings of their so-called AI [12]. In the end, they might end up causing mistrust in the field and trigger another AI winter for the sake of short-term gains.

I am always open to feedback. Please share in the comments if you see something that may need to be revisited. Thank you for reading!

References:

[3] Watch our AI system play against five of the world’s top Dota 2 Professionals | OpenAI | https://openai.com/five/

[9] Reinventing Education Based on Data and What Works, Since 1955 | Carnegie Mellon University | https://www.cmu.edu/simon/what-is-simon/history.html

[14] Dr. Andrew Moore Opening Keynote | Artificial Intelligence and Global Security Initiative | https://youtu.be/r-zXI-DltT8