How Facebook 3D Photos Work

Facebook recently rolled out a new feature, 3D Photos, or, as they call it in their JavaScript, 2.5D Photos. Here is what it looks like:

To create such a photo, choose 3D Photo when posting to Facebook, and use the new Portrait mode on iPhone. That’s it.

But how does it work under the hood? I like to learn new ideas by reading other people’s code. So I was curious enough to learn how they implemented this effect at Facebook. Here is the story. If you just want to know the tech, skip to the “how it all works” part.

First guess, fragment shader?

There is a popular way of faking 3D with a shader: take a depth map and use it to displace the image pixels in a WebGL fragment shader.
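To make the idea concrete, here is a minimal CPU sketch of what such a shader would do for every pixel. It is only an illustration of the general trick, not anyone's production code: the function name `displaceByDepth`, the RGBA layout and the convention that depth is in [0, 1] with 1 meaning "near" are all my assumptions.

```javascript
// CPU version of the per-pixel work a depth-map fragment shader would do.
// image: Uint8ClampedArray of RGBA pixels, depth: Float32Array in [0, 1]
// (assumed convention: 1 = near, 0 = far). shiftX / shiftY are in pixels.
function displaceByDepth(image, depth, width, height, shiftX, shiftY) {
  const out = new Uint8ClampedArray(image.length);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const d = depth[y * width + x];
      // Nearer pixels sample from further away, which reads as parallax.
      // Coordinates are clamped so we never read outside the image.
      const sx = Math.min(width - 1, Math.max(0, Math.round(x + shiftX * d)));
      const sy = Math.min(height - 1, Math.max(0, Math.round(y + shiftY * d)));
      const src = (sy * width + sx) * 4;
      const dst = (y * width + x) * 4;
      for (let c = 0; c < 4; c++) out[dst + c] = image[src + c];
    }
  }
  return out;
}
```

Feeding the mouse offset into `shiftX` and `shiftY` on every frame gives the fake 3D look. A real shader does the same lookup on the GPU with texture coordinates instead of pixel indices.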

At first I thought the Facebook team went for that, as it’s very easy to do, and the image size is quite small. But that’s not how they made it. You can see rough edges wherever the depth changes in Facebook’s implementation.

Another idea Facebook might have used is vertex displacement based on the image, like the one we used with Denys recently on his

There are some other ways to fake it, but now I needed to know how they did it! Because I’m curious, you know.

How I decompiled it

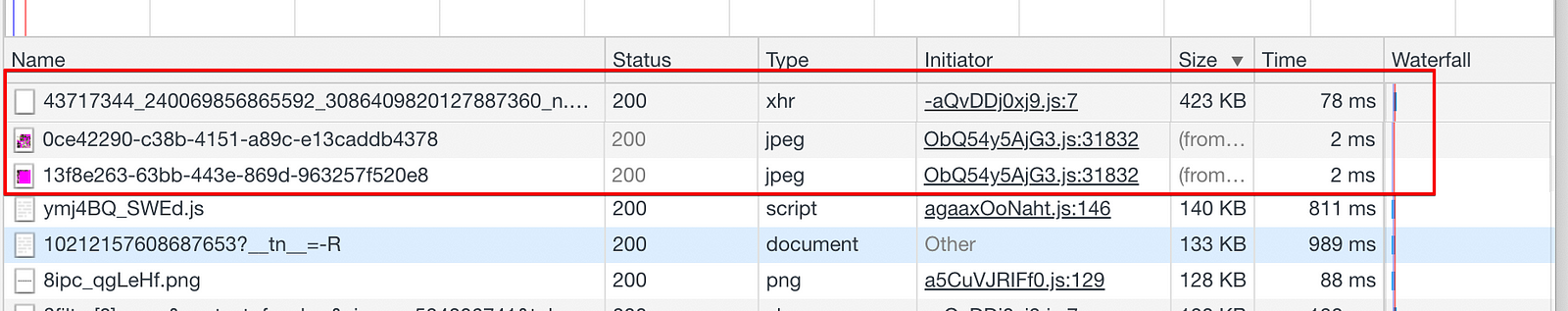

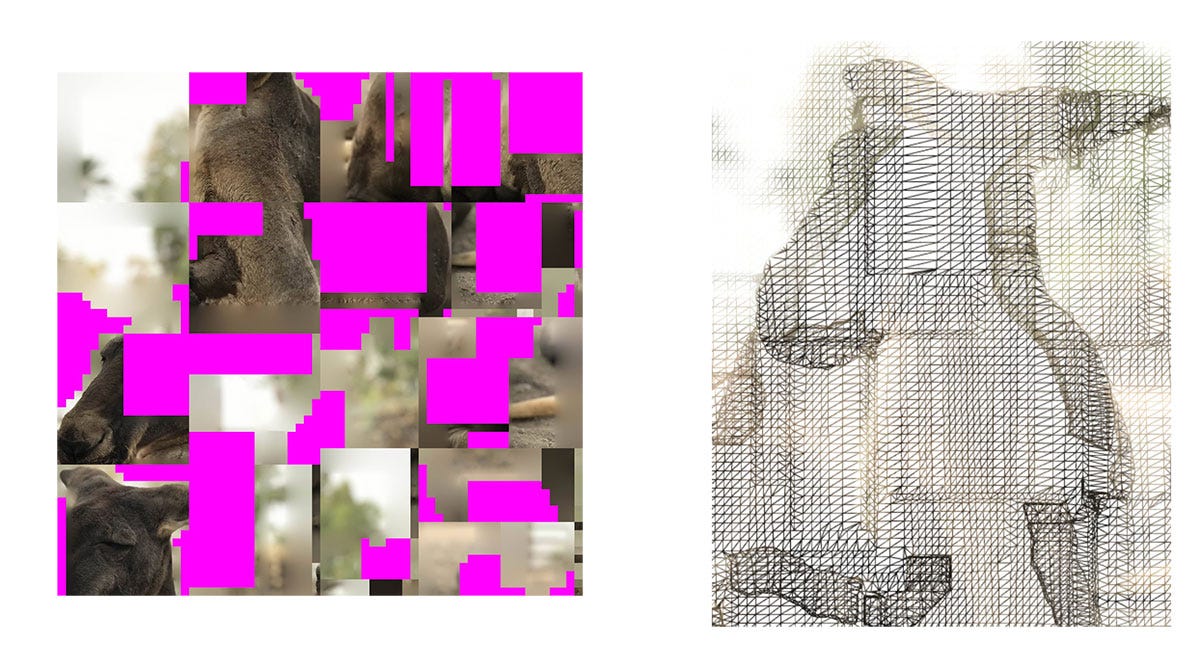

The best way to start is the Network tab in DevTools. With everything sorted by file size, this is what you get for that kangaroo:

I downloaded the biggest file, and it turned out to be a .glb file, which is the binary form of the glTF format, the best choice for 3D models on the web right now.
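If you want to check such a download yourself, the .glb container is simple: a 12-byte header (magic, version, total length) followed by chunks, normally one JSON chunk and one binary chunk. Below is a small sketch of a reader based on the glTF 2.0 binary layout. The function name `parseGlb` is mine, and it assumes a well-formed file.

```javascript
const GLB_MAGIC = 0x46546c67; // ASCII "glTF", read as little-endian uint32
const CHUNK_JSON = 0x4e4f534a; // ASCII "JSON"
const CHUNK_BIN = 0x004e4942; // ASCII "BIN\0"

// Splits a .glb ArrayBuffer into its parsed JSON scene description
// and the raw binary payload (vertex data, embedded images, ...).
function parseGlb(arrayBuffer) {
  const view = new DataView(arrayBuffer);
  if (view.getUint32(0, true) !== GLB_MAGIC) {
    throw new Error("Not a .glb file");
  }
  const version = view.getUint32(4, true);
  const totalLength = view.getUint32(8, true);

  let json = null;
  let bin = null;
  let offset = 12; // chunks start right after the header
  while (offset + 8 <= totalLength) {
    const chunkLength = view.getUint32(offset, true);
    const chunkType = view.getUint32(offset + 4, true);
    const data = new Uint8Array(arrayBuffer, offset + 8, chunkLength);
    if (chunkType === CHUNK_JSON) {
      // The JSON chunk is padded with spaces, which JSON.parse ignores.
      json = JSON.parse(new TextDecoder().decode(data));
    } else if (chunkType === CHUNK_BIN) {
      bin = data;
    }
    offset += 8 + chunkLength;
  }
  return { version, json, bin };
}
```

In the browser you could feed it with `fetch(url).then((r) => r.arrayBuffer()).then(parseGlb)`, where `url` is whatever address you copied from the Network tab. The `json.meshes` and `json.images` entries are usually the interesting part.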

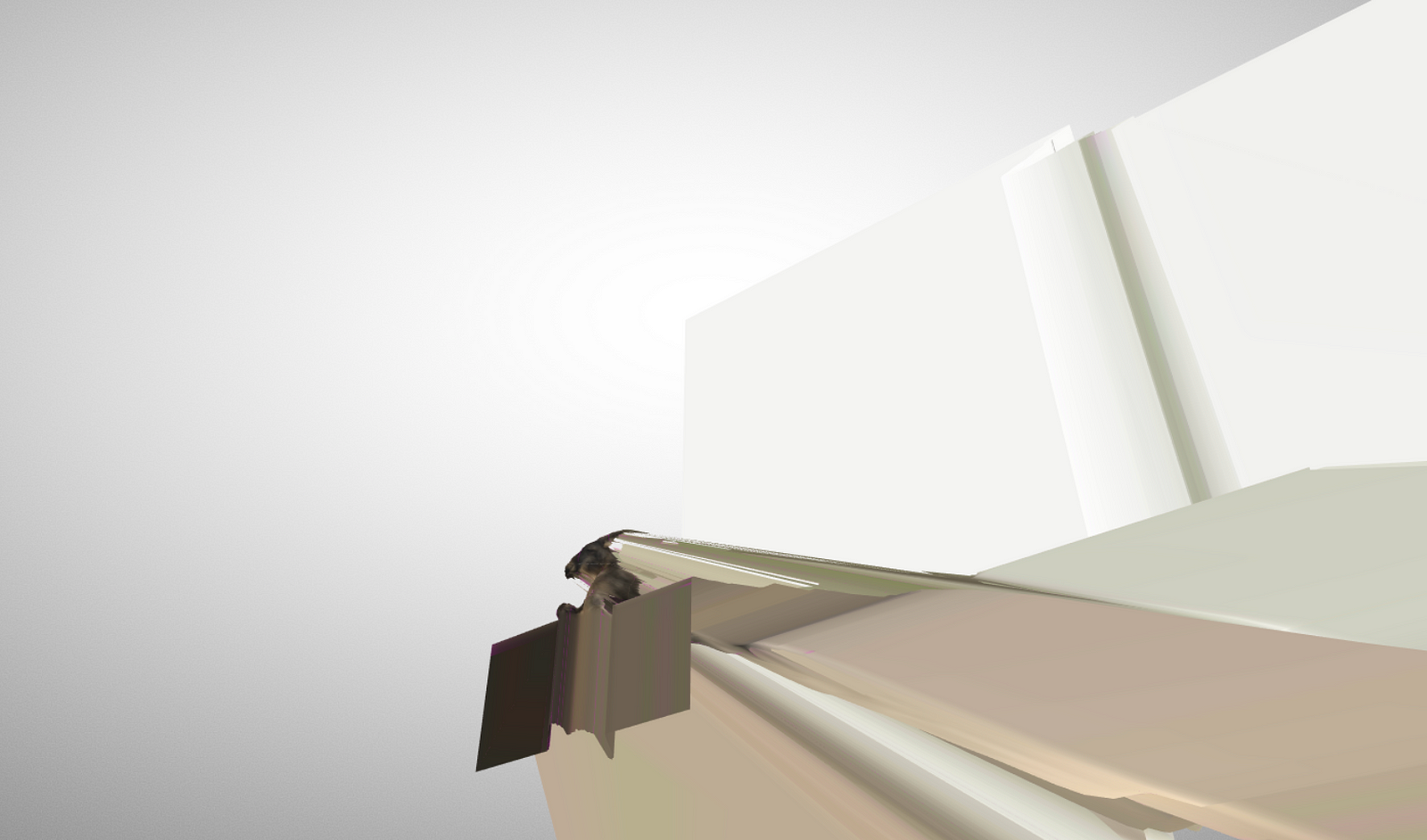

So I went to the online glTF viewer, uploaded that file, and saw this:

Not very helpful, but now I knew it was not about vertex or fragment displacement: there is an actual 3D model here.

So I went to the JavaScript sources of the Facebook page (using this extension), and found out they use the Three.js framework for that viewer. Nice, I knew how to handle that one.

I found the three.js scene object (that’s actually the hardest part), and just changed one value:

material.wireframe = true;
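If you don't know which object holds the material, you can flip the flag on everything in the scene. This is a small sketch that assumes you already have a reference to the three.js scene in a variable; the helper name `setWireframe` is mine, while `traverse` and `material.wireframe` are regular three.js API.

```javascript
// Walks the scene graph and toggles wireframe rendering on every material.
// `scene` is assumed to be a three.js Scene (or any Object3D).
function setWireframe(scene, enabled) {
  let count = 0;
  scene.traverse((object) => {
    if (!object.material) return; // groups, cameras, lights...
    // A mesh may carry a single material or an array of them.
    const materials = Array.isArray(object.material)
      ? object.material
      : [object.material];
    for (const material of materials) {
      material.wireframe = enabled;
      count++;
    }
  });
  return count; // number of materials that were touched
}
```

Calling `setWireframe(scene, true)` from the console and triggering a re-render (moving the mouse over the photo is enough) should show the triangles.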

Finally I was able to see the magic behind this effect!

How it all works

First of all, when a new iPhone takes a picture in Portrait mode, it also stores depth data along with the usual .jpg. Here is a little video explanation of how it gathers it:

According to the Apple specification, this data comes as a point cloud, not a mesh.

Next, Facebook uses some interpolation algorithm to create an actual 3D model out of it, with the photo as a texture:

Now when you load a Facebook page, it starts a three.js scene and loads this model with the texture.
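I don't know the algorithm Facebook actually uses, but the simplest way to get from depth samples to a textured mesh is to treat the depth as a regular grid, make one vertex per sample and connect neighbours with two triangles per cell. Here is a sketch of that naive approach; the function name, the unit-square layout and the `depthScale` parameter are my own assumptions.

```javascript
// Naive depth grid -> triangle mesh. depth is a Float32Array of
// width * height samples in row-major order (width, height >= 2).
function depthGridToMesh(depth, width, height, depthScale) {
  const positions = new Float32Array(width * height * 3);
  const uvs = new Float32Array(width * height * 2);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = y * width + x;
      const u = x / (width - 1);
      const v = y / (height - 1);
      positions[i * 3] = u - 0.5; // x in [-0.5, 0.5]
      positions[i * 3 + 1] = 0.5 - v; // y up, first row at the top
      positions[i * 3 + 2] = -depth[i] * depthScale; // push away from camera
      uvs[i * 2] = u; // the photo is mapped 1:1 onto the grid
      uvs[i * 2 + 1] = 1 - v;
    }
  }
  const indices = new Uint32Array((width - 1) * (height - 1) * 6);
  let k = 0;
  for (let y = 0; y < height - 1; y++) {
    for (let x = 0; x < width - 1; x++) {
      const a = y * width + x; // top-left
      const b = a + 1; // top-right
      const c = a + width; // bottom-left
      const d = c + 1; // bottom-right
      // Two triangles per cell, counter-clockwise when seen from +z.
      indices[k++] = a; indices[k++] = c; indices[k++] = b;
      indices[k++] = b; indices[k++] = c; indices[k++] = d;
    }
  }
  return { positions, uvs, indices };
}
```

The three arrays map directly onto the `position`, `uv` and index attributes of a three.js `BufferGeometry`. Note that this naive version stretches triangles across every depth jump, so a real pipeline would need something smarter around object borders.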

When you move your mouse, the camera position changes, so you experience the sweet 2.5D effect. Which is actually true 3D, as we just found out.
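I haven't traced the exact camera code, so take this as a guess at the general shape: map the pointer position to a small offset and move the camera by it. The function name and the `maxOffset` parameter are hypothetical.

```javascript
// Maps a pointer position inside the viewer to a small camera offset.
// The centre of the view gives no offset; the edges give +/- maxOffset.
function cameraOffsetFromPointer(pointerX, pointerY, viewWidth, viewHeight, maxOffset) {
  const nx = (pointerX / viewWidth) * 2 - 1; // -1 (left) .. 1 (right)
  const ny = 1 - (pointerY / viewHeight) * 2; // -1 (bottom) .. 1 (top)
  return { x: nx * maxOffset, y: ny * maxOffset };
}
```

In a three.js viewer you would then do something like `camera.position.x = offset.x; camera.position.y = offset.y; camera.lookAt(0, 0, 0);` on every mouse move. Keeping `maxOffset` small matters, because the model has no geometry behind the foreground object and big moves would reveal that.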

In the end

I don’t know why Facebook picked a more complicated and techy way of doing this effect. Maybe because they already have a 3D viewer, or they plan something bigger soon. No idea.

I’m still curious about the exact algorithm used to create the glTF model out of the depth data. Specifically, notice how this part is not just “depth” but more like a separate object in front of the background.

I think that’s some kind of special interpolation of the depth data. But maybe you have another idea? Maybe that’s TrueDepth information we can get out of the photo?

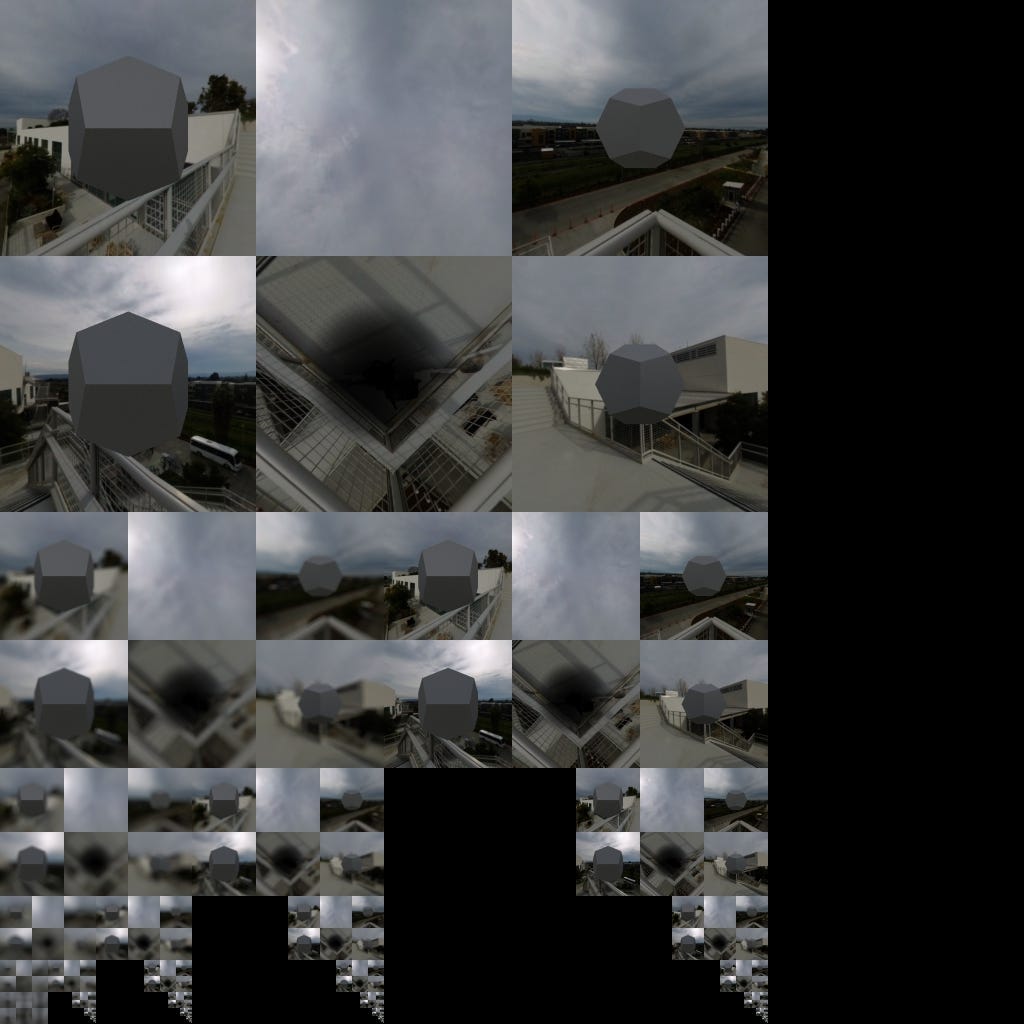

There is also one strange (possibly just a test) image loaded additionally on each 3D photo:

Thanks to Michael Feldstein, who uncovered the mystery: that image is a PreMultipliedRadienceEnvironmentMap, a packed texture that three.js uses instead of a mipmapped cubemap.

That’s it. Hope you liked this small investigation. I’d be grateful if you share any of your ideas on this. Have a nice day!