Learning 3D Face Morphable Model Out of 2D Image

Researchers from Michigan State University propose a novel Deep Learning-based approach to learning a 3D Morphable Model. Exploiting the power of Deep Neural Networks to learn non-linear mappings, they suggest a method for learning 3D Morphable Model out of just 2D images from in-the-wild (images not taken in a controlled environment like a lab).

Previous Approaches

A conventional 3DMM is learned from a set of 3D face scans with associated well-controlled 2D face images. Traditionally, 3DMM is learned through supervision by performing dimension reduction, typically Principal Component Analysis (PCA), on a training set of co-captured 3D face scans and 2D images. By employing a linear model such as PCA, non-linear transformations and facial variations cannot be captured by the 3D Morphable Model. Moreover, large amounts of high-quality 3D data are needed to model highly variable 3D face shapes.

State of the art idea

The idea of the proposed approach is to leverage the power of Deep Neural Networks or more specifically Convolutional Neural Networks (which are more suitable for the task and less expensive than multilayer perceptrons) to learn the 3D Morphable Model with an encoder network that takes a face image as input and generates the shape and albedo parameters, from which two decoders estimate shape and albedo.

Method

As mentioned before a linear 3DMM has the problems such as the need of 3D face scans for supervised learning, unable to leverage massive in-the-wild face images for learning, and the limited representation power due to the linear model (PCA). The proposed method learns a nonlinear 3 DMM model using only large-scale in-the-wild 2D face images.

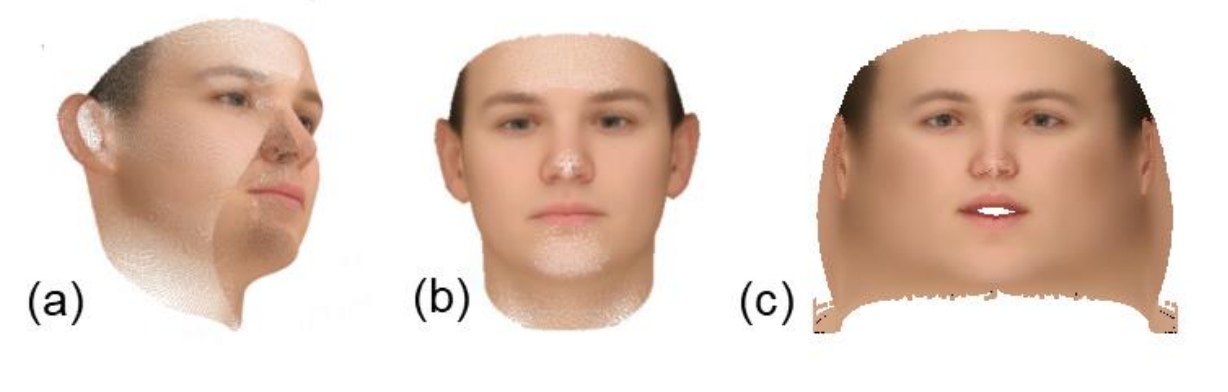

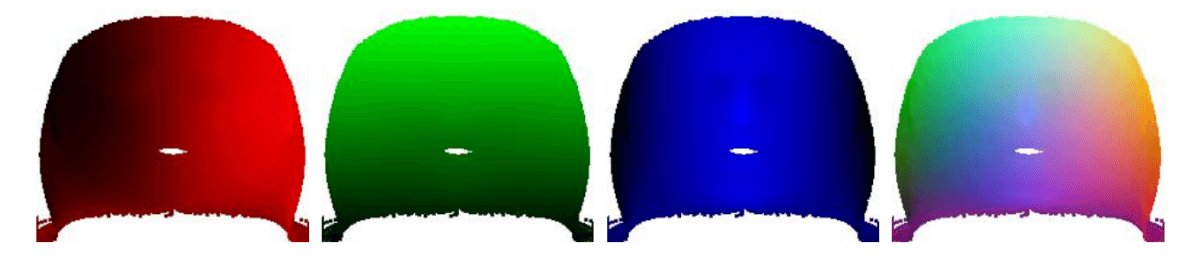

UV Space Representation

In their method, the researchers use an unwrapped 2D texture (where 3 D vertex v is projected onto the UV space) as a texture representation for the shape and the albedo. They argue that keeping the spatial information is very important as they employ Convolutional Networks in their method and frontal face-images contain little information about the two sides. Therefore their choice falls on UV-space representation.

Network architecture

They designed an architecture that given an input image it encodes it into shape, albedo and lightning parameters (vectors). The encoded latent vectors for albedo and shape are decoded using two different Decoder networks (again Convolutional Neural Networks) to obtain face skin reflectance, image (for the albedo) and 3D face mash (for the shape). Then a differentiable rendering layer was designed to generate the reconstructed face by fusing the 3D face, albedo, lighting, and the camera projection parameters estimated by the encoder. The whole architecture is nicely presented in the figure below.