What can you do with 25 Racks of Servers in 2018? Part 1…

My team and I, at Blockchain Productivity, integrate and deploy IT systems for bulk computing applications. Our AmbientCube enables us to deliver computing in a modular footprint for a variety of scenarios. We have deployed more than 320 teraflops of capacity in a non-traditional decentralized data center and are pushing the envelope to see what we can do.

TL;DR — Over the last year we have incrementally built a supercomputer and now have access to a large quantity of network enterprise servers. We are going to do something interesting with 20,000 Ivy Bridge Cores for a 12-hour period every few weeks and you will help us decide the projects! Our first 12-hour campaign will be to direct our computing power at a

Background

We see computing becoming a commodity that will be as important as electricity in the 21st century.

We believe that computing will continue to become a commodity and we will see a world where brands like AWS and Azure are not able to command a premium. Many of these developments are in active discussion in a variety of blockchain projects that envision a role for providers of capacity that will be compensated for delivering commodity resources such as

There are always bigger problems to compute.

Grid computing has been around for decades. Well before the World Wide Web, there were projects using computers to solve the world’s big problems. We have crossed the threshold of petascale computing and are charging on to exascale and beyond. There are always interesting problems and application areas, and the value of the results continues to increase with added computational power.

The Future of Computing Is Interesting but Still Developing

We have a strong hunch that we are in the middle of a transition from cloud computing to something else, but it’s not entirely clear what that something is. Chris Dixon had an excellent write up a few years ago about “What’s next for computing?”. We see an incredible convergence between mobile and cloud that will look very similar and at the same time very different from the computing and communication fabric of today. One of my favorite quotes about the future comes from William Gibson, “The future is already here — it’s just not very evenly distributed”. To this end, we are on a journey to visit innovative communities and connect with the individuals and organizations that are already building the applications of tomorrow.

First Signs of the Transition from (Cloud, Distributed, Parallel, Utility) Computing to (Ambient, Edge, Fog, Ubiquitous) Computing

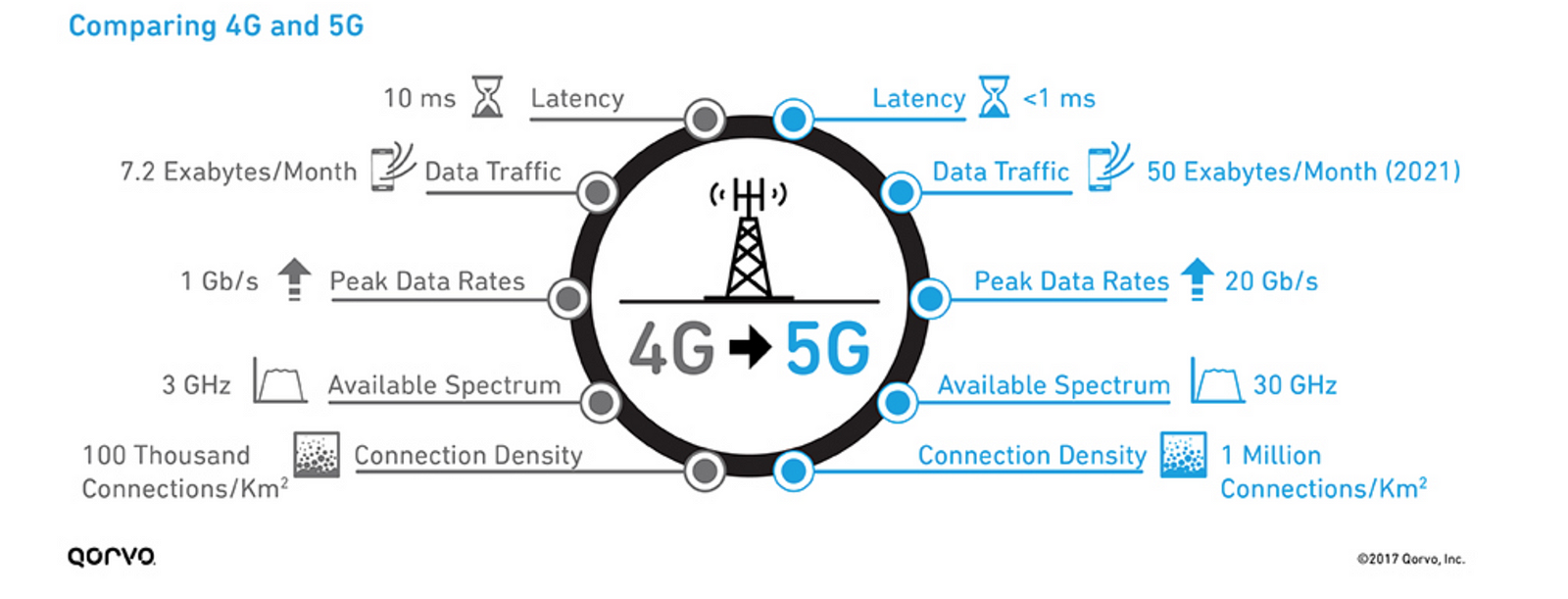

We are surrounded by processors and computing. This is only the beginning as we digitize and connect everything around us. Both the number of connected devices and the amount of data being generated every day are exploding. Today the cloud is quickly charging toward world domination as enterprises of all sizes start to consume computing rather than invest in building new data centers. As 5G comes out of the lab and applications such as AR conferencing emerge, we will see computing continue to develop at the edge of the network and integrate in fascinating ways (both at the network and CDN level) with the public clouds that are becoming so dominant today.

A number of properties (latency, jitter, mobility support) that are required for emerging applications are forcing the underlying compute infrastructure to adapt. This adaptation happens by changing the properties of the network and where computing elements are deployed within it. Depending on the application, the combination of fixed and mobile elements, and the mix of wireless and wireline connectivity, a number of topologies are possible. Two applications that are coming online today are autonomous vehicles (vehicular networks) and virtual reality.

Vehicular Networks

Tesla’s Autopilot has given us an early sneak peek at what a car can do as an edge computer that shares a large dataset across an entire fleet. Currently, the capabilities are updated across the fleet on a periodic basis (less often than once per week). A number of interesting capabilities come online as the infrastructure evolves. The Fleet Learning dataset could be updated in near real time, allowing every Tesla vehicle to be much more responsive to developing local conditions. Adding data from traffic cameras and other fixed infrastructure could further extend the capabilities of autonomous vehicles.

Virtual Reality

Immersive experiences are already pushing the boundaries of collaboration and design. NVIDIA has demonstrated its Holodeck concept, which enables design teams to collaborate in product design exercises. It’s not a significant stretch to imagine these interactions happening in an AR context. To deliver this experience, processing will have to happen much closer to the user, with latency approaching the capabilities offered by 5G wireless networks. This world is coming quickly.

We are looking for exciting use cases as we build our ecosystem.

We continue our journey to connect with organizations and technologists who are using disproportionately large amounts of computing to deliver their value propositions. We have traveled to a number of communities, including New York and Tel Aviv, to engage with companies and individuals doing groundbreaking work in their respective spaces. A number of interesting early applications have emerged:

- Grid Computing

- Machine Learning

- Bayesian Analytics

- Cloud Video Rendering

- Integer Programming for Supply Chain applications

- Service Quality Monitoring

- Zero Knowledge Proof processing

We will expand our list of applications as we discover them. Do you have an application that puts 80 cores, 800 cores, or 8,000+ cores to work for a month? If so, let’s talk!

We hope to make this a collaborative exercise!

We would love to hear your thoughts on what else we should be doing with all of this horsepower. We are reaching out to a few online communities to help us imagine the possibilities. Please leave your comments below and let us know if you enjoyed the content.

What are we actually doing, and why?

We are going to do something interesting with 20,000 Ivy Bridge Cores for a 12-hour period every few weeks (12-hour campaigns) and you will help us decide.

A bit about our setup.

We have access to 20,000 Ivy Bridge EP cores (40,000 threads the way that AWS and other cloud providers count). They are primarily packaged in node machines at a density of 800 cores per standard 19" rack. Because of our extensive use of modern DevOps tools and processes, we can remotely control the mechanical plant and the workload of the infrastructure at a very granular level. For this exercise, we will first test the optimal configuration to utilize the entire capacity of our footprint and then deploy that workload so that we can document a continuous 12 hours of directed effort.
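As a rough sanity check on the figures above, the sketch below works through the arithmetic. It uses only numbers quoted in this post (20,000 cores, 40,000 threads, 800 cores per rack, 320 teraflops, 12-hour campaigns) and assumes the 320 teraflops figure refers to the same 20,000 cores. The per-core throughput it derives is a simple average, not a measured benchmark, and the function and variable names are purely illustrative.

```python
# Figures quoted in the post.
TOTAL_CORES = 20_000
TOTAL_THREADS = 40_000
CORES_PER_RACK = 800
TOTAL_TFLOPS = 320          # assumed to describe the same 20,000 cores
CAMPAIGN_HOURS = 12


def footprint_summary():
    """Derive rack count, threads per core, average per-core throughput,
    and the core-hours available in one 12-hour campaign."""
    racks = TOTAL_CORES // CORES_PER_RACK
    threads_per_core = TOTAL_THREADS // TOTAL_CORES
    # 1 teraflop = 1,000 gigaflops; this is an average, not a benchmark.
    gflops_per_core = TOTAL_TFLOPS * 1000 / TOTAL_CORES
    core_hours = TOTAL_CORES * CAMPAIGN_HOURS
    return {
        "racks": racks,
        "threads_per_core": threads_per_core,
        "gflops_per_core": gflops_per_core,
        "core_hours_per_campaign": core_hours,
    }


if __name__ == "__main__":
    for name, value in footprint_summary().items():
        print(f"{name}: {value}")
```

Under these assumptions the footprint works out to 25 racks, which matches the title, and each campaign offers 240,000 core-hours of compute.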

We packaged our computer in a modular form factor so that we can optimize based on application

Because the computing fabric of the future is developing so quickly, it really helps to be flexible. To this end, we house our machines in a modular system that allows us to be flexible with location. We call this our AmbientCube. Currently, we are optimized to provide the lowest price per flop. However, in the future we can optimize for low-latency deployment (at cell sites) or on-premises deployment (in enterprise facilities).

The first 12-hour campaign will be…

My first encounter with cluster computing was in the early 2000s, just when Beowulf clusters were becoming a common computing paradigm. Having assembled a 120-node cluster from high-end engineering workstations, I was looking for an appropriate application to direct this capacity toward. In the days before Bitcoin, the obvious choice was the great projects that had been assembled by David Anderson at Berkeley. Back then I chose to direct the capacity that we had put together toward SETI@home. This was a great early experience to see how underutilized power could be directed to great effect. For our first effort, we will point our resources to another BOINC project where 320 teraflops could have a significant impact.