Generating Large, Synthetic, Annotated, & Photorealistic Datasets for Computer Vision

I’d like to introduce you to the beta of a tool we’ve been working on at Greppy, called the Greppy Metaverse, which assists with computer vision object recognition / semantic segmentation / instance segmentation, by making it quick and easy to generate a lot of training data for machine learning. (Aside: we’d also love to help on your project if we can — email me at

If you’ve done image recognition in the past, you’ll know that the size and accuracy of your dataset are important. All of your scenes need to be annotated, too, which can mean thousands or tens of thousands of images. That amount of time and effort wasn’t scalable for our small team.

Overview

So, we invented a tool that makes creating large, annotated datasets orders of magnitude easier. We hope this can be useful for AR, autonomous navigation, and robotics in general — by generating the data needed to recognize and segment all sorts of new objects.

We’ve even open-sourced our VertuoPlus Deluxe Silver dataset.

To demonstrate its capabilities, I’ll bring you through a real example here at Greppy, where we needed to recognize our coffee machine and its buttons with an Intel RealSense D435 depth camera. More to come in the future on why we want to recognize our coffee machine, but suffice it to say we’re in need of caffeine more often than not.

In the Good-ol’ Days, We Had to Annotate By Hand!

For most datasets in the past, annotation tasks have been done by (human) hand. As you can see on the left, this isn’t particularly interesting work, and as with all things human, it’s error-prone.

It’s also nearly impossible to accurately annotate other important information like object pose, object normals, and depth.

Synthetic Data: a 10-year-old idea

One promising alternative to hand-labelling has been synthetically produced (read: computer generated) data. It’s an idea that’s been around for more than a decade (see this GitHub repo linking to many such projects).

We ran into some issues with existing projects, though, because they either required programming skill to use or didn’t output photorealistic images. We needed something that our non-programming team members could use to help efficiently generate large amounts of data to recognize new types of objects. Also, some of our objects were challenging to render photorealistically without ray tracing (see Wikipedia), a technique that the other existing projects didn’t use.

Making Synthetic Data at Scale with Greppy Metaverse

To achieve the scale in number of objects we wanted, we’ve been building the Greppy Metaverse tool. For example, we can take the great pre-made CAD models from sites like 3D Warehouse and use the web interface to make them more photorealistic. Or our artists can whip up a custom 3D model without having to worry about how to code.

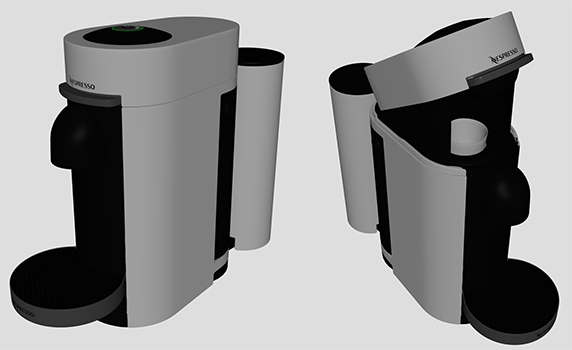

Let’s get back to coffee. With our tool, we first upload two non-photorealistic CAD models of the Nespresso VertuoPlus Deluxe Silver machine we have. We upload two because we want to recognize the machine in both of its configurations.

Once the CAD models are uploaded, we select from pre-made, photorealistic materials and apply them to each surface. One of the goals of Greppy Metaverse is to build up a repository of open-source, photorealistic materials for anyone to use (with the help of the community, ideally!). As a side note, 3D artists are typically needed to create custom materials.

To be able to recognize the different parts of the machine, we also need to annotate which parts of the machine we care about. The web interface provides the facility to do this, so folks who don’t know 3D modeling software can help with this annotation. No 3D artist or programmer needed ;-)

And then… that’s it! We automatically generate up to tens of thousands of scenes that vary in pose, number of instances of objects, camera angle, and lighting conditions. They’ll all be annotated automatically and are accurate to the pixel. Behind the scenes, the tool spins up a bunch of cloud instances with GPUs, and renders these variations across a little “renderfarm”.
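
To make the idea of "scenes that vary" a bit more concrete, here is a minimal Python sketch of how per-scene parameters could be sampled at random before rendering. This is not the actual Greppy Metaverse code: the `SceneConfig` fields, the value ranges, and the function names are all assumptions made up for illustration, and a real pipeline would hand each config to a renderer.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SceneConfig:
    """Hypothetical per-scene parameters; field names and units are assumptions."""
    num_instances: int
    object_yaws_deg: List[float]   # one rotation about the vertical axis per object
    camera_azimuth_deg: float
    camera_elevation_deg: float
    camera_distance_m: float
    light_intensity: float         # arbitrary relative scale


def sample_scene(rng: random.Random, max_instances: int = 3) -> SceneConfig:
    """Draw one random scene. All ranges below are illustrative guesses."""
    n = rng.randint(1, max_instances)
    return SceneConfig(
        num_instances=n,
        object_yaws_deg=[rng.uniform(0.0, 360.0) for _ in range(n)],
        camera_azimuth_deg=rng.uniform(0.0, 360.0),
        camera_elevation_deg=rng.uniform(10.0, 80.0),
        camera_distance_m=rng.uniform(0.5, 2.0),
        light_intensity=rng.uniform(0.2, 1.5),
    )


def camera_position(cfg: SceneConfig) -> Tuple[float, float, float]:
    """Convert the spherical camera parameters to x, y, z (z is up),
    with the camera orbiting the origin where the objects sit."""
    az = math.radians(cfg.camera_azimuth_deg)
    el = math.radians(cfg.camera_elevation_deg)
    d = cfg.camera_distance_m
    return (d * math.cos(el) * math.cos(az),
            d * math.cos(el) * math.sin(az),
            d * math.sin(el))


def generate_scenes(count: int, seed: int = 0) -> List[SceneConfig]:
    """Sample `count` scene configs; a fixed seed makes the set reproducible."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(count)]


if __name__ == "__main__":
    for cfg in generate_scenes(3, seed=42):
        print(cfg.num_instances, camera_position(cfg))
```

Seeding the generator means the same set of scene configs can be regenerated later, which is handy if a render job has to be split across several machines or rerun.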