Compressive Sensing for AI Self-Driving Cars

By Lance Eliot, the AI Trends Insider

Years ago, I did some computer consulting work for a chemical company. It’s quite a curious story and one that went into the record books as a consulting engagement. The way it began was certainly an eye-opener.

An executive at a company that I had been doing consulting for had been golfing with an executive from a chemical company, and they got to discussing computers during their round of golf. The chemical company executive said that he was looking for a good consultant who would keep things confidential and be very discreet. The exec that I knew told him that I fit that mold. The next day, I received an out-of-the-blue call from the chemical executive’s assistant, asking if I would be interested in coming out to their company for a potential consulting gig.

I jumped at the chance, as it was rare to have a prospect call me rather than my having to beat the bushes to find new clients. I dutifully showed up at the appointed date and time, wearing my best suit and tie. The lobby assistant escorted me back into the office area, and as we walked through the halls of the building, I noticed a set of double doors with a rather prominent sign that said “No Admittance Unless Authorized.” I assumed that there must be some kind of special chemicals or maybe company secrets locked behind those doors.

When I entered the executive’s office, he warmly greeted me. We sat and made some initial small talk. I could see that he wanted to tell me something but was hesitating to do so. I decided to mention that my work is always of a confidential nature and that I don’t talk about my clients (note: I realize that my telling the story now might seem untoward, but this client activity occurred many years ago and I am also purposely leaving out any specific details).

He then confided an incredible tale to me. Here’s the scoop. They were a tiny division of a very large chemical company. Headquarters had decided to have this division do some analyses of certain chemicals that the company was using. The division would place the chemicals into vials and run tests on the liquid in the vials. From these tests, they would end up with ten numbers for each vial. They were to do some statistical analysis on the numbers and provide a report to headquarters about their findings.

The division was excited to receive this new work from HQ. They had hired a software developer to create an application for them that would allow by-hand entry of the vial numbers and would calculate the needed statistics, along with producing a report that could be sent back to headquarters. The developer was one of those one-man-band kind of programmers and had whipped out the code quickly and cheaply. So far, the tale seems rather benign.

Here’s the kicker. It turns out that the software wasn’t working correctly. First of all, after the ten numbers for each vial were entered, the program somehow garbled them, and the reports showed different numbers than the ones that had been entered. They also discovered that the statistics calculations were incorrect. Even the way the ten numbers had to be entered was goofy and made it easy for the data entry people to enter the wrong values. There was no internal error checking in the code. The ten numbers were always supposed to be in the range of 1 to 20, but a user could enter any number, such as 100 or -100, and the code would accept it. The data entry people were pretty sloppy and also under time pressure, so a ton of bad data was getting into what was already a bad program.

You’ve probably heard the famous computer industry expression: Garbage In, Garbage Out (GIGO). It means that if you let someone enter lousy data, you are likely going to see lousy data coming out of the system. Many programs and programmers don’t seem to care about GIGO, and their attitude is often “if the user is stupid enough to enter bad data, they get what they deserve!” That said, there are also programmers who do care about GIGO, but they are sometimes told not to worry about it, or are not given enough time and effort to write code that stops or curtails it.

In contemporary times, the mantra has become GDGI, which stands for Garbage Doesn’t Get In. This means that the developers of a program should design, build, and test it so that it won’t allow bad data to get into the program. The sooner that you can catch bad data at the entry point, the better off you are. The further that bad data makes its way through a program, the harder it is to detect, the harder it is to stop, and the harder it is to correct (as a rule of thumb).
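To make GDGI a bit more concrete, here is a minimal Python sketch of an entry-point check for the vial data in this story. It assumes only what the story states (ten numbers per vial, each in the range 1 to 20). The function name `validate_vial_readings`, treating the range as inclusive, and accepting decimal values are our own illustrative choices, not details of the original application.

```python
# Hypothetical entry-point validator for one vial's readings (GDGI style).
# From the story: exactly ten numbers, each in the range 1 to 20.
# Assumptions: the range is inclusive and decimal readings are allowed.
EXPECTED_COUNT = 10
MIN_VALUE, MAX_VALUE = 1, 20


def validate_vial_readings(raw_fields: list[str]) -> list[float]:
    """Parse and check one vial's readings; raise ValueError on bad input."""
    if len(raw_fields) != EXPECTED_COUNT:
        raise ValueError(f"expected {EXPECTED_COUNT} readings, got {len(raw_fields)}")
    readings = []
    for position, field in enumerate(raw_fields, start=1):
        try:
            value = float(field)
        except ValueError:
            raise ValueError(f"reading {position} is not a number: {field!r}") from None
        # Reject out-of-range values (this also rejects NaN and infinity).
        if not MIN_VALUE <= value <= MAX_VALUE:
            raise ValueError(f"reading {position} = {value} is outside {MIN_VALUE}..{MAX_VALUE}")
        readings.append(value)
    return readings


good = validate_vial_readings(["3", "7", "12", "20", "1", "5", "9", "14", "18", "2"])
try:
    validate_vial_readings(["3", "7", "100", "20", "1", "5", "9", "14", "18", "2"])
except ValueError as err:
    print(err)  # reading 3 = 100.0 is outside 1..20
```

The point of the design is that a bad value like 100 or -100 is stopped at the door, before it can reach the statistics code.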

Let’s get back to the story. The division executive had discovered that the program was cruddy and the data was bad, and did so before they had sent any of the reports to headquarters. The programmer, though, was nowhere to be found. He was not responding to their requests to come back and rewrite the application. After trying to reach him over and over, they found a different programmer and asked to have the code rewritten. This programmer said that the code was inscrutable and there wasn’t going to be any viable means of fixing it.

Meanwhile, the division was under the gun to provide the reports. They did what “anyone” might do: they decided to calculate the statistics by hand. Yes, they abandoned the application altogether. Instead, they bought a bunch of calculators and hired temporary labor to start crunching numbers. They then had the results written up in what were essentially Word documents and sent those over to headquarters. In doing so, they implied that they had a program that was doing the calculations and keeping track of the data. They hoped that headquarters wouldn’t figure out the mess that the division had really gotten itself into, and that this would buy them time to figure out what to do.

The reason for the double doors with the big no-admittance sign was that each day, hordes of temps came into that room to do all of these by-hand calculations. They were trying to keep it a secret even from the rest of the division. The executive told me that he was not able to sleep at night because he figured that the ruse would eventually be uncovered by others. He had gotten himself into quite a mess.

You can probably now see why he was looking for a computer consultant that had discretion.

The main reason I tell the story here is that I wanted to discuss GIGO and GDGI. Before I do so, I’ll go ahead and provide you with the happy ending to the story. I was able to rewrite the application, we got it going, and eventually the data was being stored online, the calculations were being done correctly, and we had lots of error checking built into the code. Headquarters remained pleased, and indeed even dramatically increased the volume of chemical tests coming to the division. Had we not gotten the software fixed up, the division would have had to hire most of the population of the United States just to do the by-hand statistical calculations.

Anyway, one lesson here is that sometimes you want to allow data to flow freely into an application and deal with it once it’s already inside. In other cases, you want to stop the data before it gets into the application per se, and decide what to do with it before it gets further along in the process.

Allow me to switch topics for the moment and discuss compressive sensing.

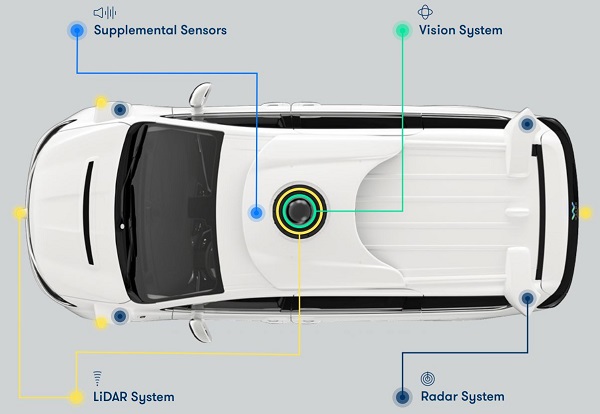

Compressive sensing is a technique and approach to collecting data from sensors. If you look at all of the sensors on an AI self-driving car, which might have a dozen cameras, a dozen sonars, a LIDAR, and so on, you begin to realize that those sensors are going to generate a ton of data. The deluge of data coming into the AI self-driving car system is going to require hefty computational processing and a huge amount of storage. The more computational processing you need, the more processors you need in the AI self-driving car, and likewise the more computer storage you’ll need. All of this adds cost, weight, and complexity to the AI self-driving car, along with more chances for breakdowns and other problems.

In today’s world, we assume that all of the data coming from those sensors is precious and required. Suppose that a camera has taken a picture and it consists of one million pixels of data. All one million of those pixels are collected by the sensor and pushed forward into the system for use and analysis. The computational processing needs to examine one million pixels. The internal memory needs to store the one million pixels.

We often deal with torrents of data by using various compression techniques and programs. Typically, the compression algorithm does encoding to perform the compression, and then does decoding when the compressed data needs to be brought back to its uncompressed state. A lossless compression is one that gives you back exactly what you started with. A lossy compression is one that does not guarantee you can get back your original, since during the compression it might discard information that you would need to reconstruct the original exactly.
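As a quick illustration of the lossless versus lossy distinction, the Python sketch below round-trips some bytes through the standard library’s `zlib` module (lossless) and contrasts that with a toy quantizer (lossy). The `quantize` helper and its bucket size of 16 are hypothetical, chosen only to show how information gets thrown away.

```python
import zlib

# Lossless: zlib (DEFLATE) gives back exactly the bytes we started with.
original = bytes(range(256)) * 40  # 10,240 bytes of repetitive sample data
packed = zlib.compress(original)
restored = zlib.decompress(packed)
print(len(original), len(packed), restored == original)


# Lossy (illustrative only): coarse quantization keeps one value per bucket of 16,
# so distinct inputs collapse together and the originals cannot be recovered.
def quantize(values: list[int], step: int = 16) -> list[int]:
    return [(v // step) * step for v in values]


samples = [3, 10, 17, 250]
print(quantize(samples))  # [0, 0, 16, 240]
```

Note that 3 and 10 both become 0 after quantization, so no decoder, however smart, can tell them apart afterward.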

Compression involves the usual trade-off of time and quality. A faster compression algorithm tends to reduce the quality, or losslessness, of the result. There are also trade-offs about whether you want most of the time to be consumed during encoding or during decoding. If data is coming at you at a really fast pace, you often cannot afford to do much encoding, and so you use a “dumb encoder” that won’t take long to run. You tend to pay for this at decoding time: the decoding, often called “smart decoding,” then takes a while to run, but that might be OK, because you were pressed for time when the data first arrived and may have a lot more time available for the decoding.

You can think of compressive sensing as a means of using a dumb encoder and then relying on a smart decoder. Part of the reason that the encoder in compressive sensing can go quickly is that it is willing to discard data. In fact, compressive sensing says that you often don’t even need to collect much of that data at all. In the view of its proponents, sensor designers are not thinking straight when they assume that every bit of data is precious. This is not always the case.

Suppose that instead of collecting one million pixels for a picture, we either told the sensor to collect only 200,000 pixels (chosen at random), or we let the camera provide the one million pixels and then selected only 200,000 of them at random. If the image itself has certain handy properties, especially sparsity and also incoherence (roughly, that the way we sample is unrelated to the structure that makes the image sparse), we have a really good chance of being able to reconstruct what the full million pixels must have been, even though we tossed away the other 800,000.
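Here is a minimal NumPy sketch of the second option, selecting 200,000 of a frame’s 1,000,000 pixels at random. The 1000 × 1000 grayscale frame is an assumption, and it is filled with random values purely as a stand-in. Actual reconstruction would depend on the image having sparsity, which random noise like this does not have.

```python
import numpy as np

rng = np.random.default_rng(seed=0)  # fixed seed only so the example is repeatable

height, width = 1000, 1000  # a 1,000,000-pixel grayscale frame (assumed size)
frame = rng.integers(0, 256, size=(height, width), dtype=np.uint8)  # stand-in camera data

keep = 200_000  # keep 20% of the pixels, chosen uniformly at random without repeats
flat_indices = rng.choice(frame.size, size=keep, replace=False)
sampled_values = frame.ravel()[flat_indices]  # the only pixel data passed downstream

# A decoder later needs both the sampled values and where they came from.
rows, cols = np.unravel_index(flat_indices, frame.shape)
print(sampled_values.shape, f"{keep / frame.size:.0%} of pixels retained")
```

This is the cheap, “dumb” half of the process: picking indices and copying values, with no analysis of the image at all.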

Compressive sensing works best when the original data exhibits sparsity, meaning that it can be described by relatively few significant values in some suitable representation; for images, this often shows up as large regions of the same or similar nearby pixel values. If the data is noisy and scattered around in a wild manner (say, a screen full of TV static), compressive sensing is not so handy. Randomness in the original data is bad in this case. We want there to be discernible patterns.
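One way to see sparsity numerically is to count how often neighboring pixels change value. In the hypothetical NumPy sketch below, a row with one clean edge has a single nonzero neighbor difference, while a row of random static changes at nearly every pixel. Using neighbor differences as the sparse representation is our choice for illustration (it is the idea behind total-variation methods); other representations, such as wavelets, are also common.

```python
import numpy as np


def gradient_sparsity(row: np.ndarray, tol: float = 1e-9) -> int:
    """Count neighbor-to-neighbor changes; few changes means a sparse gradient."""
    return int(np.count_nonzero(np.abs(np.diff(row)) > tol))


rng = np.random.default_rng(seed=1)

# A "simple" row: 400 pixels of one shade, then 600 of another (one clean edge).
structured = np.concatenate([np.full(400, 200.0), np.full(600, 40.0)])
# A "static" row: 1,000 independent random pixel values.
static = rng.uniform(0.0, 255.0, size=1000)

print(gradient_sparsity(structured))  # 1
print(gradient_sparsity(static))      # close to all 999 possible changes
```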

Imagine a painting of a girl wearing a red dress with a blue sky behind her. If we were to outline the girl, we’d see lots of red pixels within the bounds of that outline. Outside the outline, we’d see lots of blue pixels. If we were to randomly sample the pixels, we would mainly end up with red pixels and blue pixels. Wherever we saw red pixels in our sample, the odds are that there are more red pixels nearby. Wherever we saw blue pixels, the odds are that there are more blue pixels nearby. In that sense, even if we only had, say, 20% of the original data, we could pretty well predict whether each of the missing 80% is going to be a red pixel or a blue pixel. Kind of a paint-by-numbers notion.
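The paint-by-numbers intuition can be sketched in a few lines of Python: keep a random 20% of a row of red and blue pixels, then guess each missing pixel from its nearest sampled neighbor. To be clear, this is simple nearest-neighbor interpolation, not a compressive sensing algorithm; the row layout, the seed, and the helper name `fill_from_samples` are all hypothetical.

```python
import random


def fill_from_samples(length: int, samples: dict[int, str]) -> list[str]:
    """Guess each position's color from the nearest sampled position (ties go left)."""
    known = sorted(samples)
    return [samples[min(known, key=lambda k: (abs(k - i), k))] for i in range(length)]


# One row of the painting: blue sky, the red dress, then blue sky again (assumed layout).
row = ["blue"] * 30 + ["red"] * 40 + ["blue"] * 30

rng = random.Random(42)  # fixed seed only so the example is repeatable
sample_positions = rng.sample(range(len(row)), k=len(row) // 5)  # keep 20%
samples = {i: row[i] for i in sample_positions}

guess = fill_from_samples(len(row), samples)
errors = sum(g != r for g, r in zip(guess, row))
print(f"{errors} of {len(row)} pixels guessed wrong")
```

Any wrong guesses cluster at the red/blue boundaries, where the nearest sample may fall on the other side of the edge; everywhere else the guess matches the painting.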

So, via compressive sensing, we have a good chance of starting with just a small sample of data and being able to reconstruct what the entire original set must have been. This technique draws on a combination of mathematics, computer science, information theory, signal processing, and so on. It works on the basis that most of the data being collected is redundant and we don’t really need it all. It seems wasteful to go to the trouble of collecting redundant data when we could instead collect just a fraction of it. As compressive sensing proponents say, far too many measurements are often being taken, and what we should be doing is simplifying the data acquisition and then following it up with some robust numerical optimization.

With compressive sensing, you take random measurements rather than measuring everything. You then reconstruct the signal, typically by solving an optimization problem such as minimizing the ℓ1 norm of its coefficients (which can be cast as a linear program), or by using some other nonlinear minimization. In a sense, you are doing signal acquisition and compression simultaneously. The sensing is considered ultra-efficient and non-adaptive, and recovery or reconstruction is done by tractable optimization. If you are used to the classical Nyquist/Shannon sampling approach, which calls for sampling at least twice as fast as the highest frequency in the signal, you’ll find compressive sensing quite interesting and impressive. Its early roots were in trying to speed up medical MRI scans, reducing how long a patient has to lie still during an MRI.
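The sketch below shows the dumb-encoder/smart-decoder split on a small synthetic signal, assuming NumPy is available. The encoder is a single multiplication by a random Gaussian matrix. For the decoder we swap in Orthogonal Matching Pursuit (OMP), a greedy alternative to the linear-programming approach, because it needs nothing beyond NumPy. The sizes (256 samples, 96 measurements, 5 nonzero values) are arbitrary assumptions, and the measurements here are random linear combinations rather than individual pixels.

```python
import numpy as np


def omp(Phi: np.ndarray, y: np.ndarray, sparsity: int) -> np.ndarray:
    """Orthogonal Matching Pursuit: greedily pick columns of Phi that explain y."""
    support: list[int] = []
    residual = y.copy()
    coeffs = np.zeros(0)
    for _ in range(sparsity):
        # Pick the column most correlated with what is still unexplained.
        correlations = np.abs(Phi.T @ residual)
        if support:
            correlations[support] = 0.0  # never pick the same column twice
        support.append(int(np.argmax(correlations)))
        # Re-fit all chosen coefficients by least squares, then update the residual.
        coeffs, *_ = np.linalg.lstsq(Phi[:, support], y, rcond=None)
        residual = y - Phi[:, support] @ coeffs
    x_hat = np.zeros(Phi.shape[1])
    x_hat[support] = coeffs
    return x_hat


rng = np.random.default_rng(seed=7)
n, m, k = 256, 96, 5  # signal length, measurements, nonzero entries (assumed)

# A k-sparse signal: 5 of 256 entries are nonzero, magnitudes between 1 and 2.
x = np.zeros(n)
positions = rng.choice(n, size=k, replace=False)
x[positions] = rng.choice([-1.0, 1.0], size=k) * rng.uniform(1.0, 2.0, size=k)

# "Dumb" encoder: m random linear measurements via one matrix-vector product.
Phi = rng.normal(size=(m, n)) / np.sqrt(m)
y = Phi @ x

# "Smart" decoder: iteratively rebuild 256 values from 96 measurements.
x_hat = omp(Phi, y, sparsity=k)
print("max reconstruction error:", np.max(np.abs(x_hat - x)))
```

With far fewer measurements than samples, OMP typically recovers a signal like this essentially exactly; recovery becomes unreliable as the number of nonzero values grows relative to the number of measurements.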

What does this have to do with AI self-driving cars?

At the Cybernetic Self-Driving Car Institute, we are using compressive sensing to deal with the deluge of data coming from the numerous sensors on AI self-driving cars.

Right now, most of the automakers and tech companies developing AI self-driving cars are handling the torrent of data in the usual old-fashioned manner. They don’t really care how much processing or storage is needed, since they are developing experimental cars and can put in whatever souped-up processors and RAM they want. The sky’s the limit. No barrier on costs.

Ultimately, we all will want AI self-driving cars to become available to the masses, and so the cost of the AI self-driving car must come down. One way to bring the cost down is to be more mindful of how fast the processors need to be and how many you need, and likewise how much memory you need. Currently, most are just trying to get the stuff to work. We’re looking ahead to how this stuff can be optimized and minimized, allowing costs and complexity to be reduced.

Some of the sensor makers are also beginning to see the value of compressive sensing. But, it is a tough choice for them. They don’t want to provide a camera that only gives you 200,000 pixels per picture when someone else’s camera is giving 1,000,000. It would seem to make their camera inferior. Also, admittedly, the nature of the images being captured can dramatically impact whether the compressive sensing is viable and applicable.

Even though I have been mentioning images and a camera, keep in mind that compressive sensing can apply to any of the sensors on the AI self-driving car. The data could be radar, it could be LIDAR, it could be sonar, it really doesn’t matter what kind of data is coming in. It’s more about the characteristics of the data as to whether it is amenable to using compressive sensing.

Another reason why compressive sensing can be important to AI self-driving cars is that it can help speed up the analysis of the torrent of data. If the AI of the self-driving car is not informed quickly enough by the sensors and sensor fusion about the state of the car and its surroundings, the AI might not be able to make needed decisions on a timely basis. Suppose that the camera on the self-driving car has captured an image of a dog in front of the car, but it takes a minute for the image to be analyzed, and meanwhile the AI is allowing the self-driving car to speed forward. By the time the sensor fusion has occurred, it might be too late for the AI to prevent the car from hitting the dog.
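The dog example comes down to simple arithmetic: distance traveled equals speed times latency. The Python sketch below assumes a speed of 35 mph, which is our own illustrative figure, and compares the one-minute delay from the example with hypothetical faster pipelines.

```python
MPH_TO_METERS_PER_SECOND = 0.44704  # exact: 1 mile = 1609.344 m, 1 hour = 3600 s


def distance_during_latency(speed_mph: float, latency_s: float) -> float:
    """Meters the car covers while the AI is still waiting on sensor analysis."""
    return speed_mph * MPH_TO_METERS_PER_SECOND * latency_s


# The one-minute case from the example, then (hypothetical) faster pipelines.
for latency in (60.0, 1.0, 0.1):
    meters = distance_during_latency(35.0, latency)
    print(f"{latency:>5} s of latency at 35 mph -> {meters:7.1f} m traveled")
```

At 35 mph, a one-minute delay works out to roughly 939 meters, and even a one-second delay still covers about 15.6 meters, which is why shaving time off sensor processing matters.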

Via compressive sensing, if we either don’t need to receive all of the data or are willing to discard a big chunk of it, the sensors can report their data faster and sensor fusion can happen sooner. This will not always be the case, and you need to realize that this is not some kind of magical silver bullet.

I mentioned earlier that the adage of Garbage In, Garbage Out has been around for a while. In one sense, the redundant data that comes to a sensor can be considered “garbage” in that we don’t need it. I’m not saying that it is bad data (which is what the word “Garbage” implies in the GIGO context). I am merely saying that it is something we can toss away without fear of losing what we need. That’s why Garbage Doesn’t Get In (GDGI) is more applicable here. Compressive sensing says that there’s no need to bring in the data that we would otherwise consider redundant and could toss out. I urge you to take a look at compressive sensing, as it is still a somewhat “new” movement and has room to grow. We firmly believe that it can be very valuable for the advent of widespread and affordable AI self-driving cars. That’s a goal we can all get behind!

This content was originally posted on AI Trends.