Big Data Collaboration Frameworks: Flume

I. Overview

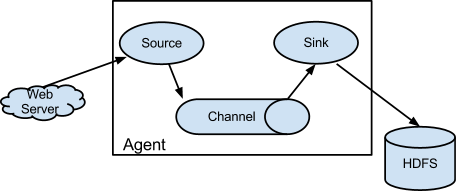

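Flume, originally developed by Cloudera and now an Apache project, is a highly available, highly reliable, distributed system for collecting, aggregating, and transporting large volumes of log data. Flume lets you plug customized data senders into a logging system to collect data. It can also apply simple processing to that data and write it to a variety of (customizable) data receivers.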

II. Installation

1. Extract the archive:

tar -zxvf flume-ng-1.6.0-cdh5.14.2.tar.gz -C /opt/cdh5.14.2/

2. List the conf directory:

[[email protected] conf]# ll

total 16

-rw-r--r-- 1 1106 4001 1661 Mar 28 04:47 flume-conf.properties.template

-rw-r--r-- 1 1106 4001 1455 Mar 28 04:47 flume-env.ps1.template

-rw-r--r-- 1 1106 4001 1565 Mar 28 04:47 flume-env.sh.template

-rw-r--r-- 1 1106 4001 3107 Mar 28 04:47 log4j.properties

3. Rename flume-env.sh.template to flume-env.sh
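The post does not show the command for the rename step. A minimal sketch follows, assuming the conf directory is the one used by the cp commands later in this post. The helper name `create_flume_env` is hypothetical, and it copies instead of renaming so the template stays available for reference.

```shell
# Hypothetical helper: create flume-env.sh from the shipped template.
# Copying (instead of mv) keeps the original template around for reference.
create_flume_env() {
    conf_dir="$1"
    cp "$conf_dir/flume-env.sh.template" "$conf_dir/flume-env.sh"
}

# Example call; the path is an assumption based on the cp commands in this post:
# create_flume_env /opt/cdh5.14.2/flume-1.6.0/conf
```

A plain `mv flume-env.sh.template flume-env.sh` in the conf directory gives the same result if you do not need to keep the template.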

4. Configure flume-env.sh

Check the JAVA_HOME path:

[[email protected] conf]# echo $JAVA_HOME

/opt/java/jdk1.7.0_80

Edit flume-env.sh:

# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# If this file is placed at FLUME_CONF_DIR/flume-env.sh, it will be sourced
# during Flume startup.

# Enviroment variables can be set here.

export JAVA_HOME=/opt/java/jdk1.7.0_80

# Give Flume more memory and pre-allocate, enable remote monitoring via JMX
# export JAVA_OPTS="-Xms100m -Xmx2000m -Dcom.sun.management.jmxremote"

# Let Flume write raw event data and configuration information to its log files for debugging
# purposes. Enabling these flags is not recommended in production,
# as it may result in logging sensitive user information or encryption secrets.
# export JAVA_OPTS="$JAVA_OPTS -Dorg.apache.flume.log.rawdata=true -Dorg.apache.flume.log.printconfig=true "

# Note that the Flume conf directory is always included in the classpath.
#FLUME_CLASSPATH=""

5. Copy the following four JARs from Hadoop's lib directories into Flume's lib directory:

commons-configuration-1.6.jar, hadoop-auth-2.6.0-cdh5.14.2.jar, hadoop-common-2.6.0-cdh5.14.2.jar, hadoop-hdfs-2.6.0-cdh5.14.2.jar

[[email protected] hadoop-2.6.0]# cp -r share/hadoop/tools/lib/commons-configuration-1.6.jar /opt/cdh5.14.2/flume-1.6.0/lib/

[[email protected] hadoop-2.6.0]# cp -r share/hadoop/common/lib/hadoop-auth-2.6.0-cdh5.14.2.jar /opt/cdh5.14.2/flume-1.6.0/lib/

[[email protected] hadoop-2.6.0]# cp -r share/hadoop/common/hadoop-common-2.6.0-cdh5.14.2.jar /opt/cdh5.14.2/flume-1.6.0/lib/

[[email protected] hadoop-2.6.0]# cp -r share/hadoop/hdfs/hadoop-hdfs-2.6.0-cdh5.14.2.jar /opt/cdh5.14.2/flume-1.6.0/lib/

III. Basic Usage

1. Check the current Flume version:

[[email protected] flume-1.6.0]# bin/flume-ng version

Flume 1.6.0-cdh5.14.2

Source code repository: https://git-wip-us.apache.org/repos/asf/flume.git

Revision: 50436774fa1c7eaf0bd9c89ac6ee845695fbb687

Compiled by jenkins on Tue Mar 27 13:55:10 PDT 2018

From source with checksum 30217fe2b34097676ff5eabb51f4a11d

2. View the help text:

[[email protected] flume-1.6.0]# bin/flume-ng help

Usage: bin/flume-ng <command> [options]...

commands:

help display this help text

agent run a Flume agent

avro-client run an avro Flume client

version show Flume version info

global options:

--conf,-c <conf> use configs in <conf> directory

--classpath,-C <cp> append to the classpath

--dryrun,-d do not actually start Flume, just print the command

--plugins-path <dirs> colon-separated list of plugins.d directories. See the

plugins.d section in the user guide for more details.

Default: $FLUME_HOME/plugins.d

-Dproperty=value sets a Java system property value

-Xproperty=value sets a Java -X option

agent options:

--name,-n <name> the name of this agent (required)

--conf-file,-f <file> specify a config file (required if -z missing)

--zkConnString,-z <str> specify the ZooKeeper connection to use (required if -f missing)

--zkBasePath,-p <path> specify the base path in ZooKeeper for agent configs

--no-reload-conf do not reload config file if changed

--help,-h display help text

avro-client options:

--rpcProps,-P <file> RPC client properties file with server connection params

--host,-H <host> hostname to which events will be sent

--port,-p <port> port of the avro source

--dirname <dir> directory to stream to avro source

--filename,-F <file> text file to stream to avro source (default: std input)

--headerFile,-R <file> File containing event headers as key/value pairs on each new line

--help,-h display help text

Either --rpcProps or both --host and --port must be specified.

Note that if <conf> directory is specified, then it is always included first

in the classpath.

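IV. Flume Agent Example: Saving Hive Logs to HDFS

1. In Flume's conf directory, create a file named catchhivelogs.conf. Its format can follow flume-conf.properties.template in the same directory.

2. Write catchhivelogs.conf. The relevant sections of the Flume User Guide are: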

http://flume.apache.org/FlumeUserGuide.html#exec-source

http://flume.apache.org/FlumeUserGuide.html#memory-channel

http://flume.apache.org/FlumeUserGuide.html#hdfs-sink

# The configuration file needs to define the sources,

# the channels and the sinks.

# Sources, channels and sinks are defined per agent,

# in this case called 'a1'

#define agent

a1.sources = r1

a1.channels = c1

a1.sinks = k1

#define sources

a1.sources.r1.type = exec

a1.sources.r1.command = tail -f /opt/cdh5.14.2/hive-1.1.0/logs/hive.log

a1.sources.r1.shell = /bin/bash -c

#define channels

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

#define sinks

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = hdfs://master.cdh.com:8020/user/flume/hive-logs/

a1.sinks.k1.hdfs.fileType = DataStream

a1.sinks.k1.hdfs.writeFormat = Text

a1.sinks.k1.hdfs.batchSize = 10

#bind the sources and sinks to the channels

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
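The last two lines of the config are what connect the pieces: the source writes into channel c1 and the sink reads from it. A channel name that is misspelled in one of these places is an easy mistake to make, so it can help to print the wiring lines side by side. The sketch below assumes a hypothetical helper name, `show_channel_wiring`, and relies only on grep; it does not validate anything beyond showing the lines.

```shell
# Hypothetical helper: print the channels an agent declares together with
# the channels its sources and sinks refer to, so mismatches are easy to spot.
show_channel_wiring() {
    conf_file="$1"
    agent="$2"
    grep -E "^${agent}\.(channels|sources\.[^.]+\.channels|sinks\.[^.]+\.channel)[[:space:]]*=" "$conf_file"
}

# Example call, run from the Flume home directory:
# show_channel_wiring conf/catchhivelogs.conf a1
```

For the config above, every printed line should name the same channel, c1.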

3. Run:

bin/flume-ng agent -c conf -n a1 -f conf/catchhivelogs.conf -Dflume.root.logger=DEBUG,console

This fails with an error:

2018-07-31 17:43:21,039 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:447)] process failed

java.lang.NoClassDefFoundError: org/apache/htrace/core/Tracer$Builder

at org.apache.hadoop.fs.FsTracer.get(FsTracer.java:42)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2803)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:98)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2853)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2835)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:387)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:186)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:371)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296)

at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:260)

at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:252)

at org.apache.flume.sink.hdfs.BucketWriter$9$1.run(BucketWriter.java:701)

at org.apache.flume.auth.SimpleAuthenticator.execute(SimpleAuthenticator.java:50)

at org.apache.flume.sink.hdfs.BucketWriter$9.call(BucketWriter.java:698)

at java.util.concurrent.FutureTask.run(FutureTask.java:262)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.ClassNotFoundException: org.apache.htrace.core.Tracer$Builder

at java.net.URLClassLoader$1.run(URLClassLoader.java:366)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

... 18 more

Solution:

Copy htrace-core4-4.0.1-incubating.jar from hadoop-2.6.0/share/hadoop/common/lib into Flume's lib directory.
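As a sketch, the copy can be written in the same way as the earlier jar copies. The helper name `copy_htrace_jar` is hypothetical, and the Hadoop home in the example call is an assumption: the post only shows the directory name hadoop-2.6.0, not its parent.

```shell
# Hypothetical helper: copy the htrace jar from a Hadoop install into Flume's lib.
copy_htrace_jar() {
    hadoop_home="$1"
    flume_home="$2"
    jar="$hadoop_home/share/hadoop/common/lib/htrace-core4-4.0.1-incubating.jar"
    if [ ! -f "$jar" ]; then
        echo "htrace jar not found: $jar" >&2
        return 1
    fi
    cp "$jar" "$flume_home/lib/"
}

# Example call; the Hadoop path is assumed, the Flume path is from this post:
# copy_htrace_jar /opt/cdh5.14.2/hadoop-2.6.0 /opt/cdh5.14.2/flume-1.6.0
```

Restart the agent afterwards so the new jar is picked up on the classpath.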

4. Result:

Check HDFS:
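The original output is not reproduced here. One way to check is to list the sink's target directory with the HDFS shell; the sketch below assumes the `hdfs` command is on the PATH and uses a hypothetical helper name, `list_flume_output`.

```shell
# Hypothetical helper: list the files the HDFS sink has written so far.
list_flume_output() {
    hdfs dfs -ls "$1"
}

# Example call, using the directory from hdfs.path in catchhivelogs.conf:
# list_flume_output /user/flume/hive-logs/
```

Since the config does not set hdfs.filePrefix, the files should carry the sink's default prefix, FlumeData.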

5. Optimization:

In the configuration from step 2:

a1.sinks.k1.hdfs.path = hdfs://master.cdh.com:8020/user/flume/hive-logs/

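With Hadoop in HA mode, hard-coding a single NameNode address like this is a problem: after a failover, master.cdh.com may no longer be the active NameNode.

Solution:

1. Copy hdfs-site.xml and core-site.xml into the flume-1.6.0/conf directory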

[[email protected] hadoop]# cp -r hdfs-site.xml /opt/cdh5.14.2/flume-1.6.0/conf/

[[email protected] hadoop]# cp -r core-site.xml /opt/cdh5.14.2/flume-1.6.0/conf/

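2. Change the sink path to use the HA nameservice name configured in hdfs-site.xml (ns1 here) instead of a host and port: a1.sinks.k1.hdfs.path = hdfs://ns1/user/flume/hive-logs/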
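To confirm that the name in the sink path matches the cluster, the two values can be printed next to each other. This is a sketch under assumptions: `check_sink_nameservice` is a hypothetical helper, the `hdfs` command must be on the PATH, and the nameservice is assumed to be published under the standard dfs.nameservices key.

```shell
# Hypothetical helper: show the authority used in the sink's hdfs.path
# next to the nameservice(s) the Hadoop client configuration defines.
check_sink_nameservice() {
    conf_file="$1"
    # Authority part of the path, e.g. "ns1" in hdfs://ns1/user/flume/hive-logs/
    authority=$(sed -n 's|^[^#]*hdfs\.path[[:space:]]*=[[:space:]]*hdfs://\([^/]*\)/.*|\1|p' "$conf_file" | head -n 1)
    nameservices=$(hdfs getconf -confKey dfs.nameservices)
    echo "sink path authority: $authority"
    echo "dfs.nameservices: $nameservices"
}

# Example call, run from the Flume home directory:
# check_sink_nameservice conf/catchhivelogs.conf
```

If the two values differ, the sink is either still pointing at a single NameNode or using a name the client configuration does not know.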

V. Flume in Enterprise Big Data Architectures: