A Long Way From Tay: Microsoft Advancing in Conversational AI

Microsoft debuted a bevy of new services and product updates on Wednesday during its Artificial Intelligence (AI) in Business event, including an AI model builder for Power BI, its no-code business analytics solution; a Unity plugin for AirSim, its open source aerial informatics and robotics toolkit; and a public preview of its PlayFab multiplayer server platform. But two announcements stood out from the rest and underline the Seattle-area company’s impressive progress in the field of conversational AI.

The first was the acquisition of XOXCO, creator of the Botkit framework that enables enterprises to create conversational bots for chat apps like Cisco Spark, Slack, and Microsoft Teams. The second was an open source system for creating virtual assistants — built on the Microsoft Bot Framework — that can answer basic questions, access calendars, and highlight points of interest.

The wealth of bot-building tools now available to developers within Microsoft’s ecosystem (buoyed by the company’s acquisitions earlier this year of conversational AI startup Semantic Machines, AI development kit creator Bonsai, and no-code deep learning service Lobe) has no doubt contributed to the growth of Azure, its cloud computing platform. Azure topped Amazon Web Services in Q1 2018 with $6 billion in revenue, and to date 1.2 million developers have signed up to use Azure Cognitive Services, a collection of premade AI models.

That momentum would’ve been inconceivable just two years ago.

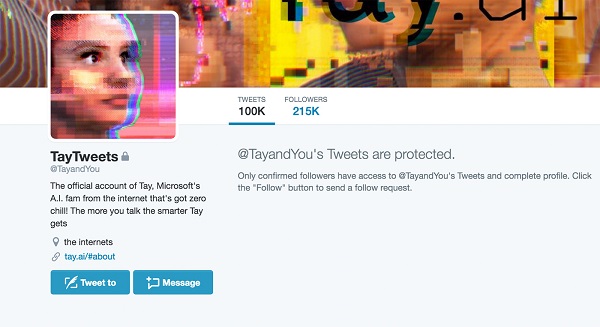

March 2016, you might recall, marked the launch of Tay, an AI chatbot Microsoft researchers set loose on Twitter as part of an experiment in “conversational understanding.” The more users conversed with Tay, the smarter it would get, the idea went, and the more natural its interactions would become.

It took less than 16 hours for Tay to begin spouting misogynistic and racist remarks. Many of these were prompted by a “repeat after me” function that enabled Twitter users to effectively put words in the bot’s mouth. But at least a few of the hateful responses were novel: Tay invoked Adolf Hitler in response to a question about Ricky Gervais; transphobically referred to Caitlyn Jenner as “[not] a real woman”; and equated feminism with “cancer” and “[a] cult.”

Microsoft moderators deleted the bulk of Tay’s offensive tweets, deactivated its account, and promised that “adjustments” to Tay were forthcoming. But the bot fared no better in a second test ahead of Microsoft’s 2016 Build Conference, spamming its roughly 213,000 followers with nonsensical messages and tweeting about drug use.

It was a major embarrassment for the company’s AI and Research division, forever memorialized on MIT Technology Review’s list of 2016’s biggest tech failures. But it also marked a turning point in Microsoft’s approach to conversational AI. It would proceed with caution in the future — and perhaps more importantly, with greater transparency.

“Looking ahead, we face some difficult — and yet exciting — research challenges in AI design,” Peter Lee, corporate vice president for Microsoft Healthcare, wrote in a blog post in 2016. “We will do everything possible to limit technical exploits, but also know we cannot fully predict all possible human interactive misuses without learning from mistakes. To do AI right, one needs to iterate with many people, and often in public forums.”

A new chatbot named Zo followed Tay. This one is hardcoded to avoid engaging in its predecessor’s worst tendencies and can understand the general sentiment of a range of ideas. (It responds to the word “mother” warmly and answers with specifics to phrases like “I love pizza.”) And Microsoft devoted increasing resources to XiaoIce, its wittier, cheerful chatbot for Asian markets with full-duplex capabilities, which now has over 500 million social media followers.

Coinciding with Microsoft’s in-house chatbot efforts was a push toward platforms emphasizing human language — what CEO Satya Nadella called Conversation as a Platform (CaaP). “Bots are like new applications, and digital assistants are meta apps, or like the new browsers,” he said at Build 2016. “And intelligence is infused into all of your interactions. That’s the rich platform that we have.”

2016 saw the launch of the Azure Bot Service, which gives developers tools to build, test, and deploy bots made for Facebook Messenger, Skype, Slack, Microsoft Teams, and other chat apps. It exited beta in 2017 and has been used to deploy 33,000 active bots across the financial services, health care, and retail sectors.

Ever since Tay’s disastrous rollout, ethical bot building has remained top of mind for Microsoft. This week, the company published guidelines for conversational AI that suggest developers assess whether a bot’s intended purpose can be performed responsibly, establish limitations associated with its use, and design bots to respect the full range of human abilities.

Read the source article in VentureBeat.