SVM Beginner Example: Complete Runnable Python Code (with Simple Visualization)

阿新 • Published: 2019-01-02

Runtime environment: Anaconda

Python version: 2.7.13

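This post describes the dataset and how the data is used, and includes visualized results, so you can get started quickly. The code is as follows: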

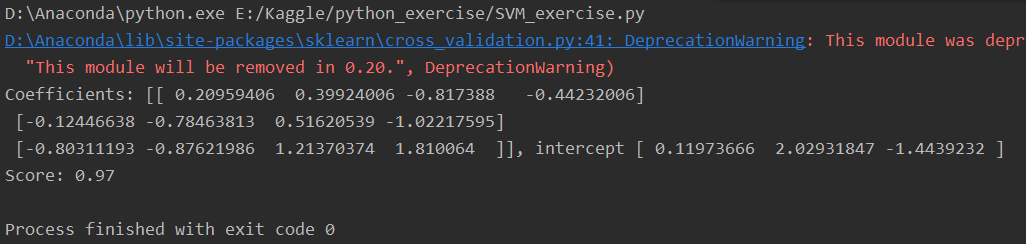

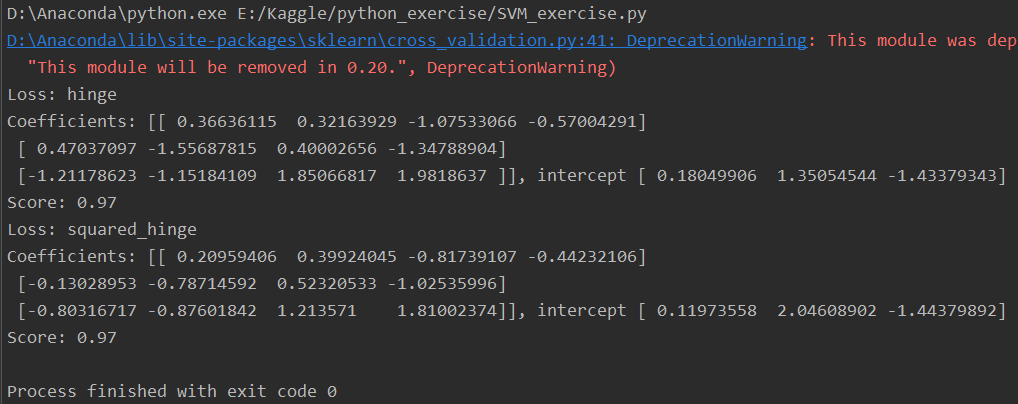

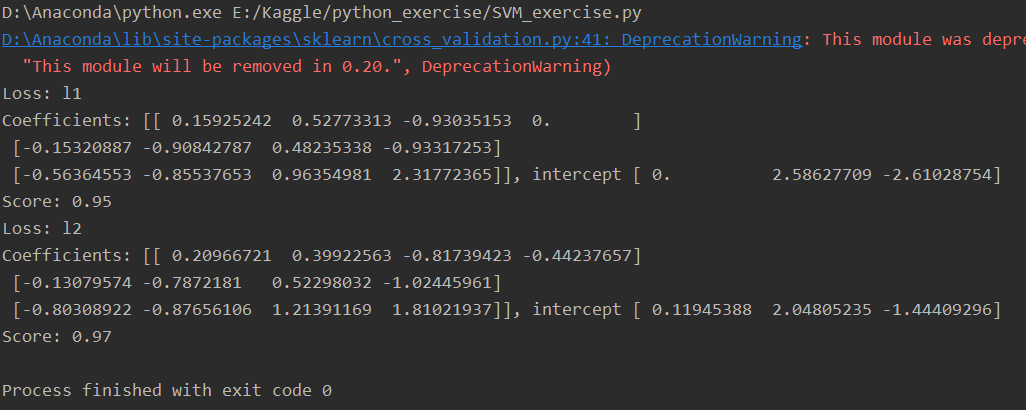

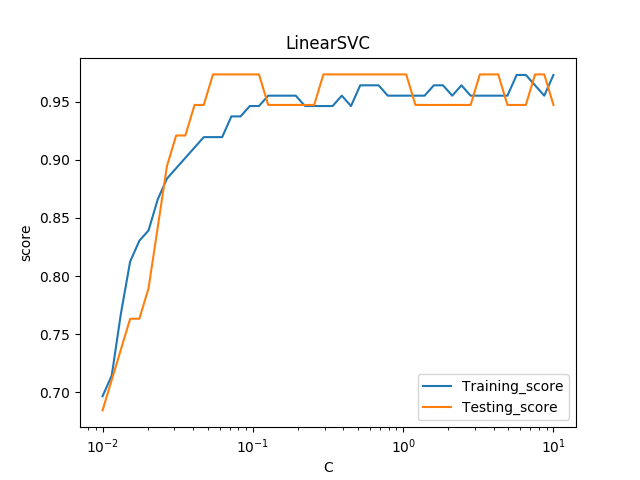

    # -*- coding: utf-8 -*-
    __author__ = 'LinearSVC: linear support vector classifier with a penalty term'

    # Imports
    import matplotlib.pyplot as plt
    import numpy as np
    # model_selection replaces the cross_validation module, which was
    # deprecated in scikit-learn 0.18 and removed in 0.20.
    from sklearn import datasets, model_selection, svm

    # Dataset: the iris dataset
    '''
    Number of samples: 150
    Number of classes: 3 (setosa, versicolor, virginica)
    Each sample has 4 attributes: sepal length, sepal width, petal length, petal width
    '''
    def load_data_classfication():
        iris = datasets.load_iris()
        X_train = iris.data
        y_train = iris.target
        # Stratified split: 75% training, 25% testing
        return model_selection.train_test_split(
            X_train, y_train, test_size=0.25, random_state=0, stratify=y_train)

    # Fit the classifier to obtain the parameters w and b,
    # then report the prediction accuracy
    '''
    Calls the linear classifier with its defaults, which are defined as follows:
    penalty = 'l2'           the penalty term
    loss = 'squared_hinge'   the square of the hinge loss
    dual = True              solve the dual problem
    tol = 0.0001             threshold for stopping the iteration
    C = 1.0                  penalty parameter
    multi_class = 'ovr'      multi-class strategy: one-vs-rest
    fit_intercept = True     compute the intercept, i.e. the constant term of the decision function
    intercept_scaling = 1    the instance X becomes the vector [X, intercept_scaling], which is
                             equivalent to adding an artificial feature that is constant for all instances
    class_weight = None      every class has weight 1
    verbose = 0              verbose output is off
    random_state = None      use the default random number generator
    max_iter = 1000          maximum number of iterations
    '''
    def test_LinearSVC(*data):
        X_train, X_test, y_train, y_test = data
        cls = svm.LinearSVC()
        cls.fit(X_train, y_train)
        print('Coefficients: %s, intercept %s' % (cls.coef_, cls.intercept_))
        print('Score: %.2f' % cls.score(X_test, y_test))

    # Examine the effect of the loss function
    def test_LinearSVC_loss(*data):
        X_train, X_test, y_train, y_test = data
        losses = ['hinge', 'squared_hinge']
        for loss in losses:
            cls = svm.LinearSVC(loss=loss)
            cls.fit(X_train, y_train)
            print('Loss: %s' % loss)
            print('Coefficients: %s, intercept %s' % (cls.coef_, cls.intercept_))
            print('Score: %.2f' % cls.score(X_test, y_test))

    # Examine the effect of the penalty type.
    # penalty='l1' is not supported with dual=True, so dual=False is used.
    def test_LinearSVC_L12(*data):
        X_train, X_test, y_train, y_test = data
        L12 = ['l1', 'l2']
        for p in L12:
            cls = svm.LinearSVC(penalty=p, dual=False)
            cls.fit(X_train, y_train)
            print('Penalty: %s' % p)
            print('Coefficients: %s, intercept %s' % (cls.coef_, cls.intercept_))
            print('Score: %.2f' % cls.score(X_test, y_test))

    # Examine the effect of the penalty parameter C
    def test_LinearSVC_C(*data):
        X_train, X_test, y_train, y_test = data
        Cs = np.logspace(-2, 1)  # 50 values from 0.01 to 10
        train_scores = []
        test_scores = []
        for C in Cs:
            cls = svm.LinearSVC(C=C)
            cls.fit(X_train, y_train)
            train_scores.append(cls.score(X_train, y_train))
            test_scores.append(cls.score(X_test, y_test))
        # Plot
        fig = plt.figure()
        ax = fig.add_subplot(1, 1, 1)
        ax.plot(Cs, train_scores, label='Training_score')
        ax.plot(Cs, test_scores, label='Testing_score')
        ax.set_xlabel(r'C')
        ax.set_ylabel(r'score')
        ax.set_xscale('log')
        ax.set_title('LinearSVC')
        ax.legend(loc='best')
        #plt.show()
        plt.savefig('C_LinearSVC.png')

    # Call the test functions (uncomment the one you want to run)
    if __name__ == '__main__':
        X_train, X_test, y_train, y_test = load_data_classfication()
        # Result 1
        #test_LinearSVC(X_train, X_test, y_train, y_test)
        # Result 2
        #test_LinearSVC_loss(X_train, X_test, y_train, y_test)
        # Result 3
        #test_LinearSVC_L12(X_train, X_test, y_train, y_test)
        # Result 4
        test_LinearSVC_C(X_train, X_test, y_train, y_test)
        # Result 5

    '''Result 1
    Coefficients: [[ 0.20959406  0.39924006 -0.817388   -0.44232006]
     [-0.12446638 -0.78463813  0.51620539 -1.02217595]
     [-0.80311193 -0.87621986  1.21370374  1.810064  ]], intercept [ 0.11973666  2.02931847 -1.4439232 ]
    Score: 0.97
    '''
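
To make the printed `w` and `b` less abstract, here is a minimal sketch of how a one-vs-rest linear classifier turns them into a prediction: each class gets a score `w_k . x + b_k`, and the class with the largest score wins. The weights and intercepts are copied from the Result 1 output; the `sample` measurements and the helper name `decision_scores` are assumptions made for illustration, not part of the original code.

```python
# Weights and intercepts copied from the "Result 1" output
# (one row per class, one column per feature).
coef = [
    [0.20959406, 0.39924006, -0.817388, -0.44232006],
    [-0.12446638, -0.78463813, 0.51620539, -1.02217595],
    [-0.80311193, -0.87621986, 1.21370374, 1.810064],
]
intercept = [0.11973666, 2.02931847, -1.4439232]
class_names = ['setosa', 'versicolor', 'virginica']

# Assumed sample for illustration:
# sepal length, sepal width, petal length, petal width (cm).
sample = [5.1, 3.5, 1.4, 0.2]


def decision_scores(x):
    """Return one score w_k . x + b_k per class (hypothetical helper)."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(coef, intercept)]


scores = decision_scores(sample)
predicted = scores.index(max(scores))  # index of the highest-scoring class
print('scores: %s' % [round(s, 3) for s in scores])
print('predicted class: %s' % class_names[predicted])
```

With these weights the first class has the only positive score, so the assumed sample is classified as setosa. On a fitted model, `cls.decision_function` and `cls.predict` perform the same computation for you.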

# Result 3

# Result 4

# Result 5
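
The two loss options compared in `test_LinearSVC_loss` differ only in how strongly they punish margin violations. The sketch below evaluates both on a few hand-picked margins, where the margin is `y * f(x)` with `y` in {-1, +1}. The function names and the margin values are assumptions chosen for illustration; this is not scikit-learn's internal implementation.

```python
def hinge(margin):
    """Hinge loss: zero once the margin reaches 1, linear below that."""
    return max(0.0, 1.0 - margin)


def squared_hinge(margin):
    """Squared hinge loss: the hinge loss squared."""
    return hinge(margin) ** 2


# Assumed margins: badly misclassified, on the boundary,
# inside the margin, exactly on the margin, safely correct.
margins = [-1.0, 0.0, 0.5, 1.0, 2.0]
for m in margins:
    print('margin %5.2f  hinge %.2f  squared_hinge %.2f'
          % (m, hinge(m), squared_hinge(m)))
```

Both losses are zero for samples with a margin of at least 1. The squared version penalizes large violations more heavily and small ones more gently, which is one reason the fitted coefficients can differ between the two settings.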