Deploying a Kubernetes 1.13.1 cluster with kubeadm: a complete record


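What is k8s

Kubernetes, abbreviated as k8s, is Google's open-source container cluster management system. Built on top of Docker, it gives containerized applications a complete set of capabilities, including deployment and execution, resource scheduling, service discovery, and dynamic scaling, which makes large container clusters much easier to manage. k8s emerged as containers evolved into container clouds. However, k8s is not a silver bullet and does not suit every cloud scenario. The official "What Kubernetes is not" section may help clarify this:

Kubernetes is not a traditional, all-inclusive PaaS (Platform as a Service) system. We preserve users' freedom of choice, which is very important.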


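- Kubernetes does not limit the types of applications supported. It does not dictate application frameworks (e.g. Wildfly), does not restrict the supported language runtimes (e.g. Java, Python, Ruby), does not cater only to twelve-factor applications, and does not distinguish between "apps" and "services". Kubernetes aims to support an extremely diverse variety of workloads, including stateless, stateful, and data-processing workloads. If an application can run in a container, it can run on Kubernetes.

- Kubernetes does not provide middleware (e.g. message middleware), data-processing frameworks (e.g. Spark), databases (e.g. MySQL), or cluster storage systems (e.g. Ceph) as built-in services. Such applications can run on Kubernetes.

- Kubernetes does not provide a click-to-deploy service marketplace.

- Kubernetes is unopinionated from source code to image. It does not deploy source code and does not build your application. Continuous integration (CI) workflows are an area where different users and projects have their own requirements and preferences, so we support layering CI workflows on Kubernetes but do not dictate how they should work.

- Kubernetes lets users choose their own logging, monitoring, and alerting systems (although we provide some integrations as proof of concept).

- Kubernetes does not provide or mandate a comprehensive application configuration language/system (e.g. jsonnet).

- Kubernetes does not provide or adopt any comprehensive machine configuration, maintenance, management, or self-healing system.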


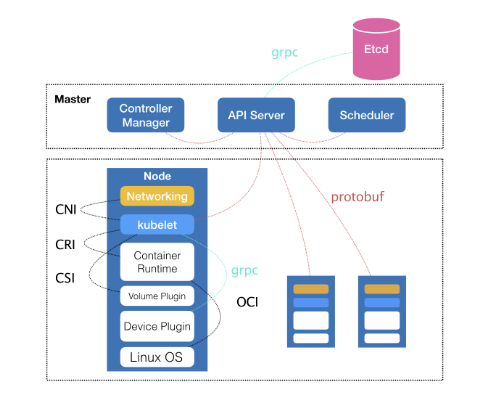

Kubernetes的整體架構如下:

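The control node, i.e. the Master node, is made up of three closely cooperating, independent components: kube-apiserver, which serves the API; kube-scheduler, which handles scheduling; and kube-controller-manager, which handles container orchestration. The cluster's persistent data is processed by kube-apiserver and stored in etcd.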
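On a kubeadm-built master, these control plane components (plus etcd) run as static Pods, as the init log later in this post shows ("Creating static Pod manifest for ..."). A quick way to connect the architecture to a real node is to check that their manifests exist. This is a minimal sketch; it assumes kubeadm's default directory /etc/kubernetes/manifests and the file names etcd.yaml, kube-apiserver.yaml, kube-controller-manager.yaml and kube-scheduler.yaml, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Print each control plane component whose static Pod manifest is missing.
# Assumption: kubeadm's default manifest directory and file names.
missing_control_plane_manifests() {
  local dir=${1:-/etc/kubernetes/manifests}
  local name
  for name in etcd kube-apiserver kube-controller-manager kube-scheduler; do
    # kubelet starts every manifest it finds in this directory as a static Pod
    [ -f "$dir/$name.yaml" ] || echo "$name"
  done
}
```

Run `missing_control_plane_manifests` on the master after init; empty output means all four manifests are in place.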

On the compute (worker) nodes, the core component is kubelet.

In Kubernetes, kubelet is mainly responsible for the following:

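1. kubelet interacts with the container runtime (such as Docker). This interaction relies on a remote call interface called CRI (Container Runtime Interface), which defines the core operations of a container runtime, for example all the parameters needed to start a container.

This is why Kubernetes does not care which container runtime you deploy or how it is implemented: as long as the runtime can run standard container images, it can be plugged into Kubernetes by implementing CRI.

2. The concrete container runtime, such as Docker, generally interacts with the underlying Linux operating system through the OCI container runtime specification, that is, it translates CRI requests into calls to the Linux operating system (manipulating Linux namespaces, cgroups, and so on).

3. In addition, kubelet communicates over gRPC with plugins called Device Plugins. These plugins are the main mechanism Kubernetes uses to manage physical host devices such as GPUs, and they matter for machine-learning training, high-performance workloads, and similar work on Kubernetes.

4. Another important job of kubelet is to call network and storage plugins to set up networking and persistent storage for containers. The interfaces these two kinds of plugins use to talk to kubelet are CNI (Container Network Interface) and CSI (Container Storage Interface), respectively.

In short, kubelet is a component reimplemented specifically to give Kubernetes its container management capabilities.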
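To see the CNI side of this in practice: assuming kubelet runs with `--network-plugin=cni` (as kubeadm configures it), it reads network configs from a directory that defaults to /etc/cni/net.d, and until a network plugin writes a config there, pods that need pod networking, such as CoreDNS, stay Pending. The helper below is a hypothetical sketch that assumes that default directory and the .conf/.conflist/.json extensions.

```shell
#!/usr/bin/env bash
# Succeed if at least one CNI network config exists in the given directory.
# Assumption: kubelet's default CNI config dir (/etc/cni/net.d).
cni_config_present() {
  local dir=${1:-/etc/cni/net.d}
  local f
  for f in "$dir"/*.conf "$dir"/*.conflist "$dir"/*.json; do
    # an unmatched glob stays as a literal pattern, so -e filters it out
    [ -e "$f" ] && return 0
  done
  return 1
}
```

For example, `cni_config_present || echo "no CNI config yet: install a network plugin"`.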

Kubernetes deployment

Install Docker

Since 1.6, Kubernetes uses CRI (Container Runtime Interface). The default container runtime is still Docker, implemented through the dockershim CRI built into kubelet.

apt-get remove docker-ce

apt autoremove

apt-get install docker-ce

Start Docker:

systemctl enable docker

systemctl start docker
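The kubeadm preflight output later in this post warns that Docker 18.09.0 "is not on the list of validated versions" (latest validated: 18.06). The warning did not stop init there, but it helps to know in advance whether you will see it. A minimal sketch, with a hypothetical function name, that compares only major.minor against 18.06 by default:

```shell
#!/usr/bin/env bash
# Succeed if a Docker version is newer than the latest version kubeadm validated.
# The default of 18.06 comes from the kubeadm 1.13.1 preflight warning.
docker_newer_than_validated() {
  local version=$1 latest=${2:-18.06}
  local maj min lmaj lmin rest
  IFS=. read -r maj min rest <<< "$version"
  IFS=. read -r lmaj lmin rest <<< "$latest"
  # drop suffixes such as "-ce"; force base 10 so "09" is not parsed as octal
  min=${min%%[!0-9]*}
  lmin=${lmin%%[!0-9]*}
  (( 10#$maj * 100 + 10#$min > 10#$lmaj * 100 + 10#$lmin ))
}
```

Usage: `docker_newer_than_validated "$(docker version --format '{{.Server.Version}}')" && echo "expect the SystemVerification warning"`.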

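Install kubeadm, kubelet, kubectl

kubeadm: the command-line tool that bootstraps a k8s cluster.

kubelet: the core component that runs on every node in the cluster and performs operations such as starting pods and containers.

kubectl: the command-line tool for operating the cluster.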

First, add the apt key:

sudo apt update && sudo apt install -y apt-transport-https curl

curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

Add the Kubernetes apt source by opening the list file:

sudo vim /etc/apt/sources.list.d/kubernetes.list

and adding this line to it:

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

Install the packages (apt-mark hold keeps them from being upgraded automatically):

sudo apt update

sudo apt install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl
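The install command above pulls whatever version is newest in the mirror, which was 1.13.1 when this post was written. To stay on 1.13.1 later, you can pin exact package versions. This is a sketch under an assumption: that the mirror's packages use a `-00` Debian revision (verify with `apt-cache madison kubeadm` before relying on it). The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Build "pkg=version-revision" arguments so apt installs one exact release.
# Assumption: packages in the mirror use a "-00" Debian revision.
k8s_pkg_pins() {
  local version=$1 revision=${2:-00}
  local pkg
  local -a pins=()
  for pkg in kubelet kubeadm kubectl; do
    pins+=("${pkg}=${version}-${revision}")
  done
  echo "${pins[*]}"
}
```

Usage: `sudo apt install -y $(k8s_pkg_pins 1.13.1)`, left unquoted on purpose so each pin becomes a separate argument.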

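Initialize the Master node

Before initializing, keep the following points in mind:

1. Choose a network plugin and check whether it requires any parameters when the Master is initialized; for example, depending on the plugin you may need to set --pod-network-cidr. See: Installing a pod network add-on.

2. kubeadm uses the network interface associated with the default gateway (typically eth0, usually with the private IP) as the Master node's advertise address. To use a different interface, set --apiserver-advertise-address=<ip-address>. For IPv6, you must specify an IPv6 address, e.g. --apiserver-advertise-address=fd00::101.

3. Version 1.13 finally addresses the pain point of being unable to pull images from overseas registries inside China by adding an --image-repository parameter. Its default is k8s.gcr.io; we point it at a domestic mirror: registry.aliyuncs.com/google_containers.

4. We also need to specify --kubernetes-version, because its default value is stable-1, which makes kubeadm download the latest version number from https://dl.k8s.io/release/stable-1.txt. Pinning it to a fixed version (the latest at the time of writing: v1.13.1) skips that network request.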
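The same settings can also live in a kubeadm config file instead of flags, which is easier to keep under version control. This is a sketch assuming the `kubeadm.k8s.io/v1beta1` config API that kubeadm 1.13 uses; `write_kubeadm_config` is a hypothetical helper and the defaults simply mirror the values used in this post.

```shell
#!/usr/bin/env bash
# Write a kubeadm config file equivalent to the init flags used in this post.
# Assumption: the kubeadm.k8s.io/v1beta1 config API shipped with kubeadm 1.13.
write_kubeadm_config() {
  local file=$1
  local version=${2:-v1.13.1}
  local repo=${3:-registry.aliyuncs.com/google_containers}
  local pod_cidr=${4:-192.168.0.0/16}
  cat > "$file" <<EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: ${version}
imageRepository: ${repo}
networking:
  podSubnet: ${pod_cidr}
EOF
}
```

Usage: `write_kubeadm_config kubeadm.yaml`, then `kubeadm config images pull --config kubeadm.yaml` (pre-pulling, as the preflight output suggests) and `kubeadm init --config kubeadm.yaml`.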

#kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.13.1 --pod-network-cidr=192.168.0.0/16

[init] Using Kubernetes version: v1.13.1
[preflight] Running pre-flight checks
[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 18.09.0. Latest validated version: 18.06
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Activating the kubelet service
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [fn004 localhost] and IPs [121.197.130.187 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [fn004 localhost] and IPs [121.197.130.187 127.0.0.1 ::1]
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [fn004 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 121.197.130.187]
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 21.504803 seconds
[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "fn004" as an annotation
[mark-control-plane] Marking the node fn004 as control-plane by adding the label "node-role.kubernetes.io/master=''"
[mark-control-plane] Marking the node fn004 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: b0x4dv.nbut63ktiaikcc24
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node as root:

kubeadm join [public IP]:6443 --token b0x4dv.nbut63ktiaikcc24 --discovery-token-ca-cert-hash sha256:551fe78b50dfe52410869685b7dc70b9a27e550241a6112d8d1fef2073759bb4

If init fails and you need to run it again, reset the cluster with kubeadm reset first, then initialize again.

Next, run:

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

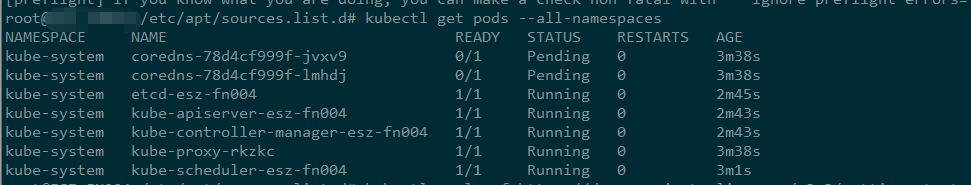

kubectl get pods --all-namespaces # coredns shows Pending because we have not installed a network plugin yet

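Calico is a pure layer-3 virtual networking solution. It assigns an IP to every container and turns every host into a router that connects containers on different hosts. Unlike VxLAN-based overlays, Calico in its pure routed mode adds no extra encapsulation to packets and needs no NAT or port mapping, so it scales and performs well.

By default, the Calico network plugin uses the 192.168.0.0/16 range. During init we already passed --pod-network-cidr=192.168.0.0/16 to match Calico; alternatively, you can edit calico.yaml to use a different range.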
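Whatever range you choose, the pod CIDR should not overlap the networks your hosts are on, otherwise pod routes can shadow real host addresses. A minimal pure-bash sketch (IPv4 only; both function names are hypothetical) for checking whether an address falls inside a CIDR:

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Succeed if IPv4 address $1 lies inside CIDR $2 (e.g. 192.168.0.0/16).
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example, the master address from the init log, 121.197.130.187, is outside 192.168.0.0/16, so `ip_in_cidr 121.197.130.187 192.168.0.0/16` fails; run the same check for every node address before settling on a range.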

Install the Calico network plugin with the following commands:

kubectl apply -f https://docs.projectcalico.org/v3.3/getting-started/kubernetes/installation/hosted/rbac-kdd.yaml

kubectl apply -f https://docs.projectcalico.org/v3.3/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml

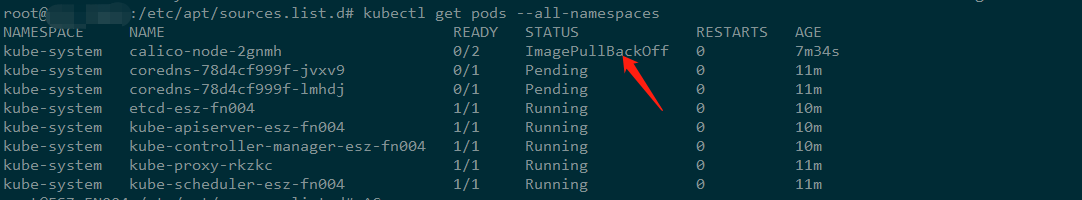

The screenshot above shows image pulls failing; you can find the cause with systemctl status kubelet. The correct result looks like this:

NAMESPACE     NAME                              READY   STATUS    RESTARTS   AGE
kube-system   calico-node-wdgl5                 2/2     Running   0          90s
kube-system   coredns-78d4cf999f-jvxv9          1/1     Running   0          27m
kube-system   coredns-78d4cf999f-lmhdj          1/1     Running   0          27m
kube-system   etcd-fn004                        1/1     Running   0          26m
kube-system   kube-apiserver-fn004              1/1     Running   0          26m
kube-system   kube-controller-manager-fn004     1/1     Running   0          26m
kube-system   kube-proxy-rkzkc                  1/1     Running   0          27m
kube-system   kube-scheduler-fn004              1/1     Running   0          27m
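With more pods, scanning this table by eye gets tedious. A small filter can print only the pods that are not yet Running or not fully ready. This is a sketch (hypothetical function name) that assumes the default column layout of `kubectl get pods --all-namespaces`: NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE. Note that pods of finished Jobs (STATUS Completed) would also be listed.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods --all-namespaces` output on stdin and print every pod
# that is not Running or whose READY count is incomplete (e.g. "0/1").
not_ready_pods() {
  awk 'NR > 1 {
    split($3, ready, "/")   # READY column: "ready/total"
    if ($4 != "Running" || ready[1] != ready[2])
      print $1 "/" $2 " " $3 " " $4
  }'
}
```

Usage: `kubectl get pods --all-namespaces | not_ready_pods`; empty output means everything is up.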

That completes the deployment of a master node. Next, you can add worker nodes and test the cluster.

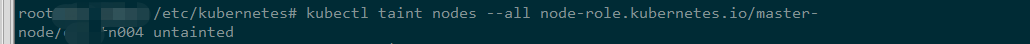

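Master isolation

By default, for security reasons, the cluster does not schedule pods on the Master node. In a development environment, however, you may have only a single Master node; in that case, you can lift this restriction with the following command: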

kubectl taint nodes --all node-role.kubernetes.io/master-
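To confirm the taint is really gone, check whether `kubectl describe node` still shows the `node-role.kubernetes.io/master:NoSchedule` taint that the init log says kubeadm added. A trivial sketch with a hypothetical function name:

```shell
#!/usr/bin/env bash
# Succeed if `kubectl describe node` output (on stdin) still carries the
# master NoSchedule taint that kubeadm init added.
has_master_taint() {
  grep -qF 'node-role.kubernetes.io/master:NoSchedule'
}
```

Usage: `kubectl describe node fn004 | has_master_taint && echo "still tainted"`, with fn004 being the master's node name from the init log.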

Add a worker node

Log in to another machine, B (Docker, kubeadm, and kubelet must already be installed on it as described above), and run the join command printed by init:

kubeadm join [masterIP]:6443 --token b0x4dv.nbut63ktiaikcc24 --discovery-token-ca-cert-hash sha256:551fe78b50dfe52410869685b7dc70b9a27e550241a6112d8d1fef2073759bb4

The output looks like this:

# kubeadm join [master_ip]:6443 --token b0x4dv.nbut63ktiaikcc24 --discovery-token-ca-cert-hash sha256:551fe78b50dfe52410869685b7dc70b9a27e550241a6112d8d1fef2073759bb4
[preflight] Running pre-flight checks
[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 18.09.0. Latest validated version: 18.06
[discovery] Trying to connect to API Server "master_ip:6443"
[discovery] Created cluster-info discovery client, requesting info from "https://master_ip:6443"
[discovery] Requesting info from "https://master_ip:6443" again to validate TLS against the pinned public key
[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "master_ip:6443"
[discovery] Successfully established connection with API Server "master_ip:6443"
[join] Reading configuration from the cluster...
[join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.13" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Activating the kubelet service
[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "fn001" as an annotation

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

On the master node, you can list the existing tokens with kubeadm token list.
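Bootstrap tokens expire after 24 hours by default, so a node added later needs a new one; `kubeadm token create --print-join-command` prints a complete, fresh join command. The sketch below adds two helpers with hypothetical names: a format check for tokens (six plus sixteen characters of `[a-z0-9]`, like `b0x4dv.nbut63ktiaikcc24`), and the openssl pipeline from the kubeadm documentation that recomputes the value after `sha256:` in `--discovery-token-ca-cert-hash`. The hash helper assumes openssl is installed and the CA key is RSA, as kubeadm generates it.

```shell
#!/usr/bin/env bash
# Succeed if the argument looks like a bootstrap token: 6 + 16 chars of [a-z0-9].
is_valid_token() {
  local re='^[a-z0-9]{6}\.[a-z0-9]{16}$'
  [[ $1 =~ $re ]]
}

# Print the SHA-256 of the cluster CA's public key (DER), i.e. the hex value
# used after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local cert=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master, `echo "sha256:$(ca_cert_hash)"` should reproduce the hash shown in the join command.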

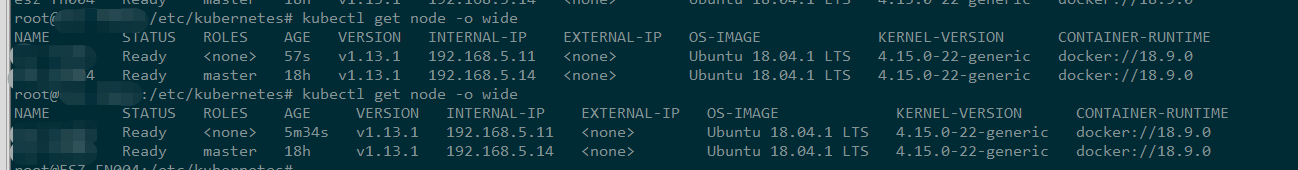

After a while, you can check the node status on the master node with kubectl get nodes:

Testing

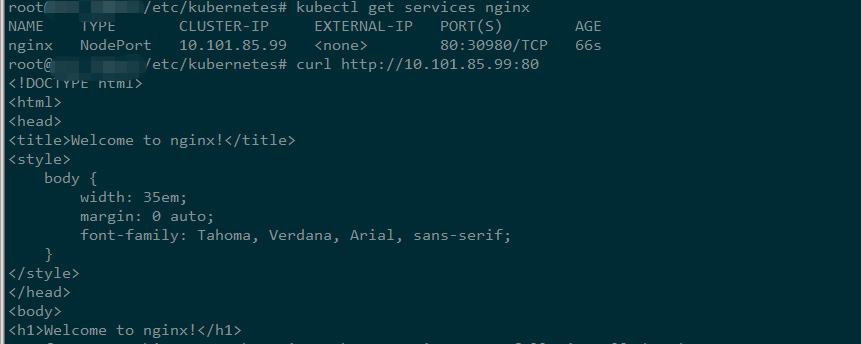

First, verify that kube-apiserver, kube-controller-manager, kube-scheduler, and the pod network work properly:

kubectl create deployment nginx --image=nginx:alpine # deploy nginx (starts with one pod)

kubectl scale deployment nginx --replicas=2 # scale it to 2 pods

kubectl get pods -l app=nginx -o wide # check that the nginx pods are running; each gets a pod IP starting with 192.168.

kubectl expose deployment nginx --port=80 --type=NodePort # expose the service outside the cluster as a NodePort

kubectl get services nginx # show the port reachable from outside the cluster
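To finish the test from outside the cluster, you need the NodePort from the PORT(S) column (shown as `80:<nodePort>/TCP`). A minimal sketch with a hypothetical function name that extracts it, assuming the default `kubectl get services` columns NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE:

```shell
#!/usr/bin/env bash
# Read `kubectl get services <name>` output on stdin and print the NodePort
# of the first port mapping, e.g. "80:31080/TCP" -> "31080".
nodeport_from_svc() {
  awk 'NR == 2 { print $5 }' | sed -E 's#^[0-9]+:([0-9]+)/.*#\1#'
}
```

Usage: `port=$(kubectl get services nginx | nodeport_from_svc)`, then `curl -s "http://<node-ip>:${port}" | grep -i 'welcome to nginx'`. `kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number without text parsing.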

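Troubleshooting:

systemctl status kubelet # in this case the errors came from configuration files that had been edited by hand, so kubelet kept failing to restart. The configuration files below are from version 1.13, for reference.

The following errors appeared:

kubelet[12305]: Flag --cgroup-driver has been deprecated, This parameter should be set via the config file specified by the Kubelet's --config flag. S

systemd[1]: kubelet.service: Service lacks both ExecStart= and ExecStop= setting. Refusing.

The first message is only a deprecation warning; the second is what stops systemd from starting kubelet. Check that the two configuration files /etc/systemd/system/kubelet.service.d/10-kubeadm.conf and /lib/systemd/system/kubelet.service were generated correctly. The correct contents are as follows: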

vim /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: This dropin only works with kubeadm and kubelet v1.11+
[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically
EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use
# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.
EnvironmentFile=-/etc/default/kubelet
ExecStart=
ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

vim /lib/systemd/system/kubelet.service

[Unit]
Description=kubelet: The Kubernetes Node Agent
Documentation=https://kubernetes.io/docs/home/

[Service]
ExecStart=/usr/bin/kubelet
Restart=always
StartLimitInterval=0
RestartSec=10

[Install]
WantedBy=multi-user.target
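The "lacks both ExecStart=" error typically appears when the drop-in keeps the empty `ExecStart=` line (which resets the command inherited from kubelet.service) but the following `ExecStart=/usr/bin/kubelet ...` line is missing or broken. The sketch below (hypothetical function name) approximates systemd's rule for a simple service: files are read in order, an empty `ExecStart=` clears the command, and a non-empty one sets it. It ignores section headers and other directives, so treat it as a quick check, not a replacement for systemd itself.

```shell
#!/usr/bin/env bash
# Print the effective ExecStart= command after applying the unit file and its
# drop-ins in the given order; exit non-zero if no command survives.
effective_execstart() {
  awk '
    /^[ \t]*ExecStart[ \t]*=/ {
      line = $0
      sub(/^[^=]*=[ \t]*/, "", line)   # strip the "ExecStart=" prefix
      cmd = line                       # empty value resets, non-empty sets
    }
    END { if (cmd == "") exit 1; print cmd }
  ' "$@"
}
```

Usage: `effective_execstart /lib/systemd/system/kubelet.service /etc/systemd/system/kubelet.service.d/10-kubeadm.conf`. After fixing either file, run `systemctl daemon-reload` and `systemctl restart kubelet`; `systemctl cat kubelet` shows the merged unit that systemd actually sees.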

References:

https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/