HashMap vs. Skip List (SkipList) vs. Red-Black Tree, with a Walkthrough of the ConcurrentSkipListMap Source Code

Binary Search and AVL Tree Lookup

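Binary search requires random access to the elements, so they have to be stored in contiguous memory. Lookups are fast, but inserting or deleting an element means shifting many other elements to keep the sequence sorted. If you need a data structure that supports binary-search-style lookup and also fast insertion and deletion, the first candidate is the binary search tree. In the worst case, however, a binary search tree can degenerate into a linked list.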
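The trade-off described above can be sketched with the standard library. This is only an illustration: the class and method names (`SortedArrayDemo`, `insertSorted`) are made up for this example. The lookup is logarithmic, but `add(pos, key)` on an array-backed list has to shift every element after the insertion point.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

class SortedArrayDemo {
    // Inserts key into an already sorted list and returns the index used.
    static int insertSorted(List<Integer> sorted, int key) {
        // O(log n): binary search over a random-access list.
        int pos = Collections.binarySearch(sorted, key);
        if (pos < 0) {
            // Not found: binarySearch returns -(insertionPoint) - 1.
            pos = -(pos + 1);
        }
        // O(n): every element from pos onward is shifted one slot to the right.
        sorted.add(pos, key);
        return pos;
    }

    public static void main(String[] args) {
        List<Integer> list = new ArrayList<>(Arrays.asList(3, 6, 7, 9, 12, 17, 21));
        int pos = insertSorted(list, 19);
        System.out.println("inserted at index " + pos + ": " + list);
    }
}
```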

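That is why balanced search trees appeared. Depending on the balancing scheme there are AVL trees, B-Trees, B+Trees, red-black trees, and others. AVL trees, however, are fairly complex to implement and their rebalancing operations are hard to understand. In that situation a skip list (SkipList) is a simpler alternative.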

What Is a Skip List

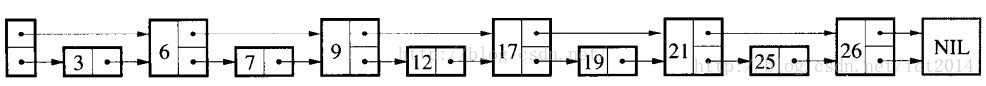

A conventional singly linked list is a linear structure: inserting a node into a sorted linked list takes O(n) time, and a search also takes O(n).

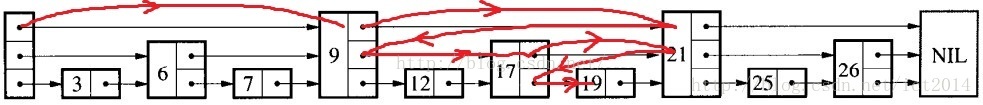

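A simple skip list example:

With the skip list shown above, a search needs only about n/2 comparisons, because we can first follow the topmost pointer of each node and thereby skip half of the nodes.

Suppose we want to find 19. We first compare with 6; 19 is greater, so we compare with 9, then with 17, and so on, until we reach 21 and find that 21 is greater than 19. The target must therefore lie between 17 and 21. Along the way the search skipped nodes such as 3, 7, and 12, which is why it takes roughly n/2 steps.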

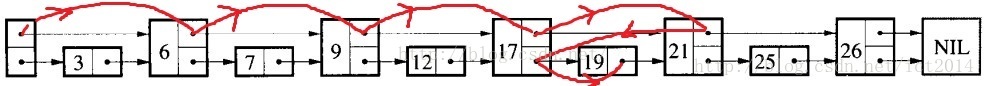

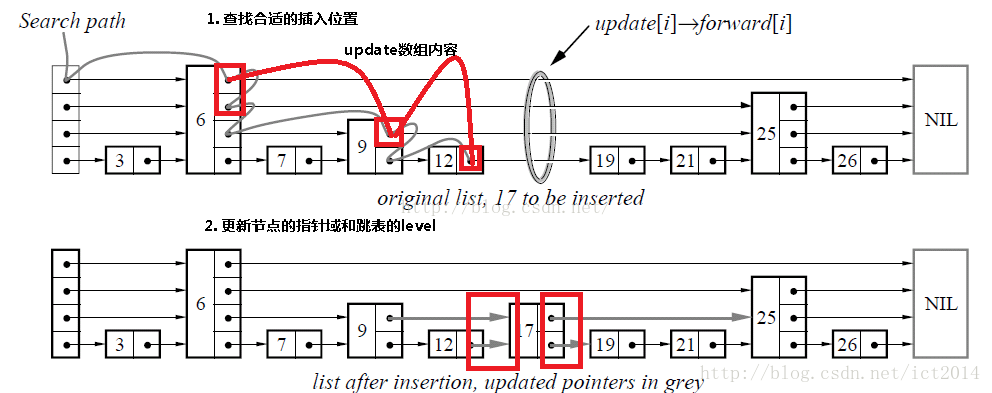

The search process is shown in the figure below:

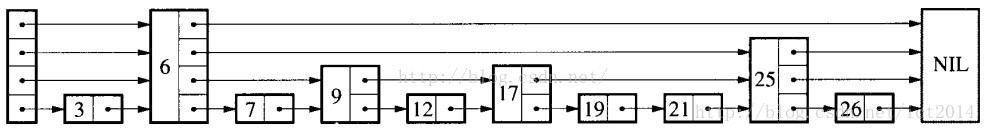

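That is essentially the whole idea of a skip list: each node holds not just one pointer to the next node but possibly several pointers to later nodes, so unnecessary nodes can be skipped and search, deletion, and other operations become faster. How many forward pointers a given node holds is decided by a random function, and the result is a skip list.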
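A common way to pick the number of pointers per node is repeated coin flipping. The sketch below assumes a probability of 1/2 per extra level and a cap of 16 levels; both values, and the names `RandomLevelDemo` and `randomLevel`, are assumptions made for illustration and are not taken from any particular implementation.

```java
import java.util.Random;

class RandomLevelDemo {
    static final int MAX_LEVEL = 16; // assumed cap on node height

    // Keep flipping a fair coin; each "heads" adds one more level.
    // About half of the nodes get height 1, a quarter height 2, and so on.
    static int randomLevel(Random rnd) {
        int level = 1;
        while (level < MAX_LEVEL && rnd.nextBoolean()) {
            level++;
        }
        return level;
    }

    public static void main(String[] args) {
        Random rnd = new Random();
        int[] histogram = new int[MAX_LEVEL + 1];
        for (int i = 0; i < 100_000; i++) {
            histogram[randomLevel(rnd)]++;
        }
        // Print how many nodes ended up with each of the first few heights.
        for (int lvl = 1; lvl <= 6; lvl++) {
            System.out.println("height " + lvl + ": " + histogram[lvl]);
        }
    }
}
```

Because the heights follow a geometric distribution, the expected number of pointers per node stays constant (about two with these assumptions) while the higher levels still act as express lanes.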

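A randomly generated skip list might look like the figure below:

A skip list is also a "trade space for time" algorithm: adding forward pointers to each node improves search efficiency.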

“Skip lists are data structures that use probabilistic balancing rather than strictly enforced balancing. As a result, the algorithms for insertion and deletion in skip lists are much simpler and significantly faster than equivalent algorithms for balanced trees.”

In other words, skip lists rely on probabilistic balancing.

A skip list is a randomized data structure; the open-source projects Redis and LevelDB both use it.

SkipList Operations

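Search: given a key, determine whether it appears in the skip list. If it does, return its value; otherwise report that it is absent. Take the figure as an example: does 19 exist? Starting from the head node, we first compare with 9; 19 is greater. We then compare with 21; 19 is smaller, so the value must lie between nodes 9 and 21. Dropping down a level, we compare with 17 (greater) and then with 21 (smaller), so the value must lie between nodes 17 and 21. Dropping down once more, we compare with 19 and find it. The figure illustrates the path: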
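The search walk can be sketched as follows. To keep the example deterministic, the list is built with fixed node heights (every second node reaches level 1, every fourth level 2, and so on) instead of random ones; that construction, and the names `SkipSearchDemo`, `build`, and `contains`, are assumptions made purely for illustration.

```java
import java.util.Arrays;

class SkipSearchDemo {
    static final int MAX_HEIGHT = 4; // assumed number of levels

    static final class Node {
        final int key;
        final Node[] next; // next[i] is the successor on level i (0 = bottom)
        Node(int key, int height) {
            this.key = key;
            this.next = new Node[height];
        }
    }

    // Builds a skip list from sorted keys with deterministic heights.
    static Node build(int[] sortedKeys) {
        Node head = new Node(Integer.MIN_VALUE, MAX_HEIGHT);
        Node[] last = new Node[MAX_HEIGHT];
        Arrays.fill(last, head);
        for (int i = 0; i < sortedKeys.length; i++) {
            int height = Math.min(MAX_HEIGHT, 1 + Integer.numberOfTrailingZeros(i + 1));
            Node n = new Node(sortedKeys[i], height);
            for (int lvl = 0; lvl < height; lvl++) {
                last[lvl].next[lvl] = n; // append n to the end of level lvl
                last[lvl] = n;
            }
        }
        return head;
    }

    // Start at the top level, move right while the next key is smaller,
    // then drop one level and repeat until the bottom level is reached.
    static boolean contains(Node head, int key) {
        Node cur = head;
        for (int lvl = head.next.length - 1; lvl >= 0; lvl--) {
            while (cur.next[lvl] != null && cur.next[lvl].key < key) {
                cur = cur.next[lvl];
            }
        }
        Node candidate = cur.next[0];
        return candidate != null && candidate.key == key;
    }

    public static void main(String[] args) {
        Node head = build(new int[] {3, 6, 7, 9, 12, 17, 19, 21, 25, 26});
        System.out.println("contains 19: " + contains(head, 19));
        System.out.println("contains 20: " + contains(head, 20));
    }
}
```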

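Insertion: again using the figure, the search path is shown by the gray line. A new node is allocated (node 17 in the figure), and then the pointers that should point to the new node, as well as the new node's own pointers to its successors, are adjusted. One trick is to use an update array that records, on each level, the last node visited before the position of 17, that is, its predecessor on that level. The contents of update are marked by the red lines; these are the only places whose pointers may need to change.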
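A minimal single-threaded sketch of insertion with an `update` array might look like this. The level cap, the coin-flip height, and the names `SkipInsertDemo`, `insert`, and `keys` are assumptions for illustration; this is not the code of any specific library.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

class SkipInsertDemo {
    static final int MAX_LEVEL = 8; // assumed cap on node height

    static final class Node {
        final int key;
        final Node[] next;
        Node(int key, int height) {
            this.key = key;
            this.next = new Node[height];
        }
    }

    private final Node head = new Node(Integer.MIN_VALUE, MAX_LEVEL);
    private final Random rnd = new Random();

    private int randomLevel() {
        int level = 1;
        while (level < MAX_LEVEL && rnd.nextBoolean()) {
            level++;
        }
        return level;
    }

    // Returns false if the key is already present.
    boolean insert(int key) {
        // update[lvl] = last node on level lvl whose key is smaller than key.
        Node[] update = new Node[MAX_LEVEL];
        Node cur = head;
        for (int lvl = MAX_LEVEL - 1; lvl >= 0; lvl--) {
            while (cur.next[lvl] != null && cur.next[lvl].key < key) {
                cur = cur.next[lvl];
            }
            update[lvl] = cur;
        }
        Node succ = cur.next[0];
        if (succ != null && succ.key == key) {
            return false;
        }
        int height = randomLevel();
        Node node = new Node(key, height);
        // Splice the new node in on every level it participates in.
        for (int lvl = 0; lvl < height; lvl++) {
            node.next[lvl] = update[lvl].next[lvl];
            update[lvl].next[lvl] = node;
        }
        return true;
    }

    // Walks the bottom level, which always contains every key in order.
    List<Integer> keys() {
        List<Integer> out = new ArrayList<>();
        for (Node n = head.next[0]; n != null; n = n.next[0]) {
            out.add(n.key);
        }
        return out;
    }
}
```

Only the nodes recorded in `update` have their pointers rewritten, which is why insertion stays local and needs no global rebalancing.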

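Key-Value Data Structures

Three key-value data structures are in common use today: hash tables, red-black trees, and SkipLists. Each has its own strengths and weaknesses (deletion is not considered here):

Hash table: fastest insertion and lookup, O(1) on average; can be made lock-free when buckets are implemented as linked lists; getting the data in order requires an explicit sort.

Red-black tree: insertion and lookup are O(log n) with a small constant factor; a lock-free implementation is very complex, so locking is usually required; data is naturally ordered.

SkipList: insertion and lookup are O(log n), with a larger constant factor than a red-black tree; the underlying structure is a linked list, so it can be implemented lock-free; data is naturally ordered.

Suppose we want a key-value structure that supports insertion, lookup, iteration, and update. A hash table is not a good fit, because iterating in key order is expensive. Red-black tree insertion may rotate and recolor several nodes, so it needs an outer lock, which limits the achievable concurrency. A SkipList is built on linked lists and can be implemented lock-free while still performing well (single-threaded it is only slightly slower than a red-black tree), which makes it a very good fit for this kind of key-value structure. LevelDB's MemTable and Redis's sorted sets are both built on SkipLists.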
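The ordering difference is easy to observe with the JDK's own maps, taking `HashMap` as the hash table, `TreeMap` as the red-black tree, and `ConcurrentSkipListMap` as the SkipList. The helper names below (`OrderedIterationDemo`, `fill`, `iterationOrder`, `sortedKeys`) are made up for this sketch.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.ConcurrentSkipListMap;

class OrderedIterationDemo {
    static void fill(Map<Integer, String> map) {
        int[] keys = {21, 3, 17, 9, 19, 6};
        for (int k : keys) {
            map.put(k, "v" + k);
        }
    }

    // Keys in whatever order the map itself iterates.
    static List<Integer> iterationOrder(Map<Integer, String> map) {
        return new ArrayList<>(map.keySet());
    }

    // For a hash table, ordered output needs an explicit sort step.
    static List<Integer> sortedKeys(Map<Integer, String> map) {
        List<Integer> keys = new ArrayList<>(map.keySet());
        Collections.sort(keys);
        return keys;
    }

    public static void main(String[] args) {
        Map<Integer, String> hash = new HashMap<>();
        Map<Integer, String> tree = new TreeMap<>();
        Map<Integer, String> skip = new ConcurrentSkipListMap<>();
        fill(hash);
        fill(tree);
        fill(skip);
        System.out.println("HashMap (unspecified order): " + iterationOrder(hash));
        System.out.println("HashMap after sorting:       " + sortedKeys(hash));
        System.out.println("TreeMap:                     " + iterationOrder(tree));
        System.out.println("ConcurrentSkipListMap:       " + iterationOrder(skip));
    }
}
```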

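Lock-Based Concurrency

Advantages:

1. The programming model is simple; if the lock acquisition order is controlled carefully, deadlocks can generally be avoided.

2. Performance can be tuned by adjusting the lock granularity.

Disadvantages:

1. Every lock-based algorithm can potentially deadlock.

2. Locking and unlocking can require a switch from user mode to kernel mode, possibly accompanied by thread scheduling and context switches, which is expensive.

3. Reads and writes of the shared data exclude each other.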

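Lock-free programming is usually built on the CAS (Compare And Swap) operation: CAS(void *ptr, Any oldValue, Any newValue);

That is, it inspects the value at memory address ptr; if the value equals oldValue, it is replaced with newValue and true is returned, otherwise false is returned. On x86, CAS is normally carried out by the CPU's CMPXCHG instruction (with the LOCK prefix on multiprocessor systems). While executing it, the CPU locks the bus, or on modern processors typically just the affected cache line, so that other cores cannot access that memory, and then inspects or modifies *ptr. Put simply, CAS uses a hardware lock in the CPU to serialize access to a shared resource.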
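In Java the same primitive is exposed through the atomic classes, for example `AtomicInteger.compareAndSet`. The sketch below shows the usual read, compute, CAS, retry loop; the class and helper names (`CasCounterDemo`, `incrementWithCas`, `countConcurrently`) are made up for this example.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasCounterDemo {
    // Lock-free increment: retry until no other thread changed the value
    // between our read and our compareAndSet.
    static int incrementWithCas(AtomicInteger counter) {
        for (;;) {
            int oldValue = counter.get();
            int newValue = oldValue + 1;
            if (counter.compareAndSet(oldValue, newValue)) {
                return newValue;
            }
            // CAS failed: another thread won the race, so read again.
        }
    }

    // Runs several threads that all increment the same counter.
    static int countConcurrently(int threads, final int perThread) {
        final AtomicInteger counter = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    incrementWithCas(counter);
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(countConcurrently(4, 10_000));
    }
}
```

No increment is lost even though no lock is taken: a thread whose CAS fails simply rereads the counter and tries again.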

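Advantages:

1. Low overhead: no need to enter the kernel and no thread switch.

2. No deadlock: the bus lock lasts at most the duration of one read plus one write.

3. Only writes need CAS; reads are identical to sequential code, so reads and writes do not exclude each other.

Disadvantages:

1. Programming is very complex: anything can happen between two lines of code, and many common-sense assumptions no longer hold.

2. CAS covers very few situations: a single CAS cannot make a group of several operations atomic.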

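In terms of performance, CAS and mutex/readwrite locks each have their merits:

1. In a single thread, a CAS costs roughly as much as 10 additions, a mutex lock plus unlock roughly 20 additions, and a readwrite lock somewhat more.

2. The cost of a CAS is fixed, whereas the performance of a mutex can be tuned by changing the size of the critical section.

3. If the actual modification makes up only a small part of the critical section, CAS allows more concurrency.

4. On multi-core CPUs thread scheduling is relatively expensive, so CAS is the better fit.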

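Skip lists and red-black trees perform comparably. The main advantage of a skip list shows up when the structure is modified (insertion or deletion): a red-black tree maintains balance with rotations, which touch several nodes and are hard to coordinate under concurrency. A skip list only has to locate the position and then splice in the new node together with its copies on the higher levels, while keeping the locate-and-insert step atomic. That makes it a much better fit for lock-free programming with CAS.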
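To illustrate why linked structures suit CAS, here is a hedged sketch of a sorted singly linked list whose only mutation is a single `compareAndSet` on the predecessor's `next` pointer. It supports insertion only; deletion needs extra machinery (marker nodes, as in the JDK code below) and is deliberately left out. All names (`CasSortedList`, `add`, `snapshot`, `addConcurrently`) are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicReference;

class CasSortedList {
    static final class Node {
        final int key;
        final AtomicReference<Node> next;
        Node(int key, Node next) {
            this.key = key;
            this.next = new AtomicReference<>(next);
        }
    }

    // Sentinel head; real keys are assumed to be greater than Integer.MIN_VALUE.
    private final Node head = new Node(Integer.MIN_VALUE, null);

    // Insert-only, lock-free add. Returns false if the key already exists.
    boolean add(int key) {
        for (;;) {
            Node pred = head;
            Node curr = pred.next.get();
            while (curr != null && curr.key < key) {
                pred = curr;
                curr = curr.next.get();
            }
            if (curr != null && curr.key == key) {
                return false;
            }
            Node node = new Node(key, curr);
            // Publish the node only if pred still points at curr.
            if (pred.next.compareAndSet(curr, node)) {
                return true;
            }
            // Lost a race with another insert: restart the search.
        }
    }

    List<Integer> snapshot() {
        List<Integer> out = new ArrayList<>();
        for (Node n = head.next.get(); n != null; n = n.next.get()) {
            out.add(n.key);
        }
        return out;
    }

    // Every thread tries to add keys 0..n-1; returns the number of successful adds.
    static int addConcurrently(final CasSortedList list, int threads, final int n) {
        final AtomicInteger successes = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int k = 0; k < n; k++) {
                    if (list.add(k)) {
                        successes.incrementAndGet();
                    }
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return successes.get();
    }
}
```

A rotation in a red-black tree would have to change several pointers at once, which a single CAS cannot express; here one pointer swing is the entire update.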

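The JDK provides many implementations of the Map interface, which makes it easy to work with key-value data.

When we want fast access to <Key, Value> pairs, we can use HashMap.

When we want concurrent access to <Key, Value> pairs from multiple threads, we choose ConcurrentHashMap.

TreeMap keeps the data sorted by the natural ordering of the keys (their compareTo method) or by the ordering of a supplied Comparator.

So what do we do when we need concurrent access to <Key, Value> data from multiple threads and also want the data kept in order?

Perhaps we could choose ConcurrentTreeMap. Unfortunately, the JDK does not provide such a data structure.

We could of course add a lock ourselves to build a ConcurrentTreeMap, but as concurrency grows, so does the performance cost of the lock.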
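For comparison, a do-it-yourself lock-based ordered map could be sketched as below, here with a `ReentrantReadWriteLock` guarding a plain `TreeMap`. The class name `LockedSortedMap` and its small method set are assumptions for illustration only.

```java
import java.util.TreeMap;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class LockedSortedMap<K extends Comparable<K>, V> {
    private final TreeMap<K, V> map = new TreeMap<>();
    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();

    // Writers take the exclusive lock, so all updates are serialized.
    V put(K key, V value) {
        lock.writeLock().lock();
        try {
            return map.put(key, value);
        } finally {
            lock.writeLock().unlock();
        }
    }

    // Readers share the read lock but are still blocked while a writer holds the lock.
    V get(K key) {
        lock.readLock().lock();
        try {
            return map.get(key);
        } finally {
            lock.readLock().unlock();
        }
    }

    K firstKey() {
        lock.readLock().lock();
        try {
            return map.firstKey();
        } finally {
            lock.readLock().unlock();
        }
    }

    int size() {
        lock.readLock().lock();
        try {
            return map.size();
        } finally {
            lock.readLock().unlock();
        }
    }
}
```

Every operation has to pass through the same lock, so contention on that lock grows with the number of threads, which is the overhead mentioned above.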

Don't cry... ConcurrentSkipListMap, introduced in JDK 6, may be just what we need.
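A small usage sketch: several threads write to one `ConcurrentSkipListMap` without any external locking, and iteration afterwards is in ascending key order. The helper name `fillConcurrently` and the key layout are made up for this example.

```java
import java.util.concurrent.ConcurrentSkipListMap;

class SkipListMapDemo {
    // Thread t inserts the keys t, t + threads, t + 2 * threads, ...
    static ConcurrentSkipListMap<Integer, String> fillConcurrently(final int threads, final int perThread) {
        final ConcurrentSkipListMap<Integer, String> map = new ConcurrentSkipListMap<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int offset = t;
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    int key = offset + i * threads;
                    map.put(key, "v" + key);
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return map;
    }

    public static void main(String[] args) {
        ConcurrentSkipListMap<Integer, String> map = fillConcurrently(4, 250);
        System.out.println("size=" + map.size()
                + " first=" + map.firstKey()
                + " last=" + map.lastKey());
    }
}
```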

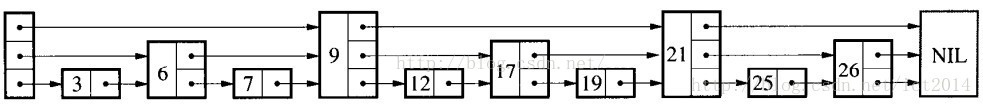

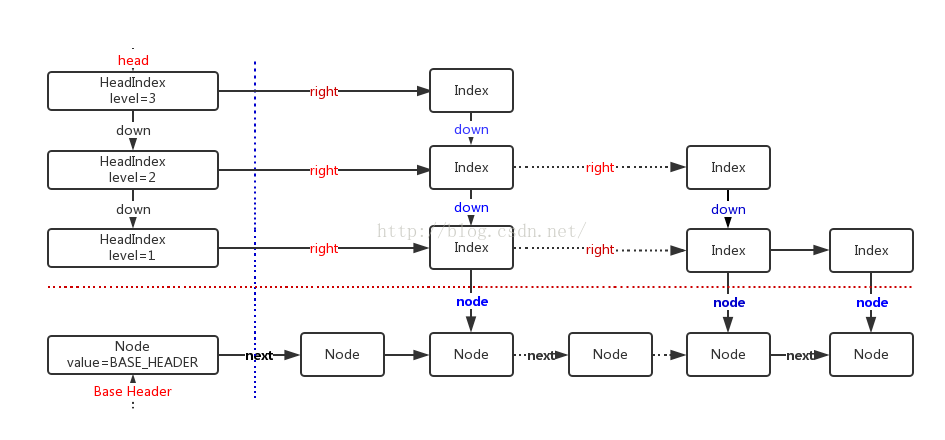

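Storage Structure

ConcurrentSkipListMap stores its data in a skip list (SkipList):

1. The data nodes on the bottom level are sorted by key in ascending order.

2. There are multiple index levels; the index nodes on each level are sorted in ascending order of the keys of their associated data nodes.

3. Each higher index level is a subset of the level below it.

4. If a key appears in the index at level i, then every index at a level <= i also contains that key.

Note: compare this with database indexes and B+ trees.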

public class ConcurrentSkipListMap<K,V> extends AbstractMap<K,V> implements ConcurrentNavigableMap<K,V>,

Cloneable,java.io.Serializable {

/** Special value used to identify base-level header*/

private static final Object BASE_HEADER = new Object(); // marks the header node of the data-node list

/** The topmost head index of the skiplist.*/

private transient volatile HeadIndex<K,V> head; // head of the highest index level

......

/** Nodes hold keys and values, and are singly linked in sorted order, possibly with some intervening marker nodes.

The list is headed by a dummy node accessible as head.node. The value field is declared only as Object because it

takes special non-V values for marker and header nodes. */

static final class Node<K,V> { // data node holding a key-value pair; data nodes form a sorted singly linked list

final K key;

volatile Object value;

volatile Node<K,V> next; // successor data node

......

}

/** Index nodes represent the levels of the skip list.

Note that even though both Nodes and Indexes have forward-pointing fields, they have different types and are handled

in different ways, that can't nicely be captured by placing field in a shared abstract class.

*/

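static class Index<K,V> { // index node

final Node<K,V> node; // data node associated with this index node

final Index<K,V> down; // index node one level down (same associated data node)

volatile Index<K,V> right; // successor index node on the same index level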

......

}

/** Nodes heading each level keep track of their level.*/

static final class HeadIndex<K,V> extends Index<K,V> { // index head

final int level; // index level

HeadIndex(Node<K,V> node, Index<K,V> down, Index<K,V> right, int level) {

super(node, down, right);

this.level = level;

}

}

......

}

Search

//Returns the value to which the specified key is mapped, or null if this map contains no mapping for the key.

public V get(Object key) {

return doGet(key);

}

private V doGet(Object okey) {

Comparable<? super K> key = comparable(okey);

// Loop needed here and elsewhere in case value field goes null just as it is about to be returned, in which case we

// lost a race with a deletion, so must retry.

// The lookup runs in a loop so that a data node deleted at just that moment is never returned; if that happens, the lookup is retried.

for (;;) {

Node<K,V> n = findNode(key); // look up the data node for key

if (n == null)

return null;

Object v = n.value;

if (v != null)

return (V)v;

}

}

/**Returns node holding key or null if no such, clearing out any deleted nodes seen along the way.

Repeatedly traverses at base-level looking for key starting at predecessor returned from findPredecessor,

processing base-level deletions as encountered. Some callers rely on this side-effect of clearing deleted nodes.

* Restarts occur, at traversal step centered on node n, if:

*

* (1) After reading n's next field, n is no longer assumed predecessor b's current successor, which means that

* we don't have a consistent 3-node snapshot and so cannot unlink any subsequent deleted nodes encountered.

*

* (2) n's value field is null, indicating n is deleted, in which case we help out an ongoing structural deletion

* before retrying. Even though there are cases where such unlinking doesn't require restart, they aren't sorted out

* here because doing so would not usually outweigh cost of restarting.

*

* (3) n is a marker or n's predecessor's value field is null, indicating (among other possibilities) that

* findPredecessor returned a deleted node. We can't unlink the node because we don't know its predecessor, so rely

* on another call to findPredecessor to notice and return some earlier predecessor, which it will do. This check is

* only strictly needed at beginning of loop, (and the b.value check isn't strictly needed at all) but is done

* each iteration to help avoid contention with other threads by callers that will fail to be able to change

* links, and so will retry anyway.

*

* The traversal loops in doPut, doRemove, and findNear all include the same three kinds of checks. And specialized

* versions appear in findFirst, and findLast and their variants. They can't easily share code because each uses the

* reads of fields held in locals occurring in the orders they were performed.

*

* @param key the key

* @return node holding key, or null if no such

*/

private Node<K,V> findNode(Comparable<? super K> key) {

for (;;) {

Node<K,V> b = findPredecessor(key); // find the predecessor data node for key

Node<K,V> n = b.next;

for (;;) {

if (n == null)

return null;

Node<K,V> f = n.next;

// 1. Two reads of b's successor disagree: retry

if (n != b.next) // inconsistent read

break;

Object v = n.value; // 2. A null value means this data node is marked as deleted: help unlink it and retry

if (v == null) { // n is deleted

n.helpDelete(b, f);

break;

}

// 3. n is a marker node or b is marked as deleted: retry

if (v == n || b.value == null) // b is deleted

break;

int c = key.compareTo(n.key);

if (c == 0) // found: return it

return n;

if (c < 0) // the given key is smaller than the current key: not present

return null;

b = n; // otherwise keep searching

n = f;

}

}

}

/**Returns a base-level node with key strictly less than given key, or the base-level header if there is no such node.

Also unlinks indexes to deleted nodes found along the way. Callers rely on this side-effect of clearing indices to deleted nodes.

* @param key the key

* @return a predecessor of key */

// Returns the data node with the greatest key strictly less than the given key; if there is no such node, returns the base-level header node.

private Node<K,V> findPredecessor(Comparable<? super K> key) {

if (key == null)

throw new NullPointerException(); // don't postpone errors

for (;;) {

Index<K,V> q = head; // start the search at the top index level

Index<K,V> r = q.right;

for (;;) {

if (r != null) {

Node<K,V> n = r.node;

K k = n.key;

if (n.value == null) { // a null value means the data node is marked as deleted: unlink its index node and retry

if (!q.unlink(r))

break; // restart

r = q.right; // reread r

continue;

}

if (key.compareTo(k) > 0) { // the given key is greater than the current key: keep moving right

q = r;

r = r.right;

continue;

}

}

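// Execution reaches this point in two cases:

// 1. the current index level has been exhausted

// 2. the given key is less than or equal to the current key

Index<K,V> d = q.down; // look in the next lower index level

if (d != null) { // a lower index level exists: continue the search there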

q = d;

r = d.right;

} else

return q.node; // already on the lowest index level: the data node of the current index node is the answer

}

}

}
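findPredecessor itself is private, but the public navigation methods of ConcurrentSkipListMap answer closely related questions, such as "the greatest key strictly less than k". The sketch below only exercises that public API (`lowerKey`, `floorKey`, `ceilingKey`, `higherKey`, `headMap`); it makes no claim about which internal helper each method uses. The class name `NavigationDemo` and the sample keys are made up.

```java
import java.util.concurrent.ConcurrentSkipListMap;

class NavigationDemo {
    static ConcurrentSkipListMap<Integer, String> sample() {
        ConcurrentSkipListMap<Integer, String> map = new ConcurrentSkipListMap<>();
        int[] keys = {3, 6, 7, 9, 12, 17, 19, 21};
        for (int k : keys) {
            map.put(k, "v" + k);
        }
        return map;
    }

    public static void main(String[] args) {
        ConcurrentSkipListMap<Integer, String> map = sample();
        System.out.println("lowerKey(19)   = " + map.lowerKey(19));   // greatest key < 19
        System.out.println("floorKey(19)   = " + map.floorKey(19));   // greatest key <= 19
        System.out.println("ceilingKey(20) = " + map.ceilingKey(20)); // least key >= 20
        System.out.println("higherKey(19)  = " + map.higherKey(19));  // least key > 19
        System.out.println("lowerKey(3)    = " + map.lowerKey(3));    // no smaller key exists
        System.out.println("headMap(9)     = " + map.headMap(9));     // keys strictly below 9
    }
}
```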

Insertion

/**

* Associates the specified value with the specified key in this map.

* If the map previously contained a mapping for the key, the old value is replaced.

*

* @param key key with which the specified value is to be associated

* @param value value to be associated with the specified key

* @return the previous value associated with the specified key, or

* <tt>null</tt> if there was no mapping for the key

* @throws ClassCastException if the specified key cannot be compared

* with the keys currently in the map

* @throws NullPointerException if the specified key or value is null

*/

public V put(K key, V value) {

if (value == null)

throw new NullPointerException();

return doPut(key, value, false);

}
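From the caller's side, the contract documented above can be checked with a few calls: `put` returns the previous value (or null for a new key) and rejects null values. `putIfAbsent` is shown as well, since it is presumably the public entry point for the `onlyIfAbsent = true` case of doPut; treat that mapping as an assumption. The class and method names are made up for this sketch.

```java
import java.util.concurrent.ConcurrentSkipListMap;

class PutSemanticsDemo {
    // Returns true if the map refuses a null value with a NullPointerException.
    static boolean rejectsNullValue(ConcurrentSkipListMap<String, Integer> map) {
        try {
            map.put("k", null);
            return false;
        } catch (NullPointerException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        ConcurrentSkipListMap<String, Integer> map = new ConcurrentSkipListMap<>();
        System.out.println(map.put("a", 1));          // new key: no previous value
        System.out.println(map.put("a", 2));          // existing key: previous value is returned
        System.out.println(map.putIfAbsent("a", 3));  // existing key: value is left unchanged
        System.out.println(map.get("a"));
        System.out.println(rejectsNullValue(map));
    }
}
```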

/**

* Main insertion method. Adds element if not present, or replaces value if present and onlyIfAbsent is false.

* @param kkey the key

* @param value the value that must be associated with key

* @param onlyIfAbsent if should not insert if already present

* @return the old value, or null if newly inserted

*/

private V doPut(K kkey, V value, boolean onlyIfAbsent) {

Comparable<? super K> key = comparable(kkey);

for (;;) {

Node<K,V> b = findPredecessor(key); // find the predecessor data node

Node<K,V> n = b.next;

for (;;) {

if (n != null) {

Node<K,V> f = n.next;

// 1. Two reads of b's successor disagree: retry

if (n != b.next) // inconsistent read

break;

Object v = n.value;

// 2. A null value means this data node is marked as deleted: help unlink it and retry

if (v == null) { // n is deleted

n.helpDelete(b, f);

break;

}

// 3. n is a marker node or b is marked as deleted: retry

if (v == n || b.value == null) // b is deleted

break;

int c = key.compareTo(n.key);

if (c > 0) { // the given key is greater than the current key: keep looking for the insertion point

b = n;

n = f;

continue;

}

if (c == 0) { // key already present

if (onlyIfAbsent || n.casValue(v, value))

return (V)v;

else

break; // restart if lost race to replace value

}

// else c < 0; fall through

}

// key not found: create a new data node

Node<K,V> z = new Node<K,V>(kkey, value, n);

if (!b.casNext(n, z))

break; // restart if lost race to append to b

int level = randomLevel(); // random index level

if (level > 0)

insertIndex(z, level);

return null;

}

}

}

/**

* Creates and adds index nodes for the given node.

* @param z the node

* @param level the level of the index

*/

private void insertIndex(Node<K,V> z, int level) {

HeadIndex<K,V> h = head;

int max = h.level;

if (level <= max) { // the index level already exists: add index nodes for this node on that level and on every level below it

Index<K,V> idx = null;

for (int i = 1; i <= level; ++i) // build a chain of index nodes for levels 1..level linked through down; the final idx is the index node of the highest level

idx = new Index<K,V>(z, idx, null);

addIndex(idx, h, level); // Adds given index nodes from given level down to 1.

} else { // Add a new level

/* To reduce interference by other threads checking for empty levels in tryReduceLevel, new levels are added

* with initialized right pointers. Which in turn requires keeping levels in an array to access them while

* creating new head index nodes from the opposite direction. */

level = max + 1;

Index<K,V>[] idxs = (Index<K,V>[])new Index[level+1];

Index<K,V> idx = null;

for (int i = 1; i <= level; ++i)

idxs[i] = idx = new Index<K,V>(z, idx, null);

HeadIndex<K,V> oldh;

int k;

for (;;) {

oldh = head;

int oldLevel = oldh.level; // update head

if (level <= oldLevel) { // lost race to add level

k = level;

break;

}

HeadIndex<K,V> newh = oldh;

Node<K,V> oldbase = oldh.node;

for (int j = oldLevel+1; j <= level; ++j)

newh = new HeadIndex<K,V>(oldbase, newh, idxs[j], j);

if (casHead(oldh, newh)) {

k = oldLevel;

break;

}

}

addIndex(idxs[k], oldh, k);

}

}

References:

JDK 1.7 source code

http://blog.csdn.NET/ict2014/article/details/17394259

http://blog.sina.com.cn/s/blog_72995dcc01017w1t.html

https://yq.aliyun.com/articles/38381

http://www.2cto.com/kf/201212/175026.html

http://ifeve.com/cas-skiplist/