Deep Learning (Andrew Ng), Course 4, Week 2 Programming Assignment

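Course 4 of Deep Learning is Convolutional Neural Networks. It covers four weeks:

- Week 1: Convolutional neural networks (what convolution means, what each type of layer does, how to compute the size (shape) of the data at each layer)

- Week 2: Deep convolutional models: case studies (classic networks such as LeNet-5 and ResNet50, transfer learning, data augmentation)

- Week 3: Object detection (object detection, evaluation metrics, the YOLO algorithm)

- Week 4: Special applications: face recognition and neural style transfer (I haven't watched this week yet)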

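The link above has the assignments and their solutions. In this post I mainly summarize the Week 2 assignment and take notes on what I learned.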

The Keras framework

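First, here is the Chinese-language Keras documentation (Keras中文文件). When something is unclear, checking the official documentation is still the most effective approach.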

Introduction to Keras

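Many deep learning frameworks already existed, such as TensorFlow, Caffe and Theano. Keras is a high-level API that runs on top of backends such as TensorFlow and Theano, and it was created to further simplify building neural networks for beginners. If you understand deep learning conceptually but are not yet familiar with TensorFlow concepts such as tensors, you can use Keras to build your own network quickly. This also fits Ng's idea of building your own network quickly.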

Using layers

Layers in Keras are easy to use: just import them from keras.layers. Take the 2D convolutional layer as an example:

```python
from keras import layers
from keras.layers import Conv2D

# The output is X; the input is the X inside the last pair of parentheses.
# This applies a 2D convolutional layer with 8 filters of size 3*3.
X = Conv2D(8, (3, 3))(X)
```

The full signature of the layer is:

```python
keras.layers.convolutional.Conv2D(filters, kernel_size, strides=(1, 1), padding='valid', data_format=None, dilation_rate=(1, 1), activation=None, use_bias=True, kernel_initializer='glorot_uniform', bias_initializer='zeros', kernel_regularizer=None, bias_regularizer=None, activity_regularizer=None, kernel_constraint=None, bias_constraint=None)
```
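The sketch below shows what a Conv2D layer computes for one filter. It is plain Python with no Keras, and the name `conv2d_single_channel` is hypothetical. The sketch slides one filter over a single-channel input with `padding='valid'`. A real Conv2D layer does this for every input channel, sums the results, adds a bias, and repeats the whole process once per filter. Like Keras, the sketch computes cross-correlation, which means the kernel is not flipped.

```python
def conv2d_single_channel(image, kernel, stride=1, bias=0.0):
    """Slide one f x f kernel over an H x W image ('valid' padding, no flip)."""
    n_h, n_w = len(image), len(image[0])
    f = len(kernel)
    # 'valid': the window must fit entirely inside the image
    out_h = (n_h - f) // stride + 1
    out_w = (n_w - f) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            r0, c0 = i * stride, j * stride  # top-left corner of the window
            s = bias
            for di in range(f):
                for dj in range(f):
                    s += image[r0 + di][c0 + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out


image = [[1, 2, 3, 0],
         [0, 1, 2, 3],
         [3, 0, 1, 2],
         [2, 3, 0, 1]]
kernel = [[1, 0, -1],   # a vertical-edge detector
          [1, 0, -1],
          [1, 0, -1]]
result = conv2d_single_channel(image, kernel)
print(result)  # [[-2.0, -2.0], [2.0, -2.0]]
```

A 4x4 input and a 3x3 kernel give a 2x2 output. With `stride=2` only one window fits, so the output shrinks to 1x1.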

Arguments

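- filters: the number of convolution kernels (filters), i.e. the number of output channels

- kernel_size: an integer or a list/tuple of two integers giving the height and width of the convolution window. A single integer uses the same value for both spatial dimensions.

- strides: an integer or a list/tuple of two integers giving the stride of the convolution. A single integer uses the same stride for both spatial dimensions. Any strides value other than 1 is incompatible with any dilation_rate value other than 1.

- padding: the zero-padding strategy, either "valid" or "same". "valid" performs only valid convolutions: the borders are not padded, so the output shrinks. "same" pads the borders so that the results at the border positions are kept. With strides of 1 the output then has the same spatial shape as the input (in general, the input length divided by the stride, rounded up).

- activation: the activation function, given either as the name of a predefined activation (see the activations documentation) or as an element-wise function. If it is not specified, no activation is applied (i.e. the linear activation a(x)=x).

- dilation_rate: an integer or a list/tuple of two integers giving the dilation rate of a dilated convolution. Any dilation_rate value other than 1 is incompatible with any strides value other than 1.

- data_format: a string, either "channels_first" or "channels_last", giving the position of the channel axis in the image. It replaces image_dim_ordering from Keras 1.x: "channels_last" corresponds to the old "tf", and "channels_first" corresponds to the old "th". For a 128x128 RGB image, "channels_first" organizes the data as (3,128,128) and "channels_last" organizes it as (128,128,3). The default is the value set in ~/.keras/keras.json, or "channels_last" if that value has never been set.

- use_bias: boolean, whether the layer uses a bias vector

- kernel_initializer: initializer for the kernel weights, either the name of a predefined initializer or an initializer object (see initializers)

- bias_initializer: initializer for the bias vector, either the name of a predefined initializer or an initializer object (see initializers)

- kernel_regularizer: regularizer applied to the kernel weights, a Regularizer object

- bias_regularizer: regularizer applied to the bias vector, a Regularizer object

- activity_regularizer: regularizer applied to the layer's output, a Regularizer object

- kernel_constraint: constraint applied to the kernel weights, a Constraint object

- bias_constraint: constraint applied to the bias vector, a Constraint object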
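The parameters above combine to determine the output shape. The pure-Python sketch below computes that shape. It follows the rule Keras applies, as far as I know:

- The effective kernel size is k + (k - 1)(d - 1).
- "same" padding gives ceil(n / s).
- "valid" padding gives ceil((n - k_eff + 1) / s).

`conv_output_length` and `conv2d_output_shape` are hypothetical helpers written for this note, not part of the Keras API.

```python
def _pair(v):
    """Accept an int or a 2-element list/tuple, like the Keras arguments."""
    return (v, v) if isinstance(v, int) else tuple(v)


def conv_output_length(n, kernel, padding, stride=1, dilation=1):
    """Output length along one spatial axis."""
    # Dilation inserts (dilation - 1) gaps between neighbouring kernel taps
    k_eff = kernel + (kernel - 1) * (dilation - 1)
    if padding == "same":
        length = n
    elif padding == "valid":
        length = n - k_eff + 1
    else:
        raise ValueError(f"unsupported padding: {padding!r}")
    return (length + stride - 1) // stride  # ceiling division by the stride


def conv2d_output_shape(input_shape, filters, kernel_size, strides=1,
                        padding="valid", dilation_rate=1,
                        data_format="channels_last"):
    """input_shape excludes the batch axis, e.g. (128, 128, 3)."""
    kernel_size = _pair(kernel_size)
    strides = _pair(strides)
    dilation_rate = _pair(dilation_rate)
    if strides != (1, 1) and dilation_rate != (1, 1):
        raise ValueError("strides != 1 is incompatible with dilation_rate != 1")
    if data_format == "channels_last":
        h, w, _ = input_shape
    else:  # "channels_first"
        _, h, w = input_shape
    out_h = conv_output_length(h, kernel_size[0], padding, strides[0], dilation_rate[0])
    out_w = conv_output_length(w, kernel_size[1], padding, strides[1], dilation_rate[1])
    if data_format == "channels_last":
        return (out_h, out_w, filters)
    return (filters, out_h, out_w)


print(conv2d_output_shape((128, 128, 3), 8, 3))                                   # (126, 126, 8)
print(conv2d_output_shape((128, 128, 3), 8, (3, 3), strides=2, padding="same"))   # (64, 64, 8)
print(conv2d_output_shape((3, 128, 128), 8, 3, padding="same",
                          data_format="channels_first"))                          # (8, 128, 128)
```

The channel axis always becomes `filters`, whatever the input channel count. Only its position depends on `data_format`.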

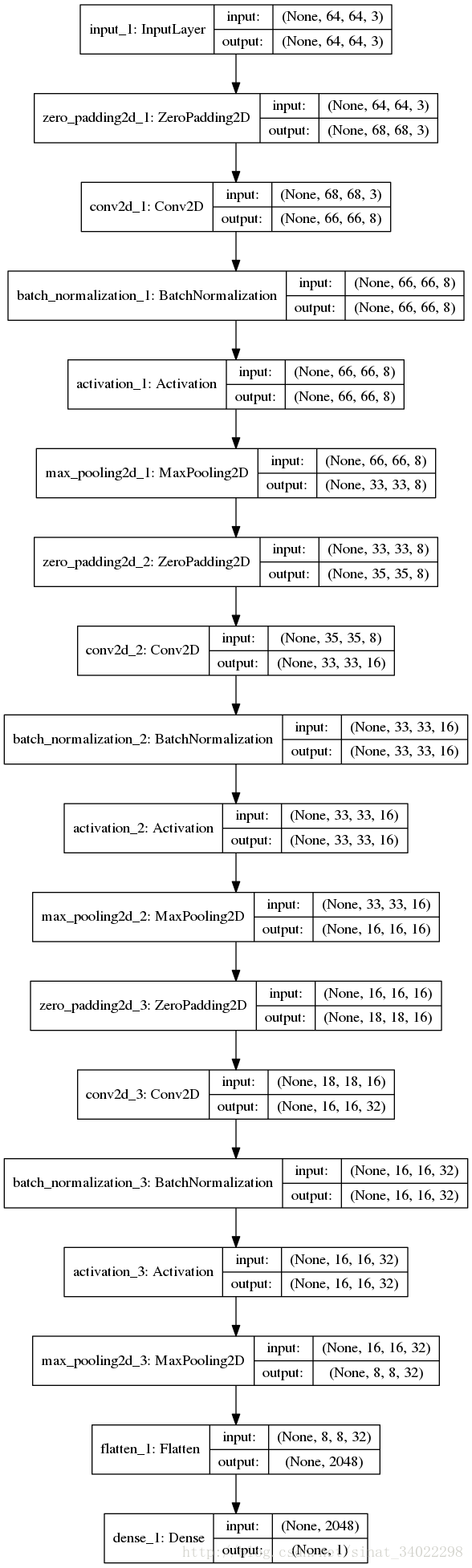

Visualization

The keras.utils.vis_utils module provides functions for plotting Keras models (using graphviz).

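First install two dependencies, in this order:

- graphviz

```shell
sudo apt-get install graphviz
```

If apt reports dependencies that cannot be installed, fix the dependencies first and then install graphviz again:

```shell
sudo apt-get -f install
sudo apt-get install graphviz
```

- pydot-ng

```shell
sudo pip3 install pydot-ng
```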
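Before plotting, the small script below checks that both dependencies are visible from Python. `check_plot_model_deps` is a hypothetical helper, not a Keras function. The script looks for the graphviz `dot` executable on the PATH and for the `pydot_ng` and `pydot` modules. As far as I know, Keras versions from this period try `pydot_ng` and fall back to `pydot`, while later versions use `pydot` directly. That is why the script reports both.

```python
import importlib.util
import shutil


def check_plot_model_deps():
    """Report which of plot_model's external dependencies can be found."""
    return {
        # graphviz provides the `dot` executable that renders the diagram
        "graphviz (dot)": shutil.which("dot") is not None,
        # the pip package pydot-ng installs the module `pydot_ng`
        "pydot_ng": importlib.util.find_spec("pydot_ng") is not None,
        # some Keras versions import plain pydot instead
        "pydot": importlib.util.find_spec("pydot") is not None,
    }


status = check_plot_model_deps()
for name, ok in status.items():
    print(f"{name}: {'found' if ok else 'MISSING'}")
```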

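Once both are installed, you can plot your own network model from your program. Here the happyModel from the first assignment is used as an example: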

```python
### keras visualization
from keras.utils import plot_model

plot_model(happyModel, to_file='happymodel.png', show_shapes=True)
```

ResNet50

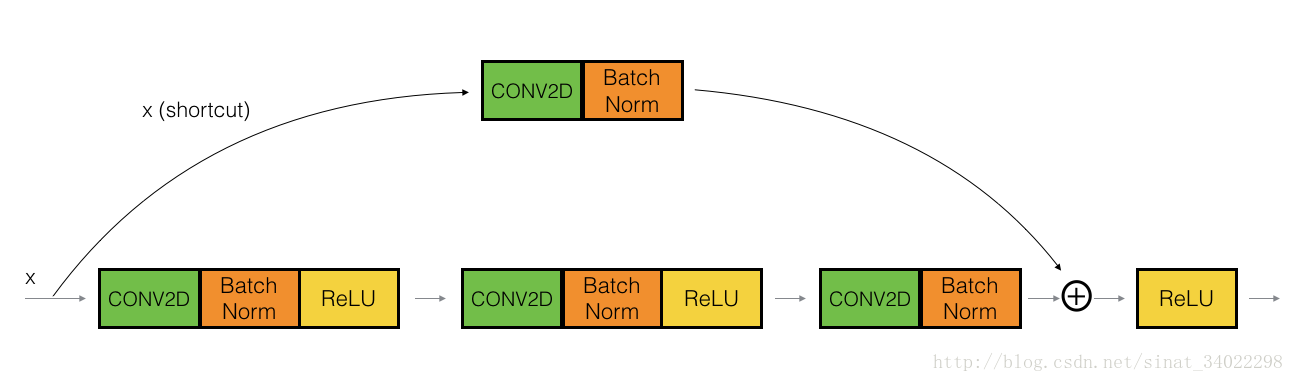

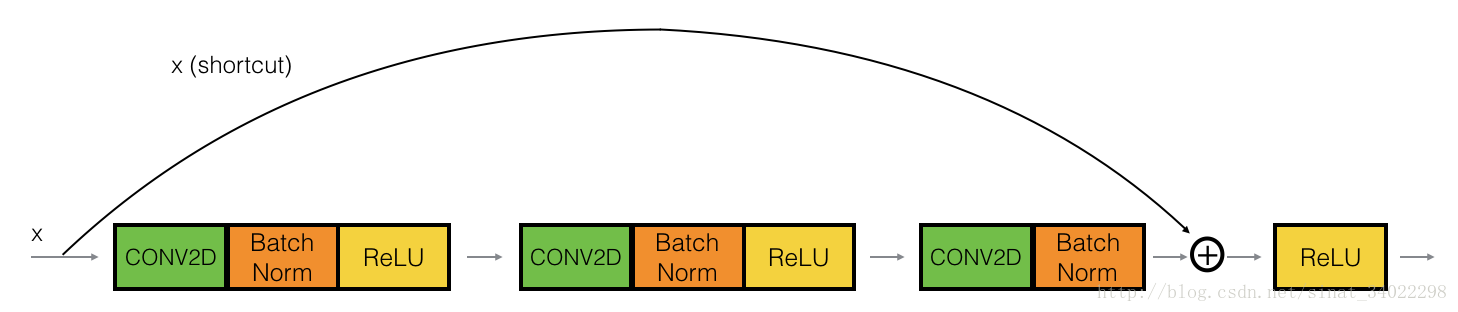

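There are many explanations of how residual networks work online. My intuition is that a residual network passes data from earlier layers directly to later layers. This lets the network "bypass" the layers in between, so a very deep network can behave like a simpler one. (Just my own humble take; I still need to study it further.)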
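The course lectures make this intuition precise. A residual block computes a[l+2] = g(z[l+2] + a[l]).

- Suppose the block's weights and biases shrink to zero, for example through weight decay. Then z[l+2] = 0.
- a[l] came out of an earlier ReLU, so it is non-negative, and a[l+2] = g(a[l]) = a[l].

The block can therefore fall back to the identity function easily. The tiny pure-Python sketch below illustrates this argument. It makes two assumptions:

- It uses fully connected layers, whereas real ResNet blocks use convolutions and batch normalization.
- All function names are hypothetical.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def linear(W, b, a):
    """z = W a + b, with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, a)) + b_i for row, b_i in zip(W, b)]


def plain_block(a_l, W1, b1, W2, b2):
    """Two layers without a shortcut: a[l+2] = g(z[l+2])."""
    a_l1 = relu(linear(W1, b1, a_l))
    return relu(linear(W2, b2, a_l1))


def residual_block(a_l, W1, b1, W2, b2):
    """The same two layers plus a skip connection: a[l+2] = g(z[l+2] + a[l])."""
    a_l1 = relu(linear(W1, b1, a_l))
    z_l2 = linear(W2, b2, a_l1)
    return relu([z + a for z, a in zip(z_l2, a_l)])  # add a[l] before the last ReLU


# Assume the block's weights and biases have all shrunk to zero
n = 3
W_zero = [[0.0] * n for _ in range(n)]
b_zero = [0.0] * n
a_l = [0.5, 2.0, 0.0]  # output of an earlier ReLU, so every entry is >= 0

print(plain_block(a_l, W_zero, b_zero, W_zero, b_zero))     # [0.0, 0.0, 0.0]
print(residual_block(a_l, W_zero, b_zero, W_zero, b_zero))  # [0.5, 2.0, 0.0]
```

The plain block loses the signal, while the residual block passes a[l] through unchanged. This is why stacking more residual blocks tends not to hurt training.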

Residual network structure diagram:

Implementing ResNet50 in Keras

Below are the main functions. They are all written in Keras: write out the layers you need, then connect them together.
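The two block types differ only in their shortcut path. The key constraint is that `layers.add` needs both inputs to have exactly the same shape. The pure-Python sketch below tracks shapes only, without the batch axis and without computing anything. Its helper names are hypothetical. It shows two things:

- The identity block requires F3 to equal the input's channel count.
- The convolutional block puts a 1x1 convolution with stride s on the shortcut, so both paths change size together.

The starting shape (15, 15, 64) is what stage 1 of the ResNet50 code below produces for a 64x64x3 input.

```python
def identity_block_shape(in_shape, filters):
    """Shape after identity_block; in_shape = (n_H_prev, n_W_prev, n_C_prev)."""
    n_h, n_w, _ = in_shape
    _, _, F3 = filters
    # 1x1 'valid', fxf 'same', 1x1 'valid', all stride 1: height and width
    # never change, and the channels go n_C_prev -> F1 -> F2 -> F3
    main = (n_h, n_w, F3)
    # The shortcut is the unchanged input, so the add needs F3 == n_C_prev
    if main != tuple(in_shape):
        raise ValueError(f"cannot add {main} and {in_shape}: F3 must equal n_C_prev")
    return main


def convolutional_block_shape(in_shape, filters, s=2):
    """Shape after convolutional_block with stride s."""
    n_h, n_w, _ = in_shape
    _, _, F3 = filters
    # The first 1x1 'valid' conv with stride s sets the spatial size, and the
    # shortcut's 1x1 conv (F3 filters, stride s) produces the same shape
    return ((n_h - 1) // s + 1, (n_w - 1) // s + 1, F3)


x = (15, 15, 64)                                        # after stage 1 (conv1 + max pooling)
x = convolutional_block_shape(x, [64, 64, 256], s=1)    # stage 2a -> (15, 15, 256)
x = identity_block_shape(x, [64, 64, 256])              # stage 2b -> (15, 15, 256)
x = convolutional_block_shape(x, [128, 128, 512], s=2)  # stage 3a -> (8, 8, 512)
print(x)  # (8, 8, 512)
```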

Identity block

```python
################# block identity #################
from keras import layers
from keras.layers import Conv2D, BatchNormalization, Activation
from keras.initializers import glorot_uniform

def identity_block(X, f, filters, stage, block):
    """
    Implementation of the identity block as defined in Figure 4

    Arguments:
    X -- input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    f -- integer, specifying the shape of the middle CONV's window for the main path
    filters -- python list of integers, defining the number of filters in the CONV layers of the main path
    stage -- integer, used to name the layers, depending on their position in the network
    block -- string/character, used to name the layers, depending on their position in the network

    Returns:
    X -- output of the identity block, tensor of shape (n_H, n_W, n_C)
    """
    # defining name basis
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    # retrieve filters
    F1, F2, F3 = filters

    # Save the input value. You'll need this later to add back to the main path.
    X_shortcut = X

    # First component of main path
    X = Conv2D(filters=F1, kernel_size=(1, 1), strides=(1, 1), padding='valid', name=conv_name_base + '2a',
               kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + '2a')(X)
    X = Activation('relu')(X)

    # Second component of main path
    X = Conv2D(filters=F2, kernel_size=(f, f), strides=(1, 1), padding='same', name=conv_name_base + '2b',
               kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + '2b')(X)
    X = Activation('relu')(X)

    # Third component of main path (no ReLU here: it is applied after the addition)
    X = Conv2D(filters=F3, kernel_size=(1, 1), strides=(1, 1), padding='valid', name=conv_name_base + '2c',
               kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + '2c')(X)

    # Final step: Add shortcut value to main path, and pass it through a RELU activation
    # (the shapes must match, so F3 has to equal n_C_prev)
    X = layers.add([X, X_shortcut])
    X = Activation('relu')(X)

    return X
```

Convolutional block

```python
################# block convolutional #################
from keras import layers
from keras.layers import Conv2D, BatchNormalization, Activation
from keras.initializers import glorot_uniform

# GRADED FUNCTION: convolutional_block
def convolutional_block(X, f, filters, stage, block, s=2):
    """
    Implementation of the convolutional block as defined in Figure 4

    Arguments:
    X -- input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    f -- integer, specifying the shape of the middle CONV's window for the main path
    filters -- python list of integers, defining the number of filters in the CONV layers of the main path
    stage -- integer, used to name the layers, depending on their position in the network
    block -- string/character, used to name the layers, depending on their position in the network
    s -- Integer, specifying the stride to be used

    Returns:
    X -- output of the convolutional block, tensor of shape (n_H, n_W, n_C)
    """
    # defining name basis
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    # Retrieve Filters
    F1, F2, F3 = filters

    # Save the input value
    X_shortcut = X

    ##### MAIN PATH #####
    # First component of main path (the stride s shrinks height and width here)
    X = Conv2D(F1, (1, 1), strides=(s, s), name=conv_name_base + '2a', padding='valid',
               kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + '2a')(X)
    X = Activation('relu')(X)

    ### START CODE HERE ###
    # Second component of main path (≈3 lines)
    X = Conv2D(F2, (f, f), strides=(1, 1), name=conv_name_base + '2b', padding='same',
               kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + '2b')(X)
    X = Activation('relu')(X)

    # Third component of main path (≈2 lines)
    X = Conv2D(F3, (1, 1), strides=(1, 1), name=conv_name_base + '2c', padding='valid',
               kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + '2c')(X)

    ##### SHORTCUT PATH #### (≈2 lines)
    # 1x1 conv with F3 filters and stride s, so the shortcut matches the main path's shape
    X_shortcut = Conv2D(F3, (1, 1), strides=(s, s), name=conv_name_base + '1', padding='valid',
                        kernel_initializer=glorot_uniform(seed=0))(X_shortcut)
    X_shortcut = BatchNormalization(axis=3, name=bn_name_base + '1')(X_shortcut)

    # Final step: Add shortcut value to main path, and pass it through a RELU activation (≈2 lines)
    X = layers.add([X, X_shortcut])
    X = Activation('relu')(X)
    ### END CODE HERE ###

    return X
```

ResNet50 is built from identity blocks and convolutional blocks:

```python
################# ResNet50 #################
from keras.layers import (Input, ZeroPadding2D, Conv2D, BatchNormalization, Activation,
                          MaxPooling2D, AveragePooling2D, Flatten, Dense)
from keras.models import Model
from keras.initializers import glorot_uniform

# ResNet50 (uses identity_block and convolutional_block defined above)
def ResNet50(input_shape=(64, 64, 3), classes=6):
    """
    Implementation of the popular ResNet50 with the following architecture:
    CONV2D -> BATCHNORM -> RELU -> MAXPOOL -> CONVBLOCK -> IDBLOCK*2 -> CONVBLOCK -> IDBLOCK*3
    -> CONVBLOCK -> IDBLOCK*5 -> CONVBLOCK -> IDBLOCK*2 -> AVGPOOL -> TOPLAYER

    Arguments:
    input_shape -- shape of the images of the dataset
    classes -- integer, number of classes

    Returns:
    model -- a Model() instance in Keras
    """
    # Define the input as a tensor with shape input_shape
    X_input = Input(input_shape)

    # Zero-padding
    X = ZeroPadding2D((3, 3))(X_input)

    # Stage 1
    X = Conv2D(64, (7, 7), strides=(2, 2), name='conv1', kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name='bn_conv1')(X)
    X = Activation('relu')(X)
    X = MaxPooling2D((3, 3), strides=(2, 2))(X)

    # Stage 2
    X = convolutional_block(X, f=3, filters=[64, 64, 256], stage=2, block='a', s=1)
    X = identity_block(X, 3, [64, 64, 256], stage=2, block='b')
    X = identity_block(X, 3, [64, 64, 256], stage=2, block='c')

    # Stage 3
    X = convolutional_block(X, f=3, filters=[128, 128, 512], stage=3, block='a', s=2)
    X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block='b')
    X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block='c')
    X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block='d')

    # Stage 4
    X = convolutional_block(X, f=3, filters=[256, 256, 1024], stage=4, block='a', s=2)
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block='b')
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block='c')
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block='d')
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block='e')
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block='f')

    # Stage 5
    # The convolutional block uses three sets of filters of size [512, 512, 2048], "f" is 3, "s" is 2 and the block is "a".
    # The 2 identity blocks also use [512, 512, 2048], "f" is 3 and the blocks are "b" and "c".
    # (The assignment text says [256, 256, 2048] for the identity blocks; the standard ResNet-50 uses 512.
    #  Both versions run, because only F3 has to match the 2048 input channels.)
    X = convolutional_block(X, f=3, filters=[512, 512, 2048], stage=5, block='a', s=2)
    X = identity_block(X, f=3, filters=[512, 512, 2048], stage=5, block='b')
    X = identity_block(X, f=3, filters=[512, 512, 2048], stage=5, block='c')

    # Avgpool (for a 64x64 input the feature map here is 2x2x2048, so this leaves 1x1x2048)
    X = AveragePooling2D(pool_size=(2, 2))(X)

    # output layer
    X = Flatten()(X)
    X = Dense(classes, activation='softmax', name='fc' + str(classes),
              kernel_initializer=glorot_uniform(seed=0))(X)

    # create model
    model = Model(inputs=X_input, outputs=X, name='ResNet50')

    return model
```
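The pure-Python sketch below checks the spatial sizes end to end and shows where the "50" in ResNet50 comes from. It follows the layer settings of the ResNet50 function above for a square input, and `resnet50_spatial_sizes` is a hypothetical helper that tracks shapes only.

With 64x64 images, stage 5 outputs a 2x2x2048 map. The 2x2 average pooling reduces that to 1x1x2048, so Flatten hands 2048 features to the Dense layer.

The count covers only the layers on the main path: 1 + 3 × (3 + 4 + 6 + 3) + 1 = 50 weighted layers. By the usual convention, the 1x1 shortcut convolutions of the convolutional blocks are not counted.

```python
def resnet50_spatial_sizes(n=64):
    """Height/width after each stage of the Keras ResNet50 above (square input)."""
    n = n + 2 * 3              # ZeroPadding2D((3, 3))
    n = (n - 7) // 2 + 1       # conv1: 7x7, stride 2, 'valid'
    n = (n - 3) // 2 + 1       # MaxPooling2D((3, 3), strides=(2, 2)), 'valid'
    sizes = {"stage1": n}
    # Only each stage's convolutional block changes the size (1x1 conv, stride s)
    for stage, s in (("stage2", 1), ("stage3", 2), ("stage4", 2), ("stage5", 2)):
        n = (n - 1) // s + 1
        sizes[stage] = n
    n = (n - 2) // 2 + 1       # AveragePooling2D((2, 2)); stride defaults to the pool size
    sizes["avgpool"] = n
    return sizes


# conv1 + 3 convolutions per block on the main path + the final Dense layer
blocks_per_stage = {"stage2": 3, "stage3": 4, "stage4": 6, "stage5": 3}
weighted_layers = 1 + 3 * sum(blocks_per_stage.values()) + 1

print(resnet50_spatial_sizes(64))
# {'stage1': 15, 'stage2': 15, 'stage3': 8, 'stage4': 4, 'stage5': 2, 'avgpool': 1}
print(weighted_layers)  # 50
```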