MapReduce和Yarn的理解

MapReduce設計理念:移動計算,而不移動資料

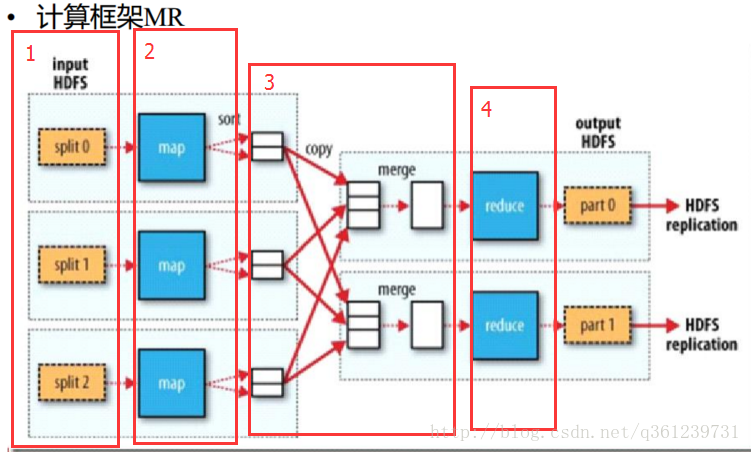

計算框架MR說明:

- 分為4個步驟,按順序執行:

- split(左淺黃色框):將單個的block進行切割,得到資料片段。

- map Task(左藍色框):自己寫的map程式,一個map程式就叫一個map任務,有多少個碎片,就有多少個map任務(Java執行緒),輸入的資料就是鍵值對資料,輸出的資料也是鍵值對。

- shuffle(洗牌,白色框):將map輸出的資料進行分組、排序、合併。

- reduce Task(右藍色框):在整個MapReduce執行過程中,預設只有一個Reduce Task(可以自己進行設定),自己寫程式去定製計算內容,最後得到結果。

執行過程:

一個檔案被切成1024個碎片段,對應著1024個Mapper Task,這些MapperTask在有碎片段的結點(DataNode)去執行,每個DataNode上都有個NodeManager來執行MapReduce程式,NodeManager有一個與之對應的AppMaster,由它負責請ResourceManager中去請求資源,這個資源被稱為Container,AppMaster就會去執行MapperTask,通過Executer物件來執行,在執行過程中,會監控Mapper Taks的執行狀態和執行進度,並想NodeManager彙報,然後NodeManager向ResourceManager彙報。Executer在呼叫MapperTask時,首先初始化這個類(通過反射),呼叫(只調用一次)setup()方法來進行初始化,之後迴圈呼叫map方法。因為有1024個MapperTask,那麼Mapper程式會被初始化1024次。

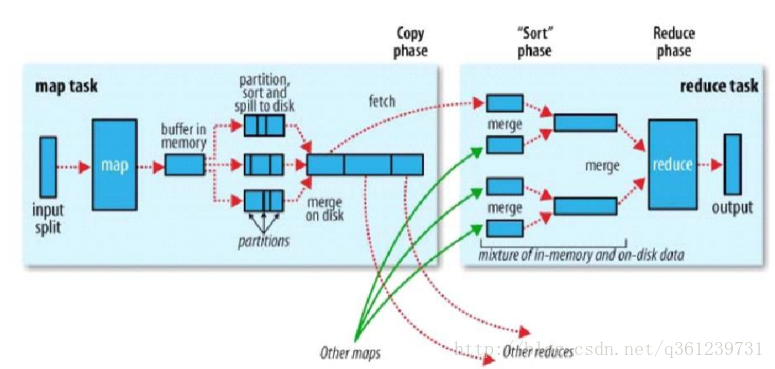

MapReduce執行過程:

說明:

- spill to disk:map輸出的資料放到記憶體中,溢位時,執行spill to disk

- partition:(預設由計算框架通過取模(key的hash值)演算法)將map輸出的key-value資料進行分割槽,並得到分割槽號。目的是將需要執行的資料分配到對應的reduce Task中。

- sort:將map task輸出的資料,按key進行排序(預設為字典排序)。

- fetch:按照分割槽號來抓取資料,分配到對應的reduce task。

- sort phase:同上面的 3

- group:將資料按鍵值是否相等,分為一組。

- 將分組傳給reduce進行計算

- 還有一個combiner過程,在下圖講解

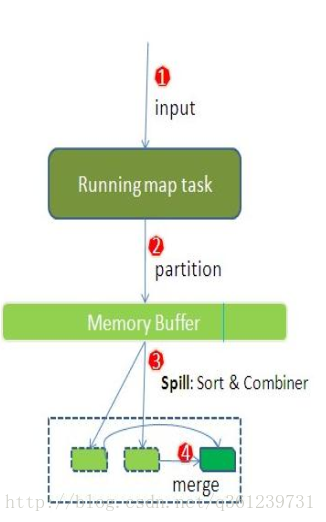

Hadoop計算框架shuffle過程詳解

- 每個map task都有一個記憶體緩衝區(預設是100MB),儲存著map的輸出結果

- 當緩衝區快滿的時候需要將緩衝區的資料以一個臨時檔案的方式存放到磁碟(Spill)

- 溢寫是由單獨執行緒來完成,不影響往緩衝區寫map結果的執行緒(spill.percent,預設是0.8)

- 當溢寫執行緒啟動後,需要對這80MB空間內的key做排序(Sort)

Combiner講解

- 假如client設定過Combiner,那麼現在就是使用Combiner的時候了。 將有相同key的key/value對的value加起來,減少溢寫到磁碟的資料量。(reduce1,word1,[8])。

- 當整個map task結束後再對磁碟中這個map task產生的所有臨時檔案做合併(Merge),對於“word1”就是像這樣的:{“word1”, [5, 8, 2, …]},假如有Combiner,{word1 [15]},最終產生一個檔案。

- reduce 從tasktracker copy資料

- copy過來的資料會先放入記憶體緩衝區中,這裡的緩衝區大小要比map端的更為靈活,它基於JVM的heap size設定

- merge有三種形式:1)記憶體到記憶體 2)記憶體到磁碟 3)磁碟到磁碟。 merge從不同tasktracker上拿到的資料,{word1 [15,17,2]}

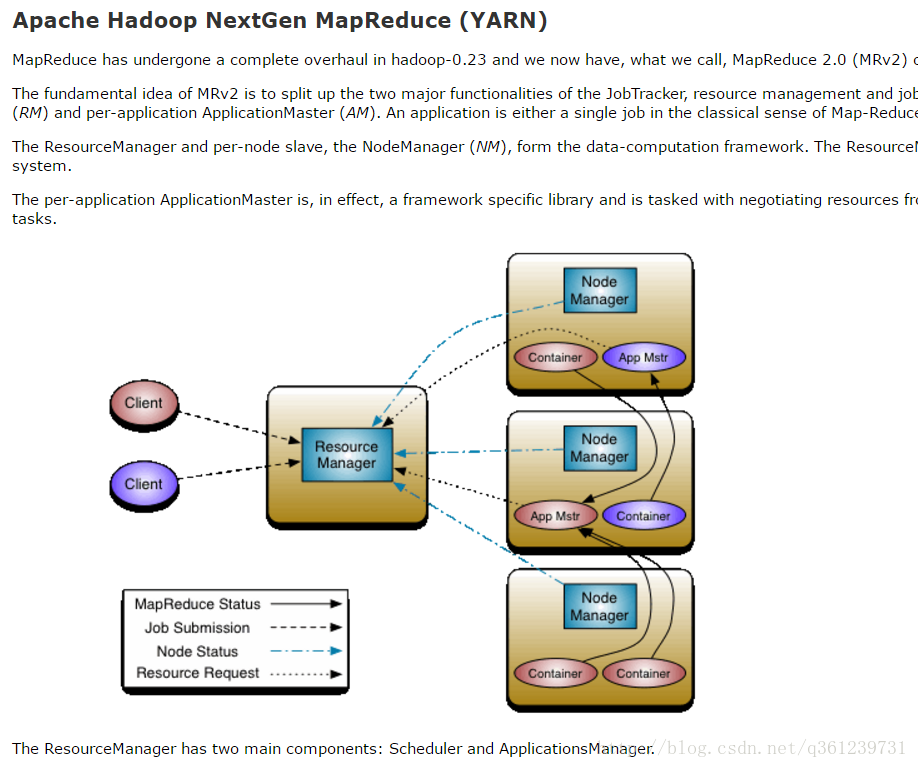

MapReduce 的執行環境 YARN

The ResourceManager has two main components: Scheduler and ApplicationsManager.

The Scheduler is responsible for allocating(分配) resources to the various(多方面的) running applications subject to familiar constraints(約束) of capacities(容量), queues etc. The Scheduler is pure scheduler in the sense that it performs no monitoring or tracking of status for the application. Also, it offers no guarantees(保證) about restarting failed tasks either due to application failure or hardware failures. The Scheduler performs its scheduling function based the resource requirements of the applications; it does so based on the abstract notion(概念) of a resource Container which incorporates(合併) elements such as memory, cpu, disk, network etc. In the first version, only memory is supported.

The Scheduler has a pluggable policy plug-in, which is responsible for partitioning the cluster resources among the various queues, applications etc. The current Map-Reduce schedulers such as the CapacityScheduler and the FairScheduler would be some examples of the plug-in.

The CapacityScheduler supports hierarchical queues to allow for more predictable sharing of cluster resources

The ApplicationsManager is responsible for accepting job-submissions, negotiating(協商) the first container for executing the application specific ApplicationMaster and provides the service for restarting the ApplicationMaster container on failure.

The NodeManager is the per-machine framework agent(代理) who is responsible for containers, monitoring their resource usage (cpu, memory, disk, network) and reporting the same to the ResourceManager/Scheduler.

The per-application ApplicationMaster has the responsibility of negotiating appropriate(恰當的) resource containers from the Scheduler, tracking their status and monitoring for progress.

MRV2 maintains(維持) API compatibility(相容性) with previous stable release (hadoop-1.x). This means that all Map-Reduce jobs should still run unchanged on top of MRv2 with just a recompile.

程式設計師需要做的是,計算程式的開發。不需要去管Resource Manager