Variational Autoencoder(變分自編碼)

使用通用自編碼器的時候,首先將輸入encoder壓縮為一個小的 form,然後將其decoder轉換成輸出的一個估計。如果目標是簡單的重現輸入效果很好,但是若想生成新的物件就不太可行了,因為其實我們根本不知道這個網路所生成的編碼具體是什麼。雖然我們可以通過結果去對比不同的物件,但是要理解它內部的工作方式幾乎是不可能的,甚至有時候可能連輸入應該是什麼樣子的都不知道。

解決方法是用相反的方法使用變分自編碼器(Variational Autoencoder,VAE),即不去關注隱含向量所服從的分佈,只需要告訴網路我們想讓這個分佈轉換為什麼樣子就行了。VAE對隱層的輸出增加了長約束,而在對隱層的取樣過程也能起到和一般 dropout 效果類似的正則化作用。而至於它的名字變分

下面用一個簡單的github上的程式碼實現(原地址:https://github.com/FelixMohr/Deep-learning-with-Python/blob/master/VAE.ipynb)來理解:

這段程式碼目的是生成和MNIST中不一樣的手寫影象,而首先要做的先對MNIST中的資料進行編碼,然後定義一個正態分佈便於解碼時得出我們期望生成的結果,即在解碼時從該分佈中隨機取樣得到“偽造”的影象。

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('MNIST_data')#28*28的單色道影象資料

tf.reset_default_graph()

batch_size = 64

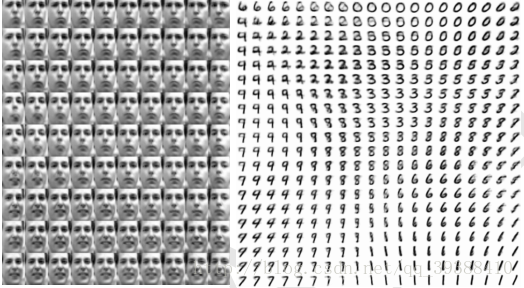

X_in = tf.placeholder(dtype=tf.float32, shape=[None, 28, 28 另外它還有非常好的特性是同時訓練引數編碼器與生成器網路的組合迫使模型學習編碼器可以捕獲可預測的座標系,這使其成為一個優秀的流形學習演算法,如下圖展示的是由變分自動編碼器學到的低維流形的例子,可以看出它發現了兩個存在於面部影象的因素:旋轉角和情緒表達。

而VAE的主要缺點是從在影象上訓練的變分自動編碼器中取樣的樣本往往有些模糊,而且原因尚不清楚,其中一種可能性是因為最小化KL散度而由於模糊性是最大似然的固有效應產生的。

主要參考:

bengio deep learning