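A detailed guide to TensorFlow gfile file operations

1. What is the gfile module?

The gfile module is defined in tensorflow/python/platform/gfile.py, but most of its implementation lives in tensorflow/tensorflow/python/lib/io/file_io.py. So what does the gfile module actually do?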

Google's own docstring defines it as:

File I/O wrappers without thread locking

...which is a particularly unhelpful description of TensorFlow's tf.gfile module!

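The main roles of the tf.gfile module are:

1. To provide an API that is close to Python's file objects, and

2. To provide an implementation based on TensorFlow's C++ FileSystem API.

The C++ FileSystem API supports multiple file system implementations, including local files, Google Cloud Storage (paths starting with gs://) and HDFS (paths starting with hdfs://). TensorFlow exports them as tf.gfile so that these implementations can be used to save and load checkpoints, write TensorBoard logs, and access training data (among other uses). However, if all of your files are local, you can use the regular Python file API without any problem.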
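The point about local files can be checked with a small round trip. This is a minimal sketch, assuming a TensorFlow install where `tf.compat.v1.gfile` is available (on TensorFlow 1.x, plain `tf.gfile` is the same module); the scratch directory and file name are made up for illustration.

```python
import os
import tempfile

import tensorflow as tf

# Assumption: tf.compat.v1.gfile exposes the tf.gfile API described here.
gfile = tf.compat.v1.gfile

work_dir = tempfile.mkdtemp()               # hypothetical scratch directory
path = os.path.join(work_dir, "notes.txt")  # hypothetical file name

# Write through the TensorFlow FileSystem layer ...
with gfile.GFile(path, "w") as f:
    f.write("hello gfile\n")

# ... and read the same local file back with the built-in open().
with open(path) as f:
    builtin_content = f.read()

# Reading through gfile returns the same text. For a remote store only the
# path prefix (for example gs:// or hdfs://) would differ.
with gfile.GFile(path, "r") as f:
    gfile_content = f.read()

print(builtin_content == gfile_content)
```

For purely local work the built-in `open()` is enough; tf.gfile earns its keep when the same code must also accept remote paths.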

The above is Google's description of tf.gfile.

2. The gfile API

The following sections describe each gfile API in turn.

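2-1) tf.gfile.Copy(oldpath, newpath, overwrite=False)

Copies the source file to the target file. Returns nothing. The parameters are:

oldpath: path of the source file to copy;

newpath: path of the target file;

overwrite: whether to overwrite the target file if it already exists. Defaults to False, in which case an error is raised if the target file already exists.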

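2-2) tf.gfile.MkDir(dirname)

Creates a directory named dirname. The parent directories must already exist. Returns nothing.

2-3) tf.gfile.Remove(filename)

Deletes the file at filename. Returns nothing; an error is raised if the file does not exist.

2-4) tf.gfile.DeleteRecursively(dirname)

Recursively deletes the directory dirname and everything under it. Returns nothing.

2-5) tf.gfile.Exists(filename)

Determines whether a path exists. filename may be a directory path or a file path; returns True if the path exists and False otherwise.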
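The five calls above can be strung together into one file lifecycle. This is a hedged sketch, assuming `tf.compat.v1.gfile` is available (equivalent to `tf.gfile` on TensorFlow 1.x); the directory and file names are invented for the example.

```python
import os
import tempfile

import tensorflow as tf

gfile = tf.compat.v1.gfile  # assumption: same API as tf.gfile in TF 1.x

root = tempfile.mkdtemp()
data_dir = os.path.join(root, "data")   # hypothetical directory name

# MkDir works here because the parent directory (root) already exists.
gfile.MkDir(data_dir)

src = os.path.join(data_dir, "a.txt")
dst = os.path.join(data_dir, "b.txt")
with gfile.GFile(src, "w") as f:
    f.write("payload")

gfile.Copy(src, dst)
copied = gfile.Exists(dst)

# Copying onto an existing target fails unless overwrite=True is passed.
try:
    gfile.Copy(src, dst)
    second_copy_failed = False
except tf.errors.OpError:
    second_copy_failed = True
gfile.Copy(src, dst, overwrite=True)

gfile.Remove(src)
src_exists = gfile.Exists(src)

# Remove the directory together with the remaining file inside it.
gfile.DeleteRecursively(data_dir)
dir_exists = gfile.Exists(data_dir)
```

Catching `tf.errors.OpError` covers every error subtype the FileSystem layer may raise, which is also how the source in section 3 documents its failures.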

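2-6) tf.gfile.Glob(filename)

Finds the files that match a pattern and returns them as a list. filename can be a concrete file name or a glob pattern containing wildcards such as * (a glob pattern, not a regular expression); an iterable of patterns is also accepted.

2-7) tf.gfile.IsDirectory(dirname)

Returns True if the given path is a directory and False otherwise. dirname is the path to check.

2-8) tf.gfile.ListDirectory(dirname)

Lists all entries (files and subdirectories) directly under dirname and returns them as a list, in arbitrary order and without "." and "..". dirname must be a directory; otherwise a NotFoundError is raised.

2-9) tf.gfile.MakeDirs(dirname)

Recursively creates the directory together with any missing parent directories. It succeeds if the directory already exists and is writable; otherwise an error is raised. Returns nothing.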
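A short sketch of the four directory helpers above, again assuming `tf.compat.v1.gfile` stands in for `tf.gfile`; the directory layout and file names are hypothetical.

```python
import os
import tempfile

import tensorflow as tf

gfile = tf.compat.v1.gfile  # assumption: same API as tf.gfile in TF 1.x

root = tempfile.mkdtemp()
nested = os.path.join(root, "run", "logs")  # hypothetical layout

# MakeDirs creates "run" and "logs" in one call, and calling it again on
# an existing directory is not an error.
gfile.MakeDirs(nested)
gfile.MakeDirs(nested)

for name in ("a.txt", "b.txt", "c.log"):
    with gfile.GFile(os.path.join(nested, name), "w") as f:
        f.write(name)

# Glob takes a glob pattern and returns matching paths.
txt_files = sorted(
    os.path.basename(p) for p in gfile.Glob(os.path.join(nested, "*.txt")))

# ListDirectory returns entry names (not full paths) in arbitrary order.
entries = sorted(gfile.ListDirectory(os.path.join(root, "run")))

is_dir = gfile.IsDirectory(nested)
file_is_dir = gfile.IsDirectory(os.path.join(nested, "a.txt"))
```

Sorting the results makes the output deterministic, since neither Glob nor ListDirectory promises an order.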

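2-10) tf.gfile.Rename(oldname, newname, overwrite=False)

Renames or moves a file or directory. Returns nothing. The parameters are:

oldname: the existing file or directory;

newname: the new file or directory;

overwrite: defaults to False, in which case an error is raised if newname already exists; otherwise the rename or move succeeds.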
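The effect of the overwrite flag can be seen in a small sketch, assuming `tf.compat.v1.gfile` mirrors `tf.gfile`; both file names are placeholders.

```python
import os
import tempfile

import tensorflow as tf

gfile = tf.compat.v1.gfile  # assumption: same API as tf.gfile in TF 1.x

root = tempfile.mkdtemp()
old_path = os.path.join(root, "old.txt")  # hypothetical file names
new_path = os.path.join(root, "new.txt")

for path, text in ((old_path, "from old"), (new_path, "from new")):
    with gfile.GFile(path, "w") as f:
        f.write(text)

# With the default overwrite=False an occupied target is an error.
try:
    gfile.Rename(old_path, new_path)
    rename_blocked = False
except tf.errors.OpError:
    rename_blocked = True

# With overwrite=True the existing target is replaced.
gfile.Rename(old_path, new_path, overwrite=True)

with gfile.GFile(new_path, "r") as f:
    new_content = f.read()
old_still_exists = gfile.Exists(old_path)
```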

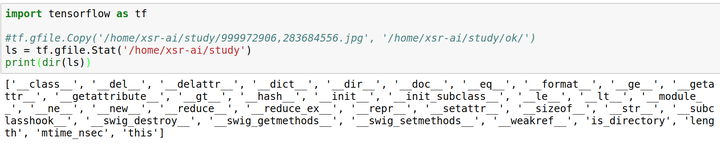

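2-11) tf.gfile.Stat(filename)

Returns statistics for a path (a file or a directory) as a FileStatistics structure. Calling dir(tf.gfile.Stat(filename)) lists its attributes, which include length (the size in bytes), mtime_nsec (the modification time in nanoseconds) and is_directory.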
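A minimal sketch of reading those attributes, assuming `tf.compat.v1.gfile` is available and that the FileStatistics object exposes `length`, `mtime_nsec` and `is_directory`; the file name is hypothetical.

```python
import os
import tempfile

import tensorflow as tf

gfile = tf.compat.v1.gfile  # assumption: same API as tf.gfile in TF 1.x

root = tempfile.mkdtemp()
path = os.path.join(root, "five_bytes.bin")  # hypothetical file name
with gfile.GFile(path, "wb") as f:
    f.write(b"12345")

file_info = gfile.Stat(path)
file_size = file_info.length          # size in bytes
file_is_dir = file_info.is_directory
file_mtime = file_info.mtime_nsec     # modification time in nanoseconds

dir_is_dir = gfile.Stat(root).is_directory
```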

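2-12) tf.gfile.Walk(top, in_order=True)

A generator that recursively walks a directory tree. top is the directory to start from. in_order defaults to True, which yields each directory before its subdirectories (pre-order); with False each directory is yielded after its subdirectories (post-order). Errors raised while listing directories are ignored. Each yield has the form (dirname, [subdirname, subdirname, ...], [filename, filename, ...]).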
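The difference between the two traversal orders shows up on a small tree. This sketch assumes `tf.compat.v1.gfile` mirrors `tf.gfile`; the tree is a single chain of hypothetical directories so that the order is deterministic.

```python
import os
import tempfile

import tensorflow as tf

gfile = tf.compat.v1.gfile  # assumption: same API as tf.gfile in TF 1.x

root = tempfile.mkdtemp()
gfile.MakeDirs(os.path.join(root, "sub", "leaf"))  # root/sub/leaf
with gfile.GFile(os.path.join(root, "sub", "leaf", "x.txt"), "w") as f:
    f.write("x")

# in_order=True: parents are yielded before their children.
top_down = [os.path.relpath(dirname, root)
            for dirname, _, _ in gfile.Walk(root, in_order=True)]

# in_order=False: children are yielded before their parents.
bottom_up = [os.path.relpath(dirname, root)
             for dirname, _, _ in gfile.Walk(root, in_order=False)]

# Collect the files seen at the deepest level.
leaf_files = [files for dirname, _, files in gfile.Walk(root)
              if os.path.basename(dirname) == "leaf"][0]
```

Post-order is the convenient choice when directories must be emptied before they are removed.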

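2-13) tf.gfile.GFile(filename, mode)

Returns a file handle, similar to Python's built-in open() function. filename is the file to open and mode is how to open it: one of 'r', 'w', 'a', 'r+', 'w+', 'a+', optionally with 'b' appended for bytes mode.

tf.gfile.Open() is an alias of this interface; either name can be used.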
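The handle can be used much like a Python file object, including as a context manager and as a line iterator. A minimal sketch, assuming `tf.compat.v1.gfile` stands in for `tf.gfile`; the file name is hypothetical.

```python
import os
import tempfile

import tensorflow as tf

gfile = tf.compat.v1.gfile  # assumption: same API as tf.gfile in TF 1.x

path = os.path.join(tempfile.mkdtemp(), "lines.txt")  # hypothetical name

# "w" creates or truncates the file, "a" appends to it.
with gfile.GFile(path, "w") as f:
    f.write("line 1\n")
    f.write("line 2\n")
with gfile.GFile(path, "a") as f:
    f.write("line 3\n")

# Text mode: iterating yields one line at a time, keeping the newline.
with gfile.Open(path, "r") as f:
    lines = [line for line in f]

# Bytes mode: read() returns a bytes object instead of a str.
with gfile.GFile(path, "rb") as f:
    raw = f.read()
```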

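2-14) tf.gfile.FastGFile(filename, mode)

This class is usually described as differing from tf.gfile.GFile only in being "non-blocking", that is, it obtains the file handle without thread locking. In the open-source gfile.py, however, both GFile and FastGFile are thin subclasses of the FileIO class listed in section 3, so they behave the same, and later TensorFlow 1.x releases mark FastGFile as deprecated in favor of GFile.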

3. API source code

# Copyright 2015 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================
"""File IO methods that wrap the C++ FileSystem API.

The C++ FileSystem API is SWIG wrapped in file_io.i. These functions call those
to accomplish basic File IO operations.
"""
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import os
import uuid

import six

from tensorflow.python import pywrap_tensorflow
from tensorflow.python.framework import c_api_util
from tensorflow.python.framework import errors
from tensorflow.python.util import compat
from tensorflow.python.util import deprecation
from tensorflow.python.util.tf_export import tf_export

class FileIO(object):
  """FileIO class that exposes methods to read / write to / from files.

  The constructor takes the following arguments:
  name: name of the file
  mode: one of 'r', 'w', 'a', 'r+', 'w+', 'a+'. Append 'b' for bytes mode.

  Can be used as an iterator to iterate over lines in the file.

  The default buffer size used for the BufferedInputStream used for reading
  the file line by line is 1024 * 512 bytes.
  """

  def __init__(self, name, mode):
    self.__name = name
    self.__mode = mode
    self._read_buf = None
    self._writable_file = None
    self._binary_mode = "b" in mode
    mode = mode.replace("b", "")
    if mode not in ("r", "w", "a", "r+", "w+", "a+"):
      raise errors.InvalidArgumentError(
          None, None, "mode is not 'r' or 'w' or 'a' or 'r+' or 'w+' or 'a+'")
    self._read_check_passed = mode in ("r", "r+", "a+", "w+")
    self._write_check_passed = mode in ("a", "w", "r+", "a+", "w+")

  @property
  def name(self):
    """Returns the file name."""
    return self.__name

  @property
  def mode(self):
    """Returns the mode in which the file was opened."""
    return self.__mode

  def _preread_check(self):
    if not self._read_buf:
      if not self._read_check_passed:
        raise errors.PermissionDeniedError(None, None,
                                           "File isn't open for reading")
      with errors.raise_exception_on_not_ok_status() as status:
        self._read_buf = pywrap_tensorflow.CreateBufferedInputStream(
            compat.as_bytes(self.__name), 1024 * 512, status)

  def _prewrite_check(self):
    if not self._writable_file:
      if not self._write_check_passed:
        raise errors.PermissionDeniedError(None, None,
                                           "File isn't open for writing")
      with errors.raise_exception_on_not_ok_status() as status:
        self._writable_file = pywrap_tensorflow.CreateWritableFile(
            compat.as_bytes(self.__name), compat.as_bytes(self.__mode), status)

  def _prepare_value(self, val):
    if self._binary_mode:
      return compat.as_bytes(val)
    else:
      return compat.as_str_any(val)

  def size(self):
    """Returns the size of the file."""
    return stat(self.__name).length

  def write(self, file_content):
    """Writes file_content to the file. Appends to the end of the file."""
    self._prewrite_check()
    with errors.raise_exception_on_not_ok_status() as status:
      pywrap_tensorflow.AppendToFile(
          compat.as_bytes(file_content), self._writable_file, status)

  def read(self, n=-1):
    """Returns the contents of a file as a string.

    Starts reading from current position in file.

    Args:
      n: Read 'n' bytes if n != -1. If n = -1, reads to end of file.

    Returns:
      'n' bytes of the file (or whole file) in bytes mode or 'n' bytes of the
      string if in string (regular) mode.
    """
    self._preread_check()
    with errors.raise_exception_on_not_ok_status() as status:
      if n == -1:
        length = self.size() - self.tell()
      else:
        length = n
      return self._prepare_value(
          pywrap_tensorflow.ReadFromStream(self._read_buf, length, status))

  @deprecation.deprecated_args(
      None,
      "position is deprecated in favor of the offset argument.",
      "position")
  def seek(self, offset=None, whence=0, position=None):
    # TODO(jhseu): Delete later. Used to omit `position` from docs.
    # pylint: disable=g-doc-args
    """Seeks to the offset in the file.

    Args:
      offset: The byte count relative to the whence argument.
      whence: Valid values for whence are:
        0: start of the file (default)
        1: relative to the current position of the file
        2: relative to the end of file. offset is usually negative.
    """
    # pylint: enable=g-doc-args
    self._preread_check()
    # We needed to make offset a keyword argument for backwards-compatibility.
    # This check exists so that we can convert back to having offset be a
    # positional argument.
    # TODO(jhseu): Make `offset` a positional argument after `position` is
    # deleted.
    if offset is None and position is None:
      raise TypeError("seek(): offset argument required")
    if offset is not None and position is not None:
      raise TypeError("seek(): offset and position may not be set "
                      "simultaneously.")

    if position is not None:
      offset = position

    with errors.raise_exception_on_not_ok_status() as status:
      if whence == 0:
        pass
      elif whence == 1:
        offset += self.tell()
      elif whence == 2:
        offset += self.size()
      else:
        raise errors.InvalidArgumentError(
            None, None,
            "Invalid whence argument: {}. Valid values are 0, 1, or 2."
            .format(whence))
      ret_status = self._read_buf.Seek(offset)
      pywrap_tensorflow.Set_TF_Status_from_Status(status, ret_status)

  def readline(self):
    r"""Reads the next line from the file. Leaves the '\n' at the end."""
    self._preread_check()
    return self._prepare_value(self._read_buf.ReadLineAsString())

  def readlines(self):
    """Returns all lines from the file in a list."""
    self._preread_check()
    lines = []
    while True:
      s = self.readline()
      if not s:
        break
      lines.append(s)
    return lines

  def tell(self):
    """Returns the current position in the file."""
    self._preread_check()
    return self._read_buf.Tell()

  def __enter__(self):
    """Make usable with "with" statement."""
    return self

  def __exit__(self, unused_type, unused_value, unused_traceback):
    """Make usable with "with" statement."""
    self.close()

  def __iter__(self):
    return self

  def next(self):
    retval = self.readline()
    if not retval:
      raise StopIteration()
    return retval

  def __next__(self):
    return self.next()

  def flush(self):
    """Flushes the Writable file.

    This only ensures that the data has made its way out of the process without
    any guarantees on whether it's written to disk. This means that the
    data would survive an application crash but not necessarily an OS crash.
    """
    if self._writable_file:
      with errors.raise_exception_on_not_ok_status() as status:
        ret_status = self._writable_file.Flush()
        pywrap_tensorflow.Set_TF_Status_from_Status(status, ret_status)

  def close(self):
    """Closes FileIO. Should be called for the WritableFile to be flushed."""
    self._read_buf = None
    if self._writable_file:
      with errors.raise_exception_on_not_ok_status() as status:
        ret_status = self._writable_file.Close()
        pywrap_tensorflow.Set_TF_Status_from_Status(status, ret_status)
    self._writable_file = None
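Two pieces of the FileIO logic above are easy to check in isolation without TensorFlow: the mode parsing in `__init__` and the `whence` arithmetic in `seek()`. The sketch below restates them in plain Python; `parse_mode` and `resolve_offset` are hypothetical helper names, not part of file_io.py, and `ValueError` stands in for the TensorFlow error types.

```python
import io

VALID_MODES = ("r", "w", "a", "r+", "w+", "a+")


def parse_mode(mode):
    """Hypothetical helper mirroring the checks in FileIO.__init__.

    Returns a (binary, readable, writable) tuple.
    """
    binary = "b" in mode
    base = mode.replace("b", "")
    if base not in VALID_MODES:
        raise ValueError("mode is not one of {}".format(VALID_MODES))
    readable = base in ("r", "r+", "a+", "w+")
    writable = base in ("a", "w", "r+", "a+", "w+")
    return binary, readable, writable


def resolve_offset(offset, whence, current, size):
    """Hypothetical helper mirroring the whence handling in FileIO.seek()."""
    if whence == 0:        # relative to the start of the file
        return offset
    if whence == 1:        # relative to the current position
        return offset + current
    if whence == 2:        # relative to the end of the file
        return offset + size
    raise ValueError(
        "Invalid whence argument: {}. Valid values are 0, 1, or 2."
        .format(whence))


# Compare the arithmetic with Python's own seek() on an in-memory buffer.
data = b"0123456789"
buf = io.BytesIO(data)
buf.seek(4)

from_current = resolve_offset(2, 1, buf.tell(), len(data))
from_end = resolve_offset(-3, 2, buf.tell(), len(data))
builtin_from_end = buf.seek(-3, 2)  # seek() returns the absolute position
```

Note that in FileIO a mode such as "a" passes the write check but not the read check, which is why reading from a handle opened for append raises PermissionDeniedError.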

@tf_export("gfile.Exists")
def file_exists(filename):
  """Determines whether a path exists or not.

  Args:
    filename: string, a path

  Returns:
    True if the path exists, whether its a file or a directory.
    False if the path does not exist and there are no filesystem errors.

  Raises:
    errors.OpError: Propagates any errors reported by the FileSystem API.
  """
  try:
    with errors.raise_exception_on_not_ok_status() as status:
      pywrap_tensorflow.FileExists(compat.as_bytes(filename), status)
  except errors.NotFoundError:
    return False
  return True


@tf_export("gfile.Remove")
def delete_file(filename):
  """Deletes the file located at 'filename'.

  Args:
    filename: string, a filename

  Raises:
    errors.OpError: Propagates any errors reported by the FileSystem API.  E.g.,
    NotFoundError if the file does not exist.
  """
  with errors.raise_exception_on_not_ok_status() as status:
    pywrap_tensorflow.DeleteFile(compat.as_bytes(filename), status)

def read_file_to_string(filename, binary_mode=False):
  """Reads the entire contents of a file to a string.

  Args:
    filename: string, path to a file
    binary_mode: whether to open the file in binary mode or not. This changes
        the type of the object returned.

  Returns:
    contents of the file as a string or bytes.

  Raises:
    errors.OpError: Raises variety of errors that are subtypes e.g.
    NotFoundError etc.
  """
  if binary_mode:
    f = FileIO(filename, mode="rb")
  else:
    f = FileIO(filename, mode="r")
  return f.read()


def write_string_to_file(filename, file_content):
  """Writes a string to a given file.

  Args:
    filename: string, path to a file
    file_content: string, contents that need to be written to the file

  Raises:
    errors.OpError: If there are errors during the operation.
  """
  with FileIO(filename, mode="w") as f:
    f.write(file_content)

@tf_export("gfile.Glob")
def get_matching_files(filename):
  """Returns a list of files that match the given pattern(s).

  Args:
    filename: string or iterable of strings. The glob pattern(s).

  Returns:
    A list of strings containing filenames that match the given pattern(s).

  Raises:
    errors.OpError: If there are filesystem / directory listing errors.
  """
  with errors.raise_exception_on_not_ok_status() as status:
    if isinstance(filename, six.string_types):
      return [
          # Convert the filenames to string from bytes.
          compat.as_str_any(matching_filename)
          for matching_filename in pywrap_tensorflow.GetMatchingFiles(
              compat.as_bytes(filename), status)
      ]
    else:
      return [
          # Convert the filenames to string from bytes.
          compat.as_str_any(matching_filename)
          for single_filename in filename
          for matching_filename in pywrap_tensorflow.GetMatchingFiles(
              compat.as_bytes(single_filename), status)
      ]
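The second branch above means Glob also accepts an iterable of patterns and concatenates the matches. A hedged sketch, assuming `tf.compat.v1.gfile.Glob` keeps this behaviour; the file names are invented for the example.

```python
import os
import tempfile

import tensorflow as tf

gfile = tf.compat.v1.gfile  # assumption: same API as tf.gfile in TF 1.x

root = tempfile.mkdtemp()
for name in ("a.txt", "b.csv", "c.log"):  # hypothetical file names
    with gfile.GFile(os.path.join(root, name), "w") as f:
        f.write(name)

# A list of patterns is expanded one pattern at a time and the results
# are returned as a single flat list.
patterns = [os.path.join(root, "*.txt"), os.path.join(root, "*.csv")]
matches = sorted(os.path.basename(p) for p in gfile.Glob(patterns))
```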

@tf_export("gfile.MkDir")
def create_dir(dirname):
  """Creates a directory with the name 'dirname'.

  Args:
    dirname: string, name of the directory to be created

  Notes:
    The parent directories need to exist. Use recursive_create_dir instead if
    there is the possibility that the parent dirs don't exist.

  Raises:
    errors.OpError: If the operation fails.
  """
  with errors.raise_exception_on_not_ok_status() as status:
    pywrap_tensorflow.CreateDir(compat.as_bytes(dirname), status)


@tf_export("gfile.MakeDirs")
def recursive_create_dir(dirname):
  """Creates a directory and all parent/intermediate directories.

  It succeeds if dirname already exists and is writable.

  Args:
    dirname: string, name of the directory to be created

  Raises:
    errors.OpError: If the operation fails.
  """
  with errors.raise_exception_on_not_ok_status() as status:
    pywrap_tensorflow.RecursivelyCreateDir(compat.as_bytes(dirname), status)

@tf_export("gfile.Copy")
def copy(oldpath, newpath, overwrite=False):
  """Copies data from oldpath to newpath.

  Args:
    oldpath: string, name of the file who's contents need to be copied
    newpath: string, name of the file to which to copy to
    overwrite: boolean, if false its an error for newpath to be occupied by an
        existing file.

  Raises:
    errors.OpError: If the operation fails.
  """
  with errors.raise_exception_on_not_ok_status() as status:
    pywrap_tensorflow.CopyFile(
        compat.as_bytes(oldpath), compat.as_bytes(newpath), overwrite, status)


@tf_export("gfile.Rename")
def rename(oldname, newname, overwrite=False):
  """Rename or move a file / directory.

  Args:
    oldname: string, pathname for a file
    newname: string, pathname to which the file needs to be moved
    overwrite: boolean, if false it's an error for `newname` to be occupied by
        an existing file.

  Raises:
    errors.OpError: If the operation fails.
  """
  with errors.raise_exception_on_not_ok_status() as status:
    pywrap_tensorflow.RenameFile(
        compat.as_bytes(oldname), compat.as_bytes(newname), overwrite, status)

def atomic_write_string_to_file(filename, contents, overwrite=True):
  """Writes to `filename` atomically.

  This means that when `filename` appears in the filesystem, it will contain
  all of `contents`. With write_string_to_file, it is possible for the file
  to appear in the filesystem with `contents` only partially written.

  Accomplished by writing to a temp file and then renaming it.

  Args:
    filename: string, pathname for a file
    contents: string, contents that need to be written to the file
    overwrite: boolean, if false it's an error for `filename` to be occupied by
        an existing file.
  """
  temp_pathname = filename + ".tmp" + uuid.uuid4().hex
  write_string_to_file(temp_pathname, contents)
  try:
    rename(temp_pathname, filename, overwrite)
  except errors.OpError:
    delete_file(temp_pathname)
    raise
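The write-to-a-temp-file-then-rename pattern used above is not specific to TensorFlow. The following standard-library sketch applies the same idea to a local file; `atomic_write` is a hypothetical name, and it uses `os.replace`, which always overwrites the target, so it corresponds to the `overwrite=True` case only.

```python
import os
import tempfile
import uuid


def atomic_write(filename, contents):
    """Hypothetical local-only analogue of atomic_write_string_to_file."""
    # Write everything to a uniquely named sibling file first.
    temp_pathname = filename + ".tmp" + uuid.uuid4().hex
    with open(temp_pathname, "w") as f:
        f.write(contents)
    try:
        # Swap the finished file into place in a single step.
        os.replace(temp_pathname, filename)
    except OSError:
        # Clean up the temp file if the swap fails, then re-raise.
        os.remove(temp_pathname)
        raise


target_dir = tempfile.mkdtemp()
target = os.path.join(target_dir, "config.json")  # hypothetical file name

atomic_write(target, "v1")
atomic_write(target, "v2")

with open(target) as f:
    final_content = f.read()
leftovers = [name for name in os.listdir(target_dir) if ".tmp" in name]
```

Keeping the temp file in the same directory as the target matters: a rename within one directory stays on one file system, which is what makes the swap a single step.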

@tf_export("gfile.DeleteRecursively")
def delete_recursively(dirname):
  """Deletes everything under dirname recursively.

  Args:
    dirname: string, a path to a directory

  Raises:
    errors.OpError: If the operation fails.
  """
  with errors.raise_exception_on_not_ok_status() as status:
    pywrap_tensorflow.DeleteRecursively(compat.as_bytes(dirname), status)


@tf_export("gfile.IsDirectory")
def is_directory(dirname):
  """Returns whether the path is a directory or not.

  Args:
    dirname: string, path to a potential directory

  Returns:
    True, if the path is a directory; False otherwise
  """
  status = c_api_util.ScopedTFStatus()
  return pywrap_tensorflow.IsDirectory(compat.as_bytes(dirname), status)

@tf_export("gfile.ListDirectory")
def list_directory(dirname):
  """Returns a list of entries contained within a directory.

  The list is in arbitrary order. It does not contain the special entries "."
  and "..".

  Args:
    dirname: string, path to a directory

  Returns:
    [filename1, filename2, ... filenameN] as strings

  Raises:
    errors.NotFoundError if directory doesn't exist
  """
  if not is_directory(dirname):
    raise errors.NotFoundError(None, None, "Could not find directory")
  with errors.raise_exception_on_not_ok_status() as status:
    # Convert each element to string, since the return values of the
    # vector of string should be interpreted as strings, not bytes.
    return [
        compat.as_str_any(filename)
        for filename in pywrap_tensorflow.GetChildren(
            compat.as_bytes(dirname), status)
    ]

@tf_export("gfile.Walk")
def walk(top, in_order=True):
  """Recursive directory tree generator for directories.

  Args:
    top: string, a Directory name
    in_order: bool, Traverse in order if True, post order if False.

  Errors that happen while listing directories are ignored.

  Yields:
    Each yield is a 3-tuple:  the pathname of a directory, followed by lists of
    all its subdirectories and leaf files.
    (dirname, [subdirname, subdirname, ...], [filename, filename, ...])
    as strings
  """
  top = compat.as_str_any(top)
  try:
    listing = list_directory(top)
  except errors.NotFoundError:
    return

  files = []
  subdirs = []
  for item in listing:
    full_path = os.path.join(top, item)
    if is_directory(full_path):
      subdirs.append(item)
    else:
      files.append(item)

  here = (top, subdirs, files)

  if in_order:
    yield here

  for subdir in subdirs:
    for subitem in walk(os.path.join(top, subdir), in_order):
      yield subitem

  if not in_order:
    yield here

@tf_export("gfile.Stat")
def stat(filename):
  """Returns file statistics for a given path.

  Args:
    filename: string, path to a file

  Returns:
    FileStatistics struct that contains information about the path

  Raises:
    errors.OpError: If the operation fails.
  """
  file_statistics = pywrap_tensorflow.FileStatistics()
  with errors.raise_exception_on_not_ok_status() as status:
    pywrap_tensorflow.Stat(compat.as_bytes(filename), file_statistics, status)
    return file_statistics