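Deep Learning: Autoencoders

阿新 • Published: 2019-01-09

Preface

Having covered neural networks and the BP (backpropagation) algorithm, here is a small experiment taken from Stanford's UFLDL tutorial: learning a compressed representation of images. The model is a neural network, and it is trained with backpropagation.

Theory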

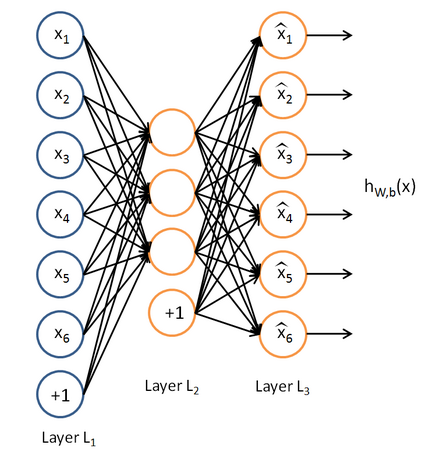

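In supervised learning the training examples carry labels, and an ordinary neural network is trained in that supervised way. The autoencoder neural network discussed here is instead an unsupervised method: it learns by setting the target output equal to the input itself. As the figure shows, the network has a single hidden layer, and the output layer has the same number of units as the input layer. If the hidden layer has fewer units than the input layer, the model learns a compressed representation of the input, which amounts to dimensionality reduction (a nonlinear one). In fact, even if the hidden layer has more units than the input layer, we can force most hidden activations to be close to 0, that is, make them sparse, and what is learned is still a compressed representation. The model must make the output layer match the input layer; in other words, the hidden layer has to be able to reconstruct the input at the output, and only then is the learned compressed representation meaningful.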
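A minimal sketch of the architecture just described may help. It assumes toy sizes (8 inputs, 3 hidden units) and small random weights, all of which are illustrative choices and not values from the tutorial. It is written in plain Python rather than the MATLAB of the exercise so that it runs on its own, and it only performs the forward pass, with no training.

```python
import math
import random


def sigmoid(z):
    """Logistic activation, the same f(z) used throughout the post."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(W, b, x):
    """One fully connected sigmoid layer: sigmoid(W x + b)."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bi)
            for row, bi in zip(W, b)]


def random_matrix(rows, cols, rng, scale=0.1):
    """Small random weights (hypothetical initialisation for the demo)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)]
            for _ in range(rows)]


visible_size, hidden_size = 8, 3          # toy sizes, assumed for illustration
rng = random.Random(0)

W1 = random_matrix(hidden_size, visible_size, rng)   # input  -> hidden
b1 = [0.0] * hidden_size
W2 = random_matrix(visible_size, hidden_size, rng)   # hidden -> output
b2 = [0.0] * visible_size

x = [rng.random() for _ in range(visible_size)]      # one input sample
code = layer(W1, b1, x)                # compressed representation
reconstruction = layer(W2, b2, code)   # has the same length as x

# Squared reconstruction error for this one sample; training would minimise it.
reconstruction_error = 0.5 * sum((r - xi) ** 2
                                 for r, xi in zip(reconstruction, x))
```

The point of the sketch is the shapes: `code` is shorter than `x`, while `reconstruction` has exactly as many entries as `x`, which is what lets the input serve as its own target.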

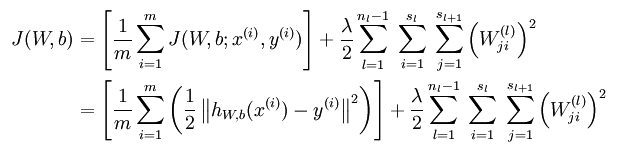

Recall the loss function introduced earlier:


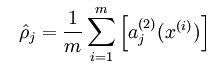

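Let ρ̂_j (rho_hat in the code) denote the activation of hidden unit j averaged over the whole training set; stacking these averages for all hidden units gives a vector.

We then fix the level of sparsity we want in the hidden layer, call it ρ (the sparsityParam argument in the code below), and we want ρ̂_j = ρ for every hidden unit j.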
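For reference, the average activation can be written out as follows. This is a reconstruction in the UFLDL-style notation the code follows, not a copy of the original figure: $a^{(2)}_j(x^{(i)})$ is assumed to denote the activation of hidden unit $j$ on the $i$-th sample and $m$ the number of samples. It corresponds to the line `rho_hat = (1/m)*sum(a2,2)` in sparseAutoencoderCost.m.

```latex
\hat{\rho}_j = \frac{1}{m} \sum_{i=1}^{m} a^{(2)}_j\!\left(x^{(i)}\right)
```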

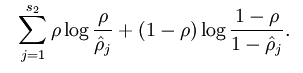

So how do we measure the gap between the actual and the desired average activation?


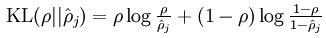

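This is in fact the KL divergence between two Bernoulli random variables p and q, here with means ρ and ρ̂_j (see my earlier blog post on information entropy).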
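As a small illustration of that penalty, here is a sketch in plain Python. The function names `kl_bernoulli` and `sparsity_penalty` and the numbers used are hypothetical; the formula itself is the one the MATLAB code evaluates in its `Jsparse` line.

```python
import math


def kl_bernoulli(rho, rho_hat):
    """KL(rho || rho_hat) for two Bernoulli distributions with these means.

    Both arguments must lie strictly between 0 and 1.
    """
    return (rho * math.log(rho / rho_hat)
            + (1.0 - rho) * math.log((1.0 - rho) / (1.0 - rho_hat)))


def sparsity_penalty(rho, rho_hats):
    """Sum of the KL terms over all hidden units."""
    return sum(kl_bernoulli(rho, r) for r in rho_hats)


rho = 0.05                               # target sparsity, assumed for the demo
on_target = kl_bernoulli(rho, 0.05)      # average activation equals the target
slightly_off = kl_bernoulli(rho, 0.2)    # unit is more active than desired
far_off = kl_bernoulli(rho, 0.5)         # unit is active half of the time
```

The penalty is zero when a unit's average activation equals the target and grows as the two drift apart, which is what pushes the hidden layer towards sparse activations.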

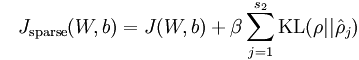

The loss function now becomes:


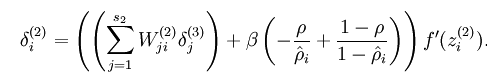
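Written out in LaTeX, the sparse objective looks roughly as follows. This is a reconstruction from the MATLAB code further down (`cost = Jcost + lambda*Jweight + beta*Jsparse`), not a transcription of the figure; $s_2$ is assumed to be the number of hidden units and $a^{(3)}(x^{(i)})$ the network output for sample $i$.

```latex
J(W,b) = \frac{1}{2m} \sum_{i=1}^{m} \left\| a^{(3)}\!\left(x^{(i)}\right) - x^{(i)} \right\|^2
       + \frac{\lambda}{2} \left( \sum_{i,j} \left(W^{(1)}_{ij}\right)^2 + \sum_{i,j} \left(W^{(2)}_{ij}\right)^2 \right)

J_{\mathrm{sparse}}(W,b) = J(W,b) + \beta \sum_{j=1}^{s_2} \mathrm{KL}\!\left(\rho \,\|\, \hat{\rho}_j\right),
\qquad
\mathrm{KL}\!\left(\rho \,\|\, \hat{\rho}_j\right)
  = \rho \log\frac{\rho}{\hat{\rho}_j} + (1-\rho) \log\frac{1-\rho}{1-\hat{\rho}_j}
```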

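Because the loss function now contains the sparsity term, the formula for the residual (error term) of the layer-2 (hidden) units changes: the derivative of the sparsity penalty, β(−ρ/ρ̂_j + (1−ρ)/(1−ρ̂_j)), is added to the back-propagated error before it is multiplied by the derivative of the activation. This is what the sterm and delta2 lines in sparseAutoencoderCost.m compute.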
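In LaTeX, the error terms implied by the code can be written as below. This is reconstructed from the `delta3`, `sterm` and `delta2` lines of sparseAutoencoderCost.m, with $f'(z) = a(1-a)$ for the sigmoid; the index conventions are an assumption in the usual UFLDL style.

```latex
\delta^{(3)}_i = -\left( x_i - a^{(3)}_i \right) f'\!\left(z^{(3)}_i\right)

\delta^{(2)}_i = \left( \sum_{j} W^{(2)}_{ji}\, \delta^{(3)}_j
  + \beta \left( -\frac{\rho}{\hat{\rho}_i} + \frac{1-\rho}{1-\hat{\rho}_i} \right) \right)
  f'\!\left(z^{(2)}_i\right)
```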

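Experiment

The exercise follows the tutorial's Exercise:Sparse Autoencoder. The files to implement are sampleIMAGES.m, sparseAutoencoderCost.m and computeNumericalGradient.m. The steps are:

- Generate the training set
- Implement the sparse autoencoder objective function
- Check the gradient
- Train the sparse autoencoder
- Visualize the result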

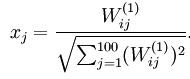

In the visualization step, x is displayed as an image.
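The gradient-check step in the list above can be sketched as follows. This is an illustration in plain Python, not the exercise's MATLAB solution: the test objective `simple_objective`, its hand-derived gradient and the step size `eps` are assumptions chosen for the demo. The idea is to compare an analytic gradient with a central-difference approximation before trusting it in training.

```python
import math


def numerical_gradient(J, theta, eps=1e-4):
    """Approximate dJ/dtheta_i by central differences, one coordinate at a time."""
    grad = []
    for i in range(len(theta)):
        plus = list(theta)
        minus = list(theta)
        plus[i] += eps
        minus[i] -= eps
        grad.append((J(plus) - J(minus)) / (2.0 * eps))
    return grad


def norm(v):
    """Euclidean norm of a list of numbers."""
    return math.sqrt(sum(x * x for x in v))


def simple_objective(theta):
    """Hypothetical test function J(x1, x2) = x1^2 + 3*x1*x2."""
    x1, x2 = theta
    return x1 ** 2 + 3.0 * x1 * x2


def simple_gradient(theta):
    """Analytic gradient of simple_objective, derived by hand."""
    x1, x2 = theta
    return [2.0 * x1 + 3.0 * x2, 3.0 * x1]


theta = [4.0, 10.0]
numgrad = numerical_gradient(simple_objective, theta)
grad = simple_gradient(theta)

# Relative difference between the two gradients; it should be tiny.
relative_diff = (norm([n - g for n, g in zip(numgrad, grad)])
                 / norm([n + g for n, g in zip(numgrad, grad)]))
```

Each coordinate costs two evaluations of the objective, so a check like this is only practical on a small model or a small subset of the data.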

The code for the exercise is as follows:

sampleIMAGES.m


    function patches = sampleIMAGES()
    % sampleIMAGES
    % Returns 10000 patches for training

    load IMAGES;    % load images from disk

    patchsize = 8;  % we'll use 8x8 patches
    numpatches = 10000;

    % Initialize patches with zeros. Your code will fill in this matrix--one
    % column per patch, 10000 columns.
    patches = zeros(patchsize*patchsize, numpatches);

    %% ---------- YOUR CODE HERE --------------------------------------
    %  Instructions: Fill in the variable called "patches" using data
    %  from IMAGES.
    %
    %  IMAGES is a 3D array containing 10 images
    %  For instance, IMAGES(:,:,6) is a 512x512 array containing the 6th image,
    %  and you can type "imagesc(IMAGES(:,:,6)), colormap gray;" to visualize
    %  it. (The contrast on these images looks a bit off because they have
    %  been preprocessed using "whitening." See the lecture notes for
    %  more details.) As a second example, IMAGES(21:30,21:30,1) is an image
    %  patch corresponding to the pixels in the block (21,21) to (30,30) of
    %  Image 1

    [m,n,num] = size(IMAGES);
    for i=1:numpatches
        j = randi(num);               % pick a random image
        bx = randi(m-patchsize+1);    % random top-left corner, kept inside the image
        by = randi(n-patchsize+1);
        block = IMAGES(bx:bx+patchsize-1,by:by+patchsize-1,j);
        patches(:,i) = block(:);      % store the patch as one column
    end

    %% ---------------------------------------------------------------
    % For the autoencoder to work well we need to normalize the data
    % Specifically, since the output of the network is bounded between [0,1]
    % (due to the sigmoid activation function), we have to make sure
    % the range of pixel values is also bounded between [0,1]
    patches = normalizeData(patches);

    end

    %% ---------------------------------------------------------------
    function patches = normalizeData(patches)

    % Squash data to [0.1, 0.9] since we use sigmoid as the activation
    % function in the output layer

    % Remove DC (mean of images).
    patches = bsxfun(@minus, patches, mean(patches));

    % Truncate to +/-3 standard deviations and scale to -1 to 1
    pstd = 3 * std(patches(:));
    patches = max(min(patches, pstd), -pstd) / pstd;

    % Rescale from [-1,1] to [0.1,0.9]
    patches = (patches + 1) * 0.4 + 0.1;

    end
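To make the normalization in normalizeData easier to follow, here is a rough plain-Python equivalent run on three tiny made-up patches. The function name `normalize_patches` and the sample data are hypothetical; each inner list plays the role of one column of the MATLAB `patches` matrix.

```python
import statistics


def normalize_patches(patches):
    """Mirror normalizeData: remove each patch's mean, clip, rescale to [0.1, 0.9]."""
    # Remove the mean of every patch (MATLAB: bsxfun(@minus, patches, mean(patches))).
    centered = []
    for patch in patches:
        mean = sum(patch) / len(patch)
        centered.append([v - mean for v in patch])

    # Three standard deviations over all pixels of all patches.
    # statistics.stdev is the sample standard deviation, like MATLAB's std.
    all_pixels = [v for patch in centered for v in patch]
    pstd = 3.0 * statistics.stdev(all_pixels)

    # Clip to [-pstd, pstd], scale to [-1, 1], then map to [0.1, 0.9].
    normalized = []
    for patch in centered:
        scaled = [max(min(v, pstd), -pstd) / pstd for v in patch]
        normalized.append([(v + 1.0) * 0.4 + 0.1 for v in scaled])
    return normalized


# Made-up 4-pixel "patches", one list per patch.
patches = [
    [0.0, 1.0, 2.0, 3.0],
    [5.0, 5.0, 5.0, 5.0],
    [-10.0, 0.0, 0.0, 10.0],
]
normalized = normalize_patches(patches)
```

A patch whose pixels are all equal ends up at 0.5 everywhere, and every value stays inside [0.1, 0.9], so the targets keep away from the saturated ends of the sigmoid output.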

sparseAutoencoderCost.m

    function [cost,grad] = sparseAutoencoderCost(theta, visibleSize, hiddenSize, ...
                                                 lambda, sparsityParam, beta, data)

    % visibleSize: the number of input units (probably 64)
    % hiddenSize: the number of hidden units (probably 25)
    % lambda: weight decay parameter
    % sparsityParam: The desired average activation for the hidden units (denoted in the lecture
    %                notes by the greek alphabet rho, which looks like a lower-case "p").
    % beta: weight of sparsity penalty term
    % data: Our 64x10000 matrix containing the training data. So, data(:,i) is the i-th training example.

    % The input theta is a vector (because minFunc expects the parameters to be a vector).
    % We first convert theta to the (W1, W2, b1, b2) matrix/vector format, so that this
    % follows the notation convention of the lecture notes.

    W1 = reshape(theta(1:hiddenSize*visibleSize), hiddenSize, visibleSize);
    W2 = reshape(theta(hiddenSize*visibleSize+1:2*hiddenSize*visibleSize), visibleSize, hiddenSize);
    b1 = theta(2*hiddenSize*visibleSize+1:2*hiddenSize*visibleSize+hiddenSize);
    b2 = theta(2*hiddenSize*visibleSize+hiddenSize+1:end);

    % Cost and gradient variables (your code needs to compute these values).
    % Here, we initialize them to zeros.
    cost = 0;
    W1grad = zeros(size(W1));
    W2grad = zeros(size(W2));
    b1grad = zeros(size(b1));
    b2grad = zeros(size(b2));

    %% ---------- YOUR CODE HERE --------------------------------------
    %  Instructions: Compute the cost/optimization objective J_sparse(W,b) for the Sparse Autoencoder,
    %                and the corresponding gradients W1grad, W2grad, b1grad, b2grad.
    %
    % W1grad, W2grad, b1grad and b2grad should be computed using backpropagation.
    % Note that W1grad has the same dimensions as W1, b1grad has the same dimensions
    % as b1, etc. Your code should set W1grad to be the partial derivative of J_sparse(W,b) with
    % respect to W1. I.e., W1grad(i,j) should be the partial derivative of J_sparse(W,b)
    % with respect to the input parameter W1(i,j). Thus, W1grad should be equal to the term
    % [(1/m) \Delta W^{(1)} + \lambda W^{(1)}] in the last block of pseudo-code in Section 2.2
    % of the lecture notes (and similarly for W2grad, b1grad, b2grad).
    %
    % Stated differently, if we were using batch gradient descent to optimize the parameters,
    % the gradient descent update to W1 would be W1 := W1 - alpha * W1grad, and similarly for W2, b1, b2.
    %

    % Vectorized (matrix) implementation; much faster than looping over the samples
    Jcost = 0;    % squared reconstruction error
    Jweight = 0;  % weight decay (regularization) penalty
    Jsparse = 0;  % sparsity penalty
    [n, m] = size(data);  % m = number of samples (10000 here), n = sample dimension (64 here)

    % Feedforward pass: compute z (linear combination) and a (activation)
    % for every hidden and output unit. Each column of data is one sample.
    % Each column of W1*data is the hidden-layer input of one sample under W1;
    % repmat tiles the column vector b1 into a matrix of m copies of b1.
    z2 = W1*data + repmat(b1,1,m);
    a2 = sigmoid(z2);
    z3 = W2*a2 + repmat(b2,1,m);
    a3 = sigmoid(z3);

    % Average squared error between the output and the target (the input itself)
    Jcost = (0.5/m)*sum(sum((a3-data).^2));

    % Weight decay term
    Jweight = (1/2)*(sum(sum(W1.^2))+sum(sum(W2.^2)));

    % Sparsity penalty
    rho_hat = (1/m)*sum(a2,2);
    Jsparse = sum(sparsityParam.*log(sparsityParam./rho_hat)+(1-sparsityParam).*log((1-sparsityParam)./(1-rho_hat)));

    % Total cost
    cost = Jcost + lambda*Jweight + beta*Jsparse;

    % Backpropagate the error terms
    delta3 = -(data-a3).*fprime(a3);  % each column is the error term of one sample
    sterm = beta*(-sparsityParam./rho_hat+(1-sparsityParam)./(1-rho_hat));
    delta2 = (W2'*delta3 + repmat(sterm,1,m)).*fprime(a2);

    % Gradients
    W2grad = delta3*a2';
    W1grad = delta2*data';
    W2grad = W2grad/m + lambda*W2;
    W1grad = W1grad/m + lambda*W1;
    b2grad = sum(delta3,2)/m;  % the derivative w.r.t. b is a vector, so sum delta3 over its columns (samples)
    b1grad = sum(delta2,2)/m;

    %%----------------------------------
    % % Per-sample computation, non-vectorized implementation
    % [n m] = size(data);
    % a2 = zeros(hiddenSize,m);
    % a3 = zeros(visibleSize,m);
    % Jcost = 0;                       % squared error term
    % rho_hat = zeros(hiddenSize,1);   % average activation of each hidden unit
    % Jweight = 0;                     % weight decay term
    % Jsparse = 0;                     % sparsity cost
    %
    % for i=1:m
    %     % feedforward pass
    %     z2(:,i) = W1*data(:,i)+b1;
    %     a2(:,i) = sigmoid(z2(:,i));
    %     z3(:,i) = W2*a2(:,i)+b2;
    %     a3(:,i) = sigmoid(z3(:,i));
    %     Jcost = Jcost+sum((a3(:,i)-data(:,i)).*(a3(:,i)-data(:,i)));
    %     rho_hat = rho_hat+a2(:,i);   % accumulate the hidden activations over the samples
    % end
    %
    % rho_hat = rho_hat/m;             % average activation
    % Jsparse = sum(sparsityParam*log(sparsityParam./rho_hat) + (1-sparsityParam)*log((1-sparsityParam)./(1-rho_hat))); % sparsity cost
    % Jweight = sum(W1(:).*W1(:))+sum(W2(:).*W2(:));  % weight decay term
    % cost = Jcost/2/m + Jweight/2*lambda + beta*Jsparse;  % total cost
    %
    % for i=1:m
    %     % backpropagation
    %     delta3 = -(data(:,i)-a3(:,i)).*fprime(a3(:,i));
    %     delta2 = (W2'*delta3 +beta*(-sparsityParam./rho_hat+(1-sparsityParam)./(1-rho_hat))).*fprime(a2(:,i));
    %
    %     W2grad = W2grad + delta3*a2(:,i)';
    %     W1grad = W1grad + delta2*data(:,i)';
    %     b2grad = b2grad + delta3;
    %     b1grad = b1grad + delta2;
    % end
    % % gradients
    % W1grad = W1grad/m + lambda*W1;
    % W2grad = W2grad/m + lambda*W2;
    % b1grad = b1grad/m;
    % b2grad = b2grad/m;

    % -------------------------------------------------------------------
    % After computing the cost and gradient, we will convert the gradients back
    % to a vector format (suitable for minFunc). Specifically, we will unroll
    % your gradient matrices into a vector.
    grad = [W1grad(:) ; W2grad(:) ; b1grad(:) ; b2grad(:)];

    end

    %% Implementation of the derivative of f(z)
    % f(z) = sigmoid(z) = 1./(1+exp(-z))
    % a = 1./(1+exp(-z))
    % f'(z) = a.*(1-a)
    function dz = fprime(a)
        dz = a.*(1-a);
    end

    %%
    %-------------------------------------------------------------------
    % Here's an implementation of the sigmoid function, which you may find useful
    % in your computation of the costs and the gradients. This inputs a (row or
    % column) vector (say (z1, z2, z3)) and returns (f(z1), f(z2), f(z3)).
    function sigm = sigmoid(x)
        sigm = 1 ./ (1 + exp(-x));
    end
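The fprime helper above takes the activation a rather than z, relying on the identity f'(z) = a(1 − a) for the sigmoid. A quick numerical sanity check of that identity, in plain Python with hypothetical helper names and arbitrarily chosen test points, could look like this:

```python
import math


def sigmoid(z):
    """Logistic function f(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def fprime_from_activation(a):
    """Derivative of the sigmoid expressed through its output a = f(z)."""
    return a * (1.0 - a)


def central_difference(f, z, eps=1e-5):
    """Finite-difference estimate of f'(z)."""
    return (f(z + eps) - f(z - eps)) / (2.0 * eps)


test_points = [-3.0, -0.5, 0.0, 0.5, 3.0]   # arbitrary points for the demo
max_gap = max(
    abs(fprime_from_activation(sigmoid(z)) - central_difference(sigmoid, z))
    for z in test_points
)
```

Passing the activation instead of z avoids recomputing the exponential during backpropagation, since a2 and a3 are already available from the forward pass.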

computeNumericalGradient.m

- <span style="font-size:14px;">function numgrad = computeNumericalGradient(J, theta)

- % numgrad = computeNumericalGradient(J, theta)

- % theta: a vector of parameters

- % J: a function that outputs a real-number. Calling y = J(theta) will return the

- % function value at theta.

- % Initialize numgrad with zeros

- numgrad = zeros(size(theta));

- %% ---------- YOUR CODE HERE --------------------------------------