Deep Learning: Model Building

阿新 • Published: 2018-10-23

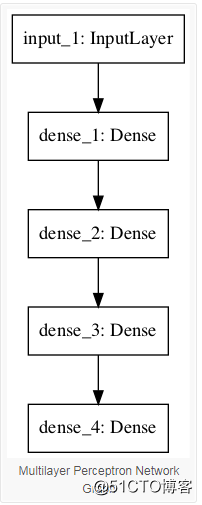

- Standard model

      from keras.utils import plot_model
      from keras.models import Model
      from keras.layers import Input, Dense

      # input layer: 10 features per sample
      visible = Input(shape=(10,))
      # three fully connected hidden layers
      hidden1 = Dense(10, activation='relu')(visible)
      hidden2 = Dense(20, activation='relu')(hidden1)
      hidden3 = Dense(10, activation='relu')(hidden2)
      # single sigmoid output unit
      output = Dense(1, activation='sigmoid')(hidden3)
      model = Model(inputs=visible, outputs=output)
      # summarize layers (summary() prints the table itself)
      model.summary()
      # plot graph (requires pydot and graphviz)
      plot_model(model, to_file='multilayer_perceptron_graph.png')
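As a cross-check on what `model.summary()` reports for the standard model, the parameter count of each `Dense` layer can be worked out by hand: a fully connected layer with bias enabled holds `inputs * units + units` values. The sketch below is plain Python and does not call Keras; the helper name `dense_params` is hypothetical and introduced only for illustration.

```python
def dense_params(n_inputs, units):
    """Weights plus biases of a fully connected layer (bias enabled)."""
    return n_inputs * units + units

# (inputs, units) for hidden1, hidden2, hidden3 and output in the model above
layer_sizes = [(10, 10), (10, 20), (20, 10), (10, 1)]
per_layer = [dense_params(n_in, units) for n_in, units in layer_sizes]
total = sum(per_layer)
print(per_layer, total)
```

If the total printed by the summary differs from this hand count, the layer wiring is probably not what was intended.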

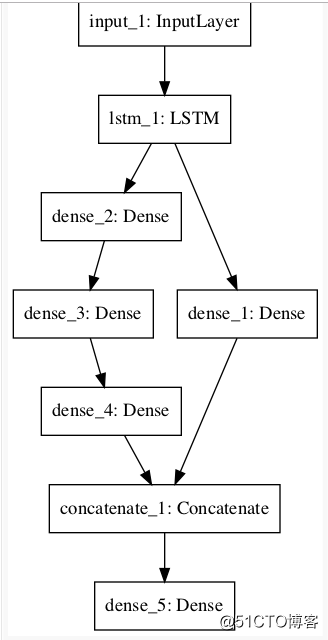

- Shared-layer model

      from keras.utils import plot_model
      from keras.models import Model
      from keras.layers import Input, Dense, LSTM, concatenate

      # input layer: sequences of 100 time steps with 1 feature each
      visible = Input(shape=(100, 1))
      # shared feature extraction layer
      extract1 = LSTM(10)(visible)
      # first interpretation branch
      interp1 = Dense(10, activation='relu')(extract1)
      # second interpretation branch
      interp11 = Dense(10, activation='relu')(extract1)
      interp12 = Dense(20, activation='relu')(interp11)
      interp13 = Dense(10, activation='relu')(interp12)
      # merge both interpretations
      merge = concatenate([interp1, interp13])
      # output
      output = Dense(1, activation='sigmoid')(merge)
      model = Model(inputs=visible, outputs=output)
      # summarize layers (summary() prints the table itself)
      model.summary()
      # plot graph (requires pydot and graphviz)
      plot_model(model, to_file='shared_feature_extractor.png')
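Both interpretation branches in the shared-layer model read the same LSTM output, so the feature extractor's weights are counted only once. A rough hand count in plain Python follows, assuming the usual LSTM layout of four gates with biases enabled; the helper names `lstm_params` and `dense_params` are hypothetical and not part of Keras.

```python
def lstm_params(input_dim, units):
    # four gates, each with input weights, recurrent weights and a bias vector
    return 4 * (units * (input_dim + units) + units)

def dense_params(n_inputs, units):
    # weights plus biases of a fully connected layer
    return n_inputs * units + units

extract1 = lstm_params(1, 10)        # shared by both branches
branch_a = dense_params(10, 10)      # interp1
branch_b = (dense_params(10, 10)     # interp11
            + dense_params(10, 20)   # interp12
            + dense_params(20, 10))  # interp13
merged_width = 10 + 10               # concatenate([interp1, interp13])
output = dense_params(merged_width, 1)
total = extract1 + branch_a + branch_b + output
print(extract1, branch_a, branch_b, output, total)
```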

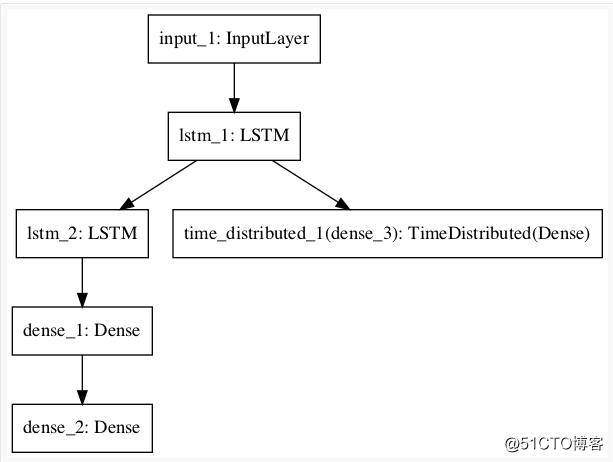

- Multiple-output model

      from keras.utils import plot_model
      from keras.models import Model
      from keras.layers import Input, Dense, LSTM, TimeDistributed

      # input layer
      visible = Input(shape=(100, 1))
      # feature extraction
      extract = LSTM(10, return_sequences=True)(visible)
      # classification output
      class11 = LSTM(10)(extract)
      class12 = Dense(10, activation='relu')(class11)
      output1 = Dense(1, activation='sigmoid')(class12)
      # sequence output
      output2 = TimeDistributed(Dense(1, activation='linear'))(extract)
      # output
      model = Model(inputs=visible, outputs=[output1, output2])
      # summarize layers (summary() prints the table itself)
      model.summary()
      # plot graph (requires pydot and graphviz)
      plot_model(model, to_file='multiple_outputs.png')
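The two outputs of the multiple-output model have different shapes, which matters when preparing training targets. The sketch below traces the shapes by hand in plain Python; the function `output_shapes` and the batch size of 32 are illustrative assumptions, not Keras calls.

```python
def output_shapes(batch, timesteps=100, units=10):
    # LSTM with return_sequences=True keeps the time axis
    extract = (batch, timesteps, units)
    # LSTM without return_sequences returns only the last step
    class11 = (batch, units)
    # Dense(10) then Dense(1): one score per sample
    output1 = (class11[0], 1)
    # TimeDistributed(Dense(1)): one value per time step
    output2 = (extract[0], extract[1], 1)
    return output1, output2

shape1, shape2 = output_shapes(32)
print(shape1, shape2)
```

Training such a model would therefore typically need one target array per output, shaped to match each of these.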
