Classifying the MNIST dataset with an LSTM

阿新 • Published: 2019-01-14

Reference: https://morvanzhou.github.io/tutorials/machine-learning/tensorflow/5-08-RNN2/

1. Set up the RNN parameters

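The RNN reads each image one row of pixels at a time, from the first row to the last, and then classifies it. Each 28×28 MNIST image is therefore treated as a sequence of 28 time steps, and each time step is one row of 28 pixel values.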
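The plain-Python sketch below (no TensorFlow) illustrates this row-by-row view. It turns one flattened 784-pixel image into 28 time steps of 28 pixels each. Flattened 784-pixel images are the format `input_data` returns. The helper name `image_to_sequence` is hypothetical; in the TensorFlow code a `reshape` does the same job.

```python
def image_to_sequence(flat_pixels, n_steps=28, n_inputs=28):
    """Split a flat, row-major image into n_steps rows of n_inputs pixels.

    Hypothetical helper: each returned row is one RNN time step.
    """
    if len(flat_pixels) != n_steps * n_inputs:
        raise ValueError("expected %d pixels, got %d" % (n_steps * n_inputs, len(flat_pixels)))
    return [flat_pixels[t * n_inputs:(t + 1) * n_inputs] for t in range(n_steps)]


# Dummy image whose pixel value equals its index, so the rows are easy to check.
flat = list(range(28 * 28))
sequence = image_to_sequence(flat)
print(len(sequence), len(sequence[0]))  # 28 28
print(sequence[1][:3])                  # [28, 29, 30]: time step 1 is the second pixel row
```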

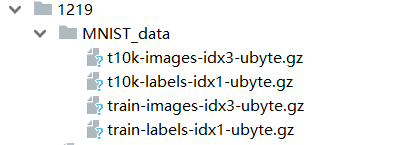

(1) Import the MNIST data and set the RNN hyperparameters

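Download: http://yann.lecun.com/exdb/mnist/ (`input_data.read_data_sets` below downloads the files into the `MNIST_data` directory if they are not already there.)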

```python
import tensorflow as tf  # TensorFlow 1.x API
from tensorflow.examples.tutorials.mnist import input_data

tf.set_random_seed(1)  # set random seed

# Load the data (labels as one-hot vectors)
mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

# hyperparameters
lr = 0.001               # learning rate
training_iters = 100000  # upper limit on training examples seen (step * batch_size)
batch_size = 128
n_inputs = 28            # MNIST data input (img shape: 28*28): pixels per row
n_steps = 28             # time steps: rows per image
n_hidden_units = 128     # neurons in hidden layer
n_classes = 10           # MNIST classes (0-9 digits)
```
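The training loop in section 4 runs `while step * batch_size < training_iters`, so `training_iters` counts training images, not steps. The plain-Python check below works out what these hyperparameters imply. It assumes the default `read_data_sets` split, which puts 55,000 images in `mnist.train`.

```python
import math

training_iters = 100000
batch_size = 128
train_size = 55000  # assumption: default size of mnist.train from read_data_sets

# Loop iterations: steps 0, 1, ... while step * batch_size < training_iters.
n_steps_run = math.ceil(training_iters / batch_size)
# Accuracy is printed whenever step % 20 == 0.
n_prints = len(range(0, n_steps_run, 20))
images_seen = n_steps_run * batch_size
epochs = images_seen / train_size

print(n_steps_run, n_prints, images_seen, round(epochs, 2))  # 782 40 100096 1.82
```

The 40 printouts match the 40 accuracy values listed in section 5. The run covers a little under two passes over the training set.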

(2) Define the placeholders for x and y and the initial values of the weights and biases

```python
# x: (batch, 28 steps, 28 inputs); y: one-hot labels (batch, 10)
x = tf.placeholder(tf.float32, [None, n_steps, n_inputs])
y = tf.placeholder(tf.float32, [None, n_classes])

weights = {
    # shape (28, 128)
    'in': tf.Variable(tf.random_normal([n_inputs, n_hidden_units])),
    # shape (128, 10)
    'out': tf.Variable(tf.random_normal([n_hidden_units, n_classes]))
}
biases = {
    # shape (128, )
    'in': tf.Variable(tf.constant(0.1, shape=[n_hidden_units, ])),
    # shape (10, )
    'out': tf.Variable(tf.constant(0.1, shape=[n_classes, ]))
}
```
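The `in` and `out` projections are ordinary dense layers, so their sizes follow directly from the shapes in the comments. The weights are drawn from `tf.random_normal` (mean 0, standard deviation 1 by default) and the biases start at 0.1. Here is a small plain-Python tally of the parameter counts. The helper `dense_params` is hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights (n_in x n_out) plus one bias per output unit."""
    return n_in * n_out + n_out

n_inputs, n_hidden_units, n_classes = 28, 128, 10

in_layer = dense_params(n_inputs, n_hidden_units)    # weights['in'] + biases['in']
out_layer = dense_params(n_hidden_units, n_classes)  # weights['out'] + biases['out']
print(in_layer, out_layer)  # 3712 1290
```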

2. Define the main structure of the RNN

The RNN has three components: input_layer, cell and output_layer.
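The input_layer applies one shared weight matrix to every time step. The code that follows does this by flattening (batch, steps, inputs) to (batch*steps, inputs), multiplying, and reshaping back. The plain-Python sketch below uses tiny made-up sizes and values. It checks that this flatten/reshape order gives the same result as looping over every (batch, step) pair. `matvec_row` is a hypothetical helper.

```python
def matvec_row(row, W, b):
    """One dense-layer application: row (len n_in) times W (n_in x n_out) plus b."""
    return [sum(r * W[i][j] for i, r in enumerate(row)) + b[j] for j in range(len(b))]

# Tiny made-up sizes: batch=2, steps=3, inputs=2, hidden=4
batch, steps, n_in, n_hidden = 2, 3, 2, 4
X = [[[float(bi * 10 + t * 2 + k) for k in range(n_in)] for t in range(steps)] for bi in range(batch)]
W = [[0.5 * (i + 1) * (j - 1) for j in range(n_hidden)] for i in range(n_in)]
b = [0.1] * n_hidden

# Route 1 (what the TF code does): flatten to (batch*steps, n_in), multiply, reshape back.
flat = [X[bi][t] for bi in range(batch) for t in range(steps)]
flat_out = [matvec_row(row, W, b) for row in flat]
route1 = [flat_out[bi * steps:(bi + 1) * steps] for bi in range(batch)]

# Route 2: apply the same layer to every (batch, step) pair explicitly.
route2 = [[matvec_row(X[bi][t], W, b) for t in range(steps)] for bi in range(batch)]

print(route1 == route2)                                 # True
print(len(route1), len(route1[0]), len(route1[0][0]))   # 2 3 4
```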

```python
def RNN(X, weights, biases):
    # (1) input_layer
    # X starts out as 3-D data; reshape it to 2-D so it can be multiplied by the weight matrix
    # X ==> (128 batches * 28 steps, 28 inputs)
    X = tf.reshape(X, [-1, n_inputs])
    # X_in = X * W_in + b_in
    X_in = tf.matmul(X, weights['in']) + biases['in']
    # X_in ==> (128 batches, 28 steps, 128 hidden), back to 3-D
    X_in = tf.reshape(X_in, [-1, n_steps, n_hidden_units])

    # (2) cell
    # Use a basic LSTM cell.
    lstm_cell = tf.nn.rnn_cell.BasicLSTMCell(n_hidden_units, forget_bias=1.0, state_is_tuple=True)
    # All-zero initial state. Its batch dimension is fixed to batch_size,
    # so every batch fed to this graph must contain exactly batch_size images.
    init_state = lstm_cell.zero_state(batch_size, dtype=tf.float32)

    # (3) output_layer
    outputs, states = tf.nn.dynamic_rnn(lstm_cell, X_in, initial_state=init_state, time_major=False)
    # states is an LSTMStateTuple (c, h); states[1] is h, the hidden state after the last time step
    results = tf.matmul(states[1], weights['out']) + biases['out']
    return results
```
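The sketch below writes out one LSTM time step with `forget_bias` in plain Python. It follows the standard LSTM update in the gate layout used by TensorFlow's `BasicLSTMCell` source, as I understand it. Gates `i, j, f, o` come from one matrix product over `[x, h]`, and `forget_bias` is added to the forget gate. The names `lstm_step` and `run_lstm` are hypothetical, and the weights are tiny made-up values, not trained ones. Unrolling the cell over a sequence also shows why `states[1]` (h) equals the last entry of `outputs`.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h, c, W, b, forget_bias=1.0):
    """One LSTM time step (gate order i, j, f, o, as in BasicLSTMCell).

    x: input vector; h, c: previous hidden and cell state (length n);
    W: (len(x) + n) x 4n weight matrix; b: bias of length 4n.
    """
    n = len(h)
    xh = x + h  # concatenate input and previous hidden state
    z = [sum(v * W[r][k] for r, v in enumerate(xh)) + b[k] for k in range(4 * n)]
    i, j, f, o = z[:n], z[n:2 * n], z[2 * n:3 * n], z[3 * n:]
    new_c = [c[k] * sigmoid(f[k] + forget_bias) + sigmoid(i[k]) * math.tanh(j[k]) for k in range(n)]
    new_h = [math.tanh(new_c[k]) * sigmoid(o[k]) for k in range(n)]
    return new_h, new_c

def run_lstm(xs, W, b, n):
    """Unroll over a sequence from a zero state; return (outputs, (c, h))."""
    h, c = [0.0] * n, [0.0] * n
    outputs = []
    for x in xs:
        h, c = lstm_step(x, h, c, W, b)
        outputs.append(h)
    return outputs, (c, h)

# Tiny made-up sizes: 2 inputs per step, 3 hidden units, 4 time steps.
n_in, n = 2, 3
W = [[0.1 * ((r + k) % 5 - 2) for k in range(4 * n)] for r in range(n_in + n)]
b = [0.0] * (4 * n)
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]

outputs, states = run_lstm(xs, W, b, n)
print(states[1] == outputs[-1])  # True: the final h is the last output
```

In the real model the cell input is the 128-dimensional `X_in` and the weights are learned. The structure of the update is the same.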

3. Compute the cost and train_op

```python
pred = RNN(x, weights, biases)
cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=y))
```
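`softmax_cross_entropy_with_logits` applies a softmax to each row of `pred` and takes the cross-entropy against the one-hot label. `reduce_mean` then averages those losses over the batch. The sketch below does the same computation in plain Python, using a numerically stable log-sum-exp. The helper names are hypothetical.

```python
import math

def softmax_cross_entropy(logits, one_hot):
    """-sum(label * log softmax(logits)) for one example, computed stably."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(t * (z - log_sum_exp) for z, t in zip(logits, one_hot))

def mean_cost(batch_logits, batch_labels):
    """Average of the per-example losses, like tf.reduce_mean."""
    losses = [softmax_cross_entropy(l, y) for l, y in zip(batch_logits, batch_labels)]
    return sum(losses) / len(losses)

# Two made-up examples with 3 classes (the real model has 10).
logits = [[2.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
labels = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(mean_cost(logits, labels))
```

With uniform logits, the loss of an example is log(number of classes). That is why an untrained 10-class model starts with a cost of roughly log 10.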

```python
train_op = tf.train.AdamOptimizer(lr).minimize(cost)
```
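`tf.train.AdamOptimizer(lr)` keeps its other arguments at their defaults: `beta1=0.9`, `beta2=0.999`, `epsilon=1e-08`. The sketch below performs one Adam update on a scalar parameter in plain Python. It uses the form given in the TensorFlow 1.x `AdamOptimizer` documentation, where a bias-corrected step size `lr_t` is computed first. `adam_step` is a hypothetical name. The example shows that the first step moves the parameter by about `lr`, whatever the size of the gradient.

```python
import math

def adam_step(theta, g, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    lr_t = lr * math.sqrt(1 - beta2 ** t) / (1 - beta1 ** t)
    m = beta1 * m + (1 - beta1) * g      # running mean of gradients
    v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
    theta = theta - lr_t * m / (math.sqrt(v) + eps)
    return theta, m, v

# First step from theta = 1.0 with two very different gradient sizes.
for g in (2.0, 200.0):
    theta, m, v = adam_step(1.0, g, 0.0, 0.0, t=1)
    print(g, 1.0 - theta)  # step size is about lr = 0.001 in both cases
```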

4. Train the RNN

```python
correct_pred = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))
```
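`correct_pred` compares the index of the largest logit with the index of the 1 in the one-hot label, and `accuracy` is the fraction of matches. With 128 images per batch, every accuracy value is a multiple of 1/128. That is why the numbers printed in section 5 look like 0.265625 (34/128). The sketch below computes the same accuracy in plain Python. `argmax` and `accuracy` here are hypothetical helpers, not the TensorFlow ops.

```python
def argmax(values):
    """Index of the largest value (first occurrence on ties)."""
    return max(range(len(values)), key=lambda k: values[k])

def accuracy(batch_logits, batch_labels):
    """Fraction of examples whose predicted class matches the one-hot label."""
    correct = [argmax(p) == argmax(y) for p, y in zip(batch_logits, batch_labels)]
    return sum(correct) / len(correct)

preds = [[0.1, 2.0, -1.0], [3.0, 0.0, 0.5], [0.2, 0.1, 0.9], [1.0, 1.5, 0.0]]
labels = [[0, 1, 0], [1, 0, 0], [0, 1, 0], [0, 1, 0]]
print(accuracy(preds, labels))  # 0.75 (the third example is misclassified)

print(34 / 128)  # 0.265625, the first value printed in section 5
```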

```python
init = tf.global_variables_initializer()  # initialize all variables

with tf.Session() as sess:
    sess.run(init)
    step = 0
    while step * batch_size < training_iters:
        batch_xs, batch_ys = mnist.train.next_batch(batch_size)
        # flat (128, 784) batch -> (128, 28 steps, 28 inputs)
        batch_xs = batch_xs.reshape([batch_size, n_steps, n_inputs])
        sess.run([train_op], feed_dict={
            x: batch_xs,
            y: batch_ys,
        })
        if step % 20 == 0:
            # accuracy on the batch just trained on (not on the test set)
            print(sess.run(accuracy, feed_dict={
                x: batch_xs,
                y: batch_ys,
            }))
        step += 1
```
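The loop above only reports accuracy on the batch it just trained on. Evaluating on `mnist.test` (10,000 images) has to respect the fixed `batch_size` built into `zero_state`. One option is to feed the test set in full batches of 128 and average the per-batch accuracies. Because the batches are equal-sized, that average is the accuracy over the covered images. The hypothetical plain-Python helper below only works out the slices. Inside the session, each slice would be reshaped to (128, 28, 28) and passed to `sess.run(accuracy, ...)`.

```python
def full_batches(n_examples, batch_size):
    """(start, stop) index pairs for every complete batch; leftovers are dropped."""
    return [(s, s + batch_size) for s in range(0, n_examples - batch_size + 1, batch_size)]

slices = full_batches(10000, 128)
covered = slices[-1][1]
print(len(slices), covered, 10000 - covered)  # 78 9984 16
```

This design skips the last 16 test images. Covering them would require building the initial state from the actual batch size instead of the constant `batch_size`.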

5. Results

Accuracy on the current training batch, printed every 20 steps:

```
0.265625
0.7265625
0.828125
0.8828125
0.84375
0.859375
0.8984375
0.890625
0.84375
0.90625
0.921875
0.90625
0.9140625
0.9140625
0.9375
0.9609375
0.953125
0.921875
0.9453125
0.96875
0.9375
0.9609375
0.890625
0.984375
0.953125
0.953125
0.9453125
0.9453125
0.96875
0.9375
0.953125
0.96875
0.9375
0.9921875
0.9609375
0.9609375
0.953125
0.9609375
0.96875
0.96875

Process finished with exit code 0
```
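Each printed value is the accuracy on one batch of 128 images, so multiplying by 128 recovers the number of correctly classified images. The short plain-Python check below does this for the last ten printed values, copied from the output above, and averages them.

```python
last_ten = [0.953125, 0.96875, 0.9375, 0.9921875, 0.9609375,
            0.9609375, 0.953125, 0.9609375, 0.96875, 0.96875]

correct_counts = [round(a * 128) for a in last_ten]
mean_acc = sum(last_ten) / len(last_ten)

print(correct_counts)  # [122, 124, 120, 127, 123, 123, 122, 123, 124, 124]
print(mean_acc)        # 0.9625
```

These are single-batch training accuracies, so they fluctuate (for example, 0.890625 appears well into training). A test-set evaluation would give a more reliable figure.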