The Simplest Way to Play Real-Time PCM Audio with OpenSL ES

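This code is written in C for Android, and it can be adapted for other platforms. Since it is meant to be the simplest possible version, it is very easy to use; the callback, for example, is a single line of code. Here are a few things to watch out for when using it: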

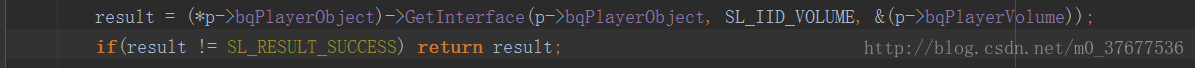

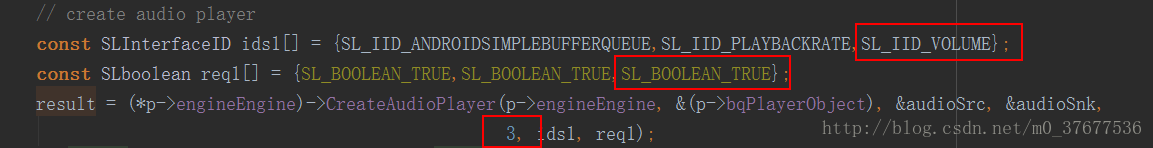

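1. If you want to use OpenSL ES features such as volume control:

Calling GetInterface with SL_IID_VOLUME on its own is not enough: the bqPlayerVolume you get back is null. SL_IID_VOLUME also has to be enabled when the player is created, by listing it in the interface ID array passed to CreateAudioPlayer (ids1, with a matching SL_BOOLEAN_TRUE in req1, in Opensl_io.c).

This is a pitfall I ran into; I mention it so you can skip it.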

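2. OpenSL ES plays audio at a fixed rate, so new data has to be fed to it at a matching rate. Like OpenAL, OpenSL ES has a playback buffer queue, and each time one queued PCM packet finishes playing, a callback fires. That callback is bqPlayerCallback, which is called every time a buffer finishes playing.

My handling there is very simple: a packet counter. Each PCM packet passed in increments the counter, and each callback decrements it, so the counter is the number of PCM packets the player still has waiting to be played. Use this counter to control how fast PCM data is fed in. If you wait until the queue has drained (the counter reaches zero) before adding more data, playback will have audible gaps.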
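
The counter idea can be sketched in plain C without any OpenSL ES calls. This is a minimal sketch, not the author's code: `pcm_pacer`, `pacer_try_reserve`, `pacer_on_played` and `pacer_is_starved` are hypothetical names, and `MAX_QUEUED` is assumed to be 2 only because the buffer queue in Opensl_io.c is created with 2 buffers. The counter is atomic on the assumption that the callback runs on a different thread from the one feeding data.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Assumed limit: matches the queue depth of 2 used in openSLPlayOpen. */
#define MAX_QUEUED 2

typedef struct {
    atomic_int queued;  /* packets handed to the player and not yet played */
} pcm_pacer;

static void pacer_init(pcm_pacer *pc)
{
    atomic_init(&pc->queued, 0);
}

/* Feeding side: returns true and counts the packet if the queue has room.
 * Call this before Enqueue; when it returns false, wait and retry later. */
static bool pacer_try_reserve(pcm_pacer *pc)
{
    int cur = atomic_load(&pc->queued);
    while (cur < MAX_QUEUED) {
        /* On failure, cur is refreshed with the current value and we retry. */
        if (atomic_compare_exchange_weak(&pc->queued, &cur, cur + 1))
            return true;
    }
    return false;
}

/* Callback side: one packet finished playing. */
static void pacer_on_played(pcm_pacer *pc)
{
    atomic_fetch_sub(&pc->queued, 1);
}

/* True when nothing is queued, i.e. playback is about to have a gap. */
static bool pacer_is_starved(pcm_pacer *pc)
{
    return atomic_load(&pc->queued) == 0;
}
```

In a real player, `pacer_on_played` would take the place of the decrement in bqPlayerCallback, and the feeding thread would call `pacer_try_reserve` before each Enqueue so that the queue is topped up before it runs empty.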

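3. Careless handling here causes a memory leak: the addresses of the packets passed to OpenSL ES have to be kept in an array or a linked list, and each packet should be freed only after it has finished playing.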
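
One way to keep track of the packet addresses is a small ring buffer of pointers. The sketch below is an illustration under stated assumptions, not the author's implementation: all names (`pending_ring`, `pending_push`, `pending_pop`, `pending_free_all`) and the capacity of 8 are hypothetical. It assumes packets finish playing in the order they were enqueued, that exactly one thread pushes (the feeder) and exactly one thread pops (the callback), and that each packet was allocated with malloc.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical capacity: a power of two, at least the player's queue depth. */
#define PENDING_SLOTS 8u

typedef struct {
    void *slots[PENDING_SLOTS];
    atomic_size_t head;  /* advanced only by the callback (oldest packet) */
    atomic_size_t tail;  /* advanced only by the feeding thread */
} pending_ring;

static void pending_init(pending_ring *r)
{
    atomic_init(&r->head, 0);
    atomic_init(&r->tail, 0);
}

/* Number of packets currently owned by the player. */
static size_t pending_count(pending_ring *r)
{
    size_t head = atomic_load(&r->head);
    return atomic_load(&r->tail) - head;
}

/* Feeder: remember a packet just before handing it to Enqueue. */
static bool pending_push(pending_ring *r, void *buf)
{
    size_t tail = atomic_load(&r->tail);
    if (tail - atomic_load(&r->head) == PENDING_SLOTS)
        return false;                       /* ring is full */
    r->slots[tail % PENDING_SLOTS] = buf;
    atomic_store(&r->tail, tail + 1);       /* publish the new entry */
    return true;
}

/* Callback: detach the oldest packet, which has just finished playing. */
static void *pending_pop(pending_ring *r)
{
    size_t head = atomic_load(&r->head);
    if (head == atomic_load(&r->tail))
        return NULL;                        /* nothing pending */
    void *buf = r->slots[head % PENDING_SLOTS];
    atomic_store(&r->head, head + 1);
    return buf;
}

/* Shutdown: free whatever is still pending once the player is destroyed. */
static void pending_free_all(pending_ring *r)
{
    void *buf;
    while ((buf = pending_pop(r)) != NULL)
        free(buf);
}
```

With this in place the callback would pop one pointer per invocation and release it, either directly or by handing it back to the feeding thread if freeing memory inside the audio callback is a concern. `pending_count` gives the same number as the packet counter described in point 2.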

Opensl_io.h

//
// Created by huizai on 2017/12/4.
//

#ifndef FFMPEG_DEMO_OPENSL_IO_H
#define FFMPEG_DEMO_OPENSL_IO_H

#include <SLES/OpenSLES.h>
#include <SLES/OpenSLES_Android.h>

typedef struct opensl_stream {
    // engine interfaces
    SLObjectItf engineObject;
    SLEngineItf engineEngine;

    // output mix interfaces
    // ... (the header listing breaks off here in the source)

Opensl_io.c

//
// Created by huizai on 2017/12/4.
//

#include <stdlib.h>   // calloc, free
#include "Opensl_io.h"

static void bqPlayerCallback(SLAndroidSimpleBufferQueueItf bq, void *context);

// creates the OpenSL ES audio engine
static SLresult openSLCreateEngine(OPENSL_STREAM *p)
{
    SLresult result;

    // create engine
    result = slCreateEngine(&(p->engineObject), 0, NULL, 0, NULL, NULL);
    if (result != SL_RESULT_SUCCESS) goto engine_end;

    // realize the engine
    result = (*p->engineObject)->Realize(p->engineObject, SL_BOOLEAN_FALSE);
    if (result != SL_RESULT_SUCCESS) goto engine_end;

    // get the engine interface, which is needed in order to create other objects
    result = (*p->engineObject)->GetInterface(p->engineObject, SL_IID_ENGINE, &(p->engineEngine));
    if (result != SL_RESULT_SUCCESS) goto engine_end;

engine_end:
    return result;
}

// opens the OpenSL ES device for output
static SLresult openSLPlayOpen(OPENSL_STREAM *p)
{
    SLresult result;
    SLuint32 sr = p->sampleRate;
    SLuint32 channels = p->outchannels;

    if (channels) {
        // configure audio source: a buffer queue that holds at most 2 buffers
        SLDataLocator_AndroidSimpleBufferQueue loc_bufq = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};

        // map the sample rate in Hz to the matching OpenSL ES constant
        switch (sr) {
            case 8000:   sr = SL_SAMPLINGRATE_8;      break;
            case 11025:  sr = SL_SAMPLINGRATE_11_025; break;
            case 16000:  sr = SL_SAMPLINGRATE_16;     break;
            case 22050:  sr = SL_SAMPLINGRATE_22_05;  break;
            case 24000:  sr = SL_SAMPLINGRATE_24;     break;
            case 32000:  sr = SL_SAMPLINGRATE_32;     break;
            case 44100:  sr = SL_SAMPLINGRATE_44_1;   break;
            case 48000:  sr = SL_SAMPLINGRATE_48;     break;
            case 64000:  sr = SL_SAMPLINGRATE_64;     break;
            case 88200:  sr = SL_SAMPLINGRATE_88_2;   break;
            case 96000:  sr = SL_SAMPLINGRATE_96;     break;
            case 192000: sr = SL_SAMPLINGRATE_192;    break;
            default:
                // unsupported sample rate (the original "break; return -1;" never reached the return)
                return SL_RESULT_PARAMETER_INVALID;
        }

        const SLInterfaceID ids[] = {SL_IID_VOLUME};
        const SLboolean req[] = {SL_BOOLEAN_FALSE};
        result = (*p->engineEngine)->CreateOutputMix(p->engineEngine, &(p->outputMixObject), 1, ids, req);
        if (result != SL_RESULT_SUCCESS) return result;

        // realize the output mix
        result = (*p->outputMixObject)->Realize(p->outputMixObject, SL_BOOLEAN_FALSE);
        if (result != SL_RESULT_SUCCESS) return result;

        int speakers;
        if (channels > 1)
            speakers = SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT;
        else
            speakers = SL_SPEAKER_FRONT_CENTER;

        // 16-bit little-endian PCM
        SLDataFormat_PCM format_pcm = {SL_DATAFORMAT_PCM, channels, sr,
                                       SL_PCMSAMPLEFORMAT_FIXED_16, SL_PCMSAMPLEFORMAT_FIXED_16,
                                       (SLuint32) speakers, SL_BYTEORDER_LITTLEENDIAN};
        SLDataSource audioSrc = {&loc_bufq, &format_pcm};

        // configure audio sink
        SLDataLocator_OutputMix loc_outmix = {SL_DATALOCATOR_OUTPUTMIX, p->outputMixObject};
        SLDataSink audioSnk = {&loc_outmix, NULL};

        // create audio player; every interface fetched below must be requested here
        const SLInterfaceID ids1[] = {SL_IID_ANDROIDSIMPLEBUFFERQUEUE, SL_IID_PLAYBACKRATE, SL_IID_VOLUME};
        const SLboolean req1[] = {SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE};
        result = (*p->engineEngine)->CreateAudioPlayer(p->engineEngine, &(p->bqPlayerObject), &audioSrc, &audioSnk,
                                                       3, ids1, req1);
        if (result != SL_RESULT_SUCCESS) return result;

        // realize the player
        result = (*p->bqPlayerObject)->Realize(p->bqPlayerObject, SL_BOOLEAN_FALSE);
        if (result != SL_RESULT_SUCCESS) return result;

        // get the play interface
        result = (*p->bqPlayerObject)->GetInterface(p->bqPlayerObject, SL_IID_PLAY, &(p->bqPlayerPlay));
        if (result != SL_RESULT_SUCCESS) return result;

        // get the volume interface
        result = (*p->bqPlayerObject)->GetInterface(p->bqPlayerObject, SL_IID_VOLUME, &(p->bqPlayerVolume));
        if (result != SL_RESULT_SUCCESS) return result;

        // get the playback rate interface
        result = (*p->bqPlayerObject)->GetInterface(p->bqPlayerObject, SL_IID_PLAYBACKRATE, &(p->bqPlayerRate));
        if (result != SL_RESULT_SUCCESS) return result;

        // get the buffer queue interface
        result = (*p->bqPlayerObject)->GetInterface(p->bqPlayerObject, SL_IID_ANDROIDSIMPLEBUFFERQUEUE,
                                                    &(p->bqPlayerBufferQueue));
        if (result != SL_RESULT_SUCCESS) return result;

        // register callback on the buffer queue
        result = (*p->bqPlayerBufferQueue)->RegisterCallback(p->bqPlayerBufferQueue, bqPlayerCallback, p);
        if (result != SL_RESULT_SUCCESS) return result;

        // set the player's state to playing
        result = (*p->bqPlayerPlay)->SetPlayState(p->bqPlayerPlay, SL_PLAYSTATE_PLAYING);
        return result;
    }

    return SL_RESULT_SUCCESS;
}

// close the OpenSL IO and destroy the audio engine
static void openSLDestroyEngine(OPENSL_STREAM *p)
{
    // destroy buffer queue audio player object, and invalidate all associated interfaces
    if (p->bqPlayerObject != NULL) {
        (*p->bqPlayerObject)->Destroy(p->bqPlayerObject);
        p->bqPlayerObject = NULL;
        p->bqPlayerPlay = NULL;
        p->bqPlayerVolume = NULL;
        p->bqPlayerRate = NULL;
        p->bqPlayerBufferQueue = NULL;
    }

    // destroy output mix object, and invalidate all associated interfaces
    if (p->outputMixObject != NULL) {
        (*p->outputMixObject)->Destroy(p->outputMixObject);
        p->outputMixObject = NULL;
    }

    // destroy engine object, and invalidate all associated interfaces
    if (p->engineObject != NULL) {
        (*p->engineObject)->Destroy(p->engineObject);
        p->engineObject = NULL;
        p->engineEngine = NULL;
    }
}

// open the android audio device for output
// (inchannels and bufferframes are not used by this playback-only version)
OPENSL_STREAM *android_OpenAudioDevice(uint32_t sr, uint32_t inchannels, uint32_t outchannels, uint32_t bufferframes)
{
    OPENSL_STREAM *p;

    // allocate and zero-initialize the stream state
    p = (OPENSL_STREAM *) calloc(1, sizeof(OPENSL_STREAM));
    if (p == NULL)
        return NULL;

    // sample rate
    p->sampleRate = sr;

    // create the engine object and its interface
    if (openSLCreateEngine(p) != SL_RESULT_SUCCESS) {
        android_CloseAudioDevice(p);
        return NULL;
    }

    p->inputDataCount = 0;

    // number of output channels
    p->outchannels = outchannels;

    if (openSLPlayOpen(p) != SL_RESULT_SUCCESS) {
        android_CloseAudioDevice(p);
        return NULL;
    }

    return p;
}

// close the android audio device
void android_CloseAudioDevice(OPENSL_STREAM *p)
{
    if (p == NULL)
        return;

    openSLDestroyEngine(p);
    free(p);
}

// returns timestamp of the processed stream
double android_GetTimestamp(OPENSL_STREAM *p)
{
    return p->time;
}

// this callback handler is called every time a buffer finishes playing
static void bqPlayerCallback(SLAndroidSimpleBufferQueueItf bq, void *context)
{
    OPENSL_STREAM *p = (OPENSL_STREAM *) context;
    (void) bq;

    // one queued packet has been played
    p->inputDataCount--;
    // free(p->inputBuffer);
}

// puts a buffer of size bytes to the device; returns 0 on success
uint32_t android_AudioOut(OPENSL_STREAM *p, uint16_t *buffer, uint32_t size)
{
    SLresult result = (*p->bqPlayerBufferQueue)->Enqueue(p->bqPlayerBufferQueue,
                                                         buffer, size);
    // count the packet only if the queue actually accepted it
    if (result != SL_RESULT_SUCCESS)
        return result;

    p->inputDataCount++;
    return 0;
}

// steps the playback rate down (rateChange < 0) or up by 10%; returns 0 on success
int android_SetPlayRate(OPENSL_STREAM *p, int rateChange)
{
    SLpermille value;   // playback rate in permille: 1000 is normal speed
    SLresult result;

    if (!p) return -1;
    if (!p->bqPlayerRate) return -1;

    result = (*p->bqPlayerRate)->GetRate(p->bqPlayerRate, &value);
    if (result != SL_RESULT_SUCCESS) return -1;

    if (rateChange < 0)
        value -= 100;
    else
        value += 100;

    // clamp to the range 0.5x .. 2.0x
    if (value < 500) value = 500;
    if (value > 2000) value = 2000;

    result = (*p->bqPlayerRate)->SetRate(p->bqPlayerRate, value);
    if (result == SL_RESULT_SUCCESS)
        return 0;
    return -1;
}
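
The long switch in openSLPlayOpen maps a rate in Hz to an SL_SAMPLINGRATE_* constant. In the OpenSL ES headers those constants are simply the sample rate in milliHertz (SL_SAMPLINGRATE_44_1, for example, is 44100000), so the same mapping can be sketched as a whitelist check followed by a multiplication. `hz_to_millihertz` is a hypothetical helper, not part of the original code, and it is written without the OpenSL ES headers so it can be tried anywhere.

```c
#include <stddef.h>
#include <stdint.h>

/* Returns the sample rate in milliHertz (the unit OpenSL ES expects in
 * SLDataFormat_PCM), or 0 if the rate is not one the original switch handles. */
static uint32_t hz_to_millihertz(uint32_t hz)
{
    static const uint32_t supported[] = {
        8000, 11025, 16000, 22050, 24000, 32000,
        44100, 48000, 64000, 88200, 96000, 192000
    };

    for (size_t i = 0; i < sizeof supported / sizeof supported[0]; i++) {
        if (supported[i] == hz)
            return hz * 1000u;   /* largest case, 192000000, fits in 32 bits */
    }
    return 0;                    /* unsupported rate */
}
```

A caller would treat a return value of 0 as an error, in the same way the switch rejects rates it does not know.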
