[Machine Learning] Forward Stagewise Regression

阿新 • Published: 2019-01-24

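Forward stagewise regression can achieve results similar to the lasso while being much simpler. It is a greedy algorithm. At each step it increases or decreases a single weight by a small fixed amount, and it picks whichever change reduces the error the most.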
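One iteration of that greedy step can be written as follows. This assumes the features have been standardized and the target centered, as `stageWise` does below. Here $w$ is the weight vector, $x_i$ the $i$-th sample, $e_j$ the $j$-th unit vector, and $\varepsilon$ the step size (`eps` in the code). These symbols are notation chosen for this sketch:

$$
(j^{*}, s^{*}) = \arg\min_{j \in \{1,\dots,n\},\ s \in \{-1,+1\}} \sum_{i=1}^{m} \left( y_i - x_i^{\top}\left(w + s\,\varepsilon\, e_j\right) \right)^2,
\qquad
w \leftarrow w + s^{*}\varepsilon\, e_{j^{*}}
$$

Each iteration therefore changes exactly one weight by exactly $\varepsilon$. Repeating this `numIt` times traces out a path of weight vectors.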

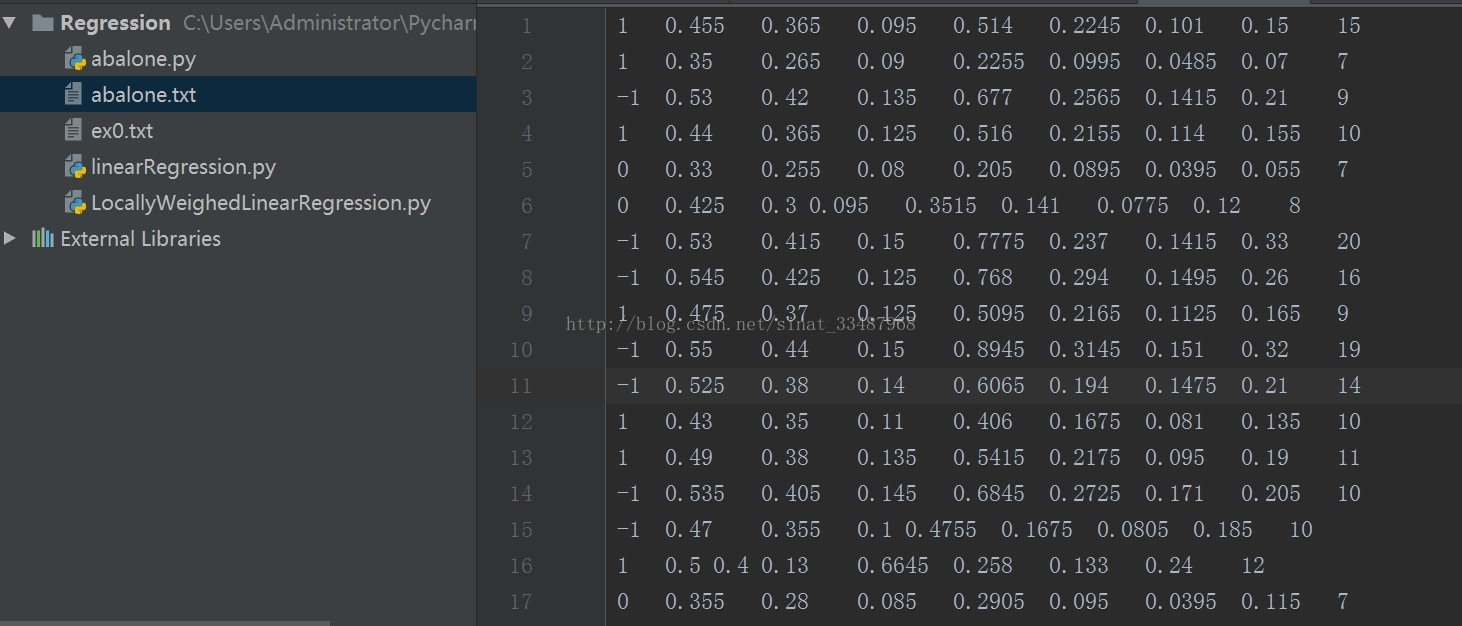

The data is shown below:

[Image: the data]

```python
from numpy import *


def rssError(yArr, yHatArr):
    # Residual sum of squares between targets and predictions
    return ((yArr - yHatArr) ** 2).sum()


def loadDataSet(fileName):
    # Tab-separated file: every column except the last is a feature, the last is the target
    with open(fileName) as fr:
        lines = fr.readlines()
    numFeat = len(lines[0].split('\t')) - 1
    dataMat = []
    labelMat = []
    for line in lines:
        if not line.strip():  # skip blank lines (e.g. a trailing newline)
            continue
        curLine = line.strip().split('\t')
        lineArr = [float(curLine[i]) for i in range(numFeat)]
        dataMat.append(lineArr)
        labelMat.append(float(curLine[-1]))
    return dataMat, labelMat


def regularize(xMat):  # regularize by columns
    inMat = xMat.copy()
    inMeans = mean(inMat, 0)  # calc mean then subtract it off
    inVar = var(inMat, 0)     # calc variance of Xi then divide by it
    # Note: this divides by the variance; dividing by the standard
    # deviation is the more common way to standardize features
    inMat = (inMat - inMeans) / inVar
    return inMat


def stageWise(xArr, yArr, eps=0.01, numIt=100):
    xMat = mat(xArr)
    yMat = mat(yArr).T
    # Center the target and standardize the features
    yMean = mean(yMat, 0)
    yMat = yMat - yMean
    xMat = regularize(xMat)
    m, n = shape(xMat)
    returnMat = zeros((numIt, n))  # weights recorded after every iteration
    ws = zeros((n, 1))
    wsTest = ws.copy()
    wsMax = ws.copy()
    for i in range(numIt):
        print(ws.T)  # current weights, one row per iteration
        lowestError = inf
        # Try moving each weight by +eps and -eps; keep the single move with the lowest RSS
        for j in range(n):
            for sign in [-1, 1]:
                wsTest = ws.copy()
                wsTest[j] += eps * sign
                yTest = xMat * wsTest
                rssE = rssError(yMat.A, yTest.A)
                if rssE < lowestError:
                    lowestError = rssE
                    wsMax = wsTest
        ws = wsMax.copy()
        returnMat[i, :] = ws.T
    return returnMat


def main():
    xArr, yArr = loadDataSet('abalone.txt')
    stageWise(xArr, yArr, 0.001, 5000)


if __name__ == '__main__':
    main()
```
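Because `abalone.txt` is not included here, the following is a minimal sketch that re-implements the same stagewise loop on plain NumPy arrays and runs it on synthetic data. The function name `stagewise`, the synthetic data, and `true_w` are all hypothetical. Unlike `regularize` above, this sketch standardizes the features with the standard deviation before the call. It then compares the final weights with the ordinary least-squares solution from `np.linalg.lstsq`.

```python
import numpy as np


def stagewise(X, y, eps=0.01, num_it=1000):
    """Forward stagewise regression on plain ndarrays (illustrative sketch).

    X: (m, n) feature matrix, assumed already standardized.
    y: (m,) target vector, assumed already centered.
    Returns a (num_it, n) array holding the weights after every iteration.
    """
    m, n = X.shape
    w = np.zeros(n)
    history = np.zeros((num_it, n))
    for i in range(num_it):
        best_err, best_w = np.inf, w
        # Try nudging each weight up or down by eps; keep the best single move
        for j in range(n):
            for sign in (-1.0, 1.0):
                w_test = w.copy()
                w_test[j] += sign * eps
                err = np.sum((y - X @ w_test) ** 2)
                if err < best_err:
                    best_err, best_w = err, w_test
        w = best_w
        history[i] = w
    return history


# Hypothetical synthetic data standing in for abalone.txt
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 3))
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize by std, not variance
true_w = np.array([1.5, 0.0, -0.7])
y = X @ true_w + 0.1 * rng.standard_normal(200)
y = y - y.mean()  # center the target

history = stagewise(X, y, eps=0.01, num_it=500)
w_ls, *_ = np.linalg.lstsq(X, y, rcond=None)
print(history[-1])  # final stagewise weights
print(w_ls)         # least-squares weights for comparison
```

With a small `eps` and enough iterations, the final stagewise weights should settle within roughly `eps` of the least-squares weights. Stopping after fewer iterations leaves the weights shrunk toward zero, which is loosely where the resemblance to the lasso comes from.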

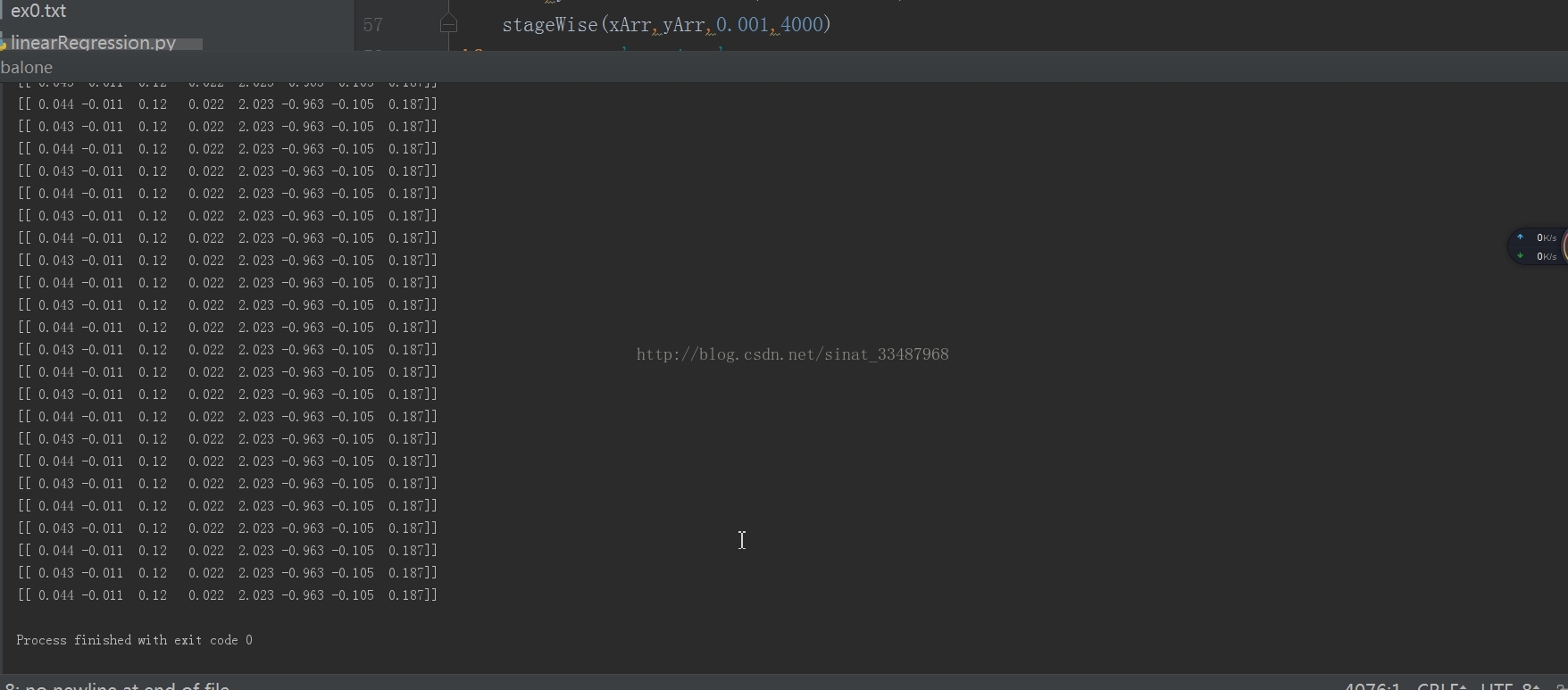

Let's look at the results:

[Image: results]