Learning the Scrapy Framework

阿新 • Published: 2019-01-26

Overview

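This is mainly an exercise in defining multiple Rule objects in the rules attribute of the CrawlSpider class, to get a feel for how powerful and flexible the Scrapy framework is.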

For that reason, the scraped content is simply saved to a JSON file, with no further processing.

Source code

items.py

    import scrapy


    class CnblogNewsItem(scrapy.Item):
        # News title
        title = scrapy.Field()
        # Submitter
        postor = scrapy.Field()
        # Publication time
        pubtime = scrapy.Field()
        # News body
        content = scrapy.Field()

spiders/cnblognews_spider.py

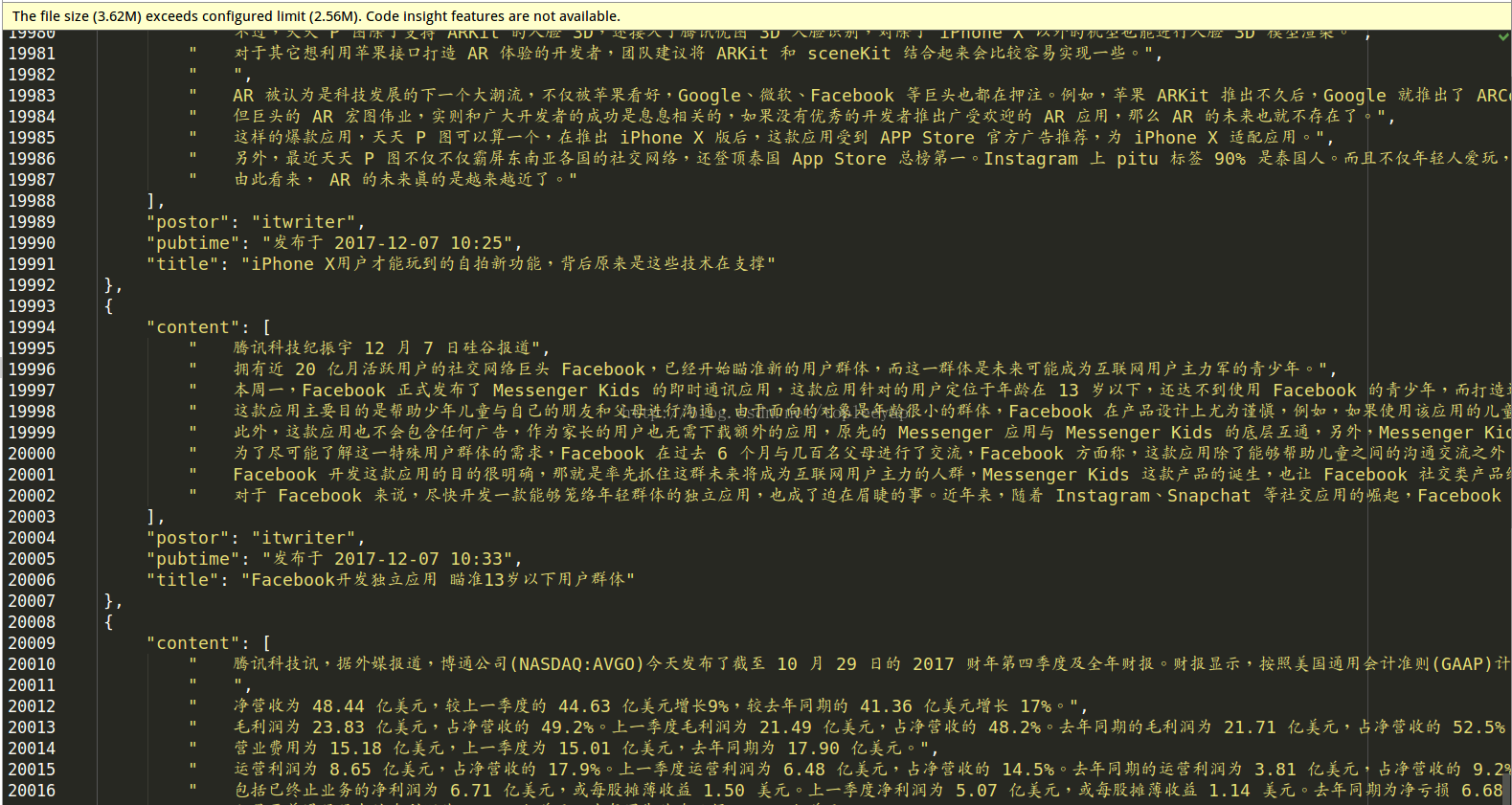

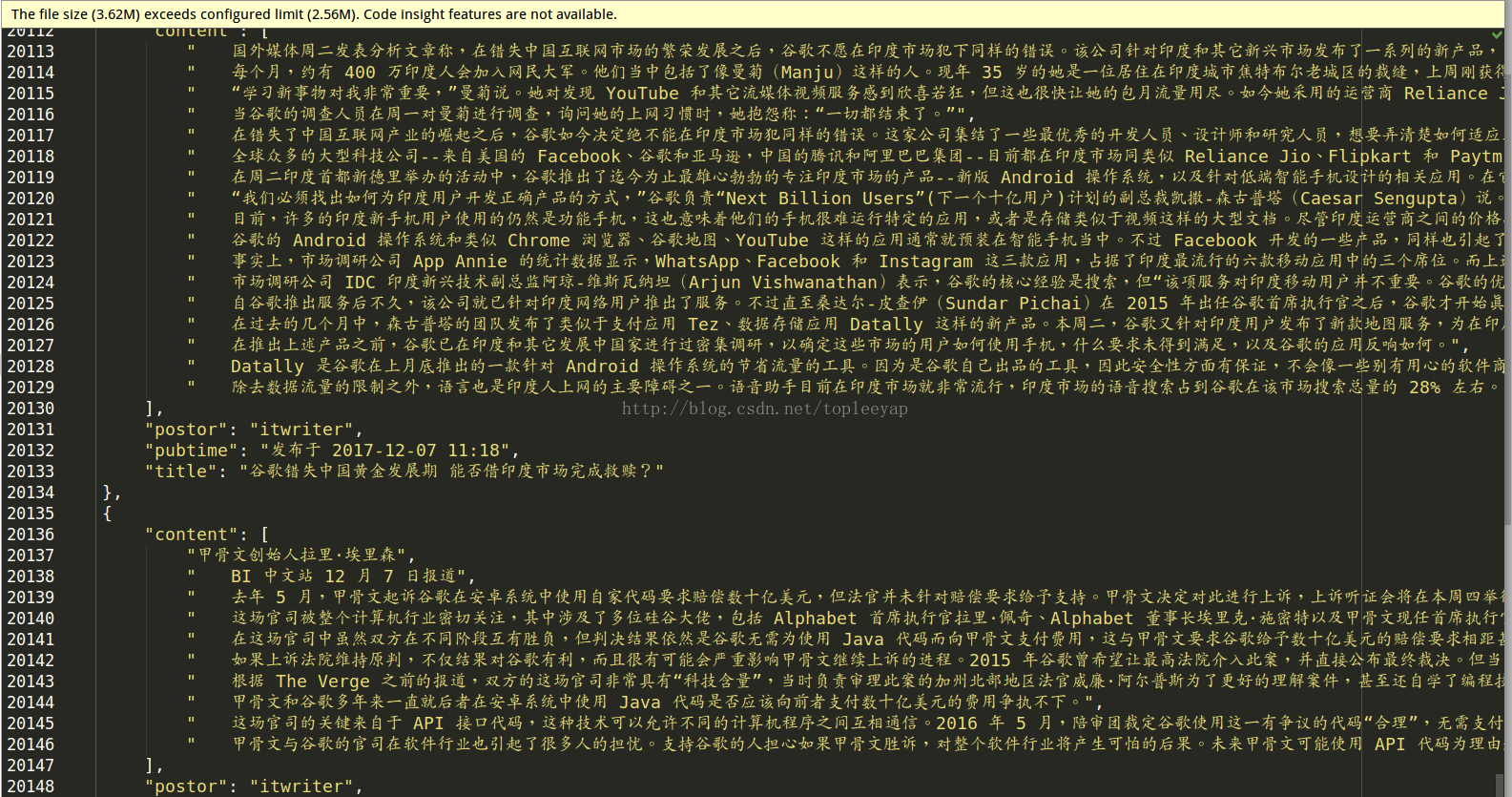

    #!/usr/bin/env python
    # -*- coding:utf-8 -*-
    from scrapy.spiders import CrawlSpider, Rule
    from scrapy.linkextractors import LinkExtractor

    from myscrapy.items import CnblogNewsItem


    class CnblogNewsSpider(CrawlSpider):
        """
        Spider for cnblogs news.
        Crawls the paginated news list pages
        and the detail page of every news entry.
        """
        name = 'cnblognews'
        allowed_domains = ['news.cnblogs.com']
        start_urls = ['https://news.cnblogs.com/n/page/1/']

        # LinkExtractor for the news list pages, selected with a regular expression
        page_link_extractor = LinkExtractor(allow=r'page/\d+')
        # LinkExtractor for each news entry, selected with an XPath expression
        detail_link_extractor = LinkExtractor(restrict_xpaths='//h2[@class="news_entry"]')

        rules = [
            # List pages: follow=True, keep following the links found on them
            Rule(link_extractor=page_link_extractor, follow=True),
            # Detail pages: follow=False, parse them but do not follow their links
            Rule(link_extractor=detail_link_extractor, callback='parse_detail', follow=False),
        ]

        def parse_detail(self, response):
            """Callback that extracts the data from a news detail page."""
            item = CnblogNewsItem()
            # .get() returns the first match, or None when nothing matches
            item['title'] = response.xpath('//div[@id="news_title"]/a/text()').get()
            item['postor'] = response.xpath('//span[@class="news_poster"]/a/text()').get()
            item['pubtime'] = response.xpath('//span[@class="time"]/text()').get()
            # .getall() returns every matching paragraph text as a list
            item['content'] = response.xpath('//div[@id="news_body"]/p/text()').getall()
            yield item
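The `allow` argument of the list-page LinkExtractor is a regular expression that is searched for inside each candidate URL, so `page/\d+` does not need to match the whole address. The following is a minimal standard-library sketch of that selection step, not Scrapy's own code. The sample URLs and the `is_list_page` helper are assumptions made up for illustration.

```python
import re

# Same pattern as the spider's page_link_extractor.
PAGE_PATTERN = re.compile(r'page/\d+')

# Hypothetical sample URLs, for illustration only.
sample_urls = [
    'https://news.cnblogs.com/n/page/2/',
    'https://news.cnblogs.com/n/page/15/',
    'https://news.cnblogs.com/n/612345/',
    'https://news.cnblogs.com/n/digg',
]


def is_list_page(url):
    """Return True when the pagination pattern occurs anywhere in the URL."""
    return PAGE_PATTERN.search(url) is not None


# Only the paginated list pages survive the filter.
list_pages = [u for u in sample_urls if is_list_page(u)]
print(list_pages)
```

Links that pass this kind of filter are handled by the first Rule (followed, no callback), while detail links are picked by XPath in the second Rule and handed to `parse_detail`.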

pipelines.py

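    import json


    class CnblognewsPipeline(object):
        """Item pipeline for cnblogs news."""

        def __init__(self):
            # Python 3: open in text mode with an explicit encoding,
            # so non-ASCII text is written as-is
            self.f = open('cnblognews.json', mode='w', encoding='utf-8')

        def process_item(self, item, spider):
            news = json.dumps(dict(item), ensure_ascii=False, indent=4).strip()
            # Items are written one after another, each followed by a comma
            self.f.write(news + ',\n')
            # Return the item so that any later pipeline can also process it
            return item

        def close_spider(self, spider):
            self.f.close()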
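Because the pipeline above writes every item followed by a comma, the resulting file is a sequence of JSON objects rather than one document that `json.load` can read back. If a single valid JSON array is wanted, one possible approach is to write the brackets on open and close and put the comma before every item except the first. The sketch below uses only the standard library. `JsonArrayWriter`, `demo`, and the sample items are hypothetical names and data, not part of the original project.

```python
import json
import os
import tempfile


class JsonArrayWriter:
    """Hypothetical helper: writes dict-like items as one valid JSON array."""

    def __init__(self, path):
        self.path = path
        self.f = None
        self.first = True

    def open(self):
        self.f = open(self.path, mode='w', encoding='utf-8')
        self.f.write('[\n')

    def write(self, item):
        # Separator goes before every item except the first,
        # so there is never a trailing comma.
        if not self.first:
            self.f.write(',\n')
        self.first = False
        self.f.write(json.dumps(dict(item), ensure_ascii=False, indent=4))

    def close(self):
        self.f.write('\n]\n')
        self.f.close()


def demo():
    """Write two made-up items to a temporary file and load them back."""
    path = os.path.join(tempfile.mkdtemp(), 'cnblognews.json')
    writer = JsonArrayWriter(path)
    writer.open()
    writer.write({'title': '新聞一', 'postor': 'a'})
    writer.write({'title': '新聞二', 'postor': 'b'})
    writer.close()
    with open(path, encoding='utf-8') as f:
        return json.load(f)


loaded = demo()
print(len(loaded))
```

In a Scrapy pipeline the same three steps would map naturally onto `open_spider`, `process_item`, and `close_spider`.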

settings.py

    ITEM_PIPELINES = {
        'myscrapy.pipelines.CnblognewsPipeline': 1,
    }
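The integer in ITEM_PIPELINES is a priority: Scrapy passes each item through the enabled pipelines from the lowest value to the highest, and the values are conventionally chosen in the 0-1000 range. The sketch below only mirrors that ordering rule with a plain sort and is not Scrapy's internal code. The second pipeline path and the `run_order` helper are hypothetical and do not exist in this project.

```python
# Hypothetical settings: HypotheticalCleanupPipeline is made up for illustration.
ITEM_PIPELINES = {
    'myscrapy.pipelines.CnblognewsPipeline': 300,
    'myscrapy.pipelines.HypotheticalCleanupPipeline': 100,
}


def run_order(pipelines):
    """Return pipeline paths sorted by priority, lowest value first."""
    return [path for path, _ in sorted(pipelines.items(), key=lambda kv: kv[1])]


order = run_order(ITEM_PIPELINES)
print(order)
```

With a single pipeline, as in this project, the exact number does not affect behaviour. It only matters once a second pipeline is enabled.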