windows下用kinect V2 識別人體骨骼

阿新 • • 發佈:2019-01-27

配置環境:windows10+vs2015+opencv3.0

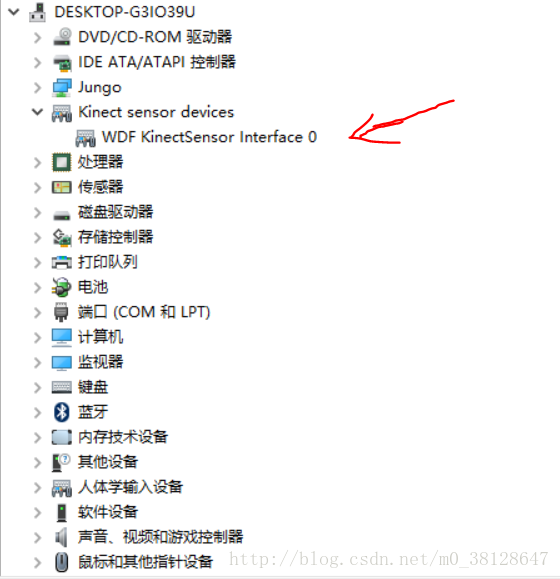

首先到windows官網下載kinect for windows sdk ,我用的是2.0版本,下載後安裝即可。安裝完成後,將Kinect V2連線到電腦USB3.0的口,我的電腦是左手邊最下面的那個,然後開啟裝置管理器,檢視kinect是否連線正常,如下圖所示即為連線正常,如果有感嘆號,就把這個驅動刪了,然後拔掉Kinect,再連線到電腦上,電腦會再次自動安裝驅動,如果還不行,就重啟一下。

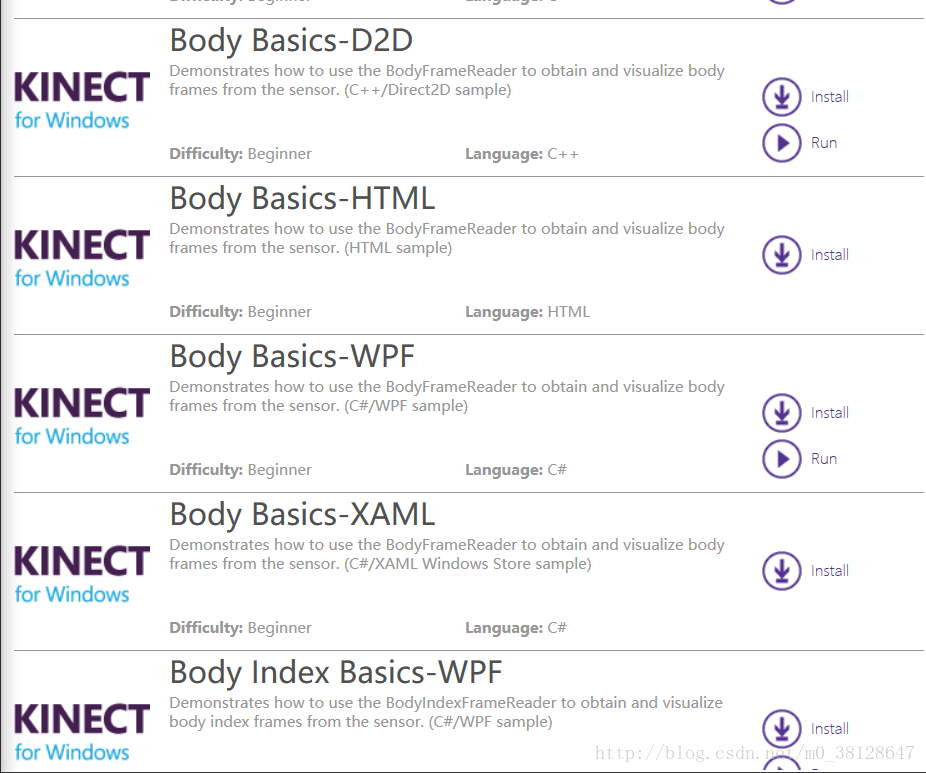

裝好驅動後,開啟剛剛安裝好的SDK Browser(Kinect for windows)v2.0軟體,找到如下圖所示的選項,我只測試了其中有Run按鍵的幾個,這幾個點選Run之後,會出現一個黑色的視窗,這時候不要以為是壞的,當你在kinect攝像頭前面動的時候,它才會顯示東西,並且只顯示人體骨骼。

現在我們算是把整個kinect都安裝好了,接下來就配置系統了。

首先是安裝好opencv,官網上下載解壓即可,還有vs2015也是到windows官網下載安裝,網上相應的安裝教程特別多,找一下就好。

接下來是把opencv和kinect sdk for windows配置到vs2015裡面。

1.新建環境變數,變數名:OPENCV,變數值:D:…\opencv\build,要修改為你自己的opencv安裝路徑,然後再環境變數Path裡面,輸入D:…\opencv\build\x86\vc12\bin,也是相應的你的opencv的安裝路徑。

2.開啟vs2015,新建一個C++專案,然後在【解決方案資源管理器】中右鍵專案名,選擇【屬性】,在【C/C++】的【常規】裡,【附加包含目錄】中加入

【

上面做完之後應該就可以編譯運行了,但是我發現寫程式碼時不會對【Kinect.h】中出現的函式這些進行自動補全,而且語法檢查時提示【Kinect.h】找不到,如果你也出現此問題,那就在【解決方案資源管理器】中的【標頭檔案】這裡右鍵新增【Kinect.h】,它位於【C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\inc】中。

參考於:http://www.cnblogs.com/xz816111/p/5184273.html

3.配置opencv,可參考這篇文章:http://blog.csdn.net/lanergaming/article/details/48689841

最後,也是我們最終的目的,識別人體骨骼,程式碼如下(宣告:程式碼是我借鑑的複製的別人的,連結在後面):

#include <iostream>

#include <opencv2\imgproc.hpp> //opencv標頭檔案

#include <opencv2\calib3d.hpp>

#include <opencv2\highgui.hpp>

#include <Kinect.h> //Kinect標頭檔案

using namespace std;

using namespace cv;

void draw(Mat & img, Joint & r_1, Joint & r_2, ICoordinateMapper * myMapper);

int main(void)

{

IKinectSensor * mySensor = nullptr;

GetDefaultKinectSensor(&mySensor);

mySensor->Open();

IColorFrameSource * myColorSource = nullptr;

mySensor->get_ColorFrameSource(&myColorSource);

IColorFrameReader * myColorReader = nullptr;

myColorSource->OpenReader(&myColorReader);

int colorHeight = 0, colorWidth = 0;

IFrameDescription * myDescription = nullptr;

myColorSource->get_FrameDescription(&myDescription);

myDescription->get_Height(&colorHeight);

myDescription->get_Width(&colorWidth);

IColorFrame * myColorFrame = nullptr;

Mat original(colorHeight, colorWidth, CV_8UC4);

//**********************以上為ColorFrame的讀取前準備**************************

IBodyFrameSource * myBodySource = nullptr;

mySensor->get_BodyFrameSource(&myBodySource);

IBodyFrameReader * myBodyReader = nullptr;

myBodySource->OpenReader(&myBodyReader);

int myBodyCount = 0;

myBodySource->get_BodyCount(&myBodyCount);

IBodyFrame * myBodyFrame = nullptr;

ICoordinateMapper * myMapper = nullptr;

mySensor->get_CoordinateMapper(&myMapper);

//**********************以上為BodyFrame以及Mapper的準備***********************

while (1)

{

while (myColorReader->AcquireLatestFrame(&myColorFrame) != S_OK);

myColorFrame->CopyConvertedFrameDataToArray(colorHeight * colorWidth * 4, original.data, ColorImageFormat_Bgra);

Mat copy = original.clone(); //讀取彩色影象並輸出到矩陣

while (myBodyReader->AcquireLatestFrame(&myBodyFrame) != S_OK); //讀取身體影象

IBody ** myBodyArr = new IBody *[myBodyCount]; //為存身體資料的陣列做準備

for (int i = 0; i < myBodyCount; i++)

myBodyArr[i] = nullptr;

if (myBodyFrame->GetAndRefreshBodyData(myBodyCount, myBodyArr) == S_OK) //把身體資料輸入陣列

for (int i = 0; i < myBodyCount; i++)

{

BOOLEAN result = false;

if (myBodyArr[i]->get_IsTracked(&result) == S_OK && result) //先判斷是否偵測到

{

Joint myJointArr[JointType_Count];

if (myBodyArr[i]->GetJoints(JointType_Count, myJointArr) == S_OK) //如果偵測到就把關節資料輸入到陣列並畫圖

{

draw(copy, myJointArr[JointType_Head], myJointArr[JointType_Neck], myMapper);

draw(copy, myJointArr[JointType_Neck], myJointArr[JointType_SpineShoulder], myMapper);

draw(copy, myJointArr[JointType_SpineShoulder], myJointArr[JointType_ShoulderLeft], myMapper);

draw(copy, myJointArr[JointType_SpineShoulder], myJointArr[JointType_SpineMid], myMapper);

draw(copy, myJointArr[JointType_SpineShoulder], myJointArr[JointType_ShoulderRight], myMapper);

draw(copy, myJointArr[JointType_ShoulderLeft], myJointArr[JointType_ElbowLeft], myMapper);

draw(copy, myJointArr[JointType_SpineMid], myJointArr[JointType_SpineBase], myMapper);

draw(copy, myJointArr[JointType_ShoulderRight], myJointArr[JointType_ElbowRight], myMapper);

draw(copy, myJointArr[JointType_ElbowLeft], myJointArr[JointType_WristLeft], myMapper);

draw(copy, myJointArr[JointType_SpineBase], myJointArr[JointType_HipLeft], myMapper);

draw(copy, myJointArr[JointType_SpineBase], myJointArr[JointType_HipRight], myMapper);

draw(copy, myJointArr[JointType_ElbowRight], myJointArr[JointType_WristRight], myMapper);

draw(copy, myJointArr[JointType_WristLeft], myJointArr[JointType_ThumbLeft], myMapper);

draw(copy, myJointArr[JointType_WristLeft], myJointArr[JointType_HandLeft], myMapper);

draw(copy, myJointArr[JointType_HipLeft], myJointArr[JointType_KneeLeft], myMapper);

draw(copy, myJointArr[JointType_HipRight], myJointArr[JointType_KneeRight], myMapper);

draw(copy, myJointArr[JointType_WristRight], myJointArr[JointType_ThumbRight], myMapper);

draw(copy, myJointArr[JointType_WristRight], myJointArr[JointType_HandRight], myMapper);

draw(copy, myJointArr[JointType_HandLeft], myJointArr[JointType_HandTipLeft], myMapper);

draw(copy, myJointArr[JointType_KneeLeft], myJointArr[JointType_FootLeft], myMapper);

draw(copy, myJointArr[JointType_KneeRight], myJointArr[JointType_FootRight], myMapper);

draw(copy, myJointArr[JointType_HandRight], myJointArr[JointType_HandTipRight], myMapper);

}

}

}

delete[]myBodyArr;

myBodyFrame->Release();

myColorFrame->Release();

imshow("TEST", copy);

if (waitKey(30) == VK_ESCAPE)

break;

}

myMapper->Release();

myDescription->Release();

myColorReader->Release();

myColorSource->Release();

myBodyReader->Release();

myBodySource->Release();

mySensor->Close();

mySensor->Release();

return 0;

}

void draw(Mat & img, Joint & r_1, Joint & r_2, ICoordinateMapper * myMapper)

{

//用兩個關節點來做線段的兩端,並且進行狀態過濾

if (r_1.TrackingState == TrackingState_Tracked && r_2.TrackingState == TrackingState_Tracked)

{

ColorSpacePoint t_point; //要把關節點用的攝像機座標下的點轉換成彩色空間的點

Point p_1, p_2;

myMapper->MapCameraPointToColorSpace(r_1.Position, &t_point);

p_1.x = t_point.X;

p_1.y = t_point.Y;

myMapper->MapCameraPointToColorSpace(r_2.Position, &t_point);

p_2.x = t_point.X;

p_2.y = t_point.Y;

line(img, p_1, p_2, Vec3b(0, 255, 0), 5);

circle(img, p_1, 10, Vec3b(255, 0, 0), -1);

circle(img, p_2, 10, Vec3b(255, 0, 0), -1);

}

}