Face alignment with flandmark

阿新 • Published: 2019-02-01

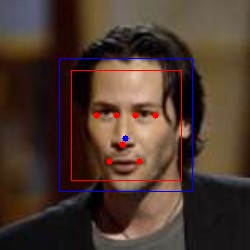

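flandmark is an open-source library for detecting facial landmarks (http://cmp.felk.cvut.cz/~uricamic/flandmark/). It detects the left and right corners of each eye, the nose, and the left and right corners of the mouth, at the following positions: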

    /*
     *    5   1    2   6
     *
     *
     *          0/7
     *
     *
     *       3       4
     *
     */
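
The listing in this post reads landmark i from a flat `double` array as the pair `landmarks[2*i]`, `landmarks[2*i+1]`. A small accessor can make the indices in the diagram easier to work with. The sketch below is illustrative only: the enum names, `Point2`, and `landmarkAt` are hypothetical helpers, not part of flandmark, and "left" and "right" mean position in the image as drawn in the diagram. It also assumes that index 0 is the face center and index 7 the nose, which the diagram draws at the same spot.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Illustrative names for the indices in the layout diagram.
// These are NOT flandmark identifiers; they are assumptions for this sketch.
enum LandmarkIndex {
    kCenter        = 0,  // assumed: face center (drawn together with 7)
    kLeftEyeInner  = 1,
    kRightEyeInner = 2,
    kMouthLeft     = 3,
    kMouthRight    = 4,
    kLeftEyeOuter  = 5,
    kRightEyeOuter = 6,
    kNose          = 7,  // assumed: nose
    kLandmarkCount = 8
};

struct Point2 {
    double x;
    double y;
};

// Read landmark i from a flat array laid out as [x0, y0, x1, y1, ...].
Point2 landmarkAt(const std::vector<double>& flat, int i) {
    assert(i >= 0 && static_cast<std::size_t>(2 * i + 1) < flat.size());
    Point2 p = {flat[2 * i], flat[2 * i + 1]};
    return p;
}
```

For example, `landmarkAt(landmarks, kLeftEyeOuter)` returns the point stored at index 5, which the alignment code later in this post uses as its first correspondence.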

The detection result is shown in the figure below:

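Given two face images, once the facial landmarks have been detected in each, three corresponding landmarks can be used to compute an affine transform that aligns one face image to the other.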
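
To make the "three corresponding points" step concrete: a 2D affine transform has six unknowns, and each point pair contributes two equations, so three non-collinear pairs determine it exactly. The sketch below solves that system with Cramer's rule in plain C++. It is a minimal illustration of the same kind of system that OpenCV's `getAffineTransform` solves, not OpenCV's implementation; `Point2`, `Affine2x3`, `det3`, and `affineFromThreePoints` are hypothetical names introduced here.

```cpp
#include <cassert>
#include <cmath>

struct Point2 {
    double x;
    double y;
};

// Row-major 2x3 matrix [a b c; d e f] mapping (x, y) to
// (a*x + b*y + c, d*x + e*y + f).
struct Affine2x3 {
    double m[2][3];
};

// Determinant of a 3x3 matrix by cofactor expansion along the first row.
double det3(const double m[3][3]) {
    return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
         - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
         + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
}

// Solve for the affine transform that maps src[i] to dst[i], i = 0..2.
// Returns false when the source points are (nearly) collinear, in which
// case the transform is not uniquely determined.
bool affineFromThreePoints(const Point2 src[3], const Point2 dst[3], Affine2x3* out) {
    double base[3][3];
    for (int i = 0; i < 3; ++i) {
        base[i][0] = src[i].x;
        base[i][1] = src[i].y;
        base[i][2] = 1.0;
    }
    const double d = det3(base);
    if (std::fabs(d) < 1e-12) {
        return false;
    }
    for (int row = 0; row < 2; ++row) {
        for (int col = 0; col < 3; ++col) {
            // Cramer's rule: replace one column with the target coordinates.
            double tmp[3][3];
            for (int i = 0; i < 3; ++i) {
                for (int j = 0; j < 3; ++j) {
                    tmp[i][j] = base[i][j];
                }
                tmp[i][col] = (row == 0) ? dst[i].x : dst[i].y;
            }
            out->m[row][col] = det3(tmp) / d;
        }
    }
    return true;
}
```

The collinearity check matters for the landmark choice: three points on one line would leave the transform underdetermined. The code in this post uses the two outer eye corners (5 and 6) and point 0, which normally form a proper triangle.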

Code:

    #include "libflandmark/flandmark_detector.h"
    #include <opencv2/core/core.hpp>
    #include <opencv2/imgproc/imgproc.hpp>
    #include <opencv2/objdetect/objdetect.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <cstdio>
    #include <cstdlib>

    using namespace cv;

    #define _DEBUG_INFO

    // Detect faces in the grayscale image `input` and run flandmark on each one.
    // On return, bbox and landmarks hold the values for the last detected face.
    int detectFaceInImage(IplImage *orig, IplImage *input, CvHaarClassifierCascade *cascade,
                          FLANDMARK_Model *model, int *bbox, double *landmarks)
    {
        int ret = 0;
        // Smallest face size.
        CvSize minFeatureSize = cvSize(40, 40);
        int flags = CV_HAAR_DO_CANNY_PRUNING;
        // How detailed should the search be.
        float search_scale_factor = 1.1f;

        CvMemStorage *storage = cvCreateMemStorage(0);
        cvClearMemStorage(storage);

        // Detect all the faces in the greyscale image.
        CvSeq *rects = cvHaarDetectObjects(input, cascade, storage, search_scale_factor, 2, flags, minFeatureSize);
        // Check for a null sequence before reading the face count.
        int nFaces = rects ? rects->total : 0;

        double t = (double)cvGetTickCount();
        for (int iface = 0; iface < nFaces; ++iface)
        {
            CvRect *r = (CvRect*)cvGetSeqElem(rects, iface);

            // flandmark expects the box as [x_min, y_min, x_max, y_max].
            bbox[0] = r->x;
            bbox[1] = r->y;
            bbox[2] = r->x + r->width;
            bbox[3] = r->y + r->height;

            ret = flandmark_detect(input, bbox, model, landmarks);

    #ifdef _DEBUG_INFO
            // display landmarks
            cvRectangle(orig, cvPoint(bbox[0], bbox[1]), cvPoint(bbox[2], bbox[3]), CV_RGB(255,0,0));
            cvRectangle(orig, cvPoint(model->bb[0], model->bb[1]), cvPoint(model->bb[2], model->bb[3]), CV_RGB(0,0,255));
            cvCircle(orig, cvPoint((int)landmarks[0], (int)landmarks[1]), 3, CV_RGB(0,0,255), CV_FILLED);
            for (int i = 2; i < 2*model->data.options.M; i += 2)
            {
                cvCircle(orig, cvPoint(int(landmarks[i]), int(landmarks[i+1])), 3, CV_RGB(255,0,0), CV_FILLED);
            }
    #endif
        }
        t = (double)cvGetTickCount() - t;
        int ms = cvRound(t / ((double)cvGetTickFrequency() * 1000.0));

        if (nFaces > 0)
        {
            printf("Faces detected: %d; Detection of facial landmark on all faces took %d ms\n", nFaces, ms);
        }
        else
        {
            printf("NO Face\n");
            ret = -1;
        }

        cvReleaseMemStorage(&storage);

        return ret;
    }

    /*
     *    5   1    2   6
     *
     *
     *          0/7
     *
     *
     *       3       4
     *
     */
    int main(int argc, char **argv)
    {
        if (argc != 3)
        {
            fprintf(stderr, "Usage: %s <src_image> <dst_image>\n", argv[0]);
            exit(1);
        }

        // Haar Cascade file, used for Face Detection.
        char faceCascadeFilename[] = "haarcascade_frontalface_alt.xml";
        // Load the HaarCascade classifier for face detection.
        CvHaarClassifierCascade *faceCascade = (CvHaarClassifierCascade*)cvLoad(faceCascadeFilename, 0, 0, 0);
        if (!faceCascade)
        {
            printf("Couldn't load Face detector '%s'\n", faceCascadeFilename);
            exit(1);
        }

        // ------------- begin flandmark load model
        double t = (double)cvGetTickCount();
        FLANDMARK_Model *model = flandmark_init("flandmark_model.dat");
        if (model == 0)
        {
            printf("Structure model wasn't created. Corrupted file flandmark_model.dat?\n");
            exit(1);
        }
        t = (double)cvGetTickCount() - t;
        // cvRound returns an int, so store and print it as one.
        int ms = cvRound(t / ((double)cvGetTickFrequency() * 1000.0));
        printf("Structure model loaded in %d ms.\n", ms);
        // ------------- end flandmark load model

        // input image
        IplImage *src = cvLoadImage(argv[1]);
        IplImage *dst = cvLoadImage(argv[2]);
        if (src == NULL)
        {
            fprintf(stderr, "Cannot open image %s. Exiting...\n", argv[1]);
            exit(1);
        }
        if (dst == NULL)
        {
            fprintf(stderr, "Cannot open image %s. Exiting...\n", argv[2]);
            exit(1);
        }

        // convert image to grayscale
        IplImage *src_gray = cvCreateImage(cvSize(src->width, src->height), IPL_DEPTH_8U, 1);
        cvConvertImage(src, src_gray);
        IplImage *dst_gray = cvCreateImage(cvSize(dst->width, dst->height), IPL_DEPTH_8U, 1);
        cvConvertImage(dst, dst_gray);

        // detect landmarks
        int *bbox_src = (int*)malloc(4*sizeof(int));
        int *bbox_dst = (int*)malloc(4*sizeof(int));
        double *landmarks_src = (double*)malloc(2*model->data.options.M*sizeof(double));
        double *landmarks_dst = (double*)malloc(2*model->data.options.M*sizeof(double));
        int ret_src = detectFaceInImage(src, src_gray, faceCascade, model, bbox_src, landmarks_src);
        int ret_dst = detectFaceInImage(dst, dst_gray, faceCascade, model, bbox_dst, landmarks_dst);
        if (0 != ret_src || 0 != ret_dst)
        {
            printf("Landmark not detected!\n");
            return -1;
        }

        // *** face alignment begin *** //
        Point2f srcTri[3];
        Point2f dstTri[3];
        // Mat(const IplImage*) is the OpenCV 2.x constructor; later versions use cvarrToMat().
        Mat src_mat(src);
        Mat dst_mat(dst);
        Mat warp_mat(2, 3, CV_32FC1);
        Mat warp_dst;

        /// Set the dst image the same type and size as src
        warp_dst = Mat::zeros(src_mat.rows, src_mat.cols, src_mat.type());

        /// Set your 3 points to calculate the Affine Transform:
        /// the two outer eye corners (5, 6) and point 0.
        srcTri[0] = Point2f((float)landmarks_src[5*2], (float)landmarks_src[5*2+1]);
        srcTri[1] = Point2f((float)landmarks_src[6*2], (float)landmarks_src[6*2+1]);
        srcTri[2] = Point2f((float)landmarks_src[0*2], (float)landmarks_src[0*2+1]);

        dstTri[0] = Point2f((float)landmarks_dst[5*2], (float)landmarks_dst[5*2+1]);
        dstTri[1] = Point2f((float)landmarks_dst[6*2], (float)landmarks_dst[6*2+1]);
        dstTri[2] = Point2f((float)landmarks_dst[0*2], (float)landmarks_dst[0*2+1]);

        /// Get the Affine Transform
        warp_mat = getAffineTransform(srcTri, dstTri);

        /// Apply the Affine Transform just found to the src image
        warpAffine(src_mat, warp_dst, warp_mat, warp_dst.size());
        // *** face alignment end *** //

        // show images
        imshow("src", src_mat);
        imshow("dst", dst_mat);
        imshow("warp_dst", warp_dst);
        //imwrite("src.jpg", src_mat);
        //imwrite("dst.jpg", dst_mat);
        //imwrite("warp_dst.jpg", warp_dst);
        waitKey(0);

        // cleanup
        free(bbox_src);
        free(landmarks_src);
        free(bbox_dst);
        free(landmarks_dst);

        cvDestroyAllWindows();
        cvReleaseImage(&src);
        cvReleaseImage(&src_gray);
        cvReleaseImage(&dst);
        cvReleaseImage(&dst_gray);
        cvReleaseHaarClassifierCascade(&faceCascade);
        flandmark_free(model);

        return 0;
    }

Alignment result: the first image is aligned to the second image, and the third image is the aligned output.
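
The transform is fitted to only three of the detected landmarks, so one rough way to judge an alignment is to map all source landmarks through the 2x3 matrix and measure how far they land from the corresponding target landmarks. The sketch below is an assumption-laden illustration, not part of the original program: `Point2`, `applyAffine`, and `meanAlignmentError` are hypothetical helpers, and the matrix is passed as a plain `double[2][3]` array in the same row layout as the 2x3 affine matrix.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point2 {
    double x;
    double y;
};

// Apply a row-major 2x3 affine matrix [a b c; d e f] to a point.
Point2 applyAffine(const double m[2][3], Point2 p) {
    Point2 q = {m[0][0] * p.x + m[0][1] * p.y + m[0][2],
                m[1][0] * p.x + m[1][1] * p.y + m[1][2]};
    return q;
}

// Mean Euclidean distance between transformed source landmarks and target
// landmarks. Both arrays use the flat layout [x0, y0, x1, y1, ...].
double meanAlignmentError(const double m[2][3],
                          const std::vector<double>& src,
                          const std::vector<double>& dst) {
    assert(!src.empty() && src.size() == dst.size() && src.size() % 2 == 0);
    const std::size_t n = src.size() / 2;
    double sum = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        Point2 p = {src[2 * i], src[2 * i + 1]};
        Point2 q = applyAffine(m, p);
        sum += std::hypot(q.x - dst[2 * i], q.y - dst[2 * i + 1]);
    }
    return sum / static_cast<double>(n);
}
```

In the program above, the matrix entries would presumably be copied out of `warp_mat` first (as far as I know, `getAffineTransform` returns a 2x3 matrix of doubles, readable with `warp_mat.at<double>(r, c)`). The three landmarks used for fitting should give an error near zero; the remaining ones show how well the rest of the face lines up.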