Installing a Kubernetes (K8s) Cluster from Binaries: A From-Scratch Tutorial

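I. Introduction to K8s

1. K8s overview

(1) With Kubernetes you can respond to customer demand quickly and efficiently:

- Scale applications dynamically.

- Roll out new features seamlessly.

- Optimize hardware usage by consuming only the resources you need.

(2) Kubernetes is:

- Lean: lightweight, simple, and easy to pick up

- Portable: public, private, hybrid, and multi-cloud

- Extensible: modular, pluggable, hookable, composable

- Self-healing: auto-placement, auto-restart, auto-replication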

2. Download links

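II. Lab environment

SELinux and iptables are disabled on all hosts.

1. Installation notes

Note: the comments in the configuration files below only explain what each option does. Remove them before installing, otherwise the services report errors. Configuration files are also not compatible across versions!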

| Hostname | IP | OS version | Installed services | Role |
|---|---|---|---|---|
| server1 | 10.10.10.1 | rhel7.3 | docker, etcd, api-server, scheduler, controller-manager, kubelet, flannel, docker-compose, harbor | Cluster Master: service environment control modules, API gateway control modules, platform management console modules |
| server2 | 10.10.10.2 | rhel7.3 | docker, etcd, kubelet, proxy, flannel | Worker node: deploys and runs containerized services |
| server3 | 10.10.10.3 | rhel7.3 | docker, etcd, kubelet, proxy, flannel | Worker node: deploys and runs containerized services |

# cat /etc/hosts

10.10.10.1 server1

10.10.10.2 server2

10.10.10.3 server3

2. Turn off the swap partition (on all 3 hosts):

swapoff -a

The base environment is now ready. Next we install the K8s components.
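`swapoff -a` only lasts until the next reboot. A minimal sketch of making it persistent, assuming swap is declared in /etc/fstab: the helper below (the name `comment_out_swap` is made up for this example) reads fstab-formatted text on stdin and prints it with the active swap entries commented out, so the result can be reviewed before it replaces the real file.

```shell
# Comment out every active swap entry in fstab-formatted text read from stdin.
# Lines that are already comments are passed through unchanged.
comment_out_swap() {
  awk '
    /^[[:space:]]*#/ { print; next }          # existing comment: keep as is
    $3 == "swap"     { print "#" $0; next }   # third fstab field is the filesystem type
    { print }
  '
}

# Example (not executed here): write a preview next to the real file.
#   comment_out_swap < /etc/fstab > /tmp/fstab.noswap
```

Writing to a preview file first keeps a mistake in the filter from leaving the host unbootable.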

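III. Install Docker (on all 3 hosts)

K8s in this setup runs on top of Docker. Docker version used: docker-ce-18.03.1.ce-1.el7

1. If Docker was installed previously, remove it first:

yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-selinux docker-engine-selinux docker-engine

Note: if your system is not 7.3 (for example 7.0), switch to a 7.3 yum repository, because the required dependency versions are too new for the older release and the install fails!

2. Install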

# ls /mnt

container-selinux-2.21-1.el7.noarch.rpm

libsemanage-python-2.5-8.el7.x86_64.rpm

docker-ce-18.03.1.ce-1.el7.centos.x86_64.rpm

pigz-2.3.4-1.el7.x86_64.rpm

docker-engine-1.8.2-1.el7.rpm

policycoreutils-2.5-17.1.el7.x86_64.rpm

libsemanage-2.5-8.el7.x86_64.rpm

policycoreutils-python-2.5-17.1.el7.x86_64.rpm

# yum install -y /mnt/*

# systemctl enable docker

# systemctl start docker

# systemctl status docker ### a docker0 interface with address 172.17.0.1/16 has now been created

3. Test

# docker version

Client:

Version: 18.03.1-ce

API version: 1.37

Go version: go1.9.5

Git commit: 9ee9f40

Built: Thu Apr 26 07:20:16 2018

OS/Arch: linux/amd64

Experimental: false

Orchestrator: swarm

Server:

Engine:

Version: 18.03.1-ce

API version: 1.37 (minimum version 1.12)

Go version: go1.9.5

Git commit: 9ee9f40

Built: Thu Apr 26 07:23:58 2018

OS/Arch: linux/amd64

Experimental: false

4. To change the docker0 IP range:

# vim /etc/docker/daemon.json

{

"bip": "172.17.0.1/24"

}

# systemctl restart docker

# ip addr show docker0

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:23:2d:56:4b brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/24 brd 172.17.0.255 scope global docker0

valid_lft forever preferred_lft forever

IV. Install etcd (on all 3 hosts)

1. Create the installation directories

# mkdir -p /usr/local/kubernetes/{bin,config}

# vim /etc/profile ### add the following line so the binaries can be run directly

export PATH=$PATH:/usr/local/kubernetes/bin

# . /etc/profile ### or: source /etc/profile

# mkdir -p /var/lib/etcd/ ### create the WorkingDirectory used by the unit file (on all 3 hosts)

2. Unpack etcd and move the binaries into place

# tar xf /root/etcd-v3.1.7-linux-amd64.tar.gz -C /root

# mv /root/etcd-v3.1.7-linux-amd64/etcd* /usr/local/kubernetes/bin/

3. etcd systemd unit file

# vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd
EnvironmentFile=-/usr/local/kubernetes/config/etcd.conf
ExecStart=/usr/local/kubernetes/bin/etcd \
--name=${ETCD_NAME} \
--data-dir=${ETCD_DATA_DIR} \
--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS} \
--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=${ETCD_INITIAL_CLUSTER_STATE}

[Install]

WantedBy=multi-user.target

4. etcd configuration file

# cat /usr/local/kubernetes/config/etcd.conf

#[member]

ETCD_NAME="etcd01" ### change to this host's member name: etcd01, etcd02, or etcd03

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.10.10.1:2380" ### change to this host's IP

ETCD_INITIAL_CLUSTER="etcd01=http://10.10.10.1:2380,etcd02=http://10.10.10.2:2380,etcd03=http://10.10.10.3:2380" ### replace with the IPs of your cluster members

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="http://10.10.10.1:2379" ### change to this host's IP

# systemctl enable etcd

# systemctl start etcd

Note: start etcd on all 3 hosts at roughly the same time, otherwise the start fails!
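Only three values differ between the hosts: the member name and the two advertised URLs. As a sketch of that per-host substitution, the function below (the name `render_etcd_conf` is hypothetical, and the cluster list is hard-coded to the three lab IPs used in this tutorial) prints an etcd.conf for a given member name and IP, without the explanatory comments that must not end up in the real file.

```shell
# Print an etcd.conf for one member. $1 = member name, $2 = this host's IP.
# The cluster list is fixed to the three lab hosts of this tutorial.
render_etcd_conf() {
  local name="$1" ip="$2"
  local cluster="etcd01=http://10.10.10.1:2380,etcd02=http://10.10.10.2:2380,etcd03=http://10.10.10.3:2380"
  cat <<EOF
#[member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${ip}:2380"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://${ip}:2379"
EOF
}

# Example (not executed here), on server2:
#   render_etcd_conf etcd02 10.10.10.2 > /usr/local/kubernetes/config/etcd.conf
```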

5. Once all 3 hosts are installed, test the cluster:

# etcdctl cluster-health

member 2cc6b104fe5377ef is healthy: got healthy result from http://10.10.10.3:2379

member 74565e08b84745a6 is healthy: got healthy result from http://10.10.10.2:2379

member af08b45e1ab8a099 is healthy: got healthy result from http://10.10.10.1:2379

cluster is healthy

# etcdctl member list ### the cluster elects a leader on its own

2cc6b104fe5377ef: name=etcd03 peerURLs=http://10.10.10.3:2380 clientURLs=http://10.10.10.3:2379 isLeader=true

74565e08b84745a6: name=etcd02 peerURLs=http://10.10.10.2:2380 clientURLs=http://10.10.10.2:2379 isLeader=false

af08b45e1ab8a099: name=etcd01 peerURLs=http://10.10.10.1:2380 clientURLs=http://10.10.10.1:2379 isLeader=false

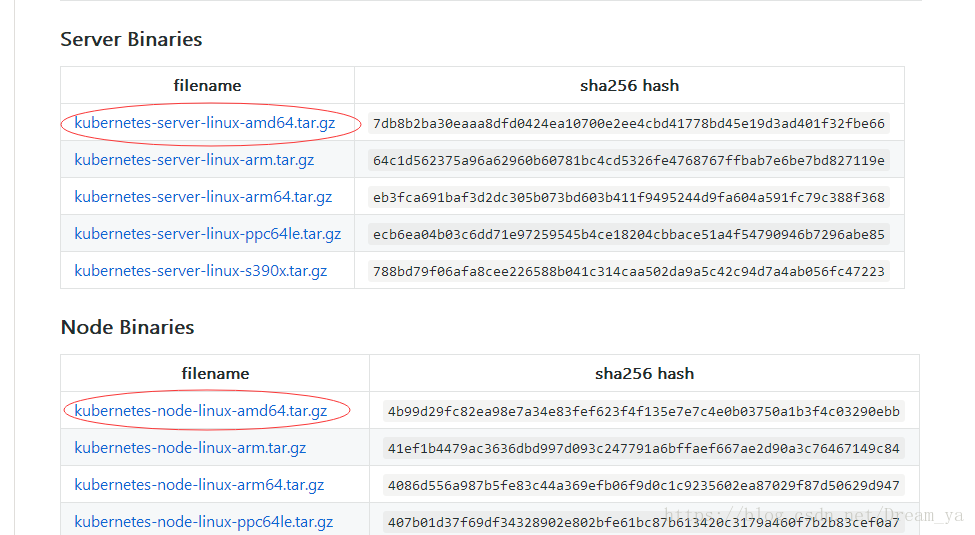
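When scripting this check, the leader can be picked out of the `etcdctl member list` output shown above. The filter below is a small sketch that assumes the v2-style line format from this tutorial (`name=...` and `isLeader=true` fields); the function name `etcd_leader` is made up for the example.

```shell
# Read `etcdctl member list` output on stdin and print the leader's member name.
etcd_leader() {
  awk '/isLeader=true/ {
    for (i = 1; i <= NF; i++)
      if ($i ~ /^name=/) print substr($i, 6)   # drop the 5-character "name=" prefix
  }'
}

# Example (not executed here):
#   etcdctl member list | etcd_leader
```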

V. Install the Master components

1. Move the binaries into the bin directory

# tar xf /root/kubernetes-server-linux-amd64.tar.gz -C /root

# mv /root/kubernetes/server/bin/{kube-apiserver,kube-scheduler,kube-controller-manager,kubectl,kubelet} /usr/local/kubernetes/bin

2. Install the apiserver

(1) apiserver configuration file

# vim /usr/local/kubernetes/config/kube-apiserver

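# Log to standard error

KUBE_LOGTOSTDERR="--logtostderr=true"

# Log verbosity

KUBE_LOG_LEVEL="--v=4"

# etcd endpoint

KUBE_ETCD_SERVERS="--etcd-servers=http://10.10.10.1:2379"

# Address the API server listens on

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

# Port the API server listens on

KUBE_API_PORT="--insecure-port=8080"

# API address advertised to cluster members

KUBE_ADVERTISE_ADDR="--advertise-address=10.10.10.1"

# Whether containers may request privileged mode (default: false)

KUBE_ALLOW_PRIV="--allow-privileged=false"

# IP range assigned to cluster Services; you may choose it freely, but the DNS address configured later for kubelet (--cluster-dns) must fall inside it

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.0.0.0/24"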

(2) apiserver systemd unit file

# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kube-apiserver

ExecStart=/usr/local/kubernetes/bin/kube-apiserver \

${KUBE_LOGTOSTDERR} \

${KUBE_LOG_LEVEL} \

${KUBE_ETCD_SERVERS} \

${KUBE_API_ADDRESS} \

${KUBE_API_PORT} \

${KUBE_ADVERTISE_ADDR} \

${KUBE_ALLOW_PRIV} \

${KUBE_SERVICE_ADDRESSES}

Restart=on-failure

[Install]

WantedBy=multi-user.target

# systemctl enable kube-apiserver

# systemctl start kube-apiserver

3. Install the scheduler

(1) scheduler configuration file

# vim /usr/local/kubernetes/config/kube-scheduler

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=4"

KUBE_MASTER="--master=10.10.10.1:8080"

KUBE_LEADER_ELECT="--leader-elect"

(2) scheduler systemd unit file

# vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kube-scheduler

ExecStart=/usr/local/kubernetes/bin/kube-scheduler \

${KUBE_LOGTOSTDERR} \

${KUBE_LOG_LEVEL} \

${KUBE_MASTER} \

${KUBE_LEADER_ELECT}

Restart=on-failure

[Install]

WantedBy=multi-user.target

# systemctl enable kube-scheduler

# systemctl start kube-scheduler

4. Install the controller-manager

(1) controller-manager configuration file

# vim /usr/local/kubernetes/config/kube-controller-manager

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=4"

KUBE_MASTER="--master=10.10.10.1:8080"

KUBE_LEADER_ELECT="--leader-elect"

(2) controller-manager systemd unit file

# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kube-controller-manager

ExecStart=/usr/local/kubernetes/bin/kube-controller-manager \

${KUBE_LOGTOSTDERR} \

${KUBE_LOG_LEVEL} \

${KUBE_MASTER} \

${KUBE_LEADER_ELECT}

Restart=on-failure

[Install]

WantedBy=multi-user.target

# systemctl enable kube-controller-manager

# systemctl start kube-controller-manager

5. Install kubelet

With kubelet installed on the Master, kubectl get nodes lists the Master as well. This step is optional.

(1) kubeconfig file

# vim /usr/local/kubernetes/config/kubelet.kubeconfig

apiVersion: v1
kind: Config
clusters:
- cluster:
    server: http://10.10.10.1:8080 ### the Master's IP, i.e. this host's own IP
  name: local
contexts:
- context:
    cluster: local
  name: local
current-context: local

(2) kubelet configuration file

# vim /usr/local/kubernetes/config/kubelet

# Log to standard error

KUBE_LOGTOSTDERR="--logtostderr=true"

# Log verbosity

KUBE_LOG_LEVEL="--v=4"

# IP address the kubelet serves on

NODE_ADDRESS="--address=10.10.10.1"

# Port the kubelet serves on

NODE_PORT="--port=10250"

# Custom node name

NODE_HOSTNAME="--hostname-override=10.10.10.1"

# Path to the kubeconfig that tells kubelet how to reach the API server

KUBELET_KUBECONFIG="--kubeconfig=/usr/local/kubernetes/config/kubelet.kubeconfig"

# Whether containers may request privileged mode (default: false)

KUBE_ALLOW_PRIV="--allow-privileged=false"

# DNS settings: the cluster DNS IP and domain

KUBELET_DNS_IP="--cluster-dns=10.0.0.2"

KUBELET_DNS_DOMAIN="--cluster-domain=cluster.local"

# Do not refuse to start when swap is enabled

KUBELET_SWAP="--fail-swap-on=false"

(3) kubelet systemd unit file

# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kubelet

ExecStart=/usr/local/kubernetes/bin/kubelet \

${KUBE_LOGTOSTDERR} \

${KUBE_LOG_LEVEL} \

${NODE_ADDRESS} \

${NODE_PORT} \

${NODE_HOSTNAME} \

${KUBELET_KUBECONFIG} \

${KUBE_ALLOW_PRIV} \

${KUBELET_DNS_IP} \

${KUBELET_DNS_DOMAIN} \

${KUBELET_SWAP}

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

(4) Start the service and enable it at boot:

# swapoff -a ### turn swap off before starting

# systemctl enable kubelet

# systemctl start kubelet

Note: start etcd first, then the apiserver; the remaining services can be started in any order!
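The start order from the note can be captured in a small loop. This is only a sketch: the function name `start_master_services` and the `RUN` override are inventions for this example, and the order chosen for the services after kube-apiserver is arbitrary, since the note says it does not matter. Setting `RUN=echo` gives a dry run that prints the commands instead of calling systemctl.

```shell
# Start the Master services with etcd first and kube-apiserver second.
# RUN defaults to systemctl; use RUN=echo for a dry run that only prints.
start_master_services() {
  local run="${RUN:-systemctl}"
  local svc
  for svc in etcd kube-apiserver kube-scheduler kube-controller-manager kubelet; do
    "$run" start "$svc" || return 1   # stop at the first service that fails to start
  done
}

# Example (not executed here):
#   RUN=echo start_master_services   # preview
#   start_master_services            # real start, as root
```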

VI. Install the nodes (server2 and server3)

1. Move the binaries into the bin directory

# tar xf /root/kubernetes-node-linux-amd64.tar.gz -C /root

# mv /root/kubernetes/node/bin/{kubelet,kube-proxy} /usr/local/kubernetes/bin/

2. Install kubelet

(1) kubeconfig file

Note: kubelet uses the kubeconfig file to connect to the apiserver on the Master.

# vim /usr/local/kubernetes/config/kubelet.kubeconfig

apiVersion: v1
kind: Config
clusters:
- cluster:
    server: http://10.10.10.1:8080
  name: local
contexts:
- context:
    cluster: local
  name: local
current-context: local

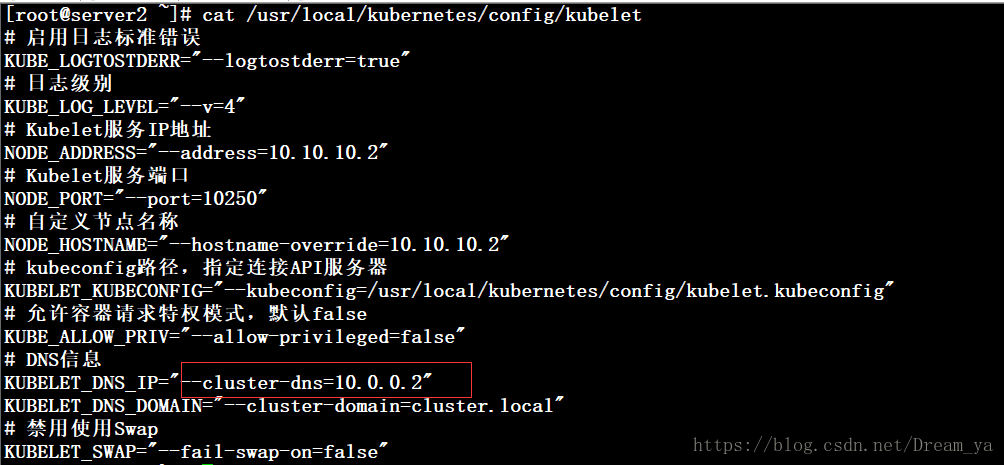

(2) kubelet configuration file

# cat /usr/local/kubernetes/config/kubelet

# Log to standard error

KUBE_LOGTOSTDERR="--logtostderr=true"

# Log verbosity

KUBE_LOG_LEVEL="--v=4"

# IP address the kubelet serves on (this node's own IP)

NODE_ADDRESS="--address=10.10.10.2"

# Port the kubelet serves on

NODE_PORT="--port=10250"

# Custom node name (this node's own IP)

NODE_HOSTNAME="--hostname-override=10.10.10.2"

# Path to the kubeconfig that tells kubelet how to reach the API server

KUBELET_KUBECONFIG="--kubeconfig=/usr/local/kubernetes/config/kubelet.kubeconfig"

# Whether containers may request privileged mode (default: false)

KUBE_ALLOW_PRIV="--allow-privileged=false"

# DNS settings; the IP must lie in the Service address range configured on the apiserver

KUBELET_DNS_IP="--cluster-dns=10.0.0.2"

KUBELET_DNS_DOMAIN="--cluster-domain=cluster.local"

# Do not refuse to start when swap is enabled

KUBELET_SWAP="--fail-swap-on=false"

(3) kubelet systemd unit file

# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kubelet

ExecStart=/usr/local/kubernetes/bin/kubelet \

${KUBE_LOGTOSTDERR} \

${KUBE_LOG_LEVEL} \

${NODE_ADDRESS} \

${NODE_PORT} \

${NODE_HOSTNAME} \

${KUBELET_KUBECONFIG} \

${KUBE_ALLOW_PRIV} \

${KUBELET_DNS_IP} \

${KUBELET_DNS_DOMAIN} \

${KUBELET_SWAP}

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

(4) Start the service and enable it at boot:

# swapoff -a ### turn swap off before starting

# systemctl enable kubelet

# systemctl start kubelet

3. Install kube-proxy

(1) proxy configuration file

# cat /usr/local/kubernetes/config/kube-proxy

# Log to standard error

KUBE_LOGTOSTDERR="--logtostderr=true"

# Log verbosity

KUBE_LOG_LEVEL="--v=4"

# Custom node name (this node's own IP)

NODE_HOSTNAME="--hostname-override=10.10.10.2"

# API server address (the Master's IP)

KUBE_MASTER="--master=http://10.10.10.1:8080"

(2) proxy systemd unit file

# cat /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kube-proxy

ExecStart=/usr/local/kubernetes/bin/kube-proxy \

${KUBE_LOGTOSTDERR} \

${KUBE_LOG_LEVEL} \

${NODE_HOSTNAME} \

${KUBE_MASTER}

Restart=on-failure

[Install]

WantedBy=multi-user.target

# systemctl enable kube-proxy

# systemctl restart kube-proxy

VII. Install flannel (on all 3 hosts)

1. Move the binaries into the bin directory

# tar xf /root/flannel-v0.7.1-linux-amd64.tar.gz -C /root

# mv /root/{flanneld,mk-docker-opts.sh} /usr/local/kubernetes/bin/

2. flannel configuration file

# vim /usr/local/kubernetes/config/flanneld

# Flanneld configuration options

# etcd URL. Point this to the server where etcd runs (this host's own IP)

FLANNEL_ETCD="http://10.10.10.1:2379"

# etcd config key. This is the configuration key that flannel queries

# for address range assignment (the etcd key directory)

FLANNEL_ETCD_KEY="/atomic.io/network"

# Any additional options that you want to pass (set --iface to your own NIC name)

FLANNEL_OPTIONS="--iface=eth0"

3. flannel systemd unit file

# vim /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/usr/local/kubernetes/config/flanneld

EnvironmentFile=-/etc/sysconfig/docker-network

ExecStart=/usr/local/kubernetes/bin/flanneld -etcd-endpoints=${FLANNEL_ETCD} -etcd-prefix=${FLANNEL_ETCD_KEY} $FLANNEL_OPTIONS

ExecStartPost=/usr/local/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker

Restart=on-failure

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

4. Set the etcd key

Note: set this on one host only; etcd replicates it to the others!

# etcdctl mkdir /atomic.io/network

### The network below must be in the same range as Docker's own IP addresses

# etcdctl mk /atomic.io/network/config "{ \"Network\": \"172.17.0.0/16\", \"SubnetLen\": 24, \"Backend\": { \"Type\": \"vxlan\" } }"

{ "Network": "172.17.0.0/16", "SubnetLen": 24, "Backend": { "Type": "vxlan" } }

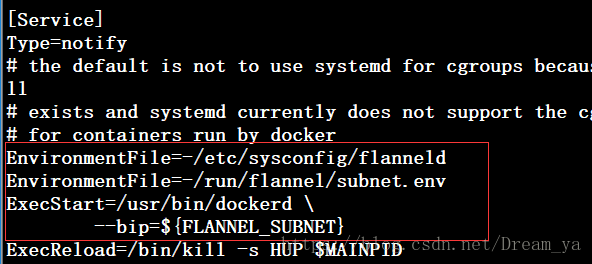
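The two numbers in that JSON determine how many hosts and containers the overlay can hold. As a rough worked example (the helper name `flannel_capacity` is made up here), a /16 network carved into /24 subnets yields at most 2^(24-16) = 256 per-host subnets, each with 2^(32-24) = 256 addresses. These are upper bounds, since flannel and docker0 reserve some of them.

```shell
# Print the upper bounds implied by a flannel Network prefix length ($1)
# and SubnetLen ($2): how many per-host subnets, and addresses in each.
flannel_capacity() {
  local net_len="$1" subnet_len="$2"
  echo "subnets=$(( 1 << (subnet_len - net_len) )) addresses_per_subnet=$(( 1 << (32 - subnet_len) ))"
}

# Example: the values used in this tutorial.
#   flannel_capacity 16 24
```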

5. Configure Docker

Docker has to take its bridge subnet from flannel, so add EnvironmentFile=-/etc/sysconfig/flanneld, EnvironmentFile=-/run/flannel/subnet.env, and --bip=${FLANNEL_SUBNET} to its unit file:

# vim /usr/lib/systemd/system/docker.service
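The tutorial edits docker.service directly. An alternative, shown here only as a sketch, is a systemd drop-in that leaves the packaged unit untouched. The function name `render_docker_flannel_dropin`, the drop-in file name, and the /usr/bin/dockerd path are assumptions (check `systemctl cat docker` for the real ExecStart on your host). Adding --mtu from FLANNEL_MTU goes slightly beyond the text above and relies on flannel writing that variable to subnet.env.

```shell
# Print a systemd drop-in that makes dockerd use the subnet flannel assigned.
# The quoted heredoc keeps ${FLANNEL_SUBNET} literal so systemd expands it,
# not the shell.
render_docker_flannel_dropin() {
  cat <<'EOF'
[Service]
EnvironmentFile=-/run/flannel/subnet.env
ExecStart=
ExecStart=/usr/bin/dockerd --bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}
EOF
}

# Example (not executed here):
#   mkdir -p /etc/systemd/system/docker.service.d
#   render_docker_flannel_dropin > /etc/systemd/system/docker.service.d/flannel.conf
```

The empty `ExecStart=` line clears the packaged command before the new one is set. If /etc/docker/daemon.json still contains the "bip" key from section III, remove it first: dockerd refuses to start when the same option is given both as a flag and in the configuration file.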

6. Start flannel and Docker

# systemctl daemon-reload

# systemctl enable flanneld.service

# systemctl restart flanneld.service

# systemctl restart docker.service

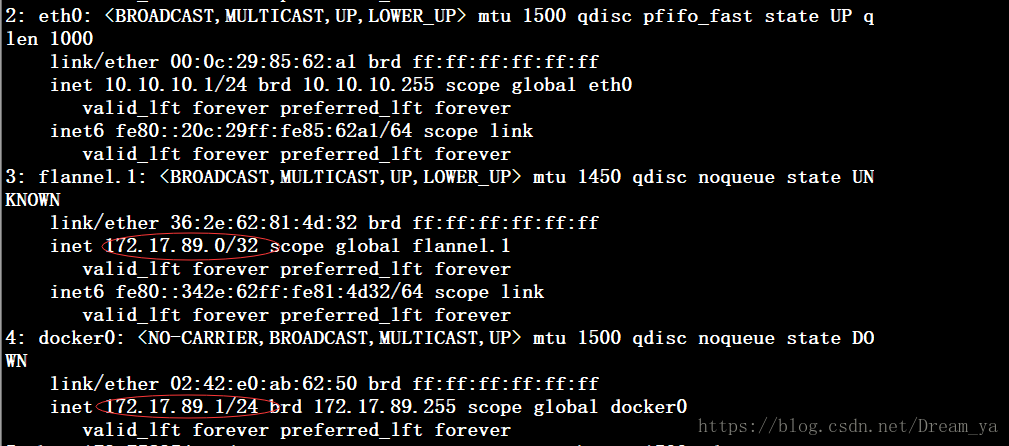

7. Test flannel

ip addr ### flannel is working when the flannel interface and docker0 have addresses in the same network

8. Test the cluster

If kubelet is not installed on the Master, kubectl get nodes does not list the Master!

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.10.10.1 Ready <none> 1d v1.8.13

10.10.10.2 Ready <none> 1d v1.8.13

10.10.10.3 Ready <none> 1d v1.8.13

# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health": "true"}
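For a scripted version of this check, the node table can be filtered for anything that is not plainly Ready. A minimal sketch, assuming the column layout shown above (the function name `not_ready_nodes` is made up); note that a cordoned node, whose status reads "Ready,SchedulingDisabled", is also reported.

```shell
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'   # NR > 1 skips the header row
}

# Example (not executed here):
#   kubectl get nodes | not_ready_nodes
```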

That completes a basic K8s cluster. The following sections cover installing Harbor and the add-ons.

VIII. Install Harbor

I use Harbor to manage my image registry; I will not introduce it in detail here.

Note: if you only use local images you can skip Harbor, but the busybox image must be installed locally, and the YAML must set imagePullPolicy: Never or imagePullPolicy: IfNotPresent at the same level as image. For images tagged :latest (or with no tag) the default policy is Always, which pulls the image over the network every time.

1. Install docker-compose

(1) Download

https://github.com/docker/compose/releases/ ### official download page

# wget https://github.com/docker/compose/releases/download/1.22.0/docker-compose-Linux-x86_64

(2) Install

# cp docker-compose-Linux-x86_64 /usr/local/kubernetes/bin/docker-compose

# chmod +x /usr/local/kubernetes/bin/docker-compose

(3) Check the version

# docker-compose --version

docker-compose version 1.22.0, build f46880fe

2. Install Harbor

(1) Download

https://github.com/vmware/harbor/releases#install ### download page

# wget http://harbor.orientsoft.cn/harbor-v1.5.0/harbor-offline-installer-v1.5.0.tgz

(2) Unpack the tarball

# tar xf harbor-offline-installer-v1.5.0.tgz -C /usr/local/kubernetes/

(3) Configure harbor.cfg

# grep -v "^#" /usr/local/kubernetes/harbor/harbor.cfg

_version = 1.5.0

### change hostname to this host's IP

hostname = 10.10.10.1

ui_url_protocol = http

max_job_workers = 50

customize_crt = on

ssl_cert = /data/cert/server.crt

ssl_cert_key = /data/cert/server.key

secretkey_path = /data

admiral_url = NA

log_rotate_count = 50

log_rotate_size = 200M

http_proxy =

https_proxy =

no_proxy = 127.0.0.1,localhost,ui

email_identity =

email_server = smtp.mydomain.com

email_server_port = 25

email_username = sample_admin@mydomain.com

email_password = abc

email_from = admin <sample_admin@mydomain.com>

email_ssl = false

email_insecure = false

harbor_admin_password = Harbor12345

auth_mode = db_auth

ldap_url = ldaps://ldap.mydomain.com

ldap_basedn = ou=people,dc=mydomain,dc=com

ldap_uid = uid

ldap_scope = 2

ldap_timeout = 5

ldap_verify_cert = true

ldap_group_basedn = ou=group,dc=mydomain,dc=com

ldap_group_filter = objectclass=group

ldap_group_gid = cn

ldap_group_scope = 2

self_registration = on

token_expiration = 30

project_creation_restriction = everyone

db_host = mysql

db_password = root123

db_port = 3306

db_user = root

redis_url =

clair_db_host = postgres

clair_db_password = password

clair_db_port = 5432

clair_db_username = postgres

clair_db = postgres

uaa_endpoint = uaa.mydomain.org

uaa_clientid = id

uaa_clientsecret = secret

uaa_verify_cert = true

uaa_ca_cert = /path/to/ca.pem

registry_storage_provider_name = filesystem

registry_storage_provider_config =

(4)安裝

[[email protected] ~]# cd /usr/local/kubernetes/harbor/ ###一定要進入此目錄,日誌放在/var/log/harbor/

[[email protected] harbor]# ./prepare

[[email protected] harbor]# ./install.sh

(5)檢視生成的映象

[[email protected] harbor]# docker ps ###狀態為up

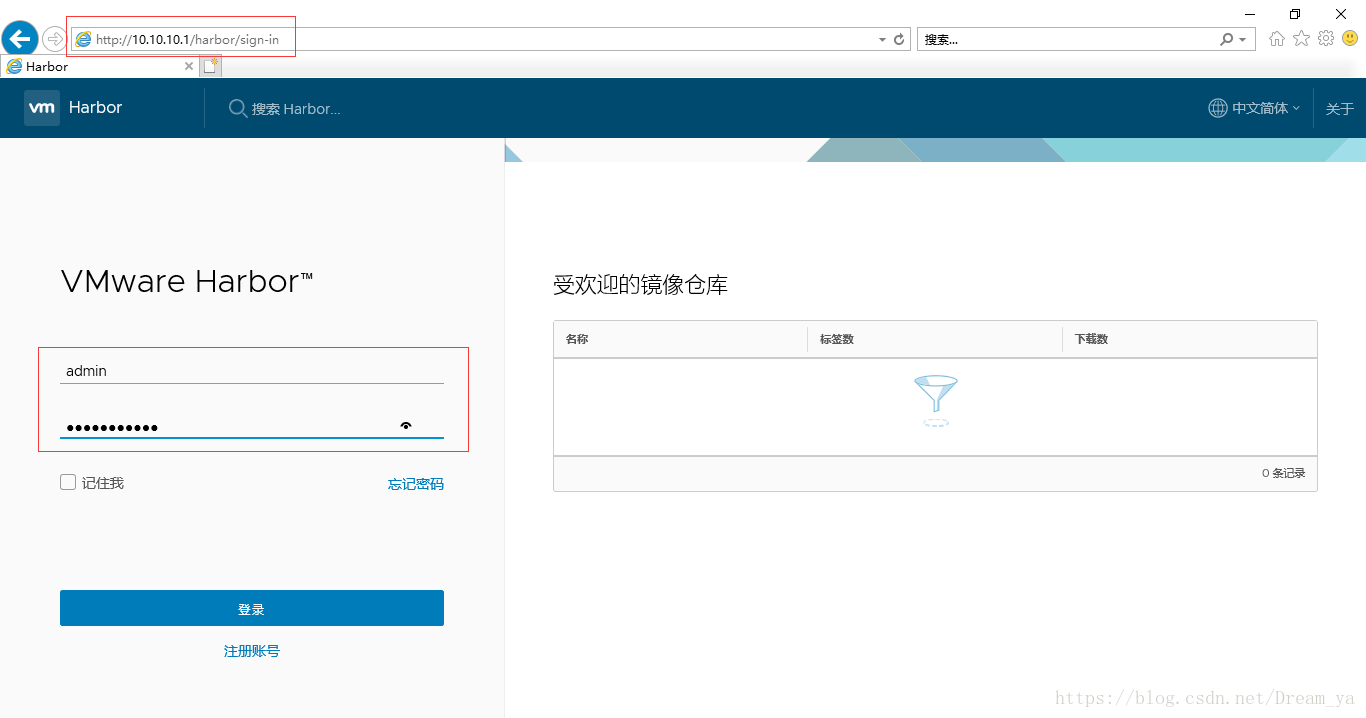

此時你也可以通過瀏覽器輸入http:10.10.10.1(Master的IP)進行登陸,預設賬號:admin預設密碼:Harbor12345!!!

(6)加入認證

<1> server1(Mster)

[[email protected] harbor]# vim /etc/sysconfig/docker

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --insecure-registry=10.10.10.1'

<2> 三臺都加入

[[email protected] ~]# vim /etc/docker/daemon.json

{

"insecure-registries": [

"10.10.10.1"

]

}

(7) Test

<1> Log in from the shell

# docker login 10.10.10.1 ### account: admin, password: Harbor12345

Username: admin

Password:

Login Succeeded

Once the login succeeds, the Harbor registry is ready to use.

<2> Log in from a browser

(8) Login error:

<1> Error:

# docker login 10.10.10.1

Error response from daemon: Get http://10.10.10.1/v2/: unauthorized: authentication required

<2> Fix:

Add the registry to Docker's insecure registries as described in step (6), or check whether the password was mistyped.

(9) Basic Harbor operations

<1> Take Harbor offline

# cd /usr/local/kubernetes/harbor/

# docker-compose down -v ### or: docker-compose stop

<2> Change the configuration

Edit harbor.cfg and docker-compose.yml.

<3> Redeploy and bring Harbor back online

# cd /usr/local/kubernetes/harbor/

# ./prepare

# docker-compose up -d ### or: docker-compose start

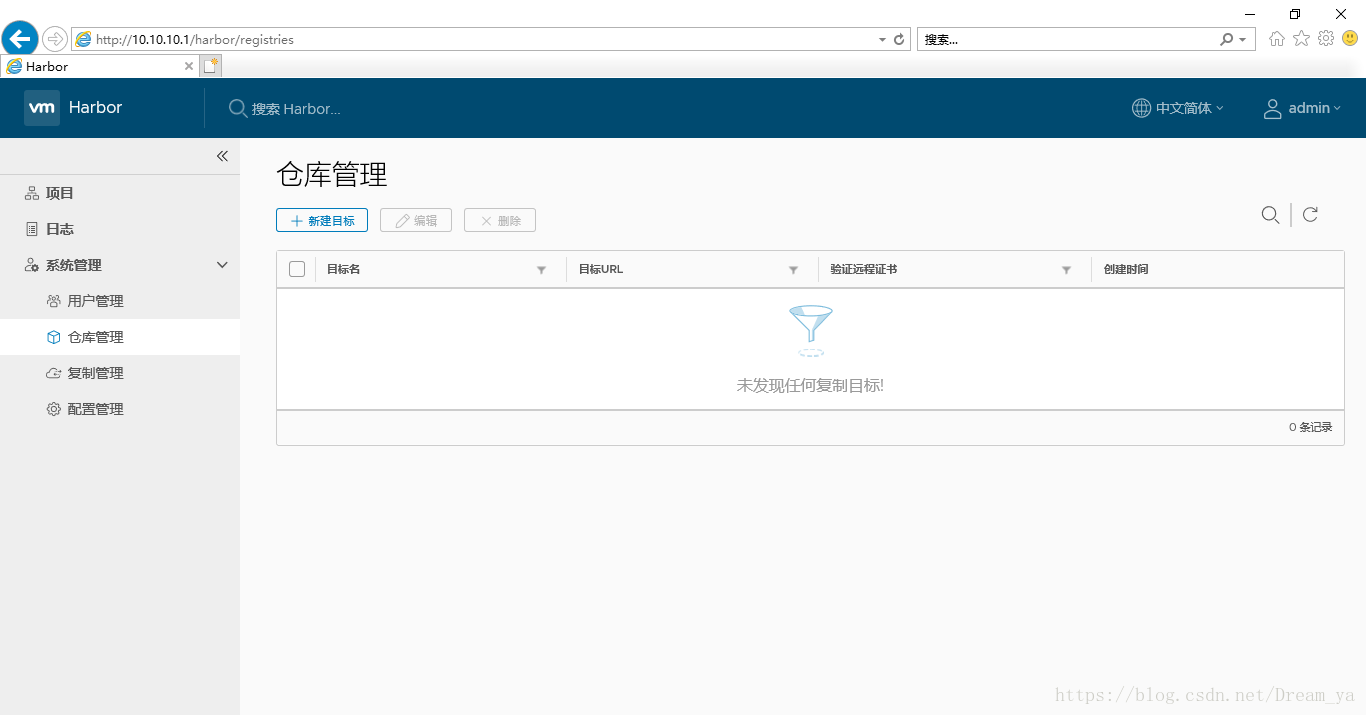

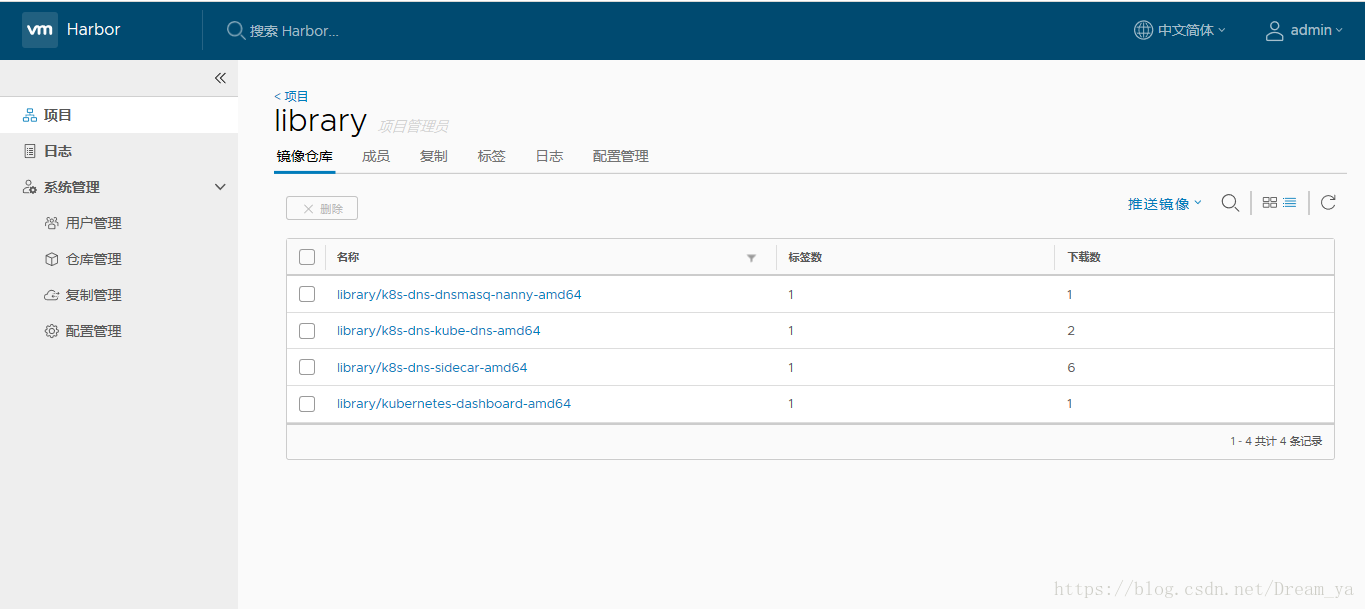

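3. Using Harbor

After logging in from the browser you will find a default project named library. You can also create your own projects; here we use library.

(1) Prepare the images

### Tag the images for Harbor and remove the original tags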

docker tag gcr.io/google_containers/kubernetes-dashboard-amd64:v1.8.3 10.10.10.1/library/kubernetes-dashboard-amd64:v1.8.3

docker rmi gcr.io/google_containers/kubernetes-dashboard-amd64:v1.8.3

docker tag gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7 10.10.10.1/library/k8s-dns-sidecar-amd64:1.14.7

docker rmi gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7

docker tag gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7 10.10.10.1/library/k8s-dns-kube-dns-amd64:1.14.7

docker rmi gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7

docker tag gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7 10.10.10.1/library/k8s-dns-dnsmasq-nanny-amd64:1.14.7

docker rmi gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7

### Push to Harbor

docker push 10.10.10.1/library/kubernetes-dashboard-amd64:v1.8.3

docker push 10.10.10.1/library/k8s-dns-sidecar-amd64:1.14.7

docker push 10.10.10.1/library/k8s-dns-kube-dns-amd64:1.14.7

docker push 10.10.10.1/library/k8s-dns-dnsmasq-nanny-amd64:1.14.7
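The tag, remove, and push steps follow one pattern per image, so they can be generated rather than typed. The sketch below (the function name `mirror_commands` is hypothetical) only prints the docker commands for review; it assumes the target project is library, as in this tutorial, and emits the rmi after the push rather than before it.

```shell
# Print the docker commands that retag each source image into
# <registry>/library/<name:tag>, push it, and drop the original tag.
# $1 = registry host; remaining arguments = source image references.
mirror_commands() {
  local registry="$1"
  shift
  local src name
  for src in "$@"; do
    name="${src##*/}"   # keep only the part after the last slash, e.g. k8s-dns-sidecar-amd64:1.14.7
    echo "docker tag ${src} ${registry}/library/${name}"
    echo "docker push ${registry}/library/${name}"
    echo "docker rmi ${src}"
  done
}

# Example (not executed here): review the output, then pipe it to sh.
#   mirror_commands 10.10.10.1 gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7
```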

(2) Verify in the browser

IX. Install kube-dns

(1) We need these files:

kubedns-sa.yaml

kubedns-svc.yaml.base

kubedns-cm.yaml

kubedns-controller.yaml.base

# cd /data && unzip kubernetes-release-1.8.zip

# cd /data/kubernetes-release-1.8/cluster/addons/dns

# cp {kubedns-svc.yaml.base,kubedns-cm.yaml,kubedns-controller.yaml.base,kubedns-sa.yaml} /root

(2) Look up the cluster DNS IP (the --cluster-dns value)

# cat /usr/local/kubernetes/config/kubelet
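The DNS address read from the kubelet config has to lie inside the apiserver's --service-cluster-ip-range, otherwise the kube-dns Service cannot be given that clusterIP. A small sketch of that check in plain shell arithmetic (both function names are made up for this example, and only IPv4 is handled):

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit status 0) when IPv4 address $1 lies inside CIDR block $2.
ip_in_cidr() {
  local ip="$1" net="${2%/*}" len="${2#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Example with the values used in this tutorial:
#   ip_in_cidr 10.0.0.2 10.0.0.0/24 && echo "cluster-dns is inside the Service range"
```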

(3) The 4 YAML files can be merged into the following kube-dns.yaml

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "KubeDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.0.0.2 ### must be the DNS address configured for kubelet (--cluster-dns)
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  strategy:
    rollingUpdate:
      maxSurge: 10%
      maxUnavailable: 0
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      volumes:
      - name: kube-dns-config
        configMap:
          name: kube-dns
          optional: true
      #imagePullSecrets:
      #- name: registrykey-aliyun-vpc
      containers:
      - name: kubedns
        image: 10.10.10.1/library/k8s-dns-kube-dns-amd64:1.14.7
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        livenessProbe:
          httpGet:
            path: /healthcheck/kubedns
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /readiness
            port: 8081
            scheme: HTTP
          # we poll on pod startup for the Kubernetes master service and
          # only setup the /readiness HTTP server once that's available.
          initialDelaySeconds: 3
          timeoutSeconds: 5
        args:
        - --domain=cluster.local
        - --dns-port=10053
        - --config-dir=/kube-dns-config
        - --kube-master-url=http://10.10.10.1:8080 ### change to your cluster's API server address
        - --v=2
        env:
        - name: PROMETHEUS_PORT
          value: "10055"
        ports:
        - containerPort: 10053
          name: dns-local
          protocol: UDP
        - containerPort: 10053
          name: dns-tcp-local
          protocol: TCP
        - containerPort: 10055
          name: metrics
          protocol: TCP
        volumeMounts:
        - name: kube-dns-config
          mountPath: /kube-dns-config
      - name: dnsmasq
        image: 10.10.10.1/library/k8s-dns-dnsmasq-nanny-amd64:1.14.7
        livenessProbe:
          httpGet:
            path: /healthcheck/dnsmasq
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - -v=2
        - -logtostderr
        - -configDir=/etc/k8s/dns/dnsmasq-nanny
        - -restartDnsmasq=true
        - --
        - -k
        - --cache-size=1000
        - --no-negcache
        - --log-facility=-
        - --server=/cluster.local/127.0.0.1#10053
        - --server=/in-addr.arpa/127.0.0.1#10053
        - --server=/ip6.arpa/127.0.0.1#10053
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
        resources:
          requests:
            cpu: 150m
            memory: 20Mi
        volumeMounts:
        - name: kube-dns-config
          mountPath: /etc/k8s/dns/dnsmasq-nanny
      - name: sidecar
        image: 10.10.10.1/library/k8s-dns-sidecar-amd64:1.14.7
        livenessProbe:
          httpGet:
            path: /metrics
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --v=2
        - --logtostderr
        - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,SRV
        - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,SRV
        ports:
        - containerPort: 10054
          name: metrics
          protocol: TCP
        resources:
          requests:
            memory: 20Mi
            cpu: 10m
      dnsPolicy: Default # Don't use cluster DNS.

(4) Create and delete

# kubectl create -f kube-dns.yaml ### create

# kubectl delete -f kube-dns.yaml ### delete; do not run this during the installation

(5) Verify

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-dns-5855d8c4f7-sbb7x 3/3 Running 0

# kubectl describe pod -n kube-system kube-dns-5855d8c4f7-sbb7x ### if the pod reports errors, the details show up here

X. Install the dashboard

1. Write kube-dashboard.yaml

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: 10.10.10.1/library/kubernetes-dashboard-amd64:v1.8.3
        ports:
        - containerPort: 9090
          protocol: TCP
        args:
        - --apiserver-host=http://10.10.10.1:8080 ### change to the Master's IP
        volumeMounts:
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 9090
    nodePort: 30090
  selector:
    k8s-app: kubernetes-dashboard

2. Create the dashboard

# kubectl create -f kube-dashboard.yaml

3. Find the IP of the node the pod runs on

# kubectl get pods -o wide --namespace kube-system

NAME READY STATUS RESTARTS AGE IP NODE

kube-dns-5855d8c4f7-sbb7x 3/3 Running 0 1h 172.17.89.3 10.10.10.1

kubernetes-dashboard-7f8b5f54f9-gqjsh 1/1 Running 0 1h 172.17.39.2 10.10.10.3

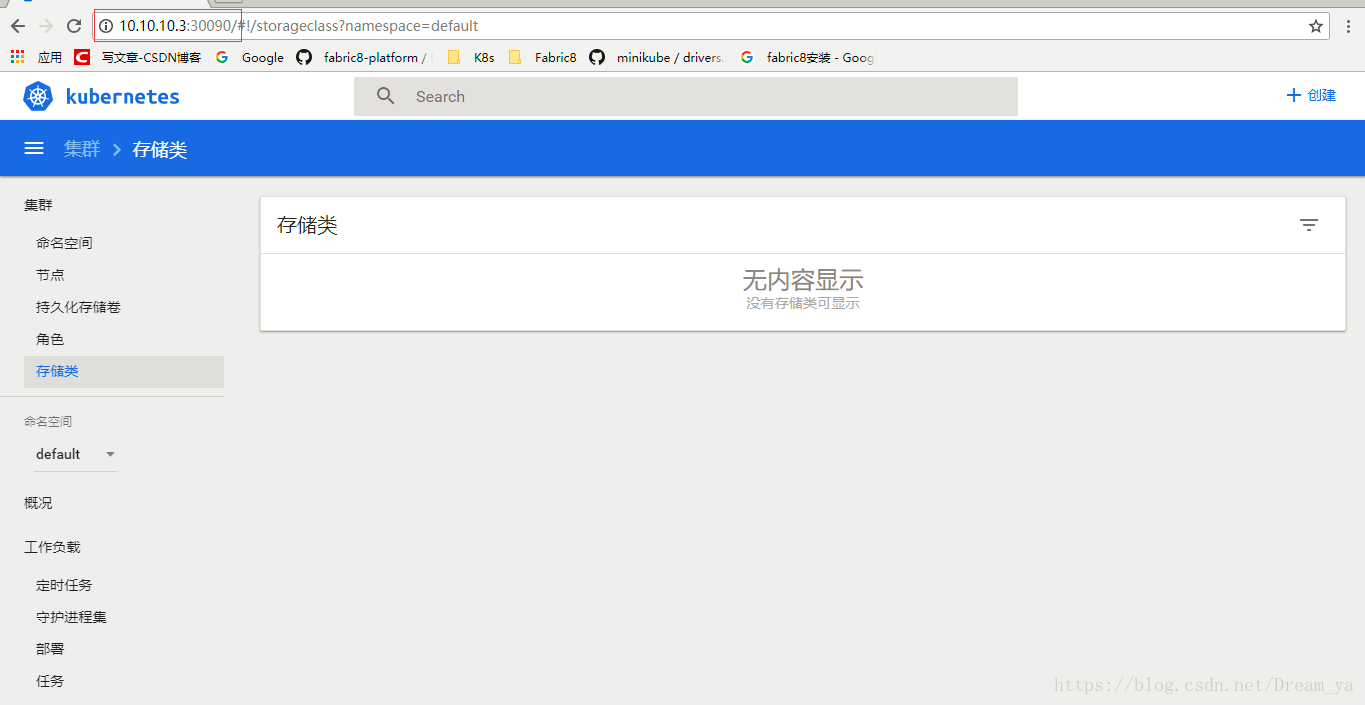

4. Test

(1) Browser

http://10.10.10.3:30090

(2) Result
