【資料平臺】Pytorch庫初識

阿新 • • 發佈:2019-02-06

PyTorch是使用GPU和CPU優化的深度學習張量庫。

1、安裝,參考官網:http://pytorch.org/

conda install pytorch torchvision -c pytorch2、認識,參考:

https://github.com/yunjey/pytorch-tutorial

https://github.com/jcjohnson/pytorch-examples

http://pytorch-cn.readthedocs.io/zh/latest/

3、demo:

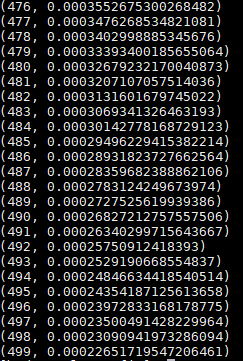

結果如下:# Code in file tensor/two_layer_net_tensor.py import torch dtype = torch.FloatTensor # dtype = torch.cuda.FloatTensor # Uncomment this to run on GPU # N is batch size; D_in is input dimension; # H is hidden dimension; D_out is output dimension. N, D_in, H, D_out = 64, 1000, 100, 10 # Create random input and output data x = torch.randn(N, D_in).type(dtype) y = torch.randn(N, D_out).type(dtype) # Randomly initialize weights w1 = torch.randn(D_in, H).type(dtype) w2 = torch.randn(H, D_out).type(dtype) learning_rate = 1e-6 for t in range(500): # Forward pass: compute predicted y h = x.mm(w1) h_relu = h.clamp(min=0) y_pred = h_relu.mm(w2) # Compute and print loss loss = (y_pred - y).pow(2).sum() print(t, loss) # Backprop to compute gradients of w1 and w2 with respect to loss grad_y_pred = 2.0 * (y_pred - y) grad_w2 = h_relu.t().mm(grad_y_pred) grad_h_relu = grad_y_pred.mm(w2.t()) grad_h = grad_h_relu.clone() grad_h[h < 0] = 0 grad_w1 = x.t().mm(grad_h) # Update weights using gradient descent w1 -= learning_rate * grad_w1 w2 -= learning_rate * grad_w2

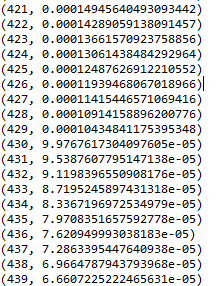

而同樣np跑出來的結果是:

程式碼如下:

# Code in file tensor/two_layer_net_numpy.py import numpy as np # N is batch size; D_in is input dimension; # H is hidden dimension; D_out is output dimension. N, D_in, H, D_out = 64, 1000, 100, 10 # Create random input and output data x = np.random.randn(N, D_in) y = np.random.randn(N, D_out) # Randomly initialize weights w1 = np.random.randn(D_in, H) w2 = np.random.randn(H, D_out) learning_rate = 1e-6 for t in range(500): # Forward pass: compute predicted y h = x.dot(w1) h_relu = np.maximum(h, 0) y_pred = h_relu.dot(w2) # Compute and print loss loss = np.square(y_pred - y).sum() print(t, loss) # Backprop to compute gradients of w1 and w2 with respect to loss grad_y_pred = 2.0 * (y_pred - y) grad_w2 = h_relu.T.dot(grad_y_pred) grad_h_relu = grad_y_pred.dot(w2.T) grad_h = grad_h_relu.copy() grad_h[h < 0] = 0 grad_w1 = x.T.dot(grad_h) # Update weights w1 -= learning_rate * grad_w1 w2 -= learning_rate * grad_w2

根據實際應用場景,後續可深入學習,重點是gpu了。