Building Chinese Paraphrase Training Data for the Pointer-Generator Networks Text Summarization Model

阿新 • Published: 2019-02-08

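The open-source pointer-generator project lives at https://github.com/abisee/pointer-generator. We want to use it for Chinese paraphrasing, so let's first look at how it handles English text summarization.

The GitHub page provides the test set output, so let's take the first article and see how well the model does: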

Here is the original article:

washington ( cnn ) president barack obama says he is `` absolutely committed to making sure '' israel maintains a military advantage over iran . his comments to the new york times , published on sunday , come amid criticism from israeli prime minister benjamin netanyahu of the deal that the united states and five other world powers struck with iran . tehran agreed to halt the country 's nuclear ambitions , and in exchange , western powers would drop sanctions that have hurt the iran 's economy . obama said he understands and respects netanyahu 's stance that israel is particularly vulnerable and does n't `` have the luxury of testing these propositions '' in the deal . `` but what i would say to them is that not only am i absolutely committed to making sure they maintain their qualitative military edge , and that they can deter any potential future attacks , but what i 'm willing to do is to make the kinds of commitments that would give everybody in the neighborhood , including iran , a clarity that if israel were to be attacked by any state , that we would stand by them , '' obama said . that , he said , should be `` sufficient to take advantage of this once-in-a-lifetime opportunity to see whether or not we can at least take the nuclear issue off the table , '' he said . the framework negotiators announced last week would see iran reduce its centrifuges from 19,000 to 5,060 , limit the extent to which uranium necessary for nuclear weapons can be enriched and increase inspections . the talks over a final draft are scheduled to continue until june 30 . but netanyahu and republican critics in congress have complained that iran wo n't have to shut down its nuclear facilities and that the country 's leadership is n't trustworthy enough for the inspections to be as valuable as obama says they are . obama said even if iran ca n't be trusted , there 's still a case to be made for the deal . `` in fact , you could argue that if they are implacably opposed to us , all the more reason for us to want to have a deal in which we know what they 're doing and that , for a long period of time , we can prevent them from having a nuclear weapon , '' obama said .

Here is the reference summary provided for this article:

1. in an interview with the new york times , president obama says he understands israel feels particularly vulnerable .
2. obama calls the nuclear deal with iran a `` once-in-a-lifetime opportunity '' .
3. israeli prime minister benjamin netanyahu and many u.s. republicans warn that iran can not be trusted .

Here is the output of the pointer-generator model:

1. president barack obama says he is `` absolutely committed to making sure '' israel maintains a military advantage over iran .
2. obama said he understands and respects netanyahu 's stance that israel is particularly vulnerable and does n't `` have the luxury of testing these propositions '' .

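As you can see, the result is quite good.

The GitHub repository also provides a pretrained model as well as the English training data, so you can train the model yourself. Next, let's look at how its training data is processed.

The page https://github.com/abisee/cnn-dailymail provides the code for processing the English data, and it also offers the already-processed data. Download the CNN Stories dataset and open one of the stories. The structure is simple: the article comes first, and the final part is the reference summary, with each summary sentence marked by @highlight. The reference summary looks like this (the article itself is too long to include):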

@highlight

A new tour in Taipei, Taiwan, allows tourists to do four-hour ride-alongs in local taxis

@highlight

Tourists go wherever local fares hire the cabs to go

@highlight

The appeal is going to unexpected locations and meeting chatty locals

@highlight

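One English tourist was invited to a Taiwanese family dinner by a passenger in his taxi

For Chinese paraphrasing, the input text files use a simpler layout: each source sentence is followed on the next line by its paraphrase, with words separated by spaces. For example:

這 並 不 奇怪
沒什麼 奇怪 的

The script below converts these text files into the .bin files and the vocab file:

    # -*- coding: utf-8 -*-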

    # io.open plus explicit UTF-8 encoding keeps the behaviour the same on
    # Python 2 and Python 3.
    import collections
    import io
    import os
    import struct

    from tensorflow.core.example import example_pb2

    # We use these to separate the summary sentences in the .bin datafiles
    SENTENCE_START = '<s>'
    SENTENCE_END = '</s>'

    train_file = "./train/train.txt"
    val_file = "./val/val.txt"
    test_file = "./test/test.txt"
    finished_files_dir = "./finished_files"

    VOCAB_SIZE = 200000


    def read_text_file(text_file):
        """Return the stripped lines of a UTF-8 text file."""
        lines = []
        with io.open(text_file, "r", encoding="utf-8") as f:
            for line in f:
                lines.append(line.strip())
        return lines


    def write_to_bin(input_file, out_file, makevocab=False):
        """Serialize the (article, abstract) pairs of input_file into out_file."""
        if makevocab:
            vocab_counter = collections.Counter()

        with open(out_file, 'wb') as writer:
            # Line numbers start at 0: each even line is an article and the
            # odd line that follows it is the matching abstract.
            lines = read_text_file(input_file)
            for i, new_line in enumerate(lines):
                if i % 2 == 0:
                    article = new_line
                    continue
                abstract = "%s %s %s" % (SENTENCE_START, new_line, SENTENCE_END)

                # Write to tf.Example (bytes_list values must be bytes)
                tf_example = example_pb2.Example()
                tf_example.features.feature['article'].bytes_list.value.extend(
                    [article.encode('utf-8')])
                tf_example.features.feature['abstract'].bytes_list.value.extend(
                    [abstract.encode('utf-8')])
                tf_example_str = tf_example.SerializeToString()

                # Each record is an 8-byte length followed by the serialized bytes
                str_len = len(tf_example_str)
                writer.write(struct.pack('q', str_len))
                writer.write(struct.pack('%ds' % str_len, tf_example_str))

                # Update the vocab counts, if applicable
                if makevocab:
                    art_tokens = article.split(' ')
                    abs_tokens = abstract.split(' ')
                    # remove the sentence tags from the vocab
                    abs_tokens = [t for t in abs_tokens
                                  if t not in [SENTENCE_START, SENTENCE_END]]
                    tokens = art_tokens + abs_tokens
                    tokens = [t.strip() for t in tokens]  # strip
                    tokens = [t for t in tokens if t != ""]  # remove empty
                    vocab_counter.update(tokens)

        print("Finished writing file %s\n" % out_file)

        # Write the vocab to file, one "word count" pair per line
        if makevocab:
            print("Writing vocab file...")
            vocab_path = os.path.join(finished_files_dir, "vocab")
            with io.open(vocab_path, 'w', encoding='utf-8') as writer:
                for word, count in vocab_counter.most_common(VOCAB_SIZE):
                    writer.write(u"%s %d\n" % (word, count))
            print("Finished writing vocab file")


    if __name__ == '__main__':
        if not os.path.exists(finished_files_dir):
            os.makedirs(finished_files_dir)

        # Read the text files and write them out as .bin files
        write_to_bin(test_file, os.path.join(finished_files_dir, "test.bin"))
        write_to_bin(val_file, os.path.join(finished_files_dir, "val.bin"))
        write_to_bin(train_file, os.path.join(finished_files_dir, "train.bin"), makevocab=True)
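To make the .bin layout easier to picture, here is a minimal sketch of the record framing the script uses: an 8-byte length packed with `struct.pack('q', ...)`, followed by that many bytes of payload. The helper names `write_records` and `read_records` are hypothetical, and plain UTF-8 byte strings stand in for the serialized tf.Example messages so that the sketch runs without TensorFlow.

```python
import io
import struct

# Size of the length header produced by struct.pack('q', ...)
LEN_SIZE = struct.calcsize('q')


def write_records(stream, payloads):
    """Write each payload as a length header followed by the raw bytes."""
    for payload in payloads:
        stream.write(struct.pack('q', len(payload)))
        stream.write(struct.pack('%ds' % len(payload), payload))


def read_records(stream):
    """Yield the payloads back from a stream written by write_records."""
    while True:
        len_bytes = stream.read(LEN_SIZE)
        if not len_bytes:
            break  # clean end of stream
        if len(len_bytes) < LEN_SIZE:
            raise ValueError("truncated length header")
        (str_len,) = struct.unpack('q', len_bytes)
        payload = stream.read(str_len)
        if len(payload) < str_len:
            raise ValueError("truncated record")
        yield payload


# Stand-in payloads; in the real files each payload is a serialized tf.Example.
sample = [u"這 並 不 奇怪".encode("utf-8"), u"沒什麼 奇怪 的".encode("utf-8")]
buffer = io.BytesIO()
write_records(buffer, sample)
buffer.seek(0)
recovered = list(read_records(buffer))
print(len(recovered))
```

Reading a file back this way is a quick sanity check that the record boundaries are intact before starting a long training run.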

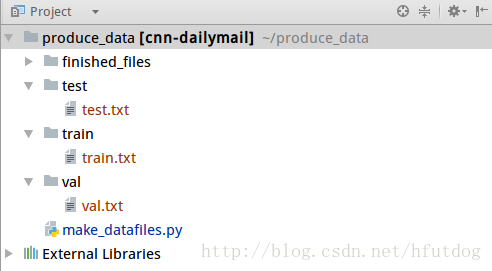

下面是我的工程目錄:

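As long as the text files follow this format and sit at the corresponding paths, the script writes the generated .bin files to the finished_files folder, and they can then be used to train pointer-generator. The output of the trained pointer-generator model is the Chinese paraphrase we are after.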
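As a rough illustration of preparing such a file, the sketch below writes paraphrase pairs as alternating lines and reads them back to confirm that the file has an even number of lines. The helpers `write_pairs` and `read_pairs` are hypothetical names, the pairs are assumed to be word-segmented already, and a temporary directory stands in for the real ./train folder.

```python
import io
import os
import tempfile


def normalize(sentence):
    """Collapse all whitespace (including newlines) into single spaces."""
    return u" ".join(sentence.split())


def write_pairs(pairs, path):
    """Write (source, paraphrase) pairs as alternating lines of a UTF-8 file."""
    with io.open(path, "w", encoding="utf-8") as f:
        for source, paraphrase in pairs:
            f.write(normalize(source) + u"\n")
            f.write(normalize(paraphrase) + u"\n")


def read_pairs(path):
    """Read the alternating lines back as (source, paraphrase) tuples."""
    with io.open(path, "r", encoding="utf-8") as f:
        lines = [line.strip() for line in f]
    if len(lines) % 2 != 0:
        raise ValueError("expected an even number of lines, got %d" % len(lines))
    return list(zip(lines[0::2], lines[1::2]))


# One already-segmented pair, taken from the example earlier in the post.
pairs = [(u"這 並 不 奇怪", u"沒什麼 奇怪 的")]
out_dir = tempfile.mkdtemp()
train_path = os.path.join(out_dir, "train.txt")
write_pairs(pairs, train_path)
round_trip = read_pairs(train_path)
print(len(round_trip))
```

Normalizing whitespace matters here because a stray newline inside a sentence would shift every later line and silently pair articles with the wrong abstracts.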

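That's all for this post. There isn't much to it: it simply recommends pointer-generator and shares an idea for applying a text summarization tool to Chinese paraphrasing. I'm new to natural language processing and still have a lot to learn, so feel free to get in touch.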