ffmpeg: Audio Encoding

阿新 • Published: 2019-02-09

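A brief record of how I used ffmpeg for audio encoding while building a voice chat feature.

There are two options:

1. Use ffmpeg to encode PCM into the desired format and write the result to memory, then implement the streaming protocol yourself or send the data through your own RPC.

2. Use ffmpeg's avio_open to push the stream directly; ffmpeg already implements the HTTP, RTMP, and RTP protocols.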

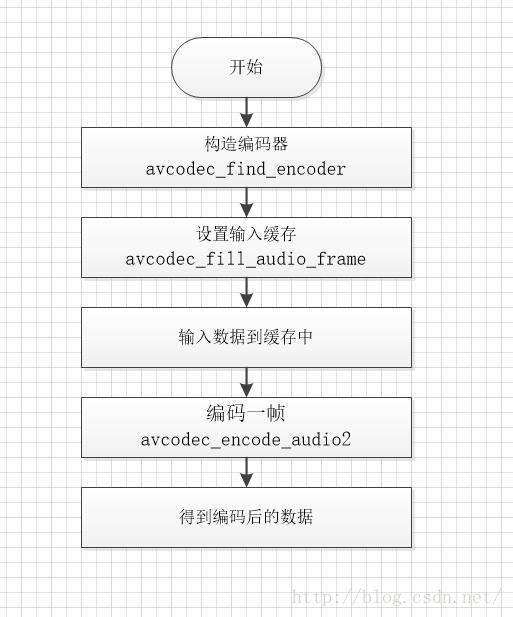

A brief look at the flow for encoding to memory:

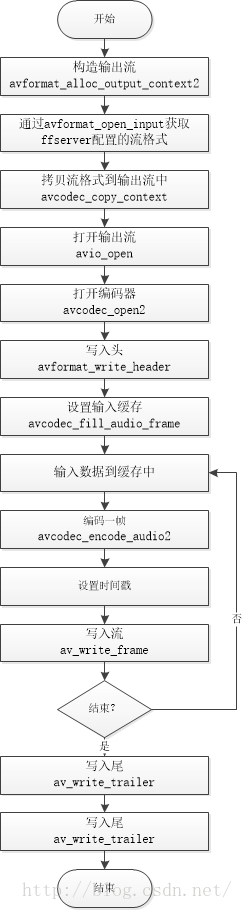
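In the code that follows, `inputData` copies one encoder frame of PCM into the frame buffer, so the capture side usually has to re-chunk incoming audio into blocks of exactly `getBufSize()` bytes. A minimal sketch of such a chunker, using only the standard library; `PcmChunker` and its methods are hypothetical names and are not part of the original code:

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

// Hypothetical helper: accumulates PCM bytes of arbitrary size and hands out
// fixed-size blocks, e.g. blocks of IEncode::getBufSize() bytes.
class PcmChunker {
public:
    using FrameCallback = std::function<void(const uint8_t*, size_t)>;

    explicit PcmChunker(size_t frameBytes) : frameBytes_(frameBytes) {}

    // Appends captured bytes and invokes onFrame once per complete block.
    // Returns the number of blocks delivered by this call.
    size_t push(const uint8_t* data, size_t size, const FrameCallback& onFrame) {
        if (frameBytes_ == 0 || data == nullptr) return 0;
        pending_.insert(pending_.end(), data, data + size);
        size_t delivered = 0;
        size_t offset = 0;
        while (pending_.size() - offset >= frameBytes_) {
            onFrame(pending_.data() + offset, frameBytes_);
            offset += frameBytes_;
            ++delivered;
        }
        // Keep only the incomplete tail for the next call.
        pending_.erase(pending_.begin(),
                       pending_.begin() + static_cast<std::ptrdiff_t>(offset));
        return delivered;
    }

    // Bytes waiting for the next complete block.
    size_t pendingBytes() const { return pending_.size(); }

private:
    size_t frameBytes_;
    std::vector<uint8_t> pending_;
};
```

The callback passed to `push` would typically forward each block to `inputData(block, size)`; whatever remains in `pendingBytes()` at the end of the session is shorter than one frame and has to be padded or dropped by the caller.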

The flow for encoding and pushing a stream:

Now straight to the code.

#ifndef _IEncode_H
#define _IEncode_H

#include "util.h"

extern "C"
{
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libavutil/opt.h>
};

struct AudioParams
{
    int sampleRate;
    int channels;
    enum AVSampleFormat fmt;
    long long bitRate;
    AVCodecID id;
    int64_t channel_layout;

    AudioParams() :
        sampleRate(-1), channels(-1), channel_layout(-1),
        fmt(AVSampleFormat::AV_SAMPLE_FMT_NONE),
        bitRate(-1), id(AVCodecID::AV_CODEC_ID_NONE)
    {
    }

    AudioParams(const AudioParams& audioParams)
    {
        *this = audioParams;
    }

    AudioParams& operator=(const AudioParams& audioParams)
    {
        this->sampleRate = audioParams.sampleRate;
        this->channels = audioParams.channels;
        this->fmt = audioParams.fmt;
        this->bitRate = audioParams.bitRate;
        this->id = audioParams.id;
        this->channel_layout = audioParams.channel_layout;
        return *this;
    }
};

class IEncode
{
public:
    explicit IEncode();
    virtual int init() = 0;
    int inputData(uint8_t* data, size_t size);
    int getNextOutPacketData(bool &hasData);
    int getBufSize() const;
    AudioParams getAudioParams()const;
    virtual ~IEncode();

protected:
    AudioParams audioParams;
    AVCodec* codec;
    AVCodecContext* ctx;
    AVFrame *frame;
    void *samples;
    int bufferSize;
    AVPacket avpkt;
    AVStream *out_stream;

    void setFrameParam(AVFrame *frame, AVCodecContext* ctx);
    virtual void release() = 0;
    virtual int writeFrame(AVPacket avpkt) = 0;
};

#endif // !_IEncode_H

#ifndef _IEncode_MEM_H
#define _IEncode_MEM_H

#include "IEncode.h"

class IEncode_mem : public IEncode
{
public:
    typedef void(*writeOutDataFunc)(void *opaque, uint8_t *buf, int buf_size);

public:
    explicit IEncode_mem();
    int init() override;
    void setAudioParams(const AudioParams& audioParams);
    void setWritePacketFuncCB(writeOutDataFunc func);
    void setOutputObj(void *outObj);
    ~IEncode_mem();

private:
    void* outObj;
    writeOutDataFunc writeData;

    int checkParams()const;
    int checkCodec(AVCodecID id)const;
    int checkSampleFmt(AVCodec *codec, enum AVSampleFormat sample_fmt)const;
    int writeFrame(AVPacket avpkt) override;
    void release() override;
};

#endif // !_IEncode_MEM_H
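The `writeOutDataFunc` callback together with `setOutputObj` follows the common C-style "function pointer plus opaque pointer" pattern. The sketch below shows one way a caller might collect encoded packets in memory. `PacketSink` and `collectPacket` are hypothetical names, and the typedef is repeated only so the example compiles on its own:

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Same signature as IEncode_mem::writeOutDataFunc, repeated here so the
// example is self-contained.
typedef void (*writeOutDataFunc)(void* opaque, uint8_t* buf, int buf_size);

// Hypothetical receiver that stores every encoded packet as a byte vector.
struct PacketSink {
    std::vector<std::vector<uint8_t>> packets;
    size_t totalBytes = 0;
};

// Callback intended for setWritePacketFuncCB(); `opaque` is whatever was
// passed to setOutputObj(), assumed here to be a PacketSink*.
void collectPacket(void* opaque, uint8_t* buf, int buf_size) {
    if (!opaque || !buf || buf_size <= 0) return;
    PacketSink* sink = static_cast<PacketSink*>(opaque);
    // Copy the bytes: the encoder releases its packet right after the callback.
    sink->packets.emplace_back(buf, buf + buf_size);
    sink->totalBytes += static_cast<size_t>(buf_size);
}
```

With the class above, registration would presumably be `encoder.setOutputObj(&sink);` followed by `encoder.setWritePacketFuncCB(collectPacket);` before calling `init()`. Copying inside the callback matters because `inputData` unrefs the packet as soon as `writeFrame` returns.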

#ifndef _IEncode_URL_H
#define _IEncode_URL_H

#include "IEncode.h"
#include <string>

class IEncode_url : public IEncode
{
public:
    explicit IEncode_url();
    int init() override;
    void setUrl(const std::string& url);
    ~IEncode_url();

private:
    AVOutputFormat *ofmt;
    AVFormatContext *ofmtCtx;
    AVFormatContext *ifmtCtx;
    std::string url;
    int frameIndex;
    AVRational time_base1;

private:
    void timeOffset(AVPacket& pkt);
    void close();
    void release() override;
    int writeFrame(AVPacket avpkt) override;
};

#endif // !_IEncode_URL_H

#include "IEncode.h"

#include "error.h"

#include "log.h"

#include <cstring> // memcpy

IEncode::IEncode() :

codec(nullptr), ctx(nullptr),

samples(nullptr), bufferSize(0),

frame(nullptr), out_stream(nullptr)

{

}

IEncode::~IEncode()

{

codec = nullptr;

ctx = nullptr;

samples = nullptr;

frame = nullptr;

bufferSize = 0;

}

void IEncode::setFrameParam(AVFrame *frame, AVCodecContext* ctx)

{

frame->nb_samples = ctx->frame_size;

frame->format = ctx->sample_fmt;

frame->channel_layout = ctx->channel_layout;

}

int IEncode::getBufSize() const

{

return bufferSize;

}

int IEncode::inputData(uint8_t* data, size_t size)

{

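// Reject missing input, an uninitialized encoder, or more data than one frame buffer can hold.

if (data == nullptr || samples == nullptr || bufferSize <= 0 || size > static_cast<size_t>(bufferSize))

return Error::ERROR_INPUT_NULL_DATA;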

int got_output = 0;

av_init_packet(&avpkt);

avpkt.data = NULL;

avpkt.size = 0;

memcpy(samples, data, size);

int ret = avcodec_encode_audio2(ctx, &avpkt, frame, &got_output);

if (ret < 0)

{

release();

return Error::ERROR_ENCODEING_FRAME;

}

if (got_output)

{

Error error = Error::ERROR_NONE;

if (writeFrame(avpkt) < 0)

{

error = Error::ERROR_WRITE_FRAME;

}

av_packet_unref(&avpkt);

return error;

}

return Error::ERROR_NONE;

}

int IEncode::getNextOutPacketData(bool &hasData)

{

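hasData = false;

int got_frame = 0;

// Start from an empty packet so the encoder allocates the output buffer itself.

av_init_packet(&avpkt);

avpkt.data = NULL;

avpkt.size = 0;

// Passing a null frame asks the encoder to flush packets it is still holding.

int ret = avcodec_encode_audio2(ctx, &avpkt, nullptr, &got_frame);

if (ret < 0)

{

release();

hasData = false;

return Error::ERROR_ENCODEING_FRAME;

}

if (got_frame)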

{

Error error = Error::ERROR_NONE;

hasData = true;

if (writeFrame(avpkt) < 0)

{

error = Error::ERROR_WRITE_FRAME;

}

av_packet_unref(&avpkt);

return error;

}

return Error::ERROR_NONE;

}
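After the last `inputData` call, packets still buffered inside the encoder have to be drained by calling `getNextOutPacketData` repeatedly until `hasData` comes back false. The sketch below shows that loop against a hypothetical stand-in encoder: `FakeDelayedEncoder` is not ffmpeg and only mimics an encoder that holds back a few packets, and the loop assumes that the success code (`Error::ERROR_NONE` in the original, whose value is not shown) is 0:

```cpp
#include <cstddef>

// Hypothetical stand-in for an encoder that buffers `delay` packets internally.
// It mirrors the shape of IEncode::getNextOutPacketData(bool&).
class FakeDelayedEncoder {
public:
    explicit FakeDelayedEncoder(size_t delay) : buffered_(delay) {}

    // Returns 0 on success; sets hasData when a buffered packet was emitted.
    int getNextOutPacketData(bool& hasData) {
        hasData = buffered_ > 0;
        if (hasData) --buffered_;
        return 0;
    }

private:
    size_t buffered_;
};

// Drains the encoder until it reports no more data or an error.
// Returns the number of packets flushed, or -1 on error.
template <typename Encoder>
int drainEncoder(Encoder& enc) {
    int flushed = 0;
    bool hasData = true;
    while (hasData) {
        if (enc.getNextOutPacketData(hasData) != 0) return -1;  // assumed: 0 == success
        if (hasData) ++flushed;
    }
    return flushed;
}
```

Because `drainEncoder` is a template, the same loop could be pointed at an `IEncode` subclass, provided the assumption about the success code holds.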

AudioParams IEncode::getAudioParams()const

{

return audioParams;

}

#include "IEncode_mem.h"

#include "error.h"

#include "log.h"

IEncode_mem::IEncode_mem():

outObj(nullptr), writeData(nullptr)

{

}

IEncode_mem::~IEncode_mem()

{

release();

}

int IEncode_mem::init()

{

Error error = (Error)checkParams();

if (Error::ERROR_NONE != error)

return error;

codec = avcodec_find_encoder(audioParams.id);

if (!codec)

return Error::ERROR_NO_CODEC_SUPPORT;

ctx = avcodec_alloc_context3(codec);

if (!ctx)

return Error::ERROR_AVCODEC_CONTEXT;

ctx->bit_rate = audioParams.bitRate;

ctx->sample_fmt = audioParams.fmt;

ctx->sample_rate = audioParams.sampleRate;

ctx->channels = audioParams.channels;

ctx->channel_layout = audioParams.channel_layout;

if (avcodec_open2(ctx, codec, NULL) < 0)

return Error::ERROR_OPEN_CODEC;

frame = av_frame_alloc();

if (!frame)

{

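// avcodec_free_context() closes the codec, frees the context, and resets ctx to nullptr,

// so the release() call in the destructor cannot free it a second time.

avcodec_free_context(&ctx);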

return Error::ERROR_FRAME_ALLOCATE;

}

setFrameParam(frame, ctx);

bufferSize = av_samples_get_buffer_size(

NULL,

ctx->channels,

ctx->frame_size,

ctx->sample_fmt,

0

);

if (bufferSize < 0)

{

av_frame_free(&frame);

avcodec_free_context(&ctx); // also resets ctx to nullptr, avoiding a double free in release()

return Error::ERROR_CANT_GET_BUFF_SIZE;

}

samples = av_malloc(bufferSize);

if (!samples)

{

av_frame_free(&frame);

avcodec_free_context(&ctx); // also resets ctx to nullptr, avoiding a double free in release()

return Error::ERROR_ALLOCATE_SAMPLES_BUF;

}

int ret = avcodec_fill_audio_frame(frame, ctx->channels, ctx->sample_fmt,

(const uint8_t*)samples, bufferSize, 0);

if (ret < 0)

{

release();

return Error::ERROR_SETUP_FRAME;

}

return Error::ERROR_NONE;

}

int IEncode_mem::writeFrame(AVPacket avpkt)

{

if (writeData)

writeData(outObj, avpkt.data, avpkt.size);

return 0;

}

void IEncode_mem::setWritePacketFuncCB(writeOutDataFunc func)

{

this->writeData = func;

}

void IEncode_mem::setOutputObj(void *outObj)

{

this->outObj = outObj;

}

void IEncode_mem::setAudioParams(const AudioParams& audioParams)

{

this->audioParams = audioParams;

}

int IEncode_mem::checkParams() const

{

Error error = (Error)checkCodec(audioParams.id);

if (Error::ERROR_NONE != error)

return error;

error = (Error)checkSampleFmt(avcodec_find_encoder(audioParams.id), audioParams.fmt);

if (Error::ERROR_NONE != error)

return error;

return error;

}

int IEncode_mem::checkCodec(AVCodecID id)const

{

AVCodec *codec = avcodec_find_encoder(id);

if (!codec)

{

return Error::ERROR_NO_CODEC_SUPPORT;

}

return Error::ERROR_NONE;

}

int IEncode_mem::checkSampleFmt(AVCodec *codec, enum AVSampleFormat sample_fmt)const

{

const enum AVSampleFormat *p = codec->sample_fmts;

while (*p != AV_SAMPLE_FMT_NONE)

{

if (*p == sample_fmt)

return Error::ERROR_NONE;

p++;

}

return Error::ERROR_SAMPLEFMT;

}

void IEncode_mem::release()

{

if (samples)

av_freep(&samples);

if (frame)

av_frame_free(&frame);

if (ctx)

{

// Closes the codec, frees the context, and resets ctx to nullptr so release() is safe to call twice.

avcodec_free_context(&ctx);

}

}

#include "IEncode_url.h"

#include "error.h"

#include "log.h"

IEncode_url::IEncode_url() :

url(""),frameIndex(0),

ofmt(nullptr), ofmtCtx(nullptr), ifmtCtx(nullptr)

{

}

IEncode_url::~IEncode_url()

{

close();

frameIndex = 0;

}

int IEncode_url::init()

{

int ret = 0;

ret = avformat_open_input(&ifmtCtx, url.c_str(), nullptr, nullptr);

if (ret < 0)

{

ifmtCtx = nullptr;

return Error::ERROR_FORMAT_OPEN_INPUT;

}

if (0 > avformat_alloc_output_context2(&ofmtCtx, nullptr, nullptr, url.c_str()))

{

release();

return Error::ERROR_ENCODE_URL;

}

ofmt = ofmtCtx->oformat;

AVStream *in_stream = nullptr;

for (unsigned int i = 0; i < ifmtCtx->nb_streams; i++)

{

in_stream = ifmtCtx->streams[i];

audioParams.sampleRate = in_stream->codecpar->sample_rate;

audioParams.bitRate = in_stream->codecpar->bit_rate;

audioParams.fmt = AV_SAMPLE_FMT_FLTP;

audioParams.id = in_stream->codecpar->codec_id;

audioParams.channels = in_stream->codecpar->channels;

time_base1 = in_stream->time_base;

out_stream = avformat_new_stream(ofmtCtx, in_stream->codec->codec);

if (!out_stream)

{

release();

return Error::ERROR_ALLOCATE_STREAM;

}

ret = avcodec_copy_context(out_stream->codec, in_stream->codec);

if (ret < 0)

{

release();

return Error::ERROR_COPY_CONTEXT;

}

out_stream->codec->codec_tag = 0;

if (ofmtCtx->oformat->flags & AVFMT_GLOBALHEADER)

out_stream->codec->flags |= CODEC_FLAG_GLOBAL_HEADER;

}

ctx = out_stream->codec;

avformat_close_input(&ifmtCtx);

if (!(ofmt->flags & AVFMT_NOFILE))

{

ret = avio_open(&ofmtCtx->pb, url.c_str(), AVIO_FLAG_WRITE);

if (ret < 0)

{

release();

return Error::ERROR_AVIO_OPEN;

}

}

avformat_write_header(ofmtCtx, nullptr);

ctx->sample_fmt = AV_SAMPLE_FMT_FLTP;

codec = avcodec_find_encoder((AVCodecID)audioParams.id);

av_opt_set(ctx->priv_data, "tune", "zerolatency", 0);

if (!codec)

{

release();

return Error::ERROR_NO_CODEC_SUPPORT;

}

if (avcodec_open2(ctx, codec, NULL) < 0)

{

release();

return Error::ERROR_OPEN_CODEC;

}

frame = av_frame_alloc();

if (!frame)

{

release();

return Error::ERROR_FRAME_ALLOCATE;

}

setFrameParam(frame, ctx);

bufferSize = av_samples_get_buffer_size(

NULL,

ctx->channels,

ctx->frame_size,

ctx->sample_fmt,

0

);

if (bufferSize < 0)

{

release();

return Error::ERROR_CANT_GET_BUFF_SIZE;

}

samples = av_malloc(bufferSize);

if (!samples)

{

release();

return Error::ERROR_ALLOCATE_SAMPLES_BUF;

}

ret = avcodec_fill_audio_frame(frame, ctx->channels, ctx->sample_fmt,

(const uint8_t*)samples, bufferSize, 0);

if (ret < 0)

{

release();

return Error::ERROR_SETUP_FRAME;

}

return Error::ERROR_NONE;

}

int IEncode_url::writeFrame(AVPacket avpkt)

{

timeOffset(avpkt);

return av_write_frame(ofmtCtx, &avpkt);

}

void IEncode_url::setUrl(const std::string& url)

{

this->url = url;

}

void IEncode_url::timeOffset(AVPacket& avpkt)

{

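// Packet timestamps handed to av_write_frame() must be expressed in the output stream's time base.

avpkt.pts = av_rescale_q(static_cast<int64_t>(frameIndex++) * frame->nb_samples, AVRational{ 1, ctx->sample_rate }, out_stream->time_base);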

avpkt.dts = avpkt.pts;

avpkt.pos = -1;

}
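`timeOffset` derives each packet's timestamp from the running sample count: packet n starts at n × nb_samples samples, and that count is converted from units of 1/sample_rate into the target time base. The sketch below redoes this arithmetic without ffmpeg. `Rational`, `rescale`, and `packetPts` are simplified, hypothetical stand-ins (the real `av_rescale_q` also guards against overflow), and the 1/1000 time base used in the note afterwards is only an example value:

```cpp
#include <cstdint>

// Simplified stand-in for AVRational.
struct Rational {
    int num;
    int den;
};

// Converts `value` from time base `from` to time base `to`, rounding to nearest.
// Simplified stand-in for av_rescale_q: assumes non-negative values and positive
// time bases, and does not protect against 64-bit overflow.
int64_t rescale(int64_t value, Rational from, Rational to) {
    const int64_t num = static_cast<int64_t>(from.num) * to.den;
    const int64_t den = static_cast<int64_t>(from.den) * to.num;
    return (value * num + den / 2) / den;
}

// PTS of the packet with the given index, for frames of nbSamples samples each.
int64_t packetPts(int64_t frameIndex, int nbSamples, int sampleRate, Rational timeBase) {
    return rescale(frameIndex * nbSamples, Rational{1, sampleRate}, timeBase);
}
```

For example, with 1024-sample frames at 44100 Hz and a millisecond time base, packet 1 starts at 1024 / 44100 s ≈ 23.2 ms, so `packetPts(1, 1024, 44100, Rational{1, 1000})` yields 23.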

void IEncode_url::close()

{

if (ofmtCtx)

{

av_write_trailer(ofmtCtx);

}

release();

}

void IEncode_url::release()

{

if (ifmtCtx)

{

avformat_close_input(&ifmtCtx);

}

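if (ofmtCtx)

{

// An output context is released with avformat_free_context(), not avformat_close_input().

// Close the I/O handle opened by avio_open() first.

if (ofmtCtx->pb && !(ofmtCtx->oformat->flags & AVFMT_NOFILE))

avio_closep(&ofmtCtx->pb);

avformat_free_context(ofmtCtx);

ofmtCtx = nullptr;

// The stream and its codec context were owned by ofmtCtx and are gone now.

out_stream = nullptr;

ctx = nullptr;

}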

if (samples)

{

av_freep(&samples);

}

if (frame)

{

av_frame_free(&frame);

}

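}

Here I added a setting intended to remove encoding delay. `tune` is a private option of encoders such as libx264, so the call only takes effect when the selected encoder defines that option: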

av_opt_set(ctx->priv_data, "tune", "zerolatency", 0);