DAGScheduler原理剖析與原始碼分析

阿新 • • 發佈:2019-02-12

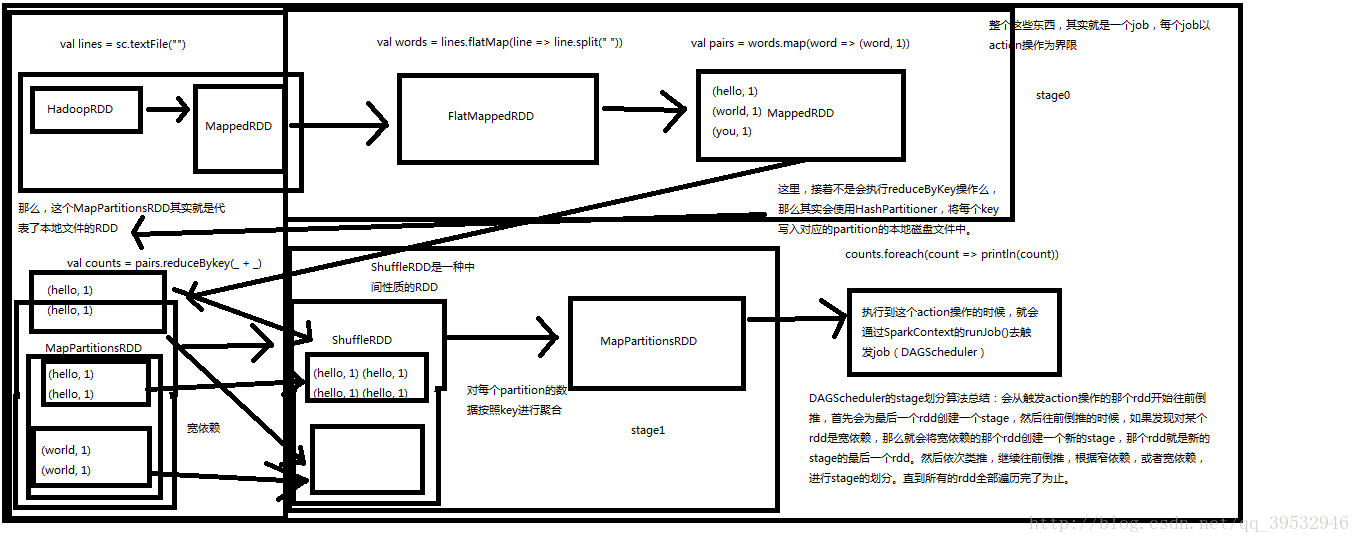

stage劃分演算法:必須對stage劃分演算法很清晰,知道自己的Application被劃分了幾個job,每個job被劃分了幾個stage,每個stage有哪些程式碼,只能在線上報錯的資訊上更快的發現問題或者效能調優。

//DAGscheduler的job排程的核心入口

private[scheduler] def handleJobSubmitted(jobId: Int,

finalRDD: RDD[_],

func: (TaskContext, Iterator[_]) => _,

partitions: Array[Int],

callSite: CallSite,

listener: JobListener,

properties: Properties) {

//使用觸發job的最後一個RDD建立finalStage //獲取某個Stage的父Stage

//如果發現最後一個RDD的所有依賴都是窄依賴,就不會建立新的RDD。

//但是如果這個RDD寬依賴了某個RDD,那麼將會建立一個新的stage。

//並且將新的stage立即返回。

private def getMissingParentStages(stage: Stage): List[Stage] = {

val missing = new HashSet[Stage]

val visited = new HashSet[RDD[_]]

// We are manually maintaining a stack here to prevent StackOverflowError

// 定義了一個棧

val waitingForVisit = new Stack[RDD[_]]

def visit(rdd: RDD[_]) {

if (!visited(rdd)) {

visited += rdd

val rddHasUncachedPartitions = getCacheLocs(rdd).contains(Nil)

if (rddHasUncachedPartitions) {

for (dep <- rdd.dependencies) {

dep match {

//如果是寬依賴的話。

//其實對於每一個有shuffle操作的運算元,底層都對應了三個RDD(MapPartitionsRDD,shuffleRDD,MapPartitionsRDD)

//shuffleRdd的map端的會劃分到新的RDD

case shufDep: ShuffleDependency[_, _, _] =>

//使用寬依賴的RDD建立一個stage,並且會將isshufflemap設定為true

//預設最後一個stage不是shufflemap Stage

//但是fianalstage之前的stage都是shuffleMap stage

val mapStage = getShuffleMapStage(shufDep, stage.firstJobId)

if (!mapStage.isAvailable) {

missing += mapStage

}

//如果是窄依賴,就將RDD放入棧中

case narrowDep: NarrowDependency[_] =>

waitingForVisit.push(narrowDep.rdd)

}

}

}

}

//首先,向棧中推入了stage的最後一個RDD

waitingForVisit.push(stage.rdd)

while (waitingForVisit.nonEmpty) {

//對stage的最後一個RDD,呼叫Visit()方法

visit(waitingForVisit.pop())

}

missing.toList

}

//提交stage,為stage建立一批task,task數量與partition數量相同

private def submitMissingTasks(stage: Stage, jobId: Int) {

logDebug("submitMissingTasks(" + stage + ")")

// 獲取partition數量

stage.pendingPartitions.clear()

val partitionsToCompute: Seq[Int] = stage.findMissingPartitions()

initialized.

if (stage.internalAccumulators.isEmpty || stage.numPartitions == partitionsToCompute.size) {

stage.resetInternalAccumulators()

}

val properties = jobIdToActiveJob(jobId).properties

//將stage加入runningStages佇列

runningStages += stage

stage match {

case s: ShuffleMapStage =>

outputCommitCoordinator.stageStart(stage = s.id, maxPartitionId = s.numPartitions - 1)

case s: ResultStage =>

outputCommitCoordinator.stageStart(

stage = s.id, maxPartitionId = s.rdd.partitions.length - 1)

}

val taskIdToLocations: Map[Int, Seq[TaskLocation]] = try {

stage match {

case s: ShuffleMapStage =>

partitionsToCompute.map { id => (id, getPreferredLocs(stage.rdd, id))}.toMap

case s: ResultStage =>

val job = s.activeJob.get

partitionsToCompute.map { id =>

val p = s.partitions(id)

(id, getPreferredLocs(stage.rdd, p))

}.toMap

}

} catch {

case NonFatal(e) =>

stage.makeNewStageAttempt(partitionsToCompute.size)

listenerBus.post(SparkListenerStageSubmitted(stage.latestInfo, properties))

abortStage(stage, s"Task creation failed: $e\n${e.getStackTraceString}", Some(e))

runningStages -= stage

return

}

stage.makeNewStageAttempt(partitionsToCompute.size, taskIdToLocations.values.toSeq)

listenerBus.post(SparkListenerStageSubmitted(stage.latestInfo, properties))

var taskBinary: Broadcast[Array[Byte]] = null

try {

case stage: ShuffleMapStage =>

closureSerializer.serialize((stage.rdd, stage.shuffleDep): AnyRef).array()

case stage: ResultStage =>

closureSerializer.serialize((stage.rdd, stage.func): AnyRef).array()

}

taskBinary = sc.broadcast(taskBinaryBytes)

} catch {

case e: NotSerializableException =>

abortStage(stage, "Task not serializable: " + e.toString, Some(e))

runningStages -= stage

return

case NonFatal(e) =>

abortStage(stage, s"Task serialization failed: $e\n${e.getStackTraceString}", Some(e))

runningStages -= stage

return

}

//為stage建立指定數量的task

val tasks: Seq[Task[_]] = try {

stage match {

//除了final Stage不是shuffle Stage。

case stage: ShuffleMapStage =>

partitionsToCompute.map { id =>

//給每一個partition建立一個task

//給每個task最佳位置

val locs = taskIdToLocations(id)

val part = stage.rdd.partitions(id)

//給shuffle Stage建立ShuffleStageTask

new ShuffleMapTask(stage.id, stage.latestInfo.attemptId,

taskBinary, part, locs, stage.internalAccumulators)

}

//不是S戶發放了 Stage就是finalStage。那麼建立ResultStage

case stage: ResultStage =>

val job = stage.activeJob.get

partitionsToCompute.map { id =>

//給每一個partition建立一個task

//給每個task最佳位置(就是從stage的最後位置開始找,哪個RDD的Partition被Cache了,或被checkPoint了,那麼task的最佳位置就是RDD被Cache或者被CheckPoint的位置)

val p: Int = stage.partitions(id)

val part = stage.rdd.partitions(p)

val locs = taskIdToLocations(id)

new ResultTask(stage.id, stage.latestInfo.attemptId,

taskBinary, part, locs, id, stage.internalAccumulators)

}

}

} catch {

case NonFatal(e) =>

abortStage(stage, s"Task creation failed: $e\n${e.getStackTraceString}", Some(e))

runningStages -= stage

return

}

if (tasks.size > 0) {

logInfo("Submitting " + tasks.size + " missing tasks from " + stage + " (" + stage.rdd + ")")

stage.pendingPartitions ++= tasks.map(_.partitionId)

logDebug("New pending partitions: " + stage.pendingPartitions)

taskScheduler.submitTasks(new TaskSet(

tasks.toArray, stage.id, stage.latestInfo.attemptId, jobId, properties))

stage.latestInfo.submissionTime = Some(clock.getTimeMillis())

} else {

markStageAsFinished(stage, None)

val debugString = stage match {

case stage: ShuffleMapStage =>

s"Stage ${stage} is actually done; " +

s"(available: ${stage.isAvailable}," +

s"available outputs: ${stage.numAvailableOutputs}," +

s"partitions: ${stage.numPartitions})"

case stage : ResultStage =>

s"Stage ${stage} is actually done; (partitions: ${stage.numPartitions})"

}

logDebug(debugString)

}

}stage劃分演算法總結:

- 從finalstage倒推

- 通過寬依賴進行stage劃分

- 通過遞迴,優先提交父stage