The Road to Deep Learning: Starting from Logistic Regression, Writing a Classifier by Hand

阿新 • Published: 2019-02-15

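I need to teach my colleagues about neural networks and TensorFlow, which means covering some prerequisite knowledge first.

So I prepared a set of slides and wrote the classifier below entirely by hand, without any machine learning tools or libraries (only NumPy and matplotlib are imported). I find it quite helpful for understanding how machine learning is actually implemented. (It is only meant to demonstrate the principle; the implementation is rough and makes no claim to performance.)

I am posting it here in the hope that it helps others.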

License: The MIT License

Feel free to take it and use it if you need it.

import numpy as np

import matplotlib

import matplotlib.pyplot as plt

import math

import sys

dataset_raw = [

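[0.051267

Once started, the script begins training automatically and prints the loss to the console. When the loss is acceptable, press Ctrl+C to stop training.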
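The "train until Ctrl+C" workflow can be sketched roughly as follows. This is not the original listing, only a minimal illustration that assumes plain batch gradient descent on the cross-entropy loss. The names `sigmoid`, `loss`, `train`, `lr`, `max_steps` and `report_every` are chosen here for illustration.

```python
import numpy as np


def sigmoid(z):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def loss(w, X, y):
    """Mean cross-entropy loss of logistic regression."""
    # Clip the probabilities so log() never receives exactly 0.
    p = np.clip(sigmoid(X @ w), 1e-12, 1 - 1e-12)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def train(X, y, lr=0.1, max_steps=None, report_every=1000):
    """Batch gradient descent; with max_steps=None it runs until Ctrl+C."""
    w = np.zeros(X.shape[1])
    step = 0
    try:
        while max_steps is None or step < max_steps:
            # Gradient of the mean cross-entropy loss with respect to w.
            grad = X.T @ (sigmoid(X @ w) - y) / len(y)
            w -= lr * grad
            step += 1
            if step % report_every == 0:
                print(f"step {step}: loss = {loss(w, X, y):.6f}")
    except KeyboardInterrupt:
        # Ctrl+C raises KeyboardInterrupt; keep the weights learned so far.
        print(f"stopped at step {step}, loss = {loss(w, X, y):.6f}")
    return w
```

Calling `train(X, y)` with a feature matrix `X` (including a bias column of ones) and 0/1 labels `y` loops until interrupted. Passing `max_steps` gives a bounded run, which is handy for quick experiments.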

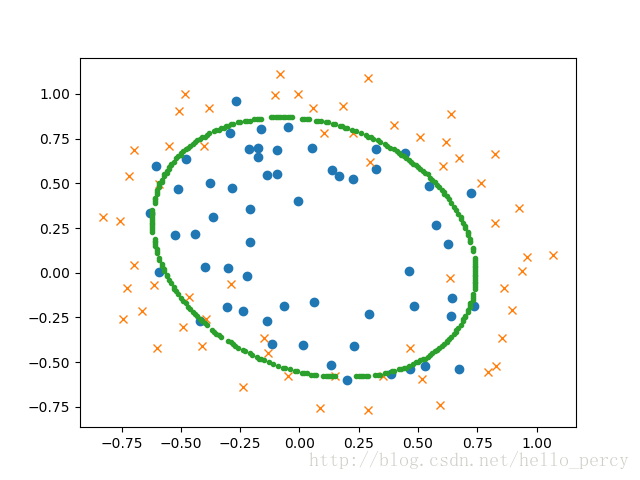

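After a short wait, the program produces the plot output as follows: dots are class 1, x marks are class 0, and the green circle is the decision boundary.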
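One possible way to draw such a boundary is to evaluate the linear score on a grid and trace its zero contour. The sketch below assumes a feature map with bias, linear and squared terms. That map is a guess made for illustration, since the article's actual features are not shown. `features`, `decision_grid`, `plot_boundary` and the output path are likewise hypothetical names.

```python
import numpy as np


def features(x1, x2):
    """Hypothetical feature map: bias, linear and squared terms."""
    return np.stack([np.ones_like(x1), x1, x2, x1 ** 2, x2 ** 2], axis=-1)


def decision_grid(w, lo=-1.5, hi=1.5, n=200):
    """Evaluate the score features(x1, x2) @ w on an n-by-n grid."""
    xs = np.linspace(lo, hi, n)
    g1, g2 = np.meshgrid(xs, xs)
    z = features(g1, g2) @ w  # z > 0 means the model predicts class 1
    return g1, g2, z


def plot_boundary(w, X, y, path="boundary.png"):
    """Scatter both classes and draw the z = 0 contour in green."""
    # Imported here so the numeric helpers above work without matplotlib.
    import matplotlib
    matplotlib.use("Agg")  # render to a file; no display needed
    import matplotlib.pyplot as plt

    g1, g2, z = decision_grid(w)
    fig, ax = plt.subplots()
    ax.scatter(X[y == 1, 0], X[y == 1, 1], marker="o", label="1")
    ax.scatter(X[y == 0, 0], X[y == 0, 1], marker="x", label="0")
    ax.contour(g1, g2, z, levels=[0.0], colors="green")
    ax.legend()
    fig.savefig(path)
    plt.close(fig)
```

With weights such as `np.array([1.0, 0.0, 0.0, -1.0, -1.0])` the score is `1 - x1**2 - x2**2`, so the zero contour is the unit circle. Here `X` holds the two raw coordinates per sample and `y` the 0/1 labels.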