Caffe: Data_Layer Code Analysis

阿新 • Published: 2019-02-17

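I. Caffe provides different data input layers for different input methods. The first one I worked with feeds data from an LMDB database, which is handled by the DataLayer. Another commonly used one is the ImageDataLayer, which takes image file paths directly, so the images do not have to be converted into a database first.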

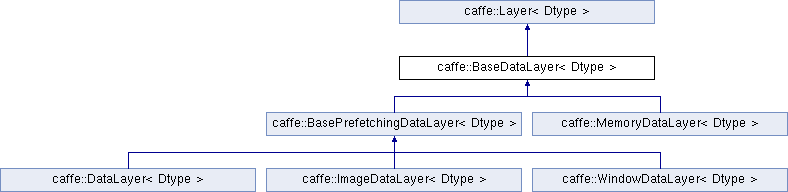

The figure above shows the inheritance hierarchy of the input layers: DataLayer inherits from BasePrefetchingDataLayer, which inherits from BaseDataLayer, which in turn inherits from Layer.
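To make the division of labour in this chain concrete, here is a minimal sketch of the template-method pattern it follows. The classes below are hypothetical, heavily simplified stand-ins that borrow the Caffe names: the real classes are templates on `Dtype`, operate on `Blob`s, and `BasePrefetchingDataLayer` calls `load_batch()` from its internal prefetch thread, whereas here `Prefetch()` calls it directly. The point is only that the base classes fix the control flow (`LayerSetUp()` decides whether labels are produced from the number of tops, then calls the subclass's `DataLayerSetUp()`), while a concrete layer such as `DataLayer` just fills in the hooks.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for Caffe's Batch: flat data and label buffers.
struct Batch {
  std::vector<float> data;
  std::vector<float> label;
};

class Layer {
 public:
  virtual ~Layer() = default;
  virtual std::string type() const = 0;
};

class BaseDataLayer : public Layer {
 public:
  // Roughly what BaseDataLayer::LayerSetUp does: one top means "data only",
  // more than one means "data + label"; then the subclass hook runs.
  void LayerSetUp(int num_top) {
    output_labels_ = (num_top != 1);
    DataLayerSetUp();
  }

 protected:
  virtual void DataLayerSetUp() = 0;
  bool output_labels_ = false;
};

class BasePrefetchingDataLayer : public BaseDataLayer {
 public:
  // In Caffe this happens on the prefetch thread; here it is synchronous.
  Batch Prefetch() {
    Batch batch;
    load_batch(&batch);
    return batch;
  }

 protected:
  virtual void load_batch(Batch* batch) = 0;
};

// Hypothetical concrete layer: only implements the two hooks.
class ToyDataLayer : public BasePrefetchingDataLayer {
 public:
  std::string type() const override { return "Data"; }

 protected:
  // The real DataLayerSetUp reads batch_size from data_param().
  void DataLayerSetUp() override { batch_size_ = 4; }

  void load_batch(Batch* batch) override {
    assert(batch_size_ > 0 && "LayerSetUp() must run first");
    for (int i = 0; i < batch_size_; ++i) {
      batch->data.push_back(static_cast<float>(i));
      if (output_labels_) batch->label.push_back(static_cast<float>(i % 2));
    }
  }

 private:
  int batch_size_ = 0;
};
```

Usage: call `LayerSetUp(2)` for a data + label layer, then `Prefetch()` to obtain a filled batch.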

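DataLayer.hpp: this layer really does only one thing. After reshaping its outputs, it reads data from the LMDB database and passes the pointer to the data it read to top; if there are labels, it outputs them as well.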
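The "reshape, then read" step mostly comes down to shape bookkeeping. As a sketch under simplifying assumptions (plain `std::vector<float>` instead of `Blob`, hypothetical helper names): `InferBlobShape()` yields a single-item shape `{1, C, H, W}`, `DataLayerSetUp()` overwrites the first axis with `batch_size`, and `load_batch()` writes item `item_id` starting at `batch->data_.offset(item_id)`, which for an NCHW blob is `item_id * C * H * W`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper mirroring Blob::offset(n, c, h, w) for a 4-D NCHW blob.
int NchwOffset(const std::vector<int>& shape, int n, int c = 0, int h = 0, int w = 0) {
  assert(shape.size() == 4);
  return ((n * shape[1] + c) * shape[2] + h) * shape[3] + w;
}

// Mimics DataLayerSetUp: the per-datum shape {1, C, H, W} becomes the batch
// shape by overwriting the first axis with batch_size.
std::vector<int> BatchShape(std::vector<int> datum_shape, int batch_size) {
  assert(datum_shape.size() == 4 && batch_size > 0);
  datum_shape[0] = batch_size;
  return datum_shape;
}

// Copies one already-transformed item into its slot of the flat batch buffer,
// the slot that load_batch points transformed_data_ at before Transform().
void WriteItem(std::vector<float>& batch_buf, const std::vector<int>& batch_shape,
               int item_id, const std::vector<float>& item) {
  const std::size_t offset = static_cast<std::size_t>(NchwOffset(batch_shape, item_id));
  assert(offset + item.size() <= batch_buf.size());
  for (std::size_t i = 0; i < item.size(); ++i) batch_buf[offset + i] = item[i];
}
```

For a 3x2x2 datum and a batch of 4, each item occupies 12 floats, so item 2 starts at index 24.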

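ImageDataLayer.cpp: this layer also does only one thing. After reshaping, it reads images listed in a file list and passes the pointer to the data it read to top. It always outputs the labels too, since it requires exactly two top blobs.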
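Each line of that file list is split at its last space into an image path and an integer label, which is what the `find_last_of(' ')` call in `ImageDataLayer::DataLayerSetUp()` does. A stand-alone sketch of the parsing (the function name `ParseImageList` is hypothetical; skipping lines without any space is our addition, since the Caffe code does not check for that case):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Parses "<path> <label>" lines. Splitting on the LAST space means the
// path itself may contain spaces.
std::vector<std::pair<std::string, int>> ParseImageList(std::istream& in) {
  std::vector<std::pair<std::string, int>> lines;
  std::string line;
  while (std::getline(in, line)) {
    const std::size_t pos = line.find_last_of(' ');
    if (pos == std::string::npos) continue;  // our addition: skip malformed lines
    const int label = std::atoi(line.substr(pos + 1).c_str());
    lines.emplace_back(line.substr(0, pos), label);
  }
  return lines;
}
```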

If you only want to use these layers, see my other blog post http://blog.csdn.net/ming5432ming/article/details/78458916; there is no need to read further.

If you want to understand the implementation, read on.

II. Here is DataLayer.hpp

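The conclusion first: this layer does only one thing. After reshaping, it reads data from the LMDB database, passes the pointer to that data to top, and also outputs the labels if there are any.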

```cpp
#ifndef CAFFE_DATA_LAYER_HPP_
#define CAFFE_DATA_LAYER_HPP_

#include <vector>

#include "caffe/blob.hpp"
#include "caffe/data_transformer.hpp"
#include "caffe/internal_thread.hpp"
#include "caffe/layer.hpp"
#include "caffe/layers/base_data_layer.hpp"
#include "caffe/proto/caffe.pb.h"
#include "caffe/util/db.hpp"

namespace caffe {

template <typename Dtype>
class DataLayer : public BasePrefetchingDataLayer<Dtype> {
 public:
  explicit DataLayer(const LayerParameter& param);
  virtual ~DataLayer();
  virtual void DataLayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  // The layer type is "Data"; this matches the type field in the prototxt.
  virtual inline const char* type() const { return "Data"; }
  // A data layer has no bottom (input) blobs.
  virtual inline int ExactNumBottomBlobs() const { return 0; }
  // top[0] is the data; the optional top[1] is the label.
  virtual inline int MinTopBlobs() const { return 1; }
  virtual inline int MaxTopBlobs() const { return 2; }

 protected:
  void Next();
  bool Skip();
  virtual void load_batch(Batch<Dtype>* batch);

  shared_ptr<db::DB> db_;  // handle to the database (e.g. LMDB)
  shared_ptr<db::Cursor> cursor_;
  uint64_t offset_;
};

}  // namespace caffe

#endif  // CAFFE_DATA_LAYER_HPP_
```
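The `...Blobs()` overrides only declare constraints; Caffe's `Layer` base class checks them during setup (`Layer::CheckBlobCounts`), and its defaults return -1, meaning "no constraint". The sketch below re-implements that rule with a hypothetical `BlobCountSpec` struct to show why `DataLayer` accepts a data top alone or data plus label, while `ImageDataLayer` (exactly two tops) always needs a label top.

```cpp
#include <cassert>

// Hypothetical summary of a layer's declared blob counts; -1 = unconstrained.
struct BlobCountSpec {
  int exact_bottom = -1;
  int min_top = -1;
  int max_top = -1;
  int exact_top = -1;
};

// Returns true if the given numbers of bottom/top blobs satisfy the spec.
bool BlobCountsOk(const BlobCountSpec& s, int num_bottom, int num_top) {
  assert(num_bottom >= 0 && num_top >= 0);
  if (s.exact_bottom >= 0 && num_bottom != s.exact_bottom) return false;
  if (s.exact_top >= 0 && num_top != s.exact_top) return false;
  if (s.min_top >= 0 && num_top < s.min_top) return false;
  if (s.max_top >= 0 && num_top > s.max_top) return false;
  return true;
}
```

For example, `DataLayer` corresponds to `{0, 1, 2, -1}` and `ImageDataLayer` to `{0, -1, -1, 2}`.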

Here is DataLayer.cpp

```cpp
#ifdef USE_OPENCV
#include <opencv2/core/core.hpp>
#endif  // USE_OPENCV
#include <stdint.h>

#include <vector>

#include "caffe/data_transformer.hpp"
#include "caffe/layers/data_layer.hpp"
#include "caffe/util/benchmark.hpp"

namespace caffe {

// Constructor: open the database and create a cursor on it.
template <typename Dtype>
DataLayer<Dtype>::DataLayer(const LayerParameter& param)
  : BasePrefetchingDataLayer<Dtype>(param),
    offset_() {
  db_.reset(db::GetDB(param.data_param().backend()));
  db_->Open(param.data_param().source(), db::READ);
  cursor_.reset(db_->NewCursor());
}

template <typename Dtype>
DataLayer<Dtype>::~DataLayer() {
  this->StopInternalThread();
}

template <typename Dtype>
void DataLayer<Dtype>::DataLayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) {
  // Batch size from the layer's data_param in the prototxt.
  const int batch_size = this->layer_param_.data_param().batch_size();
  // Read a data point, and use it to initialize the top blob.
  // Datum is a message defined in caffe.proto; it carries channels, height
  // and width (plus the data and the label).
  Datum datum;
  datum.ParseFromString(cursor_->value());

  // Use data_transformer to infer the expected blob shape from datum.
  // This initializes top_shape from the datum just read.
  vector<int> top_shape = this->data_transformer_->InferBlobShape(datum);
  this->transformed_data_.Reshape(top_shape);
  // Reshape top[0] and prefetch_data according to the batch_size.
  // A datum has no batch dimension, so it is filled in here.
  top_shape[0] = batch_size;
  top[0]->Reshape(top_shape);
  for (int i = 0; i < this->prefetch_.size(); ++i) {
    this->prefetch_[i]->data_.Reshape(top_shape);  // reshape every prefetch batch buffer
  }
  LOG_IF(INFO, Caffe::root_solver())
      << "output data size: " << top[0]->num() << ","
      << top[0]->channels() << "," << top[0]->height() << ","
      << top[0]->width();
  // label
  if (this->output_labels_) {
    // With labels, the label top holds one value per item in the batch.
    vector<int> label_shape(1, batch_size);
    top[1]->Reshape(label_shape);
    for (int i = 0; i < this->prefetch_.size(); ++i) {
      this->prefetch_[i]->label_.Reshape(label_shape);
    }
  }
}

template <typename Dtype>
bool DataLayer<Dtype>::Skip() {
  int size = Caffe::solver_count();
  int rank = Caffe::solver_rank();
  bool keep = (offset_ % size) == rank ||
              // In test mode, only rank 0 runs, so avoid skipping
              this->layer_param_.phase() == TEST;
  return !keep;
}

template<typename Dtype>
void DataLayer<Dtype>::Next() {
  cursor_->Next();
  if (!cursor_->valid()) {  // end of the database: restart from the first record
    LOG_IF(INFO, Caffe::root_solver())
        << "Restarting data prefetching from start.";
    cursor_->SeekToFirst();
  }
  offset_++;
}

// This function is called on prefetch thread
// (it fills one whole Batch per call).
template<typename Dtype>
void DataLayer<Dtype>::load_batch(Batch<Dtype>* batch) {
  CPUTimer batch_timer;
  batch_timer.Start();
  double read_time = 0;
  double trans_time = 0;
  CPUTimer timer;
  CHECK(batch->data_.count());
  CHECK(this->transformed_data_.count());
  const int batch_size = this->layer_param_.data_param().batch_size();

  Datum datum;
  for (int item_id = 0; item_id < batch_size; ++item_id) {
    timer.Start();
    while (Skip()) {
      Next();
    }
    datum.ParseFromString(cursor_->value());  // read one record
    read_time += timer.MicroSeconds();

    if (item_id == 0) {
      // Reshape according to the first datum of each batch
      // on single input batches allows for inputs of varying dimension.
      // Use data_transformer to infer the expected blob shape from datum.
      vector<int> top_shape = this->data_transformer_->InferBlobShape(datum);
      this->transformed_data_.Reshape(top_shape);
      // Reshape batch according to the batch_size.
      top_shape[0] = batch_size;
      batch->data_.Reshape(top_shape);  // reshape the batch
    }

    // Apply data transformations (mirror, scale, crop...)
    timer.Start();
    int offset = batch->data_.offset(item_id);  // offset of this item within the batch
    Dtype* top_data = batch->data_.mutable_cpu_data();  // mutable CPU pointer to the batch data
    // Point transformed_data_ at this item's slot in the batch buffer...
    this->transformed_data_.set_cpu_data(top_data + offset);
    // ...so that Transform() writes the transformed datum straight into it.
    this->data_transformer_->Transform(datum, &(this->transformed_data_));
    // Copy label.
    if (this->output_labels_) {
      Dtype* top_label = batch->label_.mutable_cpu_data();
      top_label[item_id] = datum.label();
    }
    trans_time += timer.MicroSeconds();
    Next();
  }
  timer.Stop();
  batch_timer.Stop();
  DLOG(INFO) << "Prefetch batch: " << batch_timer.MilliSeconds() << " ms.";
  DLOG(INFO) << "     Read time: " << read_time / 1000 << " ms.";
  DLOG(INFO) << "Transform time: " << trans_time / 1000 << " ms.";
}

INSTANTIATE_CLASS(DataLayer);
REGISTER_LAYER_CLASS(Data);

}  // namespace caffe
```

Summary: this layer really does only one thing. After reshaping, it reads data from the database and passes the pointer to that data to top.
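`Skip()` and `Next()` are what let several solvers (e.g. multi-GPU training) read one database without overlapping: every solver walks the same records, but a solver with rank `r` keeps only those whose running `offset_` satisfies `offset_ % solver_count == r`, and in the TEST phase nothing is skipped. The simulation below is a hypothetical sketch over an in-memory record index instead of an LMDB cursor; as in the original, `offset_` is not reset when the cursor wraps around.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical simulation of DataLayer::Skip()/Next() over num_records records.
class ShardedCursor {
 public:
  ShardedCursor(int num_records, int solver_count, int rank, bool test_phase)
      : num_records_(num_records), size_(solver_count), rank_(rank), test_(test_phase) {
    assert(num_records > 0 && solver_count > 0 && rank >= 0 && rank < solver_count);
  }

  // Returns the index of the next record this solver should use,
  // following the same Skip()/read/Next() order as load_batch().
  int NextRecord() {
    while (Skip()) Next();
    const int record = pos_;
    Next();
    return record;
  }

 private:
  bool Skip() const {
    const bool keep =
        (offset_ % static_cast<std::uint64_t>(size_)) == static_cast<std::uint64_t>(rank_) ||
        test_;  // TEST phase: never skip
    return !keep;
  }

  void Next() {
    if (++pos_ == num_records_) pos_ = 0;  // like cursor_->SeekToFirst()
    ++offset_;                             // keeps counting across wrap-around
  }

  int num_records_, size_, rank_;
  bool test_;
  int pos_ = 0;
  std::uint64_t offset_ = 0;
};
```

With 5 records and 2 solvers, rank 0 reads records 0, 2, 4, 1, ... and rank 1 reads 1, 3, 0, 2, ..., so with an odd number of records the split shifts after each pass over the database.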

III. Since we are here, let's also take a look at the ImageDataLayer.

Here is ImageDataLayer.hpp

```cpp
#ifndef CAFFE_IMAGE_DATA_LAYER_HPP_
#define CAFFE_IMAGE_DATA_LAYER_HPP_

#include <string>
#include <utility>
#include <vector>

#include "caffe/blob.hpp"
#include "caffe/data_transformer.hpp"
#include "caffe/internal_thread.hpp"
#include "caffe/layer.hpp"
#include "caffe/layers/base_data_layer.hpp"
#include "caffe/proto/caffe.pb.h"

namespace caffe {

/**
 * @brief Provides data to the Net from image files.
 *
 * TODO(dox): thorough documentation for Forward and proto params.
 */
template <typename Dtype>
class ImageDataLayer : public BasePrefetchingDataLayer<Dtype> {
 public:
  explicit ImageDataLayer(const LayerParameter& param)
      : BasePrefetchingDataLayer<Dtype>(param) {}
  virtual ~ImageDataLayer();
  virtual void DataLayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);

  // The layer type is "ImageData"; this matches the type field in the prototxt.
  virtual inline const char* type() const { return "ImageData"; }
  virtual inline int ExactNumBottomBlobs() const { return 0; }
  virtual inline int ExactNumTopBlobs() const { return 2; }

 protected:
  shared_ptr<Caffe::RNG> prefetch_rng_;
  virtual void ShuffleImages();
  virtual void load_batch(Batch<Dtype>* batch);

  vector<std::pair<std::string, int> > lines_;  // (image path, label) pairs
  int lines_id_;                                 // index of the next line to read
};

}  // namespace caffe

#endif  // CAFFE_IMAGE_DATA_LAYER_HPP_
```

Here is ImageDataLayer.cpp

```cpp
#ifdef USE_OPENCV
#include <opencv2/core/core.hpp>

#include <fstream>  // NOLINT(readability/streams)
#include <iostream>  // NOLINT(readability/streams)
#include <string>
#include <utility>
#include <vector>

#include "caffe/data_transformer.hpp"
#include "caffe/layers/base_data_layer.hpp"
#include "caffe/layers/image_data_layer.hpp"
#include "caffe/util/benchmark.hpp"
#include "caffe/util/io.hpp"
#include "caffe/util/math_functions.hpp"
#include "caffe/util/rng.hpp"

namespace caffe {

template <typename Dtype>
ImageDataLayer<Dtype>::~ImageDataLayer<Dtype>() {
  this->StopInternalThread();
}

template <typename Dtype>
void ImageDataLayer<Dtype>::DataLayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top) {
  // Read the layer parameters from the net prototxt.
  const int new_height = this->layer_param_.image_data_param().new_height();
  const int new_width  = this->layer_param_.image_data_param().new_width();
  const bool is_color  = this->layer_param_.image_data_param().is_color();
  string root_folder = this->layer_param_.image_data_param().root_folder();

  CHECK((new_height == 0 && new_width == 0) ||
      (new_height > 0 && new_width > 0)) << "Current implementation requires "
      "new_height and new_width to be set at the same time.";
  // Read the file with filenames and labels
  const string& source = this->layer_param_.image_data_param().source();
  LOG(INFO) << "Opening file " << source;
  std::ifstream infile(source.c_str());  // open the file list named by source in the prototxt
  string line;
  size_t pos;
  int label;
  while (std::getline(infile, line)) {
    pos = line.find_last_of(' ');  // image path and label are separated by the last space
    label = atoi(line.substr(pos + 1).c_str());
    lines_.push_back(std::make_pair(line.substr(0, pos), label));  // store (path, label)
  }

  CHECK(!lines_.empty()) << "File is empty";

  if (this->layer_param_.image_data_param().shuffle()) {
    // randomly shuffle data
    LOG(INFO) << "Shuffling data";
    const unsigned int prefetch_rng_seed = caffe_rng_rand();
    prefetch_rng_.reset(new Caffe::RNG(prefetch_rng_seed));
    ShuffleImages();
  } else {
    if (this->phase_ == TRAIN && Caffe::solver_rank() > 0 &&
        this->layer_param_.image_data_param().rand_skip() == 0) {
      LOG(WARNING) << "Shuffling or skipping recommended for multi-GPU";
    }
  }
  LOG(INFO) << "A total of " << lines_.size() << " images.";

  lines_id_ = 0;
  // Check if we would need to randomly skip a few data points
  if (this->layer_param_.image_data_param().rand_skip()) {
    unsigned int skip = caffe_rng_rand() %
        this->layer_param_.image_data_param().rand_skip();
    LOG(INFO) << "Skipping first " << skip << " data points.";
    CHECK_GT(lines_.size(), skip) << "Not enough points to skip";
    lines_id_ = skip;
  }
  // Read an image, and use it to initialize the top blob.
  cv::Mat cv_img = ReadImageToCVMat(root_folder + lines_[lines_id_].first,
                                    new_height, new_width, is_color);
  CHECK(cv_img.data) << "Could not load " << lines_[lines_id_].first;
  // Use data_transformer to infer the expected blob shape from a cv_image.
  vector<int> top_shape = this->data_transformer_->InferBlobShape(cv_img);
  this->transformed_data_.Reshape(top_shape);
  // Reshape prefetch_data and top[0] according to the batch_size.
  const int batch_size = this->layer_param_.image_data_param().batch_size();
  CHECK_GT(batch_size, 0) << "Positive batch size required";
  top_shape[0] = batch_size;
  for (int i = 0; i < this->prefetch_.size(); ++i) {
    this->prefetch_[i]->data_.Reshape(top_shape);  // reshape every prefetch batch buffer
  }
  top[0]->Reshape(top_shape);

  LOG(INFO) << "output data size: " << top[0]->num() << ","
      << top[0]->channels() << "," << top[0]->height() << ","
      << top[0]->width();
  // label: one value per item in the batch
  vector<int> label_shape(1, batch_size);
  top[1]->Reshape(label_shape);
  for (int i = 0; i < this->prefetch_.size(); ++i) {
    this->prefetch_[i]->label_.Reshape(label_shape);
  }
}

template <typename Dtype>
void ImageDataLayer<Dtype>::ShuffleImages() {
  caffe::rng_t* prefetch_rng =
      static_cast<caffe::rng_t*>(prefetch_rng_->generator());
  shuffle(lines_.begin(), lines_.end(), prefetch_rng);
}

// This function is called on prefetch thread
template <typename Dtype>
void ImageDataLayer<Dtype>::load_batch(Batch<Dtype>* batch) {
  CPUTimer batch_timer;
  batch_timer.Start();
  double read_time = 0;
  double trans_time = 0;
  CPUTimer timer;
  CHECK(batch->data_.count());
  CHECK(this->transformed_data_.count());
  ImageDataParameter image_data_param = this->layer_param_.image_data_param();
  const int batch_size = image_data_param.batch_size();
  const int new_height = image_data_param.new_height();
  const int new_width = image_data_param.new_width();
  const bool is_color = image_data_param.is_color();
  string root_folder = image_data_param.root_folder();

  // Reshape according to the first image of each batch
  // on single input batches allows for inputs of varying dimension.
  cv::Mat cv_img = ReadImageToCVMat(root_folder + lines_[lines_id_].first,
      new_height, new_width, is_color);
  CHECK(cv_img.data) << "Could not load " << lines_[lines_id_].first;
  // Use data_transformer to infer the expected blob shape from a cv_img.
  vector<int> top_shape = this->data_transformer_->InferBlobShape(cv_img);
  this->transformed_data_.Reshape(top_shape);
  // Reshape batch according to the batch_size.
  top_shape[0] = batch_size;
  batch->data_.Reshape(top_shape);

  Dtype* prefetch_data = batch->data_.mutable_cpu_data();
  Dtype* prefetch_label = batch->label_.mutable_cpu_data();

  // datum scales
  const int lines_size = lines_.size();
  for (int item_id = 0; item_id < batch_size; ++item_id) {
    // get a blob
    timer.Start();
    CHECK_GT(lines_size, lines_id_);
    cv::Mat cv_img = ReadImageToCVMat(root_folder + lines_[lines_id_].first,
        new_height, new_width, is_color);
    CHECK(cv_img.data) << "Could not load " << lines_[lines_id_].first;
    read_time += timer.MicroSeconds();
    timer.Start();
    // Apply transformations (mirror, crop...) to the image
    int offset = batch->data_.offset(item_id);
    this->transformed_data_.set_cpu_data(prefetch_data + offset);
    this->data_transformer_->Transform(cv_img, &(this->transformed_data_));
    trans_time += timer.MicroSeconds();

    prefetch_label[item_id] = lines_[lines_id_].second;
    // go to the next iter
    lines_id_++;
    if (lines_id_ >= lines_size) {
      // We have reached the end. Restart from the first.
      DLOG(INFO) << "Restarting data prefetching from start.";
      lines_id_ = 0;
      if (this->layer_param_.image_data_param().shuffle()) {
        ShuffleImages();
      }
    }
  }
  batch_timer.Stop();
  DLOG(INFO) << "Prefetch batch: " << batch_timer.MilliSeconds() << " ms.";
  DLOG(INFO) << "     Read time: " << read_time / 1000 << " ms.";
  DLOG(INFO) << "Transform time: " << trans_time / 1000 << " ms.";
}

INSTANTIATE_CLASS(ImageDataLayer);
REGISTER_LAYER_CLASS(ImageData);

}  // namespace caffe
#endif  // USE_OPENCV
```

Summary: the structure of this .cpp file is similar to DataLayer.cpp. It reads images from a file list and passes them to the top blobs.
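The part of `ImageDataLayer` that differs most from `DataLayer` is how `lines_id_` moves through `lines_`: setup optionally shuffles the list and starts at a random index below `rand_skip`, each item advances the index by one, and at the end of the list the index wraps to 0 and the list is reshuffled if `shuffle` is set. A minimal sketch of just that iteration logic (the class `ImageListIterator` is hypothetical; `std::shuffle` with `std::mt19937` is swapped in for Caffe's own `shuffle()` and `Caffe::RNG`):

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <string>
#include <utility>
#include <vector>

class ImageListIterator {
 public:
  ImageListIterator(std::vector<std::pair<std::string, int>> lines, bool shuffle,
                    unsigned rand_skip, unsigned seed)
      : lines_(std::move(lines)), shuffle_(shuffle), rng_(seed) {
    assert(!lines_.empty());  // "File is empty"
    if (shuffle_) Shuffle();
    if (rand_skip > 0) {
      const std::size_t skip = rng_() % rand_skip;
      assert(skip < lines_.size());  // "Not enough points to skip"
      id_ = skip;
    }
  }

  // Returns the current (path, label) by value, then advances; at the end of
  // the list it wraps to 0 and reshuffles if shuffling is enabled.
  std::pair<std::string, int> Next() {
    std::pair<std::string, int> item = lines_[id_];
    if (++id_ >= lines_.size()) {
      id_ = 0;
      if (shuffle_) Shuffle();
    }
    return item;
  }

  std::size_t position() const { return id_; }

 private:
  void Shuffle() { std::shuffle(lines_.begin(), lines_.end(), rng_); }

  std::vector<std::pair<std::string, int>> lines_;
  bool shuffle_;
  std::mt19937 rng_;
  std::size_t id_ = 0;
};
```

Returning by value matters here: a reshuffle at the end of an epoch would otherwise change the element a returned reference points to.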