Scraping the dota2 Tieba with scrapy and analyzing the data

阿新 • Published: 2019-02-20

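I have always been curious which words people on Tieba have used most over the years, so let's scrape the titles of the forum's posts, extract the words, and see what the members are talking about.

First, we use scrapy to scrape the titles of all posts in the forum: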

scrapy startproject btspider

cd btspider

scrapy genspider -t basic btspiderx tieba.baidu.com
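The spider in the next step requests the forum's list pages one by one: the first page has no `pn` parameter, and every later page x is requested with `pn = x * 50`. As a rough illustration of that URL scheme, here is a minimal sketch using only the Python 3 standard library. The names `page_url`, `page_urls`, and `THREADS_PER_PAGE` are hypothetical helpers introduced for this example and are not part of the project.

```python
from urllib.parse import urlencode

BASE_URL = "https://tieba.baidu.com/f"
THREADS_PER_PAGE = 50  # the spider advances pn by 50 for each page


def page_url(page, forum="dota2"):
    """Return the list-page URL for a zero-based page index."""
    params = [("kw", forum), ("ie", "utf-8")]
    if page > 0:
        # Page 0 carries no pn parameter; later pages use page * 50.
        params.append(("pn", page * THREADS_PER_PAGE))
    return BASE_URL + "?" + urlencode(params)


def page_urls(pages, forum="dota2"):
    """Yield list-page URLs lazily instead of building one large list."""
    for page in range(pages):
        yield page_url(page, forum)
```

Iterating `page_urls(91320)` would produce the same URLs the spider requests, but one at a time rather than as a list held in memory.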

Edit btspiderx.py:

# -*- coding: utf-8 -*-
import scrapy
from btspider.items import BtspiderItem


class BTSpider(scrapy.Spider):
    name = "btspider"
    allowed_domains = ["baidu.com"]

    # Build one URL per list page; page x is requested with pn = x * 50.
    start_urls = []
    for x in range(91320):
        if x == 0:
            url = "https://tieba.baidu.com/f?kw=dota2&ie=utf-8"
        else:
            url = "https://tieba.baidu.com/f?kw=dota2&ie=utf-8&pn=" + str(x * 50)
        start_urls.append(url)

    def parse(self, response):
        # Each matching div is one thread row on the list page.
        for sel in response.xpath('//div[@class="col2_right j_threadlist_li_right "]'):
            item = BtspiderItem()
            item['title'] = sel.xpath('div/div/a/text()').extract()
            item['link'] = sel.xpath('div/div/a/@href').extract()
            item['time'] = sel.xpath(
                'div/div/span[@class="threadlist_reply_date pull_right j_reply_data"]/text()').extract()
            yield item

Edit items.py:

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class BtspiderItem(scrapy.Item):
    title = scrapy.Field()
    link = scrapy.Field()
    time = scrapy.Field()

Edit pipelines.py:

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

import codecs
import json


class BtspiderPipeline(object):
    def __init__(self):
        self.file = codecs.open('info', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        # line = json.dumps(dict(item)) + "\n"
        titlex = dict(item)["title"]
        if len(titlex) == 0:
            # No title was extracted for this row, so there is nothing to write.
            return item
        title = titlex[0]
        # linkx = dict(item)["link"]
        # if len(linkx) != 0:
        #     link = 'http://tieba.baidu.com' + linkx[0]
        # timex = dict(item)["time"]
        # if len(timex) != 0:
        #     time = timex[0].strip()
        line = title + '\n'  # + link + '\n' + time + '\n'
        self.file.write(line)
        return item

    def close_spider(self, spider):
        # Scrapy calls close_spider on each pipeline when the crawl ends.
        self.file.close()

Edit settings.py:

BOT_NAME = 'btspider'

SPIDER_MODULES = ['btspider.spiders']
NEWSPIDER_MODULE = 'btspider.spiders'

ROBOTSTXT_OBEY = True

ITEM_PIPELINES = {
    'btspider.pipelines.BtspiderPipeline': 300,
}

Run the spider:

scrapy crawl btspider

All of the titles are saved to a file named info.

Once the crawl has finished, we analyze the contents of the info file.
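Before generating a word cloud, a plain frequency count can serve as a quick sanity check on the scraped titles. The following is a minimal sketch, not part of the original workflow: `top_terms` is a hypothetical helper, the sample titles are invented for illustration, and the default tokenizer simply splits on whitespace.

```python
from collections import Counter


def top_terms(lines, tokenize=str.split, n=10):
    """Count tokens across all lines and return the n most common."""
    counts = Counter()
    for line in lines:
        for token in tokenize(line):
            token = token.strip()
            if token:
                counts[token] += 1
    return counts.most_common(n)


# Invented sample titles, used only to show the call.
sample = ["ti8 ticket ti8", "new patch notes", "ti8 schedule"]
print(top_terms(sample, n=3))
```

For Chinese titles, whitespace splitting is not enough. Passing a segmenter such as `jieba.lcut` as the `tokenize` argument is one option, and the lines of the info file can be passed in place of `sample`.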

There is an example on GitHub that works with a few small changes:

git clone https://github.com/FantasRu/WordCloud.git

Modify main.py as follows:

# coding: utf-8
import io
from os import path
import numpy as np
# import matplotlib.pyplot as plt
# matplotlib.use('qt4agg')
from wordcloud import WordCloud, STOPWORDS
import jieba


class WordCloud_CN:
    '''
    use package wordcloud and jieba
    generating wordcloud for chinese character
    '''

    def __init__(self, stopwords_file, text_file):
        self.stopwords_file = stopwords_file
        self.text_file = text_file

    @property
    def get_stopwords(self):
        # One stop word per line, read as UTF-8; blank lines are ignored.
        self.stopwords = {}
        with io.open(self.stopwords_file, 'r', encoding='utf-8') as f:
            for line in f:
                word = line.rstrip()
                if word:
                    self.stopwords[word] = 1
        return self.stopwords

    @property
    def seg_text(self):
        with io.open(self.text_file, 'r', encoding='utf-8') as f:
            text = f.readlines()
        text = r' '.join(text)
        seg_generator = jieba.cut(text)
        # Read the stop-word file once, not once per token.
        stopwords = self.get_stopwords
        self.seg_list = [i for i in seg_generator if i not in stopwords]
        self.seg_list = [i for i in self.seg_list if i != u' ']
        self.seg_list = r' '.join(self.seg_list)
        return self.seg_list

    def show(self):
        # wordcloud = WordCloud(max_font_size=40, relative_scaling=.5)
        wordcloud = WordCloud(font_path=u'./static/simheittf/simhei.ttf',
                              background_color="black", margin=5, width=1800, height=800)
        wordcloud = wordcloud.generate(self.seg_text)
        # plt.figure()
        # plt.imshow(wordcloud)
        # plt.axis("off")
        # plt.show()
        wordcloud.to_file("./demo/" + self.text_file.split('/')[-1] + '.jpg')


if __name__ == '__main__':
    stopwords_file = u'./static/stopwords.txt'
    text_file = u'./demo/info'
    generater = WordCloud_CN(stopwords_file, text_file)
    generater.show()

Then run it:

python main.py

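Because the dataset is fairly large, the analysis takes a long time. You can run it in the background on a cheap single-core cloud host and simply wait for the result.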
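On a file this large, per-token work adds up, so it may help to load the stop words once into a set and filter the segmented tokens against it. The sketch below shows that idea with the standard library only. `load_stopwords` and `remove_stopwords` are hypothetical helper names, not functions from the WordCloud repository.

```python
def load_stopwords(lines):
    """Build a set of stop words from an iterable of lines, skipping blanks."""
    return {line.strip() for line in lines if line.strip()}


def remove_stopwords(tokens, stopwords):
    """Keep tokens that are non-blank and not in the stop-word set."""
    return [t for t in tokens if t.strip() and t not in stopwords]
```

The stop-word file can be opened once and passed to `load_stopwords`, and the resulting set reused for every token that the segmenter produces.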

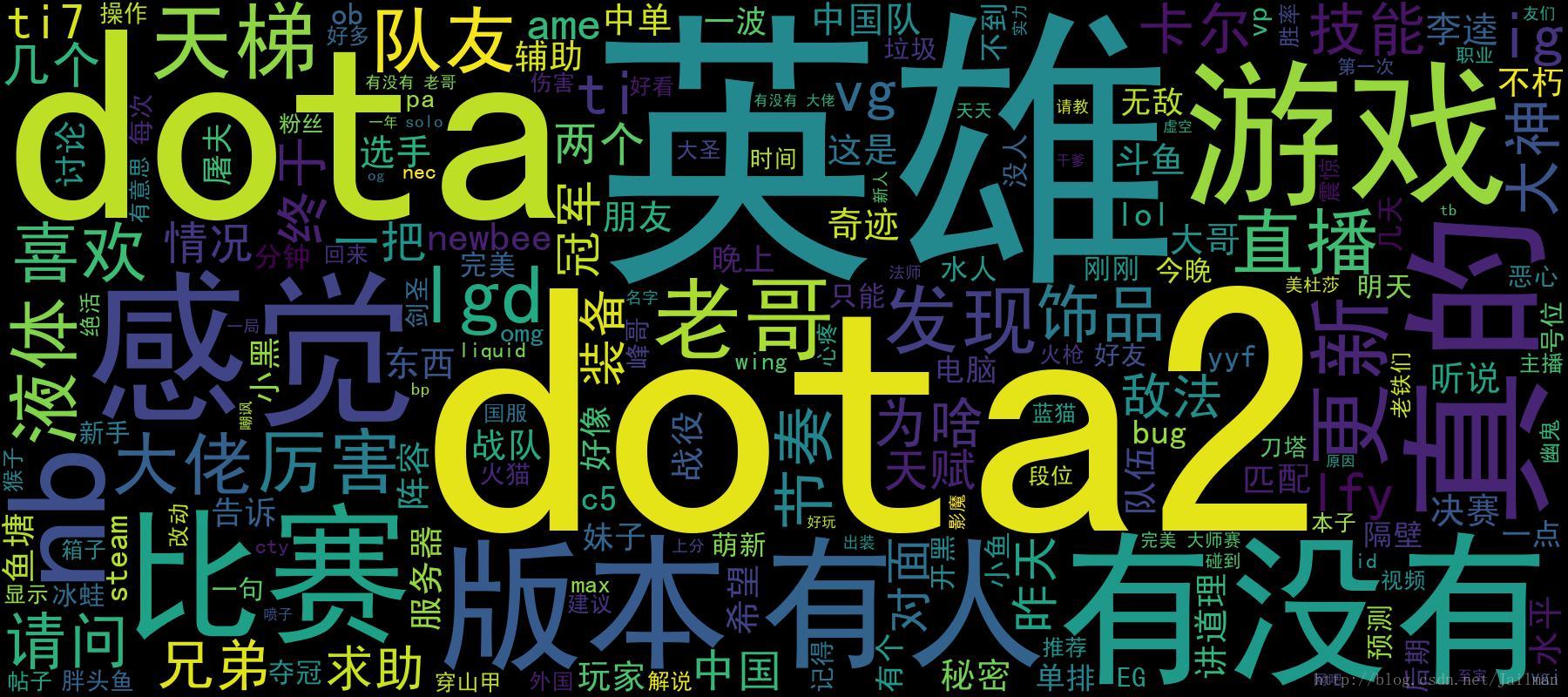

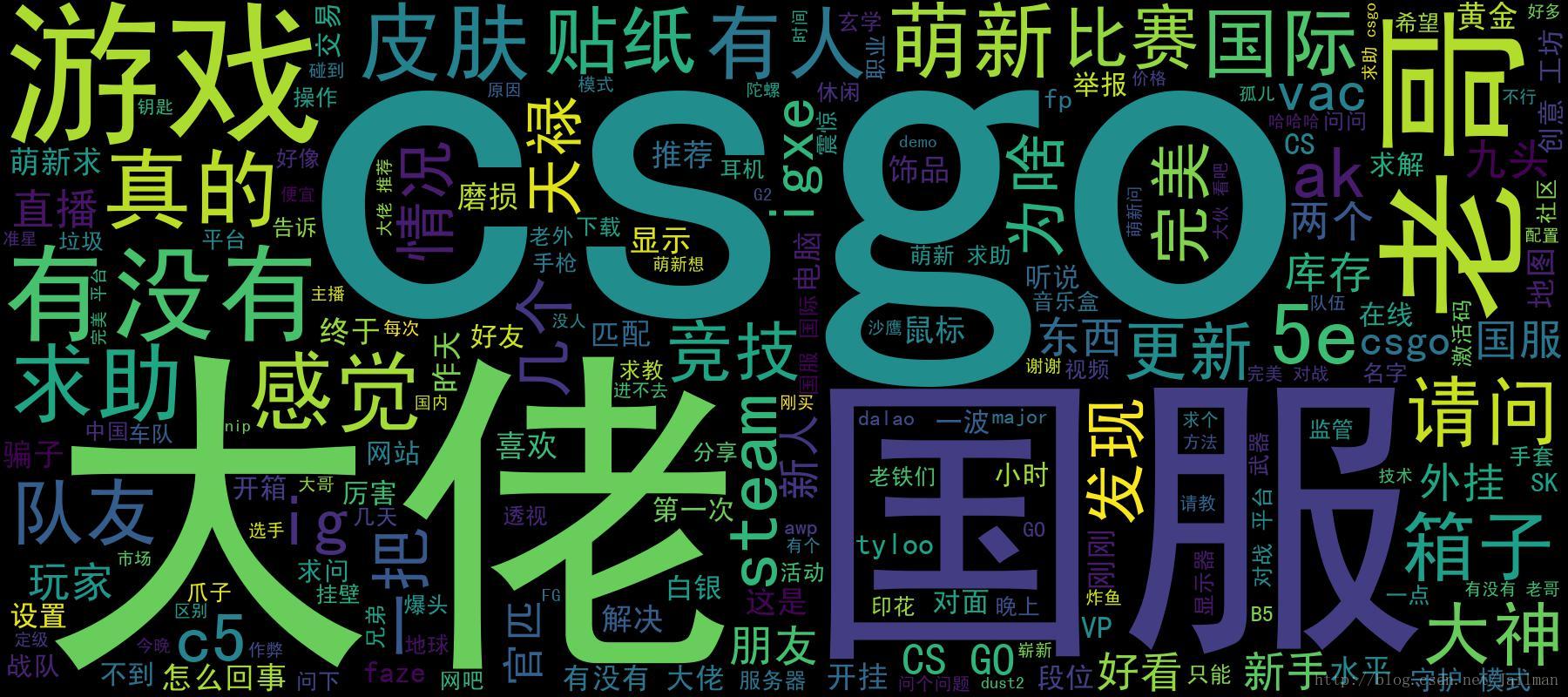

下邊是我分析兩個熱門遊戲貼吧的詞雲圖片